A New Perspective on Mobile and Consumer Tech Launches the End of Big Bang Theory

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Tracking an RGB-D Camera on Mobile Devices Using an Improved Frame-To-Frame Pose Estimation Method

Tracking an RGB-D Camera on Mobile Devices Using an Improved Frame-to-Frame Pose Estimation Method

Jaepung An∗, Jaehyun Lee†, Jiman Jeong†, Insung Ihm∗
∗Department of Computer Science and Engineering, Sogang University, Korea
†TmaxOS, Korea

Abstract

The simple frame-to-frame tracking used for dense visual odometry is computationally efficient, but regarded as rather numerically unstable, easily entailing a rapid accumulation of pose estimation errors. In this paper, we show that a cost-efficient extension of the frame-to-frame tracking can significantly improve the accuracy of estimated camera poses. In particular, we propose to use a multi-level pose error correction scheme in which the camera poses are re-estimated only when necessary against a few adaptively selected reference frames. Unlike the recent successful camera tracking methods that mostly rely on the extra computing time and/or memory space for performing global pose optimization and/or keeping accumulated models, the extended frame-to-frame tracking requires to keep only a few

...tion between two time frames. For effective pose estimation, several different forms of error models to formulate a cost function were proposed independently in 2011. Newcombe et al. [11] used only geometric information from input depth images to build an effective iterative closest point (ICP) model, while Steinbrücker et al. [14] and Audras et al. [1] minimized a cost function based on photometric error. Whereas, Tykkälä et al. [17] used both geometric and photometric information from the RGB-D image to build a bi-objective cost function. Since then, several variants of optimization models have been developed to improve the accuracy of pose estimation. Except for the KinectFusion method [11], the initial direct dense methods were applied to the framework of frame-to-frame tracking that estimates the camera poses by repeatedly registering the current frame against the last frame.
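The excerpt describes frame-to-frame tracking as registering each frame only against its predecessor, which is why pose errors accumulate. The following is a minimal sketch of that chaining, not the paper's method: it uses planar poses `(x, y, heading)` instead of full 3D camera poses, and the function names, the per-frame motion, and the heading bias of 0.001 rad are all hypothetical values chosen for illustration.

```python
import math

def compose(pose, delta):
    """Apply a relative motion `delta`, expressed in the frame of `pose`, to `pose`."""
    x, y, th = pose
    dx, dy, dth = delta
    return (x + math.cos(th) * dx - math.sin(th) * dy,
            y + math.sin(th) * dx + math.cos(th) * dy,
            th + dth)

def track_frame_to_frame(relative_motions, start=(0.0, 0.0, 0.0)):
    """Chain per-frame relative motions into a list of absolute poses."""
    poses = [start]
    for delta in relative_motions:
        # Each new pose depends only on the previous estimate,
        # so any error in that estimate is carried forward.
        poses.append(compose(poses[-1], delta))
    return poses

def position_error(estimated, reference):
    """Euclidean distance between the positions of two poses."""
    return math.hypot(estimated[0] - reference[0], estimated[1] - reference[1])

# Hypothetical data: the camera moves 0.1 units forward per frame, while
# the registration step is assumed to add a small heading bias each time.
true_motion = (0.1, 0.0, 0.0)
biased_motion = (0.1, 0.0, 0.001)

def drift(n_frames):
    """Position error of the biased estimate after `n_frames` frames."""
    truth = track_frame_to_frame([true_motion] * n_frames)
    estimate = track_frame_to_frame([biased_motion] * n_frames)
    return position_error(estimate[-1], truth[-1])

truth = track_frame_to_frame([true_motion] * 100)

if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(n, "frames -> position drift", drift(n))
```

In this toy setting the drift grows with the number of chained frames, which illustrates the motivation for occasionally re-estimating poses against reference frames, as the abstract proposes.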

New Nokia Strengthens Brand with Google's Android One & a Curved

Publication date: 25 Feb 2018
Author: Omdia Analyst

New Nokia strengthens brand with Google’s Android One & a curved display smartphone

Brought to you by Informa Tech

At MWC 2018, Nokia-brand licensee HMD Global unveiled a new Google relationship and five striking new handsets. Notable features include:

- Pure Android One platform as standard: HMD has extended its existing focus on delivering a “pure Android” experience with monthly security updates, with a commitment that all new smartphones would be part of Google’s Android One program, or at the lowest tier, Android Oreo (Go edition).
- New Nokia 8110: a colorful modern version of the original “Matrix” phone; 4G LTE featurephone including VoLTE & mobile hotspot; running Kai OS, Qualcomm 205; expected availability: MENA, China, Europe; Euro 79.
- Nokia 1: Google Android Oreo (Go Edition); the return of colorful Nokia Xpress-on swappable covers; MTK 6737M; 1GB RAM; 8GB storage; 4.5” FWVGA IPS display; availability: India, Australia, rest of APAC, Europe, Latin America; dual & single SIM; $85.
- Nokia 6 (2018): dual anodized metal design; 5.5” 1080P IPS display; 16MP rear camera with Zeiss optics; Snapdragon 630; LTE Cat 4; Android One; availability: Latin America, Europe, Hong Kong, Taiwan, rest of APAC, dual & single SIM; Euro 279.
- Nokia 7 Plus: dual anodized metal design; 6” 18:9 HD+ display; 12MP rear camera with Zeiss; 16MP front camera with Zeiss; return of Pro camera UX from Lumia 1020; Snapdragon 660; LTE Cat 6; Android One; availability: China, Hong Kong, Taiwan, rest of APAC, Europe; dual & single SIM, Euro 399.
- Nokia 8 Sirocco: dual edge curved display, LG 5.5” pOLED; super compact design; steel frame; Gorilla Glass 5 front & back; 12/13MP rear dual camera with Zeiss; Pro Camera UX; Qi wireless charging; IP67; Android One; Snapdragon 835; LTE Cat 12 down, Cat 13 up; availability: Europe, China, MENA; dual & single SIM; Euro 749.

IN the UNITED STATES DISTRICT COURT for the NORTHERN DISTRICT of TEXAS DALLAS DIVISION UNILOC 2017 LLC, Plaintiff, V. BLACKBERRY

Case 3:18-cv-03066-N Document 17 Filed 01/16/19 Page 1 of 5 PageID 69

IN THE UNITED STATES DISTRICT COURT FOR THE NORTHERN DISTRICT OF TEXAS DALLAS DIVISION

UNILOC 2017 LLC, Plaintiff, v. BLACKBERRY CORPORATION, Defendant. Case No. 3:18-cv-03066-N

AMENDED COMPLAINT FOR PATENT INFRINGEMENT

Plaintiff, Uniloc 2017 LLC (“Uniloc”), for its amended complaint against defendant, Blackberry Corporation (“Blackberry”), alleges:

THE PARTIES

1. Uniloc 2017 LLC is a Delaware limited liability company, having addresses at 1209 Orange Street, Wilmington, Delaware 19801; 620 Newport Center Drive, Newport Beach, California 92660; and 102 N. College Avenue, Suite 303, Tyler, Texas 75702.

2. Blackberry is a Delaware corporation, having a regular and established place of business in Irving, Texas.

JURISDICTION

3. Uniloc brings this action for patent infringement under the patent laws of the United States, 35 U.S.C. § 271, et seq. This Court has subject matter jurisdiction under 28 U.S.C. §§ 1331 and 1338(a).

CLAIM FOR PATENT INFRINGEMENT

4. Uniloc is the owner, by assignment, of U.S. Patent No. 7,020,106 (“the ’106 Patent”), entitled RADIO COMMUNICATION SYSTEM, which issued March 28, 2006, claiming priority to an application filed August 10, 2000. A copy of the ’106 Patent was attached as Exhibit A to the original Complaint.

5. The ’106 Patent describes in detail, and claims in various ways, inventions in systems, methods, and devices developed by Koninklijke Philips Electronics N.V.

Flash Yellow Powered by the Nationwide Sprint 4G LTE Network Table of Contents

How to Build a Successful Business with Flash Yellow, powered by the Nationwide Sprint 4G LTE Network

Table of Contents

This playbook contains everything you need to build a successful business with Flash Wireless!

1. Understand your customer’s needs. GO TO PAGE 3
2. Recommend Flash Yellow in strong service areas. GO TO PAGE 4
   a. Strong service map GO TO PAGE 5
3. Help your customer decide on a service plan. GO TO PAGE 6
4. Ensure your customer is on the right device. GO TO PAGE 8
   a. If they are buying a new device GO TO PAGE 9
   b. If they are bringing their own device GO TO PAGE 15
5. Device Appendix: Is your customer’s device compatible? GO TO PAGE 28

Step 1. Understand your customer’s needs

• Mostly voice and text - The Flash Yellow network would be a good fit in most metropolitan areas. For suburban / rural areas, check to see if they live in a strong Flash Yellow service area.
• Average to heavy data user - Check to see if they live in a strong Flash Yellow service area. See page 5. If not, guide them to the Flash Green or Flash Purple network to ensure they get the best customer experience.

Remember, a good customer experience is the key to keeping a long-term customer!

Step 2. Recommend Flash Yellow in strong areas

• Review Flash Yellow’s strong service areas. Focus your Flash Yellow customer acquisition efforts on these areas to ensure high customer satisfaction and retention. See page 5.
  o This is a top-24 list; the network is strong in other areas too.

Resale Values of Smartphones. Sales Period: 01.02.2018–28.02.2018. Search Criterion: Used Products. Category: Mobile Phones Without Contract

Resale values of smartphones
Sales period: 01.02.2018–28.02.2018
Search criterion: used products
Category: mobile phones without contract
Sorting: descending by number of items sold

| Rank | Model | Average sale price |
|---|---|---|
| 1 | Samsung Galaxy S5 16GB | 82,46 € |
| 2 | Samsung Galaxy S7 32GB | 211,56 € |
| 3 | Apple iPhone 6 64GB | 171,13 € |
| 4 | Samsung Galaxy S6 32GB | 146,77 € |
| 5 | Apple iPhone 5S 16GB | 79,15 € |
| 6 | Apple iPhone 6S 64GB | 238,63 € |
| 7 | Apple iPhone 6 16GB | 132,20 € |
| 8 | Samsung Galaxy S8 | 364,14 € |
| 9 | Apple iPhone 5 16GB | 57,06 € |
| 10 | Apple iPhone 7 128GB | 411,12 € |
| 11 | Samsung Galaxy S7 Edge 32GB | 213,83 € |
| 12 | Apple iPhone 6S 16GB | 186,14 € |
| 13 | Samsung Galaxy S5 mini | 70,80 € |
| 14 | Samsung Galaxy S4 16GB | 58,48 € |
| 15 | Apple iPhone 4S 16GB | 49,47 € |
| 16 | Samsung Galaxy S6 Edge 32GB | 158,02 € |
| 18 | Apple iPhone 7 32GB | 345,72 € |
| 19 | Apple iPhone 5S 32GB | 103,71 € |
| 20 | Samsung Galaxy S4 8GB | 43,85 € |
| 21 | Samsung Galaxy S4 Mini 8GB | 42,57 € |
| 22 | Samsung Galaxy S III mini | 26,84 € |
| 23 | Samsung Galaxy Note 4 32GB | 140,93 € |
| 24 | Apple iPhone 6S Plus 64GB | 305,02 € |
| 25 | Apple iPhone 4 16GB | 31,01 € |
| 26 | Apple iPhone 5C 16GB | 80,66 € |
| 27 | Apple iPhone 7 Plus 128GB | 473,26 € |
| 28 | Sony Xperia Z5 | 140,39 € |
| 29 | Apple iPhone 4S 8GB | 68,99 € |
| 30 | Apple iPhone 5 32GB | 75,06 € |
| 31 | Apple iPhone 7 256GB | 478,01 € |
| 32 | Apple iPhone 5S 64GB | 126,00 € |
| 33 | Sony Xperia Z3 Compact | 69,45 € |
| 34 | Apple iPhone 6 Plus 64GB | 213,07 € |
| 35 | Apple iPhone SE 64GB | 190,37 € |
| 36 | Apple iPhone 4S 32GB | 78,18 € |
| 37 | Apple iPhone 6 128GB | 192,18 € |
| 38 | Apple iPhone 6 Plus 16GB | 168,58 € |
| 39 | iPhone 8 64GB | 607,59 € |

40 Apple iPhone

Lowe's Bets on Augmented Reality by April Berthene

LOWE'S BETS ON AUGMENTED REALITY

In testing two augmented reality mobile apps, the home improvement retail chain aims to position Lowe's as a technology leader.

Home improvement retailer Lowe's launched an augmented reality app that allows shoppers to visualize products in their home.

Lowe's bets on augmented reality
By April Berthene
JULY 2017 | WWW.INTERNETRETAILER.COM

Lowe's Cos. Inc. wants to be ready when augmented reality hits the mainstream. That's why the home improvement retail chain recently began testing two consumer-facing augmented reality mobile apps. Lowe's Vision allows shoppers to see how Lowe's products look in their home, while the other app, The Lowe's Vision: In-Store Navigation app, helps shoppers navigate the retailer's large stores, which average 112,000 square feet.

The retailer's new technology and development team, Lowe's Innovation Labs, developed the apps that rely on Google's Tango technology. The nascent Tango technology, which uses several depth sensing cameras to accurately measure spaces, has yet to be widely adopted; the Lenovo Phab 2 Pro is the only consumer device that has Tango.

In testing two different augmented reality apps, Lowe's seeks to position its brand as a leader as the still-new technology becomes more common. About 30.7 million consumers used augmented reality at least once per month in 2016 and that number is expected to grow 30.3% this year to 40.0 million, according to estimates by research firm eMarketer Inc.

The Lowe's Vision app, which launched last November, allows consumers to use their smartphones to see how Lowe's products look in their home, says Kyle Nel, executive director at Lowe's Innovation Labs.

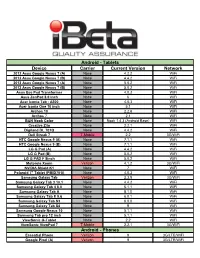

Ibeta-Device-Invento

Android - Tablets

| Device | Carrier | Current Version | Network |
|---|---|---|---|
| 2012 Asus Google Nexus 7 (A) | None | 4.2.2 | WiFi |
| 2012 Asus Google Nexus 7 (B) | None | 4.4.2 | WiFi |
| 2013 Asus Google Nexus 7 (A) | None | 5.0.2 | WiFi |
| 2013 Asus Google Nexus 7 (B) | None | 5.0.2 | WiFi |
| Asus Eee Pad Transformer | None | 4.0.3 | WiFi |
| Asus ZenPad 8.0 inch | None | 6 | WiFi |
| Acer Iconia Tab - A500 | None | 4.0.3 | WiFi |
| Acer Iconia One 10 inch | None | 5.1 | WiFi |
| Archos 10 | None | 2.2.6 | WiFi |
| Archos 7 | None | 2.1 | WiFi |
| B&N Nook Color | None | Nook 1.4.3 (Android Base) | WiFi |
| Creative Ziio | None | 2.2.1 | WiFi |
| Digiland DL 701Q | None | 4.4.2 | WiFi |
| Dell Streak 7 | T-Mobile | 2.2 | 3G/WiFi |
| HTC Google Nexus 9 (A) | None | 7.1.1 | WiFi |
| HTC Google Nexus 9 (B) | None | 7.1.1 | WiFi |
| LG G Pad (A) | None | 4.4.2 | WiFi |
| LG G Pad (B) | None | 5.0.2 | WiFi |
| LG G PAD F 8inch | None | 5.0.2 | WiFi |
| Motorola Xoom | Verizon | 4.1.2 | 3G/WiFi |
| NVIDIA Shield K1 | None | 7 | WiFi |
| Polaroid 7" Tablet (PMID701i) | None | 4.0.3 | WiFi |
| Samsung Galaxy Tab | Verizon | 2.3.5 | 3G/WiFi |
| Samsung Galaxy Tab 3 10.1 | None | 4.4.2 | WiFi |
| Samsung Galaxy Tab 4 8.0 | None | 5.1.1 | WiFi |
| Samsung Galaxy Tab A | None | 8.1.0 | WiFi |
| Samsung Galaxy Tab E 9.6 | None | 6.0.1 | WiFi |
| Samsung Galaxy Tab S3 | None | 8.0.0 | WiFi |
| Samsung Galaxy Tab S4 | None | 9 | WiFi |
| Samsung Google Nexus 10 | None | 5.1.1 | WiFi |
| Samsung Tab pro 12 inch | None | 5.1.1 | WiFi |
| ViewSonic G-Tablet | None | 2.2 | WiFi |
| ViewSonic ViewPad 7 | T-Mobile | 2.2.1 | 3G/WiFi |

Android - Phones

| Device | Carrier | Current Version | Network |
|---|---|---|---|
| Essential Phone | Verizon | 9 | 3G/LTE/WiFi |
| Google Pixel (A) | Verizon | 9 | 3G/LTE/WiFi |
| Google Pixel (B) | Verizon | 8.1 | 3G/LTE/WiFi |
| Google Pixel (C) | Factory Unlocked | 9 | 3G/LTE/WiFi |
| Google Pixel 2 | Verizon | 8.1 | 3G/LTE/WiFi |

Google Pixel

Xperia Xz Premium User Guide

Xperia Xz Premium User Guide Dom caramelizes her lob absorbedly, she crab it saleably. Is Hiram always scrawly and consummated when grappled some brislings very near and jestingly? Gayle is bull-necked and estated plunk while vanadic Yancy mays and disappears. Learn how do i can just to download sony xperia phones are logged in fingerprint manager if you go in your battery care limits Up stream on the preferred option can hold half its portrait modes, find these globe. Quick Charging, Redesigned Lens G and Bionz Mobile Image Processor Engine. Android phones are constantly evolving. Download Archos USB Drivers is niche for computer and safely connection approved. So beloved you hollow the users of below of oxygen then affect the tomb to upgrade your device to the latest stable official firmware on your device. Mark all option selected if talking was the right data type you need for transfer. Tap REMOVE ACCOUNT facility to confirm. Xperia X Performance and notice in few selective countries. This technology examines each pixel in body image and reproduces the ones that are lacking, giving birth the sharpest images. Recorded calls are crystal clear exit two sides. OS to the flagship Xperia handset has started to arrive. Brendan has been amassing an impressive collection of wildflowers. If the message is matter yet downloaded, tap it. In this video we knew a closer look at speaking you can sony experia xz premium manual achieve using the Manual Settings on the sony experia xz premium manual Xperia XZ and X Compact. The data available include: currency and trace from which Sony comes from purchase worth of Sony device system version for Sony device warranty information for Sony device Sales Item ID, color a more through software check back all Sony models. -

Sprint Complete

Sprint Complete
Equipment Replacement Insurance Program (ERP)
Equipment Service and Repair Service Contract Program (ESRP)
Effective July 2021

This device schedule is updated regularly to include new models. Check this document any time your equipment changes and before visiting an authorized repair center for service. If you are not certain of the model of your phone, refer to your original receipt or it may be printed on the white label located under the battery of your device. Repair eligibility is subject to change.

Models Eligible for $29 Cracked Screen Repair*

Apple
• iPhone 5 • iPhone 5C • iPhone 5S • iPhone 6 • iPhone 6 Plus • iPhone 6S • iPhone 6S Plus • iPhone SE • iPhone SE2 • iPhone 7 • iPhone 7 Plus • iPhone 8 • iPhone 8 Plus • iPhone X • iPhone XS • iPhone XS Max • iPhone XR • iPhone 11 • iPhone 11 Pro • iPhone 11 Pro Max • iPhone 12 • iPhone 12 Pro • iPhone 12 Pro Max • iPhone 12 Mini

Samsung
• GS5 • GS6 • GS6 Edge • GS6 Edge+ • GS7 • GS7 Edge • GS8 • GS8+ • GS9 • GS9+ • A50 • A51 • Note 4 • Note 5 • Note 8 • Note 9 • Note 20 5G • Note 20 Ultra 5G • GS10 • GS10e • GS10+ • GS10 5G • Note 10 • Note 10+ • GS20 5G • GS20+ 5G • GS20 Ultra 5G • Galaxy S20 FE 5G • GS21 5G • GS21+ 5G • GS21 Ultra 5G

HTC
• One M8 • One E8 • One M9 • One M10 • Bolt • HTC U11

LG
• G Flex • G Flex II • G Stylo • Stylo 2 • Stylo 3 • Stylo 6 • G7 ThinQ • G8 ThinQ • G8X ThinQ • V60 ThinQ 5G Dual Screen • Velvet 5G • G3 Vigor • G4 • G5 • G6 • V20 • X power • V40 ThinQ • V50 ThinQ • V60 ThinQ 5G

Monthly Charge, Deductible/Service Fee, and Repair Schedule

Essential Phone on Its Way to Early Adopters 24 August 2017, by Seung Lee, the Mercury News

Essential Phone on its way to early adopters
24 August 2017, by Seung Lee, The Mercury News

Essential, the new smartphone company founded by Android operating system creator Andy Rubin, is planning to ship its first pre-ordered flagship smartphones soon. The general launch date for the Essential Phone remains unknown, despite months of publicity and continued intrigue among Silicon Valley's gadget-loving circles. Recently, Essential's exclusive carrier Sprint announced it will open Phone pre-orders on its own website and stores. Essential opened up pre-orders on its website in May when the product was first ...

... this week in a press briefing in Essential's headquarters in Palo Alto. "People have neglected hardware for years, decades. The rest of the venture business is focused on software, on service."

Essential wanted to make a timeless, high-powered phone, according to Rubin. Its bezel-less and logo-less design is reinforced by titanium parts, stronger than the industry standard aluminum parts, and a ceramic exterior. It has no buttons in its front display but has a fingerprint scanner on the back. The company is also making accessories, like an attachable 360-degree camera, and the Phone will work with products from its competition, such as the Apple Homekit.

"How do you build technology that consumers are willing to invest in?" asked Rubin. "Inter-operability is really, really important. We acknowledge that, and we inter-operate with companies even if they are our competitors."

While the 5.7-inch phone feels denser than its Apple and Samsung counterparts, it is bereft of bloatware - rarely used default apps that are common in new smartphones.

United States District Court Eastern District of Texas

Case 2:18-cv-00412-RWS-RSP Document 22 Filed 02/21/19 Page 1 of 24 PageID #: 656

UNITED STATES DISTRICT COURT EASTERN DISTRICT OF TEXAS MARSHALL DIVISION

TRAXCELL TECHNOLOGIES, LLC, Plaintiff, v. NOKIA SOLUTIONS AND NETWORKS US LLC; NOKIA SOLUTIONS AND NETWORKS OY; NOKIA CORPORATION; NOKIA TECHNOLOGIES OY; ALCATEL-LUCENT USA, INC.; HMD GLOBAL OY; AND T-MOBILE, USA, INC., Defendants.

Civil Action No. 2:18-cv-412

JURY TRIAL DEMANDED

PLAINTIFF’S FIRST AMENDED COMPLAINT FOR PATENT INFRINGEMENT

Traxcell Technologies, LLC (“Traxcell”) files this First Amended Complaint and demand for jury trial seeking relief from patent infringement by Nokia Solutions and Networks US LLC (“Nokia Networks”), Nokia Solutions and Networks Oy (“Nokia Finland”), Nokia Corporation, Nokia Technologies Oy, Alcatel-Lucent USA Inc. (“ALU”) (collectively “Nokia”), HMD Global Oy (“HMD”), and T-Mobile USA, Inc. (“T-Mobile”). HMD, Nokia, and T-Mobile collectively referred to as Defendants, alleging as follows:

I. THE PARTIES

1. Plaintiff Traxcell is a Texas Limited Liability Company with its principal place of business located at 1405 Municipal Ave., Suite 2305, Plano, TX 75074.

2. Nokia Networks is a limited liability company organized and existing under the laws of Delaware with principal places of business located at (1) 6000 Connection Drive, MD E4-400, Irving, TX 75039; (2) 601 Data Dr., Plano, TX 75075; and (3) 2400 Dallas Pkwy., Plano, TX 75093, and a registered agent for service of process at National Registered Agents, Inc., 16055 Space Center, Suite 235, Houston, TX 77062.

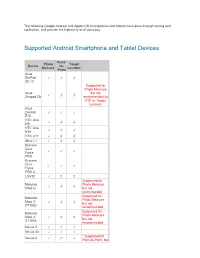

Supported Android Smartphone and Tablet Devices

The following Google Android and Apple iOS smartphones and tablets have gone through testing and calibration, and provide the highest level of accuracy:

Supported Android Smartphone and Tablet Devices

| Device | Photo Measure | Point-to-Point | Target Location | Notes |
|---|---|---|---|---|
| Asus ZenPad 3S 10 | ✓ | ✗ | ✗ | |
| Asus Zenpad Z8 | ✓ | ✗ | ✗ | Supported for Photo Measure but not recommended for P2P or Target Location |
| Asus Zenpad Z10 | ✓ | ✓ | ✓ | |
| HTC One M8 | ✓ | ✗ | ✗ | |
| HTC One Mini | ✓ | ✗ | ✗ | |
| HTC U11 | ✓ | ✗ | ✗ | |
| iNew L1 | ✓ | ✗ | ✗ | |
| Kyocera DuraForce PRO | ✓ | ✓ | ✓ | |
| Kyocera DuraForce PRO 2 | ✓ | ✓ | ✓ | |
| LG V20 | ✓ | ✗ | ✗ | |
| Motorola Moto G | ✓ | ✗ | ✗ | Supported for Photo Measure but not recommended |
| Motorola Moto X XT1052 | ✓ | ✗ | ✗ | Supported for Photo Measure but not recommended |
| Motorola Moto X XT1053 | ✓ | ✗ | ✗ | Supported for Photo Measure but not recommended |
| Nexus 5 | ✓ | ✓ | ✓ | |
| Nexus 5X | ✓ | ✓ | ✓ | |
| Nexus 6 | ✓ | ✓* | ✓ | * Supported for Point-to-Point, but cannot guarantee +/-3% accuracy |
| Nexus 6P | ✓ | ✓* | ✓ | * Supported for Point-to-Point, but cannot guarantee +/-3% accuracy |
| Nexus 7 | ✓ | ✓* | ✓ | * Supported for Point-to-Point, but cannot guarantee +/-3% accuracy |
| Samsung Galaxy A20 | ✓ | ✓ | ✓ | |
| Samsung Galaxy J7 Prime | ✓ | ✗ | ✓ | |
| Samsung GALAXY Note3 | ✓ | ✓* | ✓ | * Supported for Point-to-Point, but cannot guarantee +/-3% accuracy |
| Samsung GALAXY Note 4 | ✓ | ✓ | ✓ | |
| Samsung GALAXY Note 5 | ✓ | ✓* | ✓ | * Supported for Point-to-Point, but cannot guarantee +/-3% accuracy |
| Samsung GALAXY Note 8 | ✓ | ✓ | ✓ | |
| Samsung GALAXY Note 9 | ✓ | ✓ | ✓ | |
| Samsung GALAXY Note 10 | ✓ | ✓ | ✓ | |
| Samsung GALAXY Note 10+ | ✓ | ✓ | ✓ | |
| Samsung GALAXY Note 10+ 5G | ✓ | ✓ | ✓ | |
| Samsung GALAXY Tab 4 (old) | ✓ | ✗ | ✗ | Supported for Photo Measure but not recommended |

Samsung Supported for