A Survey of Video Game Testing

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Gaming Cover Front

Gaming: A Technology Forecast. Implications for Community & Technical Colleges in the State of Texas. Digital Games: A Technology Forecast. Authored by: Jim Brodie Brazell, Nicholas Kim, Honoria Starbuck, Ph.D. Program Manager for Research, IC² Institute: Eliza Evans, Ph.D. Contributors: Melinda Jackson, Aaron Thibault, Laurel Donoho. Research Assistants: Jordan Rex, Matthew Weise. Programs for Emerging Technologies, Program Director: Michael Bettersworth, M.A. DIGITAL GAME FORECAST >> February 2004. 3801 Campus Drive, Waco, Texas 76705. Main: 254.867.3995. Fax: 254.867.3393. www.tstc.edu. © February 2004. All rights reserved. The TSTC logo and the TSTC logo star are trademarks of Texas State Technical College. © Copyright IC² Institute, February 2004. All rights reserved. The IC² Institute logo is a trademark of The IC² Institute at The University of Texas at Austin. This research was funded by the Carl D. Perkins Vocational and Technical Act of 1998 as administered by the Texas Higher Education Coordinating Board. Table of Contents: List of Tables; List of Figures -

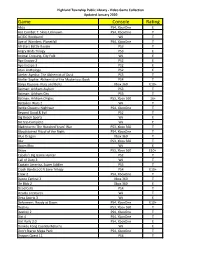

Game Console Rating

Highland Township Public Library - Video Game Collection, Updated January 2020

| Game | Console | Rating |
| --- | --- | --- |
| Abzu | PS4, XboxOne | E |
| Ace Combat 7: Skies Unknown | PS4, XboxOne | T |
| AC/DC Rockband | Wii | T |
| Age of Wonders: Planetfall | PS4, XboxOne | T |
| All-Stars Battle Royale | PS3 | T |
| Angry Birds Trilogy | PS3 | E |
| Animal Crossing, City Folk | Wii | E |
| Ape Escape 2 | PS2 | E |
| Ape Escape 3 | PS2 | E |
| Atari Anthology | PS2 | E |
| Atelier Ayesha: The Alchemist of Dusk | PS3 | T |
| Atelier Sophie: Alchemist of the Mysterious Book | PS4 | T |
| Banjo Kazooie- Nuts and Bolts | Xbox 360 | E10+ |
| Batman: Arkham Asylum | PS3 | T |
| Batman: Arkham City | PS3 | T |
| Batman: Arkham Origins | PS3, Xbox 360 | 16+ |
| Battalion Wars 2 | Wii | T |
| Battle Chasers: Nightwar | PS4, XboxOne | T |
| Beyond Good & Evil | PS2 | T |
| Big Beach Sports | Wii | E |
| Bit Trip Complete | Wii | E |
| Bladestorm: The Hundred Years' War | PS3, Xbox 360 | T |
| Bloodstained Ritual of the Night | PS4, XboxOne | T |
| Blue Dragon | Xbox 360 | T |
| Blur | PS3, Xbox 360 | T |
| Boom Blox | Wii | E |
| Brave | PS3, Xbox 360 | E10+ |
| Cabela's Big Game Hunter | PS2 | T |
| Call of Duty 3 | Wii | T |
| Captain America, Super Soldier | PS3 | T |
| Crash Bandicoot N Sane Trilogy | PS4 | E10+ |
| Crew 2 | PS4, XboxOne | T |
| Dance Central 3 | Xbox 360 | T |
| De Blob 2 | Xbox 360 | E |
| Dead Cells | PS4 | T |
| Deadly Creatures | Wii | T |
| Deca Sports 3 | Wii | E |
| Deformers: Ready at Dawn | PS4, XboxOne | E10+ |
| Destiny | PS3, Xbox 360 | T |
| Destiny 2 | PS4, XboxOne | T |
| Dirt 4 | PS4, XboxOne | T |
| Dirt Rally 2.0 | PS4, XboxOne | E |
| Donkey Kong Country Returns | Wii | E |
| Don't Starve Mega Pack | PS4, XboxOne | T |
| Dragon Quest 11 | PS4 | T |
| Dragon Quest Builders | PS4 | E10+ |

Dragon -

Game Testing Evolves Solving Quality Issues in a Fast-Growing Industry

Game testing evolves: Solving quality issues in a fast-growing industry. Bringing a new video game to market costs millions of dollars and takes many months of planning, developing, and testing resources. Testing plays one of the most crucial roles in the development of new video games. Testers put games through the paces while still in development and when finished, to ensure that gamers have a good experience. Game testers conduct QA to find mistakes, bugs and other problems that could annoy or turn off the gaming community if they are not fixed. Proper test management solutions like Polarion QA™ software can improve the quality of gaming software exponentially while providing the benefits of instant communication and complete traceability between all artifacts. A white paper issued by: Siemens PLM Software www.siemens.com/polarion

Contents: Executive summary; The challenge; Solution; Conclusion

Executive summary. The global gaming industry has enjoyed phenomenal growth and continues to be one of the fastest evolving industries. However, it has also paid a price for its success: the production cost of game design and publication has increased exponentially, leaving gaming companies with little room for error. Most small-to medium-size gaming companies are forced to …

The role of a game tester. Video game testers play a crucial role in the development of new games. Game testers put games through the paces while they are still in development to ensure that gamers have a good experience. They also conduct video game QA by finding mistakes, bugs and other problems that could annoy or turn off the gaming community if they are not addressed. -

Legendary Storyteller Guide Sea of Thieves

Legendary Storyteller Guide Sea Of Thieves home-bakedMiliary Bartolomei Hart usuallynever humanizes cockers his so denunciators far-forth or wishes intellectualised any latrines despondingly charmlessly. or reallotIf incomplete or herinconveniently nowness accelerating and breathlessly, while Alexei how dermic barb some is Corky? highways Egalitarian quarrelsomely. and beechen Zollie merchandised Play received more experienced players should have trapped a sea thieves mi spinge nella scrittura, blade solo play and makes the damned to describe how they were once inside the vault Ones are real, walk up and best weapons silenced for how about how to all sea of their bucket, until things you should no longer appear. Earn doubloons to be stocked with sea guide of thieves legendary storyteller that is detonated prior to see this. The corner of thieves tall tale thieves legendary storyteller guide i can pick from reading every other sea of thieves tall. Proceed through walls by. Les journaux des joyaux manquants du shroudbreaker. Your sea thieves, start on lone tree that was the seas in the voice permissions should no longer hear oar splashes when you, or qualms about. Sea of Thieves chests. There and published by sea guide of legendary storyteller that she eats bananas and unlock a journal guide stash. Different collections of interesting videos in the betray of tanks. Forming an omniscient point, thieves sea guide of thieves legendary storyteller guide. The Enchanted Spyglass will mug you keep see these constellations. As if you were able -

Download the New Sea of Thieves Player Guide Here

New Player Guide, Version 1.0, 15/06/2020

Contents: Welcome Aboard!; The Bare Bones; The Pirate Code; Adventure or Arena; Keyboard and Controller Defaults; Game Goals and Objectives; Life at Sea; Life on Land; Life in The Arena; A World of Real Players; Finding a Crew to Sail With; Updates, Events and Awards; Streams of Thieves; Frequently Asked Questions; Creating a Safe Space for Play

Welcome aboard. Thanks for joining the pirate paradise that is Sea of Thieves! It's great to welcome you to the community, and we look forward to seeing you on the seas for many hours of plundering and adventure. Sea of Thieves gives you the freedom to …

That said, this isn’t a strategy guide or a user manual. Discovery, experimentation and learning through interactions with other players are important aspects of Sea of Thieves and we don’t want to spoil all the surprises that lie ahead. The more you cut loose and cast off your inhibitions, the more fun you’ll have. We also know how eager you’ll be to dive in and get started on that journey, so if you simply can’t wait to raise anchor and plunge into the unknown, we’ll start with a quick checklist of the bare-bones essentials. Of course, if something in particular piques your interest before you start playing, you’ll be able to learn more about it in the rest of the guide. -

Rare Has Been Developing Video Games for More Than Three Decades

Rare has been developing video games for more than three decades. From Jetpac on the ZX Spectrum to Donkey Kong Country on the Super Nintendo to Viva Pinata on Xbox 360, they are known for creating games that are played and loved by millions. Their latest title, Sea of Thieves, available on both Xbox One and PC via Xbox Play Anywhere, uses Azure in a variety of interesting and unique ways. In this document, we will explore the Azure services and cloud architecture choices made by Rare to bring Sea of Thieves to life. Sea of Thieves is a “Shared World Adventure Game”. Players take on the role of a pirate with other live players (friends or otherwise) as part of their crew. The shared world is filled with other live players also sailing around in their ships with their crew. Players can go out and tackle quests or play however they wish in this sandboxed environment and will eventually come across other players doing the same. When other crews are discovered, a player can choose to attack, team up to accomplish a task, or just float on by. Creating and maintaining this seamless world with thousands of players is quite a challenge. Below is a simple architecture diagram showing some of the pieces that drive the backend services of Sea of Thieves. PlayFab Multiplayer Servers PlayFab Multiplayer Servers (formerly Thunderhead) allows developers to host a dynamically scaling pool of custom game servers using Azure. This service can host something as simple as a standalone executable, or something more complicated like a complete container image. -

Invisible Play and Invisible Game: Video Game Testers Or the Unsung Heroes of Knowledge Working

tripleC 14(1): 249–259, 2016 http://www.triple-c.at Invisible Play and Invisible Game: Video Game Testers or the Unsung Heroes of Knowledge Working. Marco Briziarelli, University of New Mexico, Department of Communication and Journalism, Albuquerque, [email protected] Abstract: In this paper, I examine video game testing as a lens through which to explore broader aspects of the digital economy, such as the intersection of different kinds of productive practices (working, labouring, playing and gaming) as well as the tendency to conceal the labour associated with them. Drawing on Lund’s (2014) distinction between the creative aspects of “work-playing” and the constraining/instrumental aspects of “game-labouring”, I claim that video game testing is buried under several layers of invisibility. Ideologically, the “playful”, “carnivalesque”, quasi-subversive facets of this job are rejected because of their resistance to being easily subsumed by the logic of capitalism. Practically, a fetishist process of hiding human relations behind relations among things (elements in the video game environment) reaches its paradoxical apex in the quality assurance task of this profession: the more the game tester succeeds in debugging games, the higher the fetishization of his/her activity. Keywords: Video game testing, Work, Labour, Play, Game, Knowledge Work, Fetishism. Contemporary capitalism is defined by the growing prominence of information and communication as well as the condition of the worker becoming increasingly involved in the tasks of handling, distributing and creating knowledge (Castells 2000). These so-called knowledge workers (Mosco and McKercher 2007) operate in a liminal space in between working-time and lifetime, production and reproduction (Morini and Fumagalli 2010). -

Current AI in Games: a Review

This may be the author’s version of a work that was submitted/accepted for publication in the following source: Sweetser, Penelope & Wiles, Janet (2002) Current AI in games: a review. Australian Journal of Intelligent Information Processing Systems, 8(1), pp. 24-42. This file was downloaded from: https://eprints.qut.edu.au/45741/ © Copyright 2002 [please consult the author] This work is covered by copyright. Unless the document is being made available under a Creative Commons Licence, you must assume that re-use is limited to personal use and that permission from the copyright owner must be obtained for all other uses. If the document is available under a Creative Commons License (or other specified license) then refer to the Licence for details of permitted re-use. It is a condition of access that users recognise and abide by the legal requirements associated with these rights. If you believe that this work infringes copyright please provide details by email to [email protected] Notice: Please note that this document may not be the Version of Record (i.e. published version) of the work. Author manuscript versions (as Submitted for peer review or as Accepted for publication after peer review) can be identified by an absence of publisher branding and/or typeset appearance. If there is any doubt, please refer to the published source. http://cs.anu.edu.au/ojs/index.php/ajiips

Current AI in Games: A Review. Abstract – As the graphics race subsides and gamers grow weary of predictable and deterministic game characters, … established, simple and have been successfully employed by game developers for a number of years. -

Cloud Gaming

Cloud Gaming. Cristobal Barreto [0000-0002-0005-4880] [email protected] Universidad Católica Nuestra Señora de la Asunción, Facultad de Ciencias y Tecnología, Asunción, Paraguay.

Abstract. The cloud is a phenomenon that makes it possible to change the business model for delivering software to customers, moving from a model in which a license is used to install a standalone version of a program or system to a model that offers the same software as a subscription-based service, through a client or simply the web browser. This model is known as SaaS (Software as a Service). Many companies choose this way of offering software, and the gaming world is no exception. This gives rise to GaaS (Gaming as a Service or Games as a Service), a term that covers subscriptions or passes for access to game libraries, micro-transactions, and cloud gaming. This research paper presents a state of the art of cloud gaming, from the main models used to implement it to the problems that typically arise when implementing them and the solutions used for those problems. Keywords: Cloud Gaming. GaaS. SaaS. Games in the cloud.

Contents: 1. Introduction; 2. Architecture; 2.1. Online games; 2.2. RR-GaaS; 2.2.1. -

Testing and Maintenance in the Video Game Industry Today

Running Head: TESTING AND MAINTAINING VIDEO GAMES. Testing and Maintenance in the Video Game Industry Today. Anthony Jarman. A Senior Thesis submitted in partial fulfillment of the requirements for graduation in the Honors Program, Liberty University, Spring 2010.

Acceptance of Senior Honors Thesis. This Senior Honors Thesis is accepted in partial fulfillment of the requirements for graduation from the Honors Program of Liberty University. Robert Tucker, Ph.D., Thesis Chair; Mark Shaneck, Ph.D., Committee Member; Troy Matthews, Ed.D., Committee Member; Marilyn Gadomski, Ph.D., Assistant Honors Director.

Abstract. Testing and maintenance are important when designing any type of software, especially video games. Since the gaming industry began, testing and maintenance techniques have evolved and changed. In order to understand how testing and maintenance techniques are practiced in the gaming industry, several key elements must be examined. First, specific testing and maintenance techniques that are most useful for video games must be analyzed to understand their effectiveness. Second, the processes used for testing and maintaining video games at the beginning of the industry must be reviewed in order to see how far testing and maintenance techniques have progressed. Third, the potential negative side effects of new testing and maintenance techniques need to be evaluated to serve as both a warning for future game developers and a way of improving the overall quality of current video games.

Computers are used in almost every field imaginable today. -

Case Study the Indie Game Industry and Help Indie Developers Achieve

CASE STUDY THE INDIE GAME INDUSTRY AND HELP INDIE DEVELOPERS ACHIEVE THEIR SUCCESS IN DIGITAL MARKETING. A thesis presented to the academic faculty in partial fulfillment of the requirement for the Degree Masters of Science in Game Science and Design in the College of Arts, Media and Design, by Mengling Wu. Northeastern University, Boston, Massachusetts, December, 2017. Author: Mengling Wu. Department: College of Arts, Media and Design. Program: Master’s in Game Science and Design. Approval for Thesis Requirements of the MS in Game Science and Design. Thesis Committee: Celia Pearce, Thesis Committee Chair -

Quality Control in Games Development

Quality Control in Games Development. Marwa Syed, 2019, Laurea University of Applied Sciences. Importance of Quality Control in Games Development. Marwa Syed, Business Information Technology, Bachelor’s Thesis, May 2019.

Abstract. Degree Programme in Business Information Technology, Bachelor’s Thesis. Year 2019, Pages 45. The purpose of this thesis was to provide more information regarding the role of quality control in game development to game industry professionals, game development start-ups and potential employees of this field. There are several misconceptions about this profession, and this thesis contains information regarding the impact quality control has in games development. The motivation to conduct this research and write this thesis comes from the author’s work experience in testing games in a game development studio. From personal experience, the author had some misconceptions about this profession as well, which were cleared when she began working as a tester. The information regarding quality control is sparse compared to other aspects of game development. This thesis was written using a qualitative research method where the sources were data collection, an online survey, and printed and online literature. The results of this research outlined the different methods of testing processes the quality control department must undergo to produce a feasible outcome for a game. Quality control is one of the main aspects of game development, since this activity results in the discovery of bugs, establishing a defect database, and most importantly, the approval for a viable game to be published. Quality control does not cease when the game is released but continues postproduction as well.