Implementation of an Intelligent Force Feedback Multimedia Game

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


THINC: a Virtual and Remote Display Architecture for Desktop Computing and Mobile Devices

THINC: A Virtual and Remote Display Architecture for Desktop Computing and Mobile Devices Ricardo A. Baratto Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the Graduate School of Arts and Sciences COLUMBIA UNIVERSITY 2011 © 2011 Ricardo A. Baratto This work may be used in accordance with Creative Commons, Attribution-NonCommercial-NoDerivs License. For more information about that license, see http://creativecommons.org/licenses/by-nc-nd/3.0/. For other uses, please contact the author. ABSTRACT THINC: A Virtual and Remote Display Architecture for Desktop Computing and Mobile Devices Ricardo A. Baratto THINC is a new virtual and remote display architecture for desktop computing. It has been designed to address the limitations and performance shortcomings of existing remote display technology, and to provide a building block around which novel desktop architectures can be built. THINC is architected around the notion of a virtual display device driver, a software-only component that behaves like a traditional device driver, but instead of managing specific hardware, enables desktop input and output to be intercepted, manipulated, and redirected at will. On top of this architecture, THINC introduces a simple, low-level, device-independent representation of display changes, and a number of novel optimizations and techniques to perform efficient interception and redirection of display output. This dissertation presents the design and implementation of THINC. It also introduces a number of novel systems which build upon THINC's architecture to provide new and improved desktop computing services. The contributions of this dissertation are as follows: • A high performance remote display system for LAN and WAN environments.

Video Game Programming ITP 380 (4 Units) Fall 2017

Video Game Programming ITP 380 (4 Units) Fall 2017 Objective This course provides students with an in-depth introduction to technologies and techniques used in the game industry today. At semester’s end, students will have: 1. Gained an understanding of core game systems (incl. rendering, input, sound, and collision/physics) 2. Developed a strong understanding of essential mathematics for games 3. Written multiple functional games in C++ individually 4. Learned critical thinking skills required to continue further study in the field Concepts 3D math for games. C++. 3D graphics. Collision detection. Introduction to A.I. Implementing gameplay. Getting a job in the game industry. Prerequisites CSCI 104 or ITP 365x Instructor Sanjay Madhav Contact Students in the course should post their questions on Piazza. Email: [email protected] (Only for non-course questions or prospective students). Office Hours Tuesday, Wednesday, and Thursday 12-1:30PM in OHE 530H Time/Location Tuesday and Thursday, 5 – 6:50PM in OHE 540 Course Structure Each week, we have a lecture on Tuesday and a lab assignment assigned in class on Thursday. The first part of each lab assignment is due at the end of class on Thursdays, and the final submission is due the following Wednesday. There are two midterm exams and a final exam. All exams are cumulative. Textbook Game Programming Algorithms and Techniques. Sanjay Madhav. ISBN-10: 0321940156. (Amazon link) Grading The course is graded with the following weights: Lab Assignments (12 x 5%) 60% Midterm Exam I 12.5% Midterm Exam II 12.5% Final Exam 15% TOTAL POSSIBLE 100% Software Students will be able to set up their own PC and/or Mac computers for use in the class.

STEM Student's Guide: Learning to Code and Design Video Games

A STEM Student's Guide: Learning to Code and Design Video Games For many, video gaming is not only a hobby but a passion. But while playing video games is fun, you could also consider taking things a step further and becoming a game developer someday. There are a lot of different ways to get involved in game design, but one of the most useful skills in this field is the ability to code. Anyone can learn to code and create video games, and there are all sorts of free resources online that can help. The two main roles for people who create video games are designers and programmers, but often, their responsibilities will overlap. While there are many different types of video games in the world, all of them are created with the same basic process. Brainstorming A game designer should first come up with a basic concept for their game. It's helpful to keep a list of ideas written down somewhere, whether this is on paper or in an app. Next, you'll need to think about the different abilities and actions possible for each character, the mood and tone of the game, and the story that will hold it all together. When thinking of the game's mood, it's essential to consider visual and audio effects and the overall aesthetic you're going for. Think about color, shape, and space, and keep in mind that the look of your game should be both unique and functional. While brainstorming new games, designers should keep in mind that they will often go through many ideas that do not work out before finding one that works.

Microsoft Directshow: a New Media Architecture

TECHNICAL PAPER Microsoft Directshow: A New Media Architecture By Amit Chatterjee and Andrew Maltz

The desktop revolution in production and post-production has dramatically changed the way film and television programs are made, simultaneously reducing equipment costs and increasing operator efficiency. The enabling digital innovations by individual companies using standard computing platforms has come at a price: these custom implementations and closed solutions make sharing of media and hardware between applications difficult if not impossible. Microsoft's DirectShow™ Streaming Media Architecture and Windows Driver Model provide the infrastructure for today’s post-production applications and hardware to truly become interoperable. This paper describes the architecture, supporting technologies, and their application in post-production scenarios.

The year 1989 marked a turning point in post-production equipment design with the introduction of desktop digital nonlinear editing systems. … Additionally, every implementation had to fight with operating system constraints and surprises, particularly in the areas of internal stream synchro- …

… streaming. Other motivating factors are the new hardware buses such as the IEEE 1394 serial bus and Universal serial bus (USB), which are designed with multimedia devices in mind and promise to enable broad new classes of audio and video application programs. To address these and other requirements, Microsoft introduced DirectShow™, a next-generation media-streaming architecture for the Windows and Macintosh platforms. In development for two and a half years, Directshow was released in August 1996, primarily as an MPEG-1 playback vehicle for Internet applications, although the infrastructure was designed with a wide range of applica- …

5.1-Channel PCI Sound Card

PSC705 5.1-Channel PCI Sound Card • Play all games in 5.1-channel surround sound, including EAX™ 2.0, A3D™ 1.0 and even ordinary stereo games! • Full compatibility with EAX™ 1.0, EAX™ 2.0 and A3D™ 1.0 games • Hear high-impact 3D sound from games, movies, music, and external sources using two, four, or six speakers. • 96 distinct 3D voices, 256 distinct DirectSound voices & 576 distinct synthesized Wavetable voices. • Included software: Sonic Foundry® SIREN Xpress™, Acid XPress™, QSound AudioPix™.

Arouse your senses. Make your games come to life with the excitement of full-blown home cinema. Seismic Edge supports the latest multi-channel audio games and DVD movies, and can transform stereo sources into deep-immersion 5.1-channel surround sound. (5.1 refers to five main speakers – front left, right and center, and rear left and right – and one bass subwoofer.) All this without straining your computer’s resources, because complex audio demands are handled onboard by Seismic Edge’s powerful computing chip. You’ve never experienced games like this before! Experience state-of-the-art 360º Surround Sound. An embedded, patented QSound algorithm extracts complex and distinct 5.1-channel information from stereo or ProLogic sources.

Technical Specifications Digital Acceleration • 96 streams of 3D audio acceleration including reverb, obstruction, and occlusion • 256 streams of DirectSound accelerations and digital mixing • Full-duplex, 48 kHz digital recording and playback • 64 hardware sample rate conversion channels up to 48 kHz • Wavetable and FM Synthesis • DirectInput devices Comprehensive Connectivity • 5.1-channel (6 channel) analog output

Introduction

Indie Game The Movie - Official Trailer - YouTube.flv 235 Free Indie Games in 10 Minutes - YouTube.flv Introduction Video Game Programming Spring 2012 Video Game Programming - A. Sharf 1 © Nintendo What is this course about? • Game design – Real world abstractions • Visuals • Interaction • Design iterations What is this course about? • Gameplay mechanics • Rapid prototyping • Many examples • FUN! Play mechanics are the foundation on which games are built… Playing with power www.1up.com/do/feature?cId=3151392 Game mechanics=ideas • mechanics determine structure and rules: – how you win – how you lose – what to do to stay alive • Basics - fundamental concepts that have become a part of many of today's games • Handy features - ideas not absolutely essential to the design but enhance experience. • Style - play mechanics that add an exciting touch of artistic flair to games

Video Game Programming ITP 380 (4 Units) Fall 2018

Video Game Programming ITP 380 (4 Units) Fall 2018 Objective This course provides students with an in-depth introduction to technologies and techniques used in the game industry today. At semester’s end, students will have: 1. Gained an understanding of core game systems (incl. rendering, input, sound, and collision/physics) 2. Developed a strong understanding of essential mathematics for games 3. Written multiple functional games in C++ individually 4. Learned critical thinking skills required to continue further study in the field Concepts 3D math for games. C++. 3D graphics. Collision detection. Introduction to A.I. Implementing gameplay. Getting a job in the game industry. Prerequisites CSCI 104 or ITP 365x Instructor Sanjay Madhav Contact Students in the course should post their questions on Piazza. Email: [email protected] (Only for non-course questions or prospective students). Office Hours Monday and Wednesday, 4 – 6PM in OHE 530H or by appointment Time/Location Tuesday and Thursday, 5 – 6:50PM in OHE 540 Course Structure Most weeks, we have a lecture on Tuesday and a lab assignment assigned in class on Thursday. The first part of each lab assignment is due at the end of class on Thursdays, and the final submission is due the following Wednesday. There are two midterm exams and a final exam. All exams are cumulative. Textbook Madhav, Sanjay. Game Programming in C++. Students can read this book for free through the USC library website (here). Alternatively, students can purchase a copy of the book from Amazon or the USC bookstore. Grading The course is graded with the following weights: Lab Assignments (12 x 5%) 60% Midterm Exam I 12.5% Midterm Exam II 12.5% Final Exam 15% TOTAL POSSIBLE 100% Software Students will be able to set up their own PC and/or Mac computers for use in the class.

Microsoft Palladium

Microsoft Palladium: A Business Overview Combining Microsoft Windows Features, Personal Computing Hardware, and Software Applications for Greater Security, Personal Privacy, and System Integrity by Amy Carroll, Mario Juarez, Julia Polk, Tony Leininger Microsoft Content Security Business Unit June 2002 Legal Notice This is a preliminary document and may be changed substantially prior to final commercial release of the software described herein. The information contained in this document represents the current view of Microsoft Corporation on the issues discussed as of the date of publication. Because Microsoft must respond to changing market conditions, it should not be interpreted to be a commitment on the part of Microsoft, and Microsoft cannot guarantee the accuracy of any information presented after the date of publication. This White Paper is for informational purposes only. MICROSOFT MAKES NO WARRANTIES, EXPRESS OR IMPLIED, AS TO THE INFORMATION IN THIS DOCUMENT. Complying with all applicable copyright laws is the responsibility of the user. Without limiting the rights under copyright, no part of this document may be reproduced, stored in or introduced into a retrieval system, or transmitted in any form or by any means (electronic, mechanical, photocopying, recording, or otherwise), or for any purpose, without the express written permission of Microsoft Corporation. Microsoft may have patents, patent applications, trademarks, copyrights, or other intellectual property rights covering subject matter in this document. Except as expressly provided in any written license agreement from Microsoft, the furnishing of this document does not give you any license to these patents, trademarks, copyrights, or other intellectual property. 
Unless otherwise noted, the example companies, organizations, products, domain names, e-mail addresses, logos, people, places and events depicted herein are fictitious, and no association with any real company, organization, product, domain name, e-mail address, logo, person, place or event is intended or should be inferred.

Directx 11 Extended to the Implementation of Compute Shader

DirectX About the Tutorial Microsoft DirectX is a collection of application programming interfaces (APIs) for handling multimedia tasks, especially game programming and video, on Microsoft platforms. Direct3D, a well-known component of DirectX, is also used by other software applications for visualization and graphics tasks such as CAD/CAM engineering. Audience This tutorial has been prepared for developers and programmers in the multimedia industry who are interested in pursuing a career in DirectX. Prerequisites Before proceeding with this tutorial, the reader is expected to have knowledge of multimedia, graphics and game programming basics. This includes mathematical foundations as well. Copyright & Disclaimer Copyright 2019 by Tutorials Point (I) Pvt. Ltd. All the content and graphics published in this e-book are the property of Tutorials Point (I) Pvt. Ltd. The user of this e-book is prohibited to reuse, retain, copy, distribute or republish any contents or a part of contents of this e-book in any manner without written consent of the publisher. We strive to update the contents of our website and tutorials as timely and as precisely as possible, however, the contents may contain inaccuracies or errors. Tutorials Point (I) Pvt. Ltd. provides no guarantee regarding the accuracy, timeliness or completeness of our website or its contents including this tutorial. If you discover any errors on our website or in this tutorial, please notify us at [email protected]

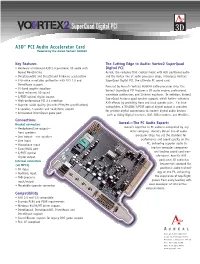

*Final New V2 PCI Super

SuperQuad Digital PCI A3D™ PCI Audio Accelerator Card Powered by the Aureal Vortex2 AU8830

Key Features • Hardware accelerated A3D 2.0 positional 3D audio with Aureal Wavetracing • DirectSound3D and DirectSound hardware acceleration • 320-voice wavetable synthesizer with DLS 1.0 and DirectMusic support • 10-band graphic equalizer • Quad enhanced 3D sound • S/PDIF optical digital output • High-performance PCI 2.1 interface • Superior audio quality (exceeds PC98/99 specifications) • 4-speaker, 2-speaker and headphone support • Accelerated DirectInput game port

The Cutting Edge in Audio: Vortex2 SuperQuad Digital PCI Aureal, the company that created magic with A3D positional audio and the Vortex line of audio processor chips, introduces Vortex2 SuperQuad Digital PCI, the ultimate PC sound card. Powered by Aureal’s Vortex2 AU8830 audio processor chip, the Vortex2 SuperQuad PCI features a 3D audio engine, professional wavetable synthesizer, and 10-band equalizer. In addition, Vortex2 SuperQuad features quad speaker support, which further enhances A3D effects by providing front and back speaker pairs. For true audiophiles, a TOSLINK S/PDIF optical digital output is provided for pristine digital connections to modern digital audio devices such as Dolby Digital receivers, DAT, CDR recorders, and MiniDisc.

Connections Bracket connectors • Headphone/Line output: front speakers • Line output: rear speakers • Line input • Microphone input • Game/MIDI port • S/PDIF optical digital output

Aureal: The PC Audio Experts Aureal’s expertise in PC audio is unequalled by any other company. Aureal’s Vortex line of audio processor chips has set the standard for performance and sound quality on the PC, delivering superior audio to top-tier computer companies and leading sound card manufacturers.

Nokia Standard Document Template

HELSINKI UNIVERSITY OF TECHNOLOGY Department of Electrical and Communications Engineering Matti Paavola Advanced audio interfaces for mobile Java This Master's Thesis has been submitted for official examination for the degree of Master of Science in Espoo on June 2, 2003. Supervisor of the Thesis: Professor Matti Karjalainen Instructor of the Thesis: Antti Rantalahti, M.Sc. (Tech.)

HELSINKI UNIVERSITY OF TECHNOLOGY Abstract of the Master's Thesis Author: Matti Paavola Title of the thesis: Advanced audio interfaces for mobile Java Date: June 2, 2003 Number of pages: 64 Department: Department of Electrical and Communications Engineering Professorship: S-89 Acoustics and Audio Signal Processing Supervisor: Professor Matti Karjalainen Instructor: Antti Rantalahti, M.Sc. (Tech.) Abstract: This thesis deals with controlling the audio signal processing of a mobile device programmatically. It reviews the psychoacoustic phenomena relevant to the work and their application, virtual acoustics. It also examines five audio signal processing control interfaces that are in common use on personal computers. In addition, one Java interface designed for mobile devices is reviewed. This programming interface forms the platform for the interface developed in the thesis itself. The main result of the work is a new audio signal processing control interface. This interface is designed specifically for mobile devices. The new interface supports, among other things, 3D audio, artificial reverberation, equalization, and various effect filters. The presented

3D Graphics for Virtual Desktops Smackdown

3D Graphics for Virtual Desktops Smackdown Author(s): Shawn Bass, Benny Tritsch and Ruben Spruijt Version: 1.11 Date: May 2014

CONTENTS
1. Introduction
1.1 Objectives
1.2 Intended Audience
1.3 Vendor Involvement
1.4 Feedback
1.5 Contact
2. About
2.1 About PQR
2.2 Acknowledgements
3. Team Remoting Graphics Experts - TeamRGE
4. Quotes
5. Tomorrow’s Workspace
5.1 Vendor Matrix, who delivers what
6. Desktop Virtualization 101
6.1 Server Hosted Desktop Virtualization directions
6.2 VDcry?!