The Impact of Electronic Tests on Students' Performance Assessment

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Introduction to Electronic Assessment Portfolios

Teacher portfolios: The process of developing electronic portfolios can document evidence of teacher competencies and guide long-term professional development. The competencies may be locally defined, or linked to national teaching standards. Two primary assumptions in this process are: 1) a portfolio is not a haphazard collection of artifacts (i.e., a scrapbook) but rather a reflective tool which demonstrates growth over time; and 2) as we move to more standards-based teacher performance assessment, we need new tools to record and organize evidence of successful teaching, for both practicing professionals and student teachers. Student portfolios: One of the most exciting developments in the school reform movement is the use of alternative forms of assessment to evaluate student learning, and one of the most popular forms of authentic assessment is the use of portfolios. The point of the portfolio (electronic or paper) is to provide a "richer picture" of a student's abilities, and to show growth over time. Portfolios are being developed at all phases of the life span, beginning in early childhood, through K-12 and higher education, to professional teaching portfolios. As more schools expand student access to technology, there is an increasing number of options available for developing electronic student portfolios, including relational databases, hypermedia programs, WWW pages, PDF files, and commercial proprietary programs. Electronic portfolios: Electronic portfolio development draws on two bodies of literature: multimedia development (decide, design, develop, evaluate) (Ivers & Barron, 1998) and portfolio development (collection, selection, reflection, projection) (Danielson & Abrutyn, 1997). Both processes are complementary and essential for effective electronic portfolio development.

International Dimensions of Lifelong Education in General and Liberal Studies

Perspectives (1969-1979) Volume 6 Number 3 Winter Article 8 1974 International Dimensions of Lifelong Education in General and Liberal Studies Warren L. Hickman Eisenhower College Albert E. Levak Michigan State University Wolf D. Fuhrig MacMurray College Follow this and additional works at: https://scholarworks.wmich.edu/perspectives Part of the Higher Education Commons, and the Liberal Studies Commons Recommended Citation Hickman, Warren L.; Levak, Albert E.; and Fuhrig, Wolf D. (1974) "International Dimensions of Lifelong Education in General and Liberal Studies," Perspectives (1969-1979): Vol. 6: No. 3, Article 8. Available at: https://scholarworks.wmich.edu/perspectives/vol6/iss3/8 This Panel is brought to you for free and open access by the Western Michigan University at ScholarWorks at WMU. It has been accepted for inclusion in Perspectives (1969-1979) by an authorized editor of ScholarWorks at WMU. For more information, please contact wmu-[email protected]. SESSION 5 International Dimensions of Lifelong Education in General and Liberal Studies Lifelong and Worldwide By WARREN L. HICKMAN Probably no society has ever so mangled and abused its own vocabulary as much as have Americans. Language is meant for communication, to simplify the exchange of ideas. But when academic disciplines and professions extract words from the common language and arbitrarily attach a new restricted disciplinary meaning, they do a disservice to the entire society. In place of a common language, we are faced with ever-expanding jargons.

Towards a Research Agenda on Computer-Based

TOWARDS A RESEARCH AGENDA ON COMPUTER-BASED ASSESSMENT Challenges and needs for European Educational Measurement Friedrich Scheuermann & Angela Guimarães Pereira (Eds.) EUR 23306 EN - 2008 The Institute for the Protection and Security of the Citizen provides research-based, systems-oriented support to EU policies so as to protect the citizen against economic and technological risk. The Institute maintains and develops its expertise and networks in information, communication, space and engineering technologies in support of its mission. The strong cross-fertilisation between its nuclear and non-nuclear activities strengthens the expertise it can bring to the benefit of customers in both domains. European Commission Joint Research Centre Institute for the Protection and Security of the Citizen Contact information Address: Unit G09, QSI / CRELL TP-361, Via Enrico Fermi, 2749; 21027 Ispra (VA), Italy E-mail: [email protected], [email protected] Tel.: +39-0332-78.6111 Fax: +39-0332-78.5733 http://ipsc.jrc.ec.europa.eu/ http://www.jrc.ec.europa.eu/ Legal Notice Neither the European Commission nor any person acting on behalf of the Commission is responsible for the use which might be made of this publication. Europe Direct is a service to help you find answers to your questions about the European Union Freephone number (*): 00 800 6 7 8 9 10 11 (*) Certain mobile telephone operators do not allow access to 00 800 numbers or these calls may be billed. A great deal of additional information on the European Union is available on the Internet. It can be accessed through the Europa server http://europa.eu/ JRC44526 EUR 23306 EN ISSN 1018-5593 Luxembourg: Office for Official Publications of the European Communities © European Communities, 2008 Reproduction is authorised provided the source is acknowledged Printed in Italy Table of Contents Introduction

Meta-Analysis of the Relationships Between Different Leadership Practices and Organizational, Teaming, Leader, and Employee Outcomes*

Journal of International Education and Leadership Volume 8 Issue 2 Fall 2018 http://www.jielusa.org/ ISSN: 2161-7252 Meta-Analysis of the Relationships Between Different Leadership Practices and Organizational, Teaming, Leader, and Employee Outcomes* Carl J. Dunst Orelena Hawks Puckett Institute Mary Beth Bruder University of Connecticut Health Center Deborah W. Hamby, Robin Howse, and Helen Wilkie Orelena Hawks Puckett Institute * This study was supported, in part, by funding from the U.S. Department of Education, Office of Special Education Programs (No. 325B120004) for the Early Childhood Personnel Center, University of Connecticut Health Center. The contents and opinions expressed, however, are those of the authors and do not necessarily reflect the policy or official position of either the Department or Office and no endorsement should be inferred or implied. The authors report no conflicts of interest. The meta-analysis described in this paper evaluated the relationships between 11 types of leadership practices and 7 organizational, teaming, leader, and employee outcomes. A main focus of analysis was whether the leadership practices were differentially related to the study outcomes. Studies were eligible for inclusion if the correlations between leadership subscale measures (rather than global measures of leadership) and outcomes of interest were reported. The random effects weighted average correlations between the independent and dependent measures were used as the sizes of effects for evaluating the influences of the leadership practices on the outcome measures. One hundred and twelve studies met the inclusion criteria and included 39,433 participants. The studies were conducted in 31 countries in different kinds of programs, organizations, companies, and businesses.

E-Lev: Solution for Early Childhood Education Teachers in Industrial Revolution Era 4.0

Advances in Social Science, Education and Humanities Research, volume 387 3rd International Conference on Education Innovation (ICEI 2019) E-LEv: Solution for Early Childhood Education Teachers in Industrial Revolution Era 4.0 Prima Suci Rohmadheny, Avanti Vera Risti Pramudyani Teacher Training of Early Childhood Education Universitas Ahmad Dahlan Yogyakarta, Indonesia [email protected] Abstract: Early Childhood Education (ECE) learning evaluation refers to the evaluation of early aged-children' developmental achievement. This is pivotal to measure developmental achievement during and after the learning activity is conducted. From the previous study shown that teacher faced various problems to do assessment and evaluation. Accordingly, this study aimed to explore the ECE teachers' need to find solutions for the problems. The data were collected using a questionnaire distributed randomly in Indonesia through google form. The data were analyzed in accordance with the Miles and Hubberman analysis model. The data were presented descriptively to clear up the finding of the study. The study found that (a) the majority of teachers had made attempts to improve ... administration, scoring, and its interpretation. It was the competence in communicating the result of the assessment that becomes the problem faced by the teachers there [6]. It is different from what happens in Indonesia. The empirical fact proves that the learning assessment and evaluation in ECE are still sub-optimal; it was due to various problems faced by the ECE teacher. This fact is supported by the result of the preliminary study conducted by the researchers. The preliminary study found various problems such as teachers who perceive that learning assessment and evaluation in Indonesia is time-consuming. While, the teachers are responsible for a vast amount of duties.

A Meta-Analysis of the Associations Among Quality Indicators in Early Childhood Education and Care and Relations with Child Development and Learning

A Meta-analysis of the associations among quality indicators in early childhood education and care and relations with child development and learning Full reference list of identified studies This document includes a full reference list of all studies included in the data extraction process for the meta-analysis “Associations among quality indicators in early childhood education and care (ECEC) and relations with child development and learning”. The meta-analysis was commissioned as part of initial desk-based research for the OECD ECEC project “Policy Review: Quality beyond Regulations in ECEC”, focusing in particular on process quality, to be conducted in 2017-2020. This document provides auxiliary information to the report “Engaging young children: Lessons from research about quality in Early Childhood Education and Care” released on 27 March 2018, and available for download on this link. The report includes an annex (Annex C) that summarizes the meta-analysis methodology and provides further context for this document. Authors and contact information: Antje von Suchodoletz, New York University Abu Dhabi, UAE, Email: [email protected] D. Susie Lee, New York University, USA Bharathy Premachandra, New York University, USA Hirokazu Yoshikawa, New York University, USA Key: 0 = Not Prescreened; 1 = Prescreened; 1,2 = Prescreened/Full Screened; 1,2,3 = Prescreened/Full Screened/Qualified for Coding; 1,2,3,4 = Prescreened/Full Screened/Qualified for Coding/Fully Coded; X = Prescreened/Qualified for full screening but full screening pending. Report # Citation Status ID 1 200000 A comparative study of childcare in Japan and the USA: Who needs to take care of our young children? (2011).

Electronic Evaluation: Facts, Challenges and Expectations

The Online Journal of New Horizons in Education - October 2019 Volume 9, Issue 4 ELECTRONIC EVALUATION: FACTS, CHALLENGES AND EXPECTATIONS Aissa HANIFI Chlef University, Algeria, Relizane, 48011 [email protected] & [email protected] ABSTRACT Due to its fundamental role in the teaching and learning process, the concept of evaluation has been widely tackled in academic spheres. As a matter of fact, evaluation can help us discriminate between good and bad courses and thus establishes a solid background for setting success to major courses’ aims and outcomes. Whether in electronic or paper form and whether as a continuous or periodic process, evaluation helps teachers to form judgement values and students’ development and achievements. With regard to electronic evaluation, more and more Algerian university teachers are performing it, though to a low degree, through the online supervision of Master and Doctorate theses, for instance. The current study aims at investigating the teachers’ attitude to the use of electronic evaluation as a means to evaluate students’ performance and progress. The other aim is to shed light on other aspects of electronic evaluation which are still under limited experimental process and need to be upgraded in the university departments’ database. This might include mainly the electronic course evaluation system and electronic submission of course work assignments. Results which were collected through an online survey submitted in the form of a Likert scale questionnaire to 16 teachers randomly selected across different Algerian universities revealed interesting findings. Most teachers agreed that an electronic evaluation system can replace paper evaluation forms since it saves much of the teacher’s time and energy.

Tools and Possibilities for Electronic Assessment in Higher Education

E-ASSESSMENT: TOOLS AND POSSIBILITIES FOR ELECTRONIC ASSESSMENT IN HIGHER EDUCATION P. Miranda (1), P. Isaias (2), S. Pifano (3). (1) Research Center for Engineering a Sustainable Development (Sustain. RD-IPS) Setubal School of Technology, Polytechnic Institute of Setubal (PORTUGAL); (2) Institute for Teaching and Learning Innovation (ITaLI) and UQ Business School, The University of Queensland (AUSTRALIA); (3) ISR Lab – Information Society Research Lab (PORTUGAL) Abstract Technology deployment in higher education is at a thriving stage, with an increasing number of institutions embracing the possibilities afforded by technological tools and a mounting interest from teachers who are becoming more familiar with the intricacies of technology. There are several aspects of higher education that have been digitalised and transformed by the constant technological advancements of key tools for learning. Assessment, as an integral part of learning, has also been adopting a growing number and variety of tools to assist the measurement of the learning outcomes of the students. This paper intends to examine the technology that is available to develop effective e-assessment practices. It reviews key literature about the use of technology to develop assessment in higher education to provide a comprehensive account of the tools that teachers can use to assist the assessment of their students’ learning outcomes. The e-assessment instruments that are presented in this paper have different degrees of complexity, ranging from simple e-exams to the use of complete gamified learning and assessment experiences. They represent varied solutions that can be adapted to an ample assortment of assessment scenarios and needs, and they extend the possibilities of assessment far beyond the limits of conventional grading, offering a more current and more adequate answer to the demands of the contemporary higher education sector.

Electronic Assessment and Feedback Tool in Supervision of Nursing Students During Clinical Training

Electronic Assessment and Feedback Tool in Supervision of Nursing Students During Clinical Training Sari Mettiäinen Tampere University of Applied Sciences, Finland [email protected] Abstract: The aim of this study was to determine nursing teachers’ and students’ attitudes to and experiences of using an electronic assessment and feedback tool in supervision of clinical training. The tool was called eTaitava, and it was developed in Finland. During the pilot project, the software was used by 12 nursing teachers and 430 nursing students. Nine of the teachers participated in the interviews and survey, and 112 students responded to the survey. The data were mainly analysed with qualitative methods. In the eTaitava web-based user interface, the teacher constructs questions to map the students’ learning process, and sets them to be sent on a daily basis. According to the findings, four-fifths of the students responded to the questions almost daily. They thought the software was easy to use and answering the questions took about 5 minutes a day. Based on the students’ and teachers’ experiences, the use of the electronic assessment and feedback tool supported supervision of clinical training. It supported the students’ target-oriented learning, supervised the students’ daily work, and made it visible for the teachers. Responding to the software questions inspired the students’ cognitive learning, and based on the responses, the teachers noticed which students needed more support and could consequently allocate them more supervision time. Responding also supported the students’ continuous self-evaluation, and considering the responses structured the students’ and teachers’ final assessment discussion. By means of the electronic assessment and feedback tool, it is possible to promote learning during clinical training by challenging students to reflect on their learning experiences.

World Education Report, 2000

World education report 2000 The right to education: towards education for all throughout life UNESCO Publishing The designations employed and the presentation of the material in this publication do not imply the expression of any opinion whatsoever on the part of UNESCO concerning the legal status of any country, territory, city or area, or of its authorities, or concerning the delimitation of its frontiers or boundaries. Published in 2000 by the United Nations Educational, Scientific and Cultural Organization 7, Place de Fontenoy, 75352 Paris 07 SP Cover design by Jean-Francis Chériez Graphics by Visit-Graph, Boulogne-Billancourt Printed by Darantiere, 21800 Quétigny ISBN 92-3-103729-3 © UNESCO 2000 Printed in France Foreword The World Education Report 2000’s focus on education as a basic human right is a fitting choice for the International Year for the Culture of Peace. Education is one of the principal means to build the ‘defences of peace’ in the minds of men and women everywhere – the mission assumed by UNESCO when the Organization was created more than half a century ago. The twentieth century saw human rights accepted worldwide as a guiding principle. ... promoting reflection on the many different facets of the right to education, extending from initial or basic education to lifelong learning. The report is also designed to complement the Education for All 2000 Assessment undertaken by the international community as a follow-up to the World Conference on Education for All (Jomtien, Thailand, 1990). This assessment process, culminating at the World Education

View Document

Understanding the Differences A Working Paper Series on Higher Education in Canada, Mexico and the United States Working Paper #9 Academic Mobility in North America: Towards New Models of Integration and Collaboration by Fernando León-García Dewayne Matthews Lorna Smith Western Interstate Commission for Higher Education The Western Interstate Commission for Higher Education (WICHE) is a public interstate agency established to promote and to facilitate resource sharing, collaboration, and cooperative planning among the western states and their colleges and universities. Member and affiliate states include Alaska, Arizona, California, Colorado, Hawaii, Idaho, Montana, Nevada, New Mexico, North Dakota, Oregon, South Dakota, Utah, Washington, and Wyoming. In 1993, WICHE, working in partnership with the Mexican Association for International Education (AMPEI), developed the U.S.-Mexico Educational Interchange Project to facilitate educational interchange and the sharing of resources across the western region of the U.S. and with Mexico. In 1995, the project began a trinational focus which includes Canada, with the goal of fostering educational collaboration across North America. In 1997, the project changed its name to the "Consortium for North American Higher Education Collaboration" (CONAHEC). The "Understanding the Differences" series was developed as a resource for the initiative and was created under the direction of WICHE's Constituent Relations and Communications and Policy and Information Units. CONAHEC’s Web site is located at http://conahec.org.

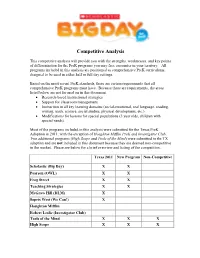

Competitive Analysis

Competitive Analysis This competitive analysis will provide you with the strengths, weaknesses, and key points of differentiation for the PreK programs you may encounter in your territory. All programs included in this analysis are positioned as comprehensive PreK curriculums, designed to be used in either half or full day settings. Based on the most recent PreK standards, there are certain requirements that all comprehensive PreK programs must have. Because these are requirements, the areas listed below are not focused on in this document. • Research-based instructional strategies • Support for classroom management • Instruction in all key learning domains (social-emotional, oral language, reading, writing, math, science, social studies, physical development, etc.) • Modifications for lessons for special populations (3 year olds, children with special needs). Most of the programs included in this analysis were submitted for the Texas PreK Adoption in 2011, with the exception of Houghton Mifflin PreK and Investigator Club. Two additional programs (High Scope and Tools of the Mind) were submitted in the TX adoption and are not included in this document because they are deemed non-competitive in the market. Please see below for a brief overview and listing of the competition. Texas 2011 New Program Non-Competitive Scholastic (Big Day) X X Pearson (OWL) X X Frog Street X X Teaching Strategies X X McGraw Hill (DLM) X Sopris West (We Can!) X Houghton Mifflin Robert Leslie (Investigator Club) Tools of the Mind X X X High Scope X X X Competitive