IMU-Based Locomotor Intention Prediction for Real-Time Use In

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Malware Classification with BERT

San Jose State University, SJSU ScholarWorks. Master's Projects, Master's Theses and Graduate Research, Spring 5-25-2021. Malware Classification with BERT, by Joel Lawrence Alvares. Follow this and additional works at: https://scholarworks.sjsu.edu/etd_projects Part of the Artificial Intelligence and Robotics Commons, and the Information Security Commons. Malware Classification with Word Embeddings Generated by BERT and Word2Vec. Presented to the Department of Computer Science, San José State University, in partial fulfillment of the requirements for the degree, by Joel Alvares, May 2021. The designated project committee approves the project titled Malware Classification with BERT by Joel Lawrence Alvares. Approved for the Department of Computer Science, San Jose State University, May 2021: Prof. Fabio Di Troia, Prof. William Andreopoulos, Prof. Katerina Potika (Department of Computer Science). ABSTRACT: Malware classification is used to distinguish unique types of malware from each other. This project aims to carry out malware classification using word embeddings, which are used in Natural Language Processing (NLP) to identify and evaluate the relationship between the words of a sentence. Word embeddings are generated by BERT and Word2Vec for malware samples to carry out multi-class classification. BERT is a transformer-based pre-trained NLP model which can be used for a wide range of tasks such as question answering, paraphrase generation and next sentence prediction. However, the attention mechanism of a pre-trained BERT model can also be used in malware classification by capturing information about the relation between each opcode and every other opcode belonging to a malware family.

Lecture 4 Feedforward Neural Networks, Backpropagation

CS7015 (Deep Learning): Lecture 4. Feedforward Neural Networks, Backpropagation. Mitesh M. Khapra, Department of Computer Science and Engineering, Indian Institute of Technology Madras. References/Acknowledgments: See the excellent videos by Hugo Larochelle on Backpropagation. Module 4.1: Feedforward Neural Networks (a.k.a. multilayered network of neurons). The input to the network is an $n$-dimensional vector. The network contains $L - 1$ hidden layers (2, in this case) having $n$ neurons each. Finally, there is one output layer containing $k$ neurons (say, corresponding to $k$ classes). Each neuron in the hidden layer and output layer can be split into two parts: pre-activation and activation ($a_i$ and $h_i$ are vectors). The input layer can be called the 0-th layer and the output layer can be called the $L$-th layer. $W_i \in \mathbb{R}^{n \times n}$ and $b_i \in \mathbb{R}^{n}$ are the weight and bias between layers $i - 1$ and $i$ ($0 < i < L$). $W_L \in \mathbb{R}^{n \times k}$ and $b_L \in \mathbb{R}^{k}$ are the weight and bias between the last hidden layer and the output layer ($L = 3$ in this case). The pre-activation at layer $i$ is given by $a_i(x) = b_i + W_i h_{i-1}(x)$. The activation at layer $i$ is given by $h_i(x) = g(a_i(x))$, where $g$ is called the activation function (for example, logistic, tanh, linear, etc.). The activation at the output layer is given by $f(x) = h_L(x) = O(a_L(x))$, where $O$ is the output activation function (for example, softmax, linear, etc.). To simplify notation we will refer to $a_i(x)$ as $a_i$ and $h_i(x)$ as $h_i$. [Figure: network diagram with inputs $x_1, x_2, \ldots, x_n$, layers $a_1, h_1, a_2, h_2, a_3$, parameters $W_1, b_1, W_2, b_2, W_3, b_3$, and output $h_L = \hat{y} = f(x)$.]
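The forward pass described in this excerpt can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not code from the lecture: the function names (`affine`, `forward`), the choice of logistic for $g$ and softmax for $O$, and the convention that each weight matrix is stored with one row per output neuron are all choices made for this sketch.

```python
import math

def logistic(a):
    # Element-wise logistic activation g(a) = 1 / (1 + exp(-a)).
    return [1.0 / (1.0 + math.exp(-v)) for v in a]

def softmax(a):
    # Output activation O(a); subtracting the max keeps exp() numerically stable.
    m = max(a)
    exps = [math.exp(v - m) for v in a]
    total = sum(exps)
    return [e / total for e in exps]

def affine(W, b, h):
    # Pre-activation a_i = b_i + W_i h_{i-1}.
    # Assumption of this sketch: W is a list of rows, one row per output neuron.
    return [b_j + sum(w * x for w, x in zip(row, h)) for row, b_j in zip(W, b)]

def forward(x, weights, biases, g=logistic, O=softmax):
    # h_0 = x; hidden layers use g, the last layer uses O.
    h = x
    for W, b in zip(weights[:-1], biases[:-1]):
        h = g(affine(W, b, h))          # h_i = g(a_i)
    return O(affine(weights[-1], biases[-1], h))  # f(x) = O(a_L)

# Tiny example with n = 2 inputs, two hidden layers, k = 3 classes (L = 3).
n, k = 2, 3
weights = [
    [[0.0] * n for _ in range(n)],
    [[0.0] * n for _ in range(n)],
    [[0.0] * n for _ in range(k)],
]
biases = [[0.0] * n, [0.0] * n, [0.0] * k]
y = forward([1.0, -2.0], weights, biases)
```

With all parameters set to zero, every output pre-activation is zero, so the softmax assigns equal probability to each of the $k$ classes; non-trivial weights would break that symmetry.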

Incorporating Automated Feature Engineering Routines Into Automated Machine Learning Pipelines by Wesley Runnels

Incorporating Automated Feature Engineering Routines into Automated Machine Learning Pipelines, by Wesley Runnels. Submitted to the Department of Electrical Engineering and Computer Science on May 12, 2020, in partial fulfillment of the requirements for the degree of Master of Engineering in Electrical Engineering and Computer Science at the Massachusetts Institute of Technology, May 2020. © Massachusetts Institute of Technology 2020. All rights reserved. Certified by Tim Kraska, Department of Electrical Engineering and Computer Science, Thesis Supervisor. Accepted by Katrina LaCurts, Chair, Master of Engineering Thesis Committee. Abstract: Automating the construction of consistently high-performing machine learning pipelines has remained difficult for researchers, especially given the domain knowledge and expertise often necessary for achieving optimal performance on a given dataset. In particular, the task of feature engineering, a key step in achieving high performance for machine learning tasks, is still mostly performed manually by experienced data scientists. In this thesis, building upon the results of prior work in this domain, we present a tool, rl feature eng, which automatically generates promising features for an arbitrary dataset. In particular, this tool is specifically adapted to the requirements of augmenting a more general auto-ML framework. We discuss the performance of this tool in a series of experiments highlighting the various options available for use, and finally discuss its performance when used in conjunction with Alpine Meadow, a general auto-ML package.

Revisiting the Softmax Bellman Operator: New Benefits and New Perspective

Revisiting the Softmax Bellman Operator: New Benefits and New Perspective. Zhao Song * 1, Ronald E. Parr 1, Lawrence Carin 1. Abstract: The impact of softmax on the value function itself in reinforcement learning (RL) is often viewed as problematic because it leads to sub-optimal value (or Q) functions and interferes with the contraction properties of the Bellman operator. Surprisingly, despite these concerns, and independent of its effect on exploration, the softmax Bellman operator when combined with Deep Q-learning, leads to Q-functions with superior policies in practice, even outperforming its double Q-learning counterpart. To better understand how and why this occurs, we revisit theoretical properties of the softmax Bellman operator, and prove that (i) it converges to the standard Bellman operator exponentially fast in the inverse temperature parameter, and (ii) the distance of its Q function from the [...] Introduction (excerpt): [...]tivates the use of exploratory and potentially sub-optimal actions during learning, and one commonly-used strategy is to add randomness by replacing the max function with the softmax function, as in Boltzmann exploration (Sutton & Barto, 1998). Furthermore, the softmax function is a differentiable approximation to the max function, and hence can facilitate analysis (Reverdy & Leonard, 2016). The beneficial properties of the softmax Bellman operator are in contrast to its potentially negative effect on the accuracy of the resulting value or Q-functions. For example, it has been demonstrated that the softmax Bellman operator is not a contraction, for certain temperature parameters (Littman, 1996, Page 205). Given this, one might expect that the convenient properties of the softmax Bellman operator would come at the expense of the accuracy of the resulting value or Q-functions, or the quality of the resulting policies.
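To make the "differentiable approximation to the max" remark concrete, here is a small sketch that compares a max backup with a softmax-weighted backup over the next-state action values. It assumes the Boltzmann form, in which each value is weighted by `exp(tau * q)` normalised over actions, with `tau` as the inverse temperature; the excerpt does not spell out the operator, so the function names and this exact form are assumptions of the sketch rather than the paper's definition.

```python
import math

def softmax_weights(q_values, tau):
    # Boltzmann weights with inverse temperature tau (assumed form).
    # Shifting by the max avoids overflow without changing the result.
    m = max(q_values)
    exps = [math.exp(tau * (q - m)) for q in q_values]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_backup(q_values, tau):
    # Softmax-weighted average of the action values, used in place of max.
    weights = softmax_weights(q_values, tau)
    return sum(w * q for w, q in zip(weights, q_values))

# Hypothetical next-state action values.
q_next = [1.0, 2.0, 3.0]

# Gap between the max backup and the softmax backup for growing tau.
gaps = [max(q_next) - softmax_backup(q_next, tau) for tau in (0.0, 1.0, 5.0, 10.0)]
```

At `tau = 0` the weights are uniform, so the backup is the plain average; as `tau` grows the weight concentrates on the largest value and the gap to the max shrinks quickly, which is the behaviour the abstract's convergence claim refers to.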

Performance Comparison of Support Vector Machine, Random Forest, and Extreme Learning Machine for Intrusion Detection

Technological University Dublin, ARROW@TU Dublin. Articles, School of Science and Computing, 2018-7. Performance Comparison of Support Vector Machine, Random Forest, and Extreme Learning Machine for Intrusion Detection. Iftikhar Ahmad, King Abdulaziz University, Saudi Arabia; MUHAMMAD JAVED IQBAL, UET Taxila; MOHAMMAD BASHERI, King Abdulaziz University, Saudi Arabia. See next page for additional authors. Follow this and additional works at: https://arrow.tudublin.ie/ittsciart Part of the Computer Sciences Commons. Recommended Citation: Ahmad, I. et al. (2018) Performance Comparison of Support Vector Machine, Random Forest, and Extreme Learning Machine for Intrusion Detection, IEEE Access, vol. 6, pp. 33789-33795, 2018. DOI: 10.1109/ACCESS.2018.2841987. This Article is brought to you for free and open access by the School of Science and Computing at ARROW@TU Dublin. It has been accepted for inclusion in Articles by an authorized administrator of ARROW@TU Dublin. This work is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 4.0 License. Authors: Iftikhar Ahmad, MUHAMMAD JAVED IQBAL, MOHAMMAD BASHERI, and Aneel Rahim. This article is available at ARROW@TU Dublin: https://arrow.tudublin.ie/ittsciart/44 SPECIAL SECTION ON SURVIVABILITY STRATEGIES FOR EMERGING WIRELESS NETWORKS. Received April 15, 2018, accepted May 18, 2018, date of publication May 30, 2018, date of current version July 6, 2018.

Machine Learning Methods for Classification of the Green

International Journal of Geo-Information. Article. Machine Learning Methods for Classification of the Green Infrastructure in City Areas. Nikola Kranjčić 1,*, Damir Medak 2, Robert Župan 2 and Milan Rezo 1. 1 Faculty of Geotechnical Engineering, University of Zagreb, Hallerova aleja 7, 42000 Varaždin, Croatia. 2 Faculty of Geodesy, University of Zagreb, Kačićeva 26, 10000 Zagreb, Croatia. * Correspondence: Tel.: +385-95-505-8336. Received: 23 August 2019; Accepted: 21 October 2019; Published: 22 October 2019. Abstract: Rapid urbanization in cities can result in a decrease in green urban areas. Reductions in green urban infrastructure pose a threat to the sustainability of cities. Up-to-date maps are important for the effective planning of urban development and the maintenance of green urban infrastructure. There are many possible ways to map vegetation; however, the most effective way is to apply machine learning methods to satellite imagery. In this study, we analyze four machine learning methods (support vector machine, random forest, artificial neural network, and the naïve Bayes classifier) for mapping green urban areas using satellite imagery from the Sentinel-2 multispectral instrument. The methods are tested on two cities in Croatia (Varaždin and Osijek). Support vector machines outperform random forest, artificial neural networks, and the naïve Bayes classifier in terms of classification accuracy (a Kappa value of 0.87 for Varaždin and 0.89 for Osijek) and performance time. Keywords: green urban infrastructure; support vector machines; artificial neural networks; naïve Bayes classifier; random forest; Sentinel 2-MSI.

Random Forest Regression of Markov Chains for Accessible Music Generation

Random Forest Regression of Markov Chains for Accessible Music Generation. Vivian Chen, Jackson DeVico, Arianna Reischer, Leo Stepanewk, Ananya Vasireddy, Nicholas Zhang, Sabar Dasgupta* (*Corresponding Author). New Jersey's Governor's School of Engineering and Technology, July 24, 2020. Abstract: With the advent of machine learning, new generative algorithms have expanded the ability of computers to compose creative and meaningful music. These advances allow for a greater balance between human input and autonomy when creating original compositions. This project proposes a method of melody generation using random forest regression, which increases the accessibility of generative music models by addressing the downsides of previous approaches. The solution generalizes the concept of Markov chains while avoiding the excessive computational costs and dataset requirements associated with past models. To improve the musical quality of the outputs, the model utilizes post-processing based on various scoring metrics. A user interface combines these modules into an application that achieves the ultimate goal of creating an accessible generative music model. Fig. 1. A screenshot of the user interface developed for this project. I. INTRODUCTION: One of the greatest challenges in making generative music is emulating human artistic expression. DeepMind's generative audio model, WaveNet, attempts this challenge, but requires large datasets and extensive training time to produce quality musical outputs [1]. Similarly, other music generation algorithms such as MelodyRNN, while effective, are also resource intensive and time-consuming. II. BACKGROUND, A. History of Generative Music: The term "generative music," first popularized by English musician Brian Eno in the late 20th century, describes the [...]

Evaluating the Combination of Word Embeddings with Mixture of Experts and Cascading Gcforest in Identifying Sentiment Polarity

Evaluating the Combination of Word Embeddings with Mixture of Experts and Cascading gcForest In Identifying Sentiment Polarity, by Mounika Marreddy, Subba Reddy Oota, Radha Agarwal, Radhika Mamidi, in 25th ACM SIGKDD Conference on Knowledge Discovery and Data Mining (SIGKDD-2019), Anchorage, Alaska, USA. Report No: IIIT/TR/2019/-1. Centre for Language Technologies Research Centre, International Institute of Information Technology, Hyderabad - 500 032, India. August 2019. All four authors: IIIT-Hyderabad, Hyderabad, India. ABSTRACT: Neural word embeddings have been able to deliver impressive results in many Natural Language Processing tasks. The quality of the word embedding determines the performance of a supervised model. However, choosing the right set of word embeddings for a given dataset is a major challenging task for enhancing the results. In this paper, we have evaluated neural word embeddings with (i) a mixture of classification experts (MoCE) model for sentiment classification task, (ii) to compare and improve the classification [...] Introduction (excerpt): [...] an effective neural networks to generate low dimensional contextual representations and yields promising results on the sentiment analysis [7, 14, 21]. Since the work of [2], NLP community is focusing on improving the feature representation of sentence/document with continuous development in neural word embedding. Word2Vec embedding was the first powerful technique to achieve semantic similarity between words but fail to capture the meaning of a word based on context [17].

On the Learning Property of Logistic and Softmax Losses for Deep Neural Networks

The Thirty-Fourth AAAI Conference on Artificial Intelligence (AAAI-20). On the Learning Property of Logistic and Softmax Losses for Deep Neural Networks. Xiangrui Li, Xin Li, Deng Pan, Dongxiao Zhu∗. Department of Computer Science, Wayne State University. {xiangruili, xinlee, pan.deng, dzhu}@wayne.edu. Abstract: Deep convolutional neural networks (CNNs) trained with logistic and softmax losses have made significant advancement in visual recognition tasks in computer vision. When training data exhibit class imbalances, the class-wise reweighted version of logistic and softmax losses are often used to boost performance of the unweighted version. In this paper, motivated to explain the reweighting mechanism, we explicate the learning property of those two loss functions by analyzing the necessary condition (e.g., gradient equals to zero) after training CNNs to converge to a local minimum. The analysis immediately provides us explanations for understanding (1) quantitative effects of the class-wise reweighting mechanism: deterministic effectiveness for binary classification using logis[...] Introduction (excerpt): [...] (unweighted) loss, resulting in performance degradation for minority classes. To remedy this issue, the class-wise reweighted loss is often used to emphasize the minority classes that can boost the predictive performance without introducing much additional difficulty in model training (Cui et al. 2019; Huang et al. 2016; Mahajan et al. 2018; Wang, Ramanan, and Hebert 2017). A typical choice of weights for each class is the inverse-class frequency. A natural question then to ask is what roles are those class-wise weights playing in CNN training using LGL or SML that lead to performance gain? Intuitively, those weights make tradeoffs on the predictive performance among different classes.

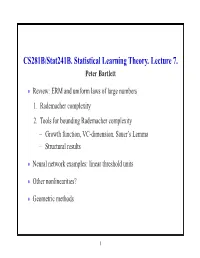

CS281B/Stat241b. Statistical Learning Theory. Lecture 7. Peter Bartlett

CS281B/Stat241B. Statistical Learning Theory. Lecture 7. Peter Bartlett. Outline: Review: ERM and uniform laws of large numbers. 1. Rademacher complexity. 2. Tools for bounding Rademacher complexity: growth function, VC-dimension, Sauer's Lemma; structural results; neural network examples: linear threshold units; other nonlinearities?; geometric methods. ERM and uniform laws of large numbers. Empirical risk minimization: choose $f_n \in F$ to minimize $\hat{R}(f)$. How does $R(f_n)$ behave? For $f^* = \arg\min_{f \in F} R(f)$, $R(f_n) - R(f^*) = \underbrace{R(f_n) - \hat{R}(f_n)}_{\text{ULLN for } F} + \underbrace{\hat{R}(f_n) - \hat{R}(f^*)}_{\le 0 \text{ for ERM}} + \underbrace{\hat{R}(f^*) - R(f^*)}_{\text{LLN for } f^*} \le \sup_{f \in F} |R(f) - \hat{R}(f)| + O(1/\sqrt{n})$. Uniform laws and Rademacher complexity. Definition: The Rademacher complexity of $F$ is $\mathbb{E}\|R_n\|_F$, where the empirical process $R_n$ is defined as $R_n(f) = \frac{1}{n} \sum_{i=1}^{n} \epsilon_i f(X_i)$, and the $\epsilon_1, \ldots, \epsilon_n$ are Rademacher random variables: i.i.d. uniform on $\{\pm 1\}$. Theorem: For any $F \subset [0, 1]^{\mathcal{X}}$, $\frac{1}{2} \mathbb{E}\|R_n\|_F - O(\sqrt{1/n}) \le \mathbb{E}\|P - P_n\|_F \le 2\, \mathbb{E}\|R_n\|_F$, and, with probability at least $1 - 2\exp(-2\epsilon^2 n)$, $\mathbb{E}\|P - P_n\|_F - \epsilon \le \|P - P_n\|_F \le \mathbb{E}\|P - P_n\|_F + \epsilon$. Thus, $\|P - P_n\|_F \approx \mathbb{E}\|R_n\|_F$, and $R(f_n) - \inf_{f \in F} R(f) = O(\mathbb{E}\|R_n\|_F)$. Tools for controlling Rademacher complexity: [...]
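The definition of the empirical process $R_n$ lends itself to a small Monte Carlo illustration. The sketch below is an assumption-laden example rather than anything from the lecture: it fixes one sample, takes a finite function class represented by its values on that sample, and averages $\sup_f |R_n(f)|$ over random sign draws. That gives an estimate conditional on the sample, not the full expectation over the data as well; the function names, the threshold class and the sample points are all hypothetical.

```python
import random

def rademacher_process(f_values, signs):
    # R_n(f) = (1/n) * sum_i eps_i * f(X_i), for one function on one sample.
    n = len(f_values)
    return sum(s * v for s, v in zip(signs, f_values)) / n

def estimate_rademacher_complexity(function_values, num_draws=2000, seed=0):
    # function_values[j][i] holds f_j(X_i) for a finite class {f_j}.
    # Averages sup_f |R_n(f)| over random Rademacher sign vectors.
    rng = random.Random(seed)
    n = len(function_values[0])
    total = 0.0
    for _ in range(num_draws):
        signs = [rng.choice((-1, 1)) for _ in range(n)]
        total += max(abs(rademacher_process(fv, signs)) for fv in function_values)
    return total / num_draws

# Hypothetical sample and a small class of threshold functions f_t(x) = 1[x >= t].
xs = [0.1, 0.4, 0.5, 0.9]
thresholds = (0.0, 0.3, 0.6, 1.0)
values = [[1.0 if x >= t else 0.0 for x in xs] for t in thresholds]
estimate = estimate_rademacher_complexity(values)
```

Because the functions take values in $[0, 1]$, each $|R_n(f)|$ is at most 1, so the estimate always lies in $[0, 1]$; richer classes push it up and larger samples pull it down.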

Loss Function Search for Face Recognition

Loss Function Search for Face Recognition. Xiaobo Wang * 1, Shuo Wang * 1, Cheng Chi 2, Shifeng Zhang 2, Tao Mei 1. Abstract: In face recognition, designing margin-based (e.g., angular, additive, additive angular margins) softmax loss functions plays an important role in learning discriminative features. However, these hand-crafted heuristic methods are sub-optimal because they require much effort to explore the large design space. Recently, an AutoML for loss function search method AM-LFS has been derived, which leverages reinforcement learning to search loss functions during the training process. But its search space is complex and unstable that hindering its superiority. In this paper, we first analyze that the key to enhance the feature discrimination is actually how to reduce the softmax probability. Introduction (excerpt): Generally, the CNNs are equipped with classification loss functions (Liu et al., 2017; Wang et al., 2018f;e; 2019a; Yao et al., 2018; 2017; Guo et al., 2020), metric learning loss functions (Sun et al., 2014; Schroff et al., 2015) or both (Sun et al., 2015; Wen et al., 2016; Zheng et al., 2018b). Metric learning loss functions such as contrastive loss (Sun et al., 2014) or triplet loss (Schroff et al., 2015) usually suffer from high computational cost. To avoid this problem, they require well-designed sample mining strategies. So the performance is very sensitive to these strategies. Increasingly more researchers shift their attention to construct deep face recognition models by re-designing the classical classification loss functions. Intuitively, face features are discriminative if their intra-class compactness and inter-class separability are well max[...]

Deep Neural Networks for Choice Analysis: Architecture Design with Alternative-Specific Utility Functions Shenhao Wang Baichuan

Deep Neural Networks for Choice Analysis: Architecture Design with Alternative-Specific Utility Functions. Shenhao Wang, Baichuan Mo, Jinhua Zhao. Massachusetts Institute of Technology. Abstract: Whereas deep neural network (DNN) is increasingly applied to choice analysis, it is challenging to reconcile domain-specific behavioral knowledge with generic-purpose DNN, to improve DNN's interpretability and predictive power, and to identify effective regularization methods for specific tasks. To address these challenges, this study demonstrates the use of behavioral knowledge for designing a particular DNN architecture with alternative-specific utility functions (ASU-DNN) and thereby improving both the predictive power and interpretability. Unlike a fully connected DNN (F-DNN), which computes the utility value of an alternative k by using the attributes of all the alternatives, ASU-DNN computes it by using only k's own attributes. Theoretically, ASU-DNN can substantially reduce the estimation error of F-DNN because of its lighter architecture and sparser connectivity, although the constraint of alternative-specific utility can cause ASU-DNN to exhibit a larger approximation error. Empirically, ASU-DNN has 2-3% higher prediction accuracy than F-DNN over the whole hyperparameter space in a private dataset collected in Singapore and a public dataset available in the R mlogit package. The alternative-specific connectivity is associated with the independence of irrelevant alternative (IIA) constraint, which as a domain-knowledge-based regularization method is more effective than the most popular generic-purpose explicit and implicit regularization methods and architectural hyperparameters. ASU-DNN provides a more regular substitution pattern of travel mode choices than F-DNN does, rendering ASU-DNN more interpretable.