Three Dimensional Sensing by Digital Video Fringe Projection

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Matrox Parhelia, Matrox Millennium P750, Matrox Millennium P650

ENGLISH Matrox Parhelia, Matrox Millennium P750, Matrox Millennium P650 User Guide 10818-301-0210 2005.02.28 Hardware installation: This section describes how to install your Matrox card. If your Matrox graphics card is already installed in your computer, skip to “Standard (ATX) connection setup”, page 6 or “Low-profile connection setup”, page 10. For information specific to your computer, like how to remove its cover, see your system manual. WARNING: To avoid personal injury, turn off your computer, unplug it, and then wait for it to cool before you touch any of its internal parts. Also, static electricity can severely damage electronic parts. Before touching any electronic parts, drain static electricity from your body (for example, by touching the metal frame of your computer). When handling a card, carefully hold it by its edges and avoid touching its circuitry. Note: If your Matrox product supports stereo output and you want to use a stereo-output bracket (provided with some Matrox products), you need to connect your stereo-output bracket to your graphics card. For more information, see “Stereo output”, page 21. Note: Matrox low-profile graphics cards ship with standard (ATX) brackets compatible with most systems. If you have a low-profile system, you may need to change the standard bracket on your graphics card to a low-profile bracket. For more information, see “Replacing brackets on a low-profile graphics card”, page 5. 1 Open your computer and remove your existing graphics card. If a graphics card isn’t already installed in your computer, skip to step 2.

John Carmack Archive - .Plan (2002)

John Carmack Archive - .plan (2002) http://www.team5150.com/~andrew/carmack March 18, 2007 Contents: 1 February; 1.1 Last month I wrote the Radeon 8500 support for Doom. (Feb 11, 2002); 2 March; 2.1 Mar 15, 2002; 3 June; 3.1 The Matrox Parhelia Report (Jun 25, 2002); 3.2 More graphics card notes (Jun 27, 2002). Chapter 1 February. 1.1 Last month I wrote the Radeon 8500 support for Doom. (Feb 11, 2002) The bottom line is that it will be a fine card for the game, but the details are sort of interesting. I had a pre-production board before Siggraph last year, and we were discussing the possibility of letting ATI show a Doom demo behind closed doors on it. We were all very busy at the time, but I took a shot at bringing up support over a weekend. I hadn’t coded any of the support for the custom ATI extensions yet, but I ran the game using only standard OpenGL calls (this is not a supported path, because without bump mapping everything looks horrible) to see how it would do. It didn’t even draw the console correctly, because they had driver bugs with texGen. I thought the odds were very long against having all the new, untested extensions working properly, so I pushed off working on it until they had revved the drivers a few more times. My judgment was colored by the experience of bringing up Doom on the original Radeon card a year earlier, which involved chasing a lot of driver bugs.

Multispectral Imaging for Medical and Industrial Machine Vision Systems

Tech Guide: Multispectral imaging for medical and industrial machine vision systems. Table of contents: Introduction; Chapter 1: What is multispectral imaging?; Chapter 2: Multispectral imaging applications; Chapter 3: Multispectral camera technologies; Chapter 4: Key considerations when selecting camera technology for multispectral imaging; Chapter 5: Hyperspectral and the future of multispectral imaging. Introduction: Just as machine vision systems have evolved from traditional monochrome cameras to many systems that now utilize full color imaging information, there has also been an evolution from systems that only captured broadband images in the visible spectrum, to those that can utilize targeted spectral bands in both visible and non-visible spectral regions to perform more sophisticated inspection and analysis. The color output of the cameras used in the machine vision industry today is largely based on Bayer-pattern or trilinear sensor technology. But imaging is moving well beyond conventional color where standard RGB is not enough to carry out inspection tasks. Some applications demand unconventional RGB wavelength bands while others demand a combination of visible and non-visible wavelengths. Others require exclusively non-visible wavelengths such as UV, NIR or SWIR, with no wavebands in the visible spectrum. Complex metrology and imaging applications are beginning to demand higher numbers of spectral channels or possibilities to select application-specific spectral filtering at high inspection throughputs. With the traditional machine vision industry merging with intricate measurement technologies, consistent, reliable, high-fidelity color and multispectral imaging are playing key roles in industrial quality control.

United States Patent (19) 11 Patent Number: 6,072,635 Hashizume Et Al

US006072635A United States Patent (19) Hashizume et al. (11) Patent Number: 6,072,635 (45) Date of Patent: Jun. 6, 2000 (54) DICHROIC PRISM AND PROJECTION DISPLAY APPARATUS (75) Inventors: Toshiaki Hashizume, Okaya; Akitaka Yajima, Tatsuno-machi, both of Japan (73) Assignee: Seiko Epson Corporation, Tokyo, Japan (21) Appl. No.: 09/112,132 (22) Filed: Jul. 9, 1998 (30) Foreign Application Priority Data: Jul. 15, 1997 (JP) Japan 9-1900OS (51) Int. Cl.: G02B 27/12; G02B 27/14 (52) U.S. Cl.: 359/640; 359/634 (58) Field of Search: 359/634, 637, 640 (56) References Cited: U.S. FOREIGN PATENT DOCUMENTS: 7-294845 11/1995 Japan. Primary Examiner: Ricky Mack. Attorney, Agent, or Firm: Oliff & Berridge, PLC (57) ABSTRACT: The invention provides a dichroic prism capable of reducing displacements of projection pixels of colors caused by chromatic aberration of magnification. A dichroic prism is formed in the shape of a quadrangular prism as a whole by joining four rectangular prisms together. A red reflecting dichroic plane and a blue reflecting dichroic plane intersect to form substantially an X shape along junction surfaces of the prisms. The red reflecting dichroic plane is convex shaped by partly changing the thickness of an adhesive layer for connecting the rectangular prisms together. Accordingly, since a red beam can be guided to a projection optical system while being enlarged, it is possible to reduce a projection image of the red beam to be projected onto a projection plane via the projection optical system. This makes it possible to reduce relative displacements of projection pix

Characterization of Color Cross-Talk of CCD Detectors and Its Influence in Multispectral Quantitative Phase Imaging

Characterization of color cross-talk of CCD detectors and its influence in multispectral quantitative phase imaging. AZEEM AHMAD (1,2,3), ANAND KUMAR (1), VISHESH DUBEY (1,2), ANKIT BUTOLA (1), BALPREET SINGH AHLUWALIA (2), DALIP SINGH MEHTA (1,4). (1) Department of Physics, Indian Institute of Technology Delhi, Hauz Khas, New Delhi 110016, India. (2) Department of Physics and Technology, UiT The Arctic University of Norway, Tromsø 9037, Norway. Abstract: Multi-spectral quantitative phase imaging (QPI) is an emerging imaging modality for wavelength-dependent studies of several biological and industrial specimens. Simultaneous multi-spectral QPI is generally performed with color CCD cameras. However, color CCD cameras suffer from color crosstalk, an issue that needs to be explored. Here, we present a new approach for accurately measuring the color crosstalk of 2D area detectors, without needing prior information about camera specifications. Color crosstalk of two different cameras commonly used in QPI, single chip CCD (1-CCD) and three chip CCD (3-CCD), is systematically studied and compared using compact interference microscopy. The influence of color crosstalk on the fringe width and the visibility of the monochromatic constituents corresponding to the three color channels of a white light interferogram is studied both through simulations and experiments. It is observed that the presence of color crosstalk changes the fringe width and visibility over the imaging field of view. This leads to an unwanted non-uniform background error in the multi-spectral phase imaging of the specimens. It is demonstrated that the color crosstalk of the detector is the key limiting factor for phase measurement accuracy of simultaneous multi-spectral QPI systems.

Comparative Analysis of Color Architectures for Image Sensors

Comparative analysis of color architectures for image sensors. Peter B. Catrysse* (a), Brian A. Wandell (b), Abbas El Gamal (a). (a) Dept of Electrical Engineering, Stanford University, CA 94305, USA. (b) Dept of Psychology, Stanford University, CA 94305, USA. ABSTRACT: We have developed a software simulator to create physical models of a scene, compute camera responses, render the camera images and to measure the perceptual color errors (CIELAB) between the scene and rendered images. The simulator can be used to measure color reproduction errors and analyze the contributions of different sources to the error. We compare three color architectures for digital cameras: (a) a sensor array containing three interleaved color mosaics, (b) an architecture using dichroic prisms to create three spatially separated copies of the image, (c) a single sensor array coupled with a time-varying color filter measuring three images sequentially in time. Here, we analyze the color accuracy of several exposure control methods applied to these architectures. The first exposure control algorithm (traditional) simply stops image acquisition when one channel reaches saturation. In a second scheme, we determine the optimal exposure time for each color channel separately, resulting in a longer total exposure time. In a third scheme we restrict the total exposure duration to that of the first scheme, but we preserve the optimum ratio between color channels. Simulator analyses measure the color reproduction quality of these different exposure control methods as a function of illumination taking into account photon and sensor noise, quantization and color conversion errors. Keywords: color, digital camera, image quality, CMOS image sensors. 1. INTRODUCTION: The use of CMOS sensors in digital cameras has created new opportunities for developing digital camera architectures.

Can I Bring Dreams to Life with Quality & Versatility?

Can I bring dreams to life with quality & versatility? They've been dreaming about the wedding day for months. Every detail has been planned. It goes perfectly. Bride and groom look radiant and the guests enjoy themselves. You can bring back those special moments in a top-quality video recording. Positioning the green CCD out of line (pixel shift) sharpens image reproduction even if your subject moves. A new Super Pixel Shift (horizontal + vertical) achieves wider dynamic range for movies, reducing vertical smear and gives 1.7 mega-pixel high resolution photos. PROFESSIONAL FLUORITE LENS: Canon has always led the way in optical development. The first to equip a camcorder with a fluorite lens, a lens that provides the quality of 35mm and broadcast TV. The XM2 has a Professional L-Series fluorite lens. Combined with 20x optical zoom and 100x digital zoom, plus an optical image stabiliser, you can be sure of superior images. SUPERB QUALITY: The Canon XM2 Digital Camcorder combines the best of all worlds. Its advanced technology delivers unrivalled image quality in its class, while it is still easy to operate. That makes it an ideal choice for both serious camcorder enthusiasts, professional videographers and filmmakers. Whether you're regularly providing broadcast ... INSPIRATIONAL VERSATILITY: No two video shoots are alike. What's happening, where it's happening, movement, colour: it all demands a certain amount of fine-tuning to capture the special moments you want. The XM2 features adjustments and enhancements for sound and picture for your personal creativity. Plus a LCD screen for easy viewing.

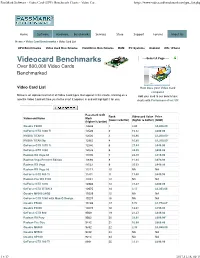

Passmark Software - Video Card (GPU) Benchmark Charts - Video Car

PassMark Software - Video Card (GPU) Benchmark Charts. https://www.videocardbenchmark.net/gpu_list.php Video Card List. Over 800,000 Video Cards Benchmarked. How does your Video Card compare? Below is an alphabetical list of all Video Card types that appear in the charts. Clicking on a specific Video Card will take you to the chart it appears in and will highlight it for you.

| Videocard Name | Passmark G3D Mark (higher is better) | Rank (lower is better) | Videocard Value (higher is better) | Price (USD) |
|---|---|---|---|---|
| Quadro P6000 | 13648 | 1 | 2.84 | $4,808.00 |
| GeForce GTX 1080 Ti | 13526 | 2 | 19.32 | $699.99 |
| NVIDIA TITAN X | 13026 | 3 | 10.86 | $1,200.00* |
| NVIDIA TITAN Xp | 12962 | 4 | 10.80 | $1,200.00* |
| GeForce GTX 1070 Ti | 12346 | 5 | 27.44 | $449.99 |
| GeForce GTX 1080 | 12038 | 6 | 24.08 | $499.99 |
| Radeon RX Vega 64 | 11805 | 7 | 22.70 | $519.99 |
| Radeon Vega Frontier Edition | 11698 | 8 | 11.94 | $979.99 |
| Radeon RX Vega | 11533 | 9 | 25.63 | $449.99 |
| Radeon RX Vega 56 | 11517 | 10 | NA | NA |
| GeForce GTX 980 Ti | 11311 | 11 | 17.40 | $649.99 |
| Radeon Pro WX 9100 | 11021 | 12 | NA | NA |
| GeForce GTX 1070 | 10986 | 13 | 27.47 | $399.99 |
| GeForce GTX TITAN X | 10675 | 14 | 3.17 | $3,363.06 |
| Quadro M6000 24GB | 10239 | 15 | NA | NA |
| GeForce GTX 1080 with Max-Q Design | 10207 | 16 | NA | NA |
| Quadro P5000 | 10188 | 17 | 5.72 | $1,779.67 |
| Quadro P4000 | 10078 | 18 | 12.61 | $799.00 |
| GeForce GTX 980 | 9569 | 19 | 21.27 | $449.86 |
| Radeon R9 Fury | 9562 | 20 | 23.91 | $399.99* |
| Radeon Pro Duo | 9472 | 21 | 10.89 | $869.99 |
| Quadro M6000 | 9392 | 22 | 2.35 | $3,999.00 |
| Quadro M5500 | 9322 | 23 | NA | NA |
| Quadro GP100 | 9214 | 24 | NA | NA |
| GeForce GTX 780 Ti | 8881 | 25 | 22.21 | $399.99 |

Release Letter

Security Systems From Product Manager Telephone Nuremberg STVC/PRM +49 911 93456 0 07.08.2008 Release Letter Product: MPEG-ActiveX Version: 3.03.0009 This letter contains latest information about the above mentioned software version. This version is a maintenance release to version 3.02 and thus provides the same feature set as well as the same performance values. 1. Changes • This MPEG-ActiveX allows showing video while both the VIDOS Lite Viewer and a Web browser displaying a FW 3.5x BVIP unit’s Web page are running on the same PC. BOSCH and the symbol are registered trademarks of Robert Bosch GmbH, Germany. 2. Restrictions; Known Issues • For 32-bit color mode the PC must support YUV overlay. • MPEG-ActiveX versions 2.7x and newer cannot display the live video of a VideoJet 400 in quad mode anymore when local recording for more than one camera is active. • For MPEG-2 rendering on Nvidia graphic cards, use driver version 71.84 or older for optimal performance. • In display mode “Dual view” on Nvidia FX1400/4400 and ATI GL V 3100 graphic cards, drag & drop from primary to secondary monitor and vice versa does not automatically refresh camera images. • Streaming works only if a RCP+ connection is established. If multicast streaming is enabled, a device can only connect to the stream if a RCP+ connection exists. Although the multicast address is enabled, the IP address must be set in addition. 3.

04-Prog-On-Gpu-Schaber.Pdf

Seminar: Parallel Computing on Graphics Cards. Seminar talk: Overview of Graphics Card Programming. Marcus Schaber, 05.05.2009. Supervisors: J. Kunkel, O. Mordvinova. Outline ● General programming: parallelism, restrictions, advantages ● Graphics pipeline: programmable units, hardware functionality ● Programming languages: overview and comparison, data structures, programming approaches ● Shaders: shader standards ● Examples: from the graphics domain. Programming, restrictions ● Adaptation to the hardware: kernels and streams must be created. Data are expressed as "vertices", and suitable fragments must be created. ● Host program: programs cannot be executed directly by the GPU. The stream and kernel are created by the CPU and transferred to the graphics card. Programming, parallelism ● "Sequential" languages: no special syntax for parallel programming. Individual shaders are sequential programs. ● Parallelism is achieved by executing one shader simultaneously on different data. ● Hardware support: thread management is supported by the hardware. Programming, typical tasks ● Well suited: data-parallel problems. The same or similar operations are performed on many data items, with little communication needed between elements. ● Arithmetic density: number of arithmetic operations / read and write accesses. ● Classic case: graphics. Many independent data items: vertices, fragments/pixels. Operations:

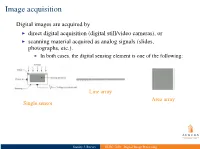

ELEC 7450 - Digital Image Processing Image Acquisition

Stanley J. Reeves, ELEC 7450 - Digital Image Processing. Image acquisition: Digital images are acquired by direct digital acquisition (digital still/video cameras), or by scanning material acquired as analog signals (slides, photographs, etc.). In both cases, the digital sensing element is one of the following: line array, area array, single sensor. Digital images are also acquired by indirect imaging techniques, e.g., MRI (Fourier), CT (backprojection): physical quantities other than intensities are measured, and computation leads to a 2-D map displayed as intensity. Slide titles: Single sensor acquisition; Linear array acquisition; Array sensor acquisition. Array sensor acquisition: Irradiance incident at each photo-site is integrated over time. The resulting array of intensities is moved out of the sensor array and into a buffer. Quantized intensities are stored as a grayscale image. Two types of quantization: spatial (limited number of pixels) and gray-level (limited number of bits to represent intensity at a pixel).

Matrox RT.X10 Xtra Installation and User Guide

Matrox RT.X10 Xtra Installation and User Guide, May 1, 2003, v 10805-201-0200. Trademarks • Marques déposées • Warenzeichen • Marchi registrati • Marcas registradas. Matrox Electronic Systems Ltd.: Matrox®, DigiSuite®, Flex 3D™, MediaExport™, MediaTools™, RT2500™, RT.X10 Xtra™, SinglePass™, TurboDV™, X.tools™; Matrox Graphics Inc: G550™, P650™, P750™, Millennium™, Parhelia™; Adobe Systems Inc: Adobe®, Acrobat®, Photoshop®, Premiere®; Advanced Micro Devices, Inc.: AMD Athlon™; Apple Computer, Inc: Apple®, FireWire®, QuickTime®; Canon Inc: Canon®; Creative Technology Ltd.: Audigy™, SoundBlaster™; Inscriber Technology Corporation: Inscriber®, TitleExpress™; Intel Corporation: Intel®, Pentium®; International Business Machines Corporation: IBM®, VGA®; JVC: D-9™, Digital-S™; Ligos Incorporated: Ligos®, GoMotion®; Microsoft Corporation: Microsoft®, ActiveMovie®, DirectShow®, DirectSound®, DirectX®, Internet