Fair and Diverse Data Representation in Machine Learning



Recommended publications


A Learning Theoretic Framework for Clustering with Similarity Functions

A Learning Theoretic Framework for Clustering with Similarity Functions. Maria-Florina Balcan, Avrim Blum, Santosh Vempala. November 23, 2007. Abstract: Problems of clustering data from pairwise similarity information are ubiquitous in Computer Science. Theoretical treatments typically view the similarity information as ground truth and then design algorithms to (approximately) optimize various graph-based objective functions. However, in most applications, this similarity information is merely based on some heuristic; the ground truth is really the (unknown) correct clustering of the data points and the real goal is to achieve low error on the data. In this work, we develop a theoretical approach to clustering from this perspective. In particular, motivated by recent work in learning theory that asks “what natural properties of a similarity (or kernel) function are sufficient to be able to learn well?” we ask “what natural properties of a similarity function are sufficient to be able to cluster well?” To study this question we develop a theoretical framework that can be viewed as an analog of the PAC learning model for clustering, where the object of study, rather than being a concept class, is a class of (concept, similarity function) pairs, or equivalently, a property the similarity function should satisfy with respect to the ground truth clustering. We then analyze both algorithmic and information theoretic issues in our model. While quite strong properties are needed if the goal is to produce a single approximately-correct clustering, we find that a number of reasonable properties are sufficient under two natural relaxations: (a) list clustering: analogous to the notion of list-decoding, the algorithm can produce a small list of clusterings (which a user can select from) and (b) hierarchical clustering: the algorithm’s goal is to produce a hierarchy such that the desired clustering is some pruning of this tree (which a user could navigate).

IARCS, the Indian Association for Research in Computing Science

IARCS, the Indian Association for Research in Computing Science, announces the 38th Foundations of Software Technology and Theoretical Computer Science conference at Ahmedabad University, Ahmedabad, Gujarat. The FSTTCS conference is a forum for presenting original results in foundational aspects of Computer Science and Software Technology. Representative areas include, but are not limited to, the following.
● Algorithmic Algebra
● Algorithms and Data Structures
● Algorithmic Graph Theory and Combinatorics
● Approximation Algorithms
● Automata, Games and Formal Languages
● Combinatorial Optimization
● Communication Complexity
● Computational Biology
● Computational Complexity
● Computational Geometry
● Computational Learning Theory
● Cryptography and Security
● Data Streaming and Sublinear algorithm
● Game Theory and Mechanism Design
● Logic in Computer Science
● Machine Learning
● Model Theory, Modal and Temporal Logics
● Models of Concurrent and Distributed Systems
● Models of Timed, Reactive, Hybrid and Stochastic Systems
● Parallel, Distributed and Online Algorithms
● Parameterized Complexity
● Principles and Semantics of Programming Languages
● Program Analysis and Transformation
● Proof Complexity
● Quantum Computing
● Randomness in Computing
● Specification, Verification, and Synthesis
● Theorem Proving, Decision Procedures, Model Checking and Reactive Synthesis
● Theoretical Aspects of Mobile and High-Performance Computing

Curriculum Vitae

Curriculum Vitae David P. Woodruff Biographical Computer Science Department Gates-Hillman Complex Carnegie Mellon University 5000 Forbes Avenue Pittsburgh, PA 15213 Citizenship: United States Email: [email protected] Home Page: http://www.cs.cmu.edu/~dwoodruf/ Research Interests Compressed Sensing, Data Stream Algorithms and Lower Bounds, Dimensionality Reduction, Distributed Computation, Machine Learning, Numerical Linear Algebra, Optimization Education All degrees received from Massachusetts Institute of Technology, Cambridge, MA Ph.D. in Computer Science. September 2007 Research Advisor: Piotr Indyk Thesis Title: Efficient and Private Distance Approximation in the Communication and Streaming Models. Master of Engineering in Computer Science and Electrical Engineering, May 2002 Research Advisor: Ron Rivest Thesis Title: Cryptography in an Unbounded Computational Model Bachelor of Science in Computer Science and Electrical Engineering May 2002 Bachelor of Pure Mathematics May 2002 Professional Experience August 2018-present, Carnegie Mellon University Computer Science Department Associate Professor (with tenure) August 2017 - present, Carnegie Mellon University Computer Science Department Associate Professor June 2018 – December 2018, Google, Mountain View Research Division Visiting Faculty Program August 2007 - August 2017, IBM Almaden Research Center Principles and Methodologies Group Research Scientist Aug 2005 - Aug 2006, Tsinghua University Institute for Theoretical Computer Science Visiting Scholar. Host: Andrew Yao Jun - Jul -

Visions in Theoretical Computer Science

VISIONS IN THEORETICAL COMPUTER SCIENCE: A Report on the TCS Visioning Workshop 2020. The material is based upon work supported by the National Science Foundation under Grants No. 1136993 and No. 1734706. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation. Edited by: Shuchi Chawla (University of Wisconsin-Madison), Jelani Nelson (University of California, Berkeley), Chris Umans (California Institute of Technology), David Woodruff (Carnegie Mellon University). Table of Contents: Foreword; How this Document came About; Models of Computation; Computational Complexity; Sublinear Algorithms

Random Projection in the Brain and Computation with Assemblies Of

Random Projection in the Brain and Computation with Assemblies of Neurons. Christos H. Papadimitriou, Columbia University, USA, [email protected]. Santosh S. Vempala, Georgia Tech, USA, [email protected]. Abstract: It has been recently shown via simulations [7] that random projection followed by a cap operation (setting to one the k largest elements of a vector and everything else to zero), a map believed to be an important part of the insect olfactory system, has strong locality sensitivity properties. We calculate the asymptotic law whereby the overlap in the input vectors is conserved, verifying mathematically this empirical finding. We then focus on the far more complex homologous operation in the mammalian brain, the creation through successive projections and caps of an assembly (roughly, a set of excitatory neurons representing a memory or concept) in the presence of recurrent synapses and plasticity. After providing a careful definition of assemblies, we prove that the operation of assembly projection converges with high probability, over the randomness of synaptic connectivity, even if plasticity is relatively small (previous proofs relied on high plasticity). We also show that assembly projection has itself some locality preservation properties. Finally, we propose a large repertoire of assembly operations, including associate, merge, reciprocal project, and append, each of them both biologically plausible and consistent with what we know from experiments, and show that this computational system is capable of simulating, again with high probability, arbitrary computation in a quite natural way.
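The cap operation described in this abstract (set the k largest entries of a vector to one and everything else to zero) can be illustrated with a short sketch. This is only an illustration under stated assumptions, not the authors' model: the function names `cap` and `project_and_cap`, the inclusion probability `p`, and the choice of a random 0/1 projection are all hypothetical.

```python
import random


def cap(v, k):
    """k-cap: set the k largest entries of v to 1 and all others to 0."""
    # Indices of the k largest values; ties are broken by position.
    top = sorted(range(len(v)), key=lambda i: v[i], reverse=True)[:k]
    out = [0] * len(v)
    for i in top:
        out[i] = 1
    return out


def project_and_cap(x, n_out, k, p=0.5, seed=0):
    """Random projection of x to n_out coordinates, followed by a k-cap."""
    rng = random.Random(seed)
    # Hypothetical choice: each output coordinate sums a random subset of
    # the inputs, every input being included independently with probability p.
    projected = [
        sum(xi for xi in x if rng.random() < p)
        for _ in range(n_out)
    ]
    return cap(projected, k)
```

For example, `cap([0.2, 0.9, 0.1, 0.5], 2)` returns `[0, 1, 0, 1]`, and the output of `project_and_cap` always contains exactly k ones whenever k does not exceed `n_out`.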

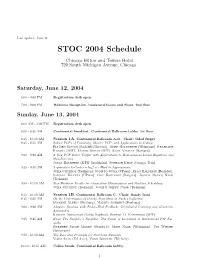

Conference Program

Last update: June 11
STOC 2004 Schedule
Chicago Hilton and Towers Hotel, 720 South Michigan Avenue, Chicago
Saturday, June 12, 2004
5:00 - 9:00 PM Registration desk open
7:00 - 9:00 PM Welcome Reception. Boulevard Room and Foyer, 2nd floor
Sunday, June 13, 2004
8:00 AM - 5:00 PM Registration desk open
8:00 - 8:35 AM Continental breakfast. Continental Ballroom Lobby, 1st floor
8:35 - 10:10 AM Session 1A. Continental Ballroom A-B. Chair: Oded Regev
8:35 - 8:55 AM Robust PCPs of Proximity, Shorter PCPs and Applications to Coding. Eli Ben-Sasson (Radcliffe/Harvard), Oded Goldreich (Weizmann), Prahladh Harsha (MIT), Madhu Sudan (MIT), Salil Vadhan (Harvard)
9:00 - 9:20 AM A New PCP Outer Verifier with Applications to Homogeneous Linear Equations and Max-Bisection. Jonas Holmerin (KTH, Stockholm), Subhash Khot (Georgia Tech)
9:25 - 9:45 AM Asymmetric k-Center is log∗ n-Hard to Approximate. Julia Chuzhoy (Technion), Sudipto Guha (UPenn), Eran Halperin (Berkeley), Sanjeev Khanna (UPenn), Guy Kortsarz (Rutgers), Joseph (Seffi) Naor (Technion)
9:50 - 10:10 AM New Hardness Results for Congestion Minimization and Machine Scheduling. Julia Chuzhoy (Technion), Joseph (Seffi) Naor (Technion)
8:35 - 10:10 AM Session 1B. Continental Ballroom C. Chair: Sandy Irani
8:35 - 8:55 AM On the Performance of Greedy Algorithms in Packet Buffering. Susanne Albers (Freiburg), Markus Schmidt (Freiburg)
9:00 - 9:20 AM Adaptive Routing with End-to-End Feedback: Distributed Learning and Geometric Approaches. Baruch Awerbuch (Johns Hopkins), Robert D. Kleinberg (MIT)
9:25 - 9:45 AM Know Thy Neighbor’s Neighbor: The Power of Lookahead in Randomized P2P Networks. Gurmeet Singh Manku (Stanford), Moni Naor (Weizmann), Udi Wieder (Weizmann)
9:50 - 10:10 AM The Zero-One Principle for Switching Networks. Yossi Azar (Tel Aviv), Yossi Richter (Tel Aviv)
10:10 - 10:35 AM Coffee break.

Lipics-ITCS-2018-0.Pdf (0.4

9th Innovations in Theoretical Computer Science ITCS 2018, January 11–14, 2018, Cambridge, MA, USA Edited by Anna R. Karlin LIPIcs – Vol. 94 – ITCS2018 www.dagstuhl.de/lipics Editor Anna R. Karlin Allen School of Computer Science and Engineering University of Washington [email protected] ACM Classification 1998 F. Theory of Computation, G. Mathematics of Computing ISBN 978-3-95977-060-6 Published online and open access by Schloss Dagstuhl – Leibniz-Zentrum für Informatik GmbH, Dagstuhl Publishing, Saarbrücken/Wadern, Germany. Online available at http://www.dagstuhl.de/dagpub/978-3-95977-060-6. Publication date January, 2018 Bibliographic information published by the Deutsche Nationalbibliothek The Deutsche Nationalbibliothek lists this publication in the Deutsche Nationalbibliografie; detailed bibliographic data are available in the Internet at http://dnb.d-nb.de. License This work is licensed under a Creative Commons Attribution 3.0 Unported license (CC-BY 3.0): http://creativecommons.org/licenses/by/3.0/legalcode. In brief, this license authorizes each and everybody to share (to copy, distribute and transmit) the work under the following conditions, without impairing or restricting the authors’ moral rights: Attribution: The work must be attributed to its authors. The copyright is retained by the corresponding authors. Digital Object Identifier: 10.4230/LIPIcs.ITCS.2018.0 ISBN 978-3-95977-060-6 ISSN 1868-8969 http://www.dagstuhl.de/lipics 0:iii LIPIcs – Leibniz International Proceedings in Informatics LIPIcs is a series of high-quality conference proceedings across all fields in informatics. LIPIcs volumes are published according to the principle of Open Access, i.e., they are available online and free of charge. -

Sampling-Based Algorithms for Dimension Reduction Amit Jayant

Sampling-Based Algorithms for Dimension Reduction by Amit Jayant Deshpande. B.Sc., Chennai Mathematical Institute, 2002. Submitted to the Department of Mathematics in partial fulfillment of the requirements for the degree of Doctor of Philosophy at the Massachusetts Institute of Technology, June 2007. © Amit Jayant Deshpande, MMVII. All rights reserved. The author hereby grants to MIT permission to reproduce and distribute publicly paper and electronic copies of this thesis document in whole or in part in any medium now known or hereafter created. Author: Department of Mathematics, April 11, 2007. Certified by: Santosh S. Vempala, Associate Professor of Applied Mathematics, Thesis co-supervisor. Certified by: Daniel A. Spielman, Professor of Computer Science, Yale University, Thesis supervisor. Accepted by: Alar Toomre, Chairman, Applied Mathematics Committee. Accepted by: Pavel I. Etingof, Chairman, Department Committee on Graduate Students. Abstract: Can one compute a low-dimensional representation of any given data by looking only at its small sample, chosen cleverly on the fly? Motivated by the above question, we consider the problem of low-rank matrix approximation: given a matrix $A \in \mathbb{R}^{m \times n}$, one wants to compute a rank-$k$ matrix (where $k \ll \min\{m, n\}$) nearest to $A$ in the Frobenius norm (also known as the Hilbert-Schmidt norm). We prove that using a sample of roughly $O(k/\epsilon)$ rows of $A$ one can compute, with high probability, a $(1 + \epsilon)$-approximation to the nearest rank-$k$ matrix.

Karthekeyan Chandrasekaran

Karthekeyan Chandrasekaran
Contact Information: 301 Transportation Building, 104 S. Mathews Ave, Urbana, IL 61801. Phone: +1-217-300-1160. Email: [email protected]. URL: https://karthik.ise.illinois.edu
Research Interests: Combinatorial Optimization, Integer Programming, Probabilistic Methods, Randomization
Appointments:
Associate Professor, University of Illinois at Urbana-Champaign, IL, Department of Industrial and Enterprise Systems Engineering, Aug, 2021-present
Affiliate, University of Illinois at Urbana-Champaign, IL, Department of Computer Science, Sep, 2014-present
Assistant Professor, University of Illinois at Urbana-Champaign, IL, Department of Industrial and Enterprise Systems Engineering, Sep, 2014-Jul, 2021
Simons Postdoctoral Research Fellow, Harvard University, Cambridge, MA, School of Engineering and Applied Sciences, Host: Salil Vadhan, Sep, 2012-Aug, 2014
Visiting Researcher, International Computer Science Institute (ICSI), Berkeley, CA, Algorithms Group, Host: Richard Karp, Jul-Oct, 2011
Research Intern, Microsoft Research, Bangalore, India. Algorithms Group, Host: Navin Goyal, May-Jul, 2009; Host: Amit Deshpande, May-Jul, 2008. Applied Mathematics Group, Host: Satya V. Lokam, May-Jul, 2007. Microsoft Research, Redmond, WA, Algorithms Group, Host: Ramarathnam Venkatesan, Jun-Aug, 2006
Education:
Ph.D., Algorithms, Combinatorics, and Optimization, Aug, 2012, Georgia Institute of Technology, Atlanta. Advisor: Santosh Vempala
B.Tech., Computer Science and Engineering, Jun, 2007, Indian Institute of Technology, Madras
Teaching:
Graduate-level (UIUC): Combinatorial Optimization, IE 519/CS 586 (formerly IE 598), Fall 2015, Spring 2018, 2020; Integer Programming, IE 511, Spring 2015, 2017, 2019
Undergraduate-level (UIUC): Deterministic Models in Optimization, IE 310, Spring 2016, Fall 2016, 2017, 2018, 2019, 2020; Operations Research Lab, IE 311, Spring 2016, Fall 2016, 2017, 2018
Students:
PhD Advisees: Weihang Wang, UIUC (2019–present); Calvin Beideman, UIUC (2018–present); Chao Xu, UIUC (PhD, May 2018, joint with Prof.

New Approaches to Integer Programming

NEW APPROACHES TO INTEGER PROGRAMMING A Thesis Presented to The Academic Faculty by Karthekeyan Chandrasekaran In Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in Algorithms, Combinatorics and Optimization in the School of Computer Science Georgia Institute of Technology August 2012 NEW APPROACHES TO INTEGER PROGRAMMING Approved by: Santosh Vempala, Advisor Santanu Dey School of Computer Science School of Industrial and Systems Georgia Institute of Technology Engineering Georgia Institute of Technology Shabbir Ahmed Richard Karp School of Industrial and Systems Department of Electrical Engineering Engineering and Computer Sciences Georgia Institute of Technology University of California, Berkeley William Cook George Nemhauser School of Industrial and Systems School of Industrial and Systems Engineering Engineering Georgia Institute of Technology Georgia Institute of Technology Date Approved: 24th May 2012 To my parents, Nalini and Chandrasekaran. iii ACKNOWLEDGEMENTS My biggest thanks to my advisor Santosh Vempala. His constant reminder to strive for excellence has helped me push myself and motivated me to be a better researcher. I can only wish to attain as much clarity in my explanations as he does. I am very grateful to the ACO program led by Robin Thomas. It has provided me an invaluable opportunity to appreciate the various aspects of theoretical research. I consider myself lucky for having been able to interact with its exceptional faculty. I also thank ARC and NSF for their generous research and travel support. Many faculty had a positive impact on me during my time at Georgia Tech. I thank Bill Cook for his alluring treatment of linear inequalities. I thank Shabbir Ahmed, Santanu Dey, George Nemhauser and Prasad Tetali for their feedback, thoughtful questions and for all the support, help and advice. -

Submission Data for 2017 CORE Conference Re-Ranking Process

Submission Data for 2017 CORE conference Re-ranking process International Workshop on Approximation Algorithms for Combinatorial Optimization Problems (Submitted as a comparator for Innovations in Theoretical Computer Science) Submitted by: Benjamin Rubinstein [email protected] Supported by: Benjamin Rubinstein Conference Details Conference Title: International Workshop on Approximation Algorithms for Combinatorial Optimization Problems Acronym : APPROX Rank: A Recent Years Most Recent Year Year: 2017 URL: http://cui.unige.ch/tcs/random-approx/2017/index.php Papers submitted: 60 Papers published: 22 Acceptance rate: 37 Source for acceptance rate: http://drops.dagstuhl.de/portals/extern/index.php?semnr=16040 Program Chairs Name: David Williamson Affiliation: Cornell H index: 43 Google Scholar URL: https://scholar.google.com/citations?user=pOd2M64AAAAJ DBLP URL: http://dblp2.uni-trier.de/pers/hd/w/Williamson:David_P= General Chairs Name: Neha Dave Affiliation: Mills College H index: -1 Google Scholar URL: DBLP URL: http://dblp2.uni-trier.de/pers/hd/d/Dave:Neha Second Most Recent Year Year: 2016 URL: http://cui.unige.ch/tcs/random-approx/2016/ Papers submitted: 40 Papers published: 20 Acceptance rate: 50 Source for acceptance rate: http://drops.dagstuhl.de/portals/extern/index.php?semnr=16019 Program Chairs Name: Claire Mathieu Affiliation: École normale supérieure H index: -1 Google Scholar URL: DBLP URL: http://dblp2.uni-trier.de/pers/hd/m/Mathieu:Claire 1 General Chairs Name: Adi Rosén Affiliation: CNRS H index: -1 Google Scholar -

Santosh S. Vempala

Santosh S. Vempala
Professor of Computer Science, Georgia Institute of Technology, Klaus 2222, 266 Ferst Drive, Atlanta, GA 30332. Office Phone: (404) 385-0811. Home Phone: (404) 855-5621. Email: [email protected]. http://www.cc.gatech.edu/~vempala
Research Interests: The theory of algorithms; algorithmic tools for sampling, learning, optimization and data analysis; high-dimensional geometry; randomized linear algebra; Computing-for-Good (C4G).
Education:
Carnegie Mellon University (1993-1997), School of CS, Ph.D. in Algorithms, Combinatorics and Optimization. Thesis: "Geometric Tools for Algorithms." Advisor: Prof. Avrim Blum
Indian Institute of Technology, New Delhi (1988-1992), B. Tech. in Computer Science.
Awards: Best Paper, ACM-SIAM Symposium on Discrete Algorithms (SODA), 2021. Best Paper, ITU Kaleidoscope, 2019. Gem of PODS (test of time award), 2019. Edenfield Faculty Fellowship, 2019. CIOS Course Effectiveness Teaching Award, 2016. Frederick Storey chair, 2016-. ACM fellow, 2015. Open Source Software World Challenge, Gold prize, 2015. GT Class of 1934 Outstanding Interdisciplinary Activities Award, 2012. Georgia Trend 40-under-40, 2010. Raytheon fellow, 2008. Distinguished Professor of Computer Science, 2007-. Guggenheim Fellow, 2005. Alfred P. Sloan Research Fellow, 2002. NSF Career Award, 1999. Miller Fellow, U.C. Berkeley, 1998. IEEE Machtey Prize, 1997. Best Undergraduate Thesis Award, IIT Delhi, 1992.
Appointments:
Aug 06– Georgia Tech, Professor, Computer Science (primary), ISYE, Mathematics.
Aug 06–Apr 11 Founding Director, Algorithms and Randomness Center (ARC), Georgia Tech.
Jul 03–Jul 07 MIT, Associate Professor of Applied Mathematics
Jul 98–Jun 03 MIT, Assistant Professor of Applied Mathematics
Sep 97–Jun 98 MIT, Applied Mathematics Instructor.
2001, 03, 06, 14 Microsoft Research, Visiting Researcher.