Essential Coding Theory Book

Recommended publications

-

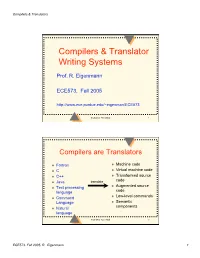

Compilers & Translator Writing Systems

Compilers & Translator Writing Systems. Prof. R. Eigenmann, ECE573, Fall 2005. http://www.ece.purdue.edu/~eigenman/ECE573

Compilers are Translators. Source languages: Fortran, C, C++, Java, text processing language, command language, natural language. Translation targets: machine code, virtual machine code, transformed source code, augmented source code, low-level commands, semantic components.

Compilers are Increasingly Important. User interfaces for specifying a computer problem/solution are increasingly high level: assembly languages, high-level languages, specification languages. Machines are increasingly complex: non-pipelined processors, pipelined processors, speculative processors, worldwide “Grid”. The compiler is the translator between these two diverging ends.

Assembly Code and Assemblers. Compiler → assembly code → Assembler → machine code. Assemblers are often used at the compiler back-end. Assemblers are low-level translators. They are machine-specific, and perform mostly 1:1 translation between mnemonics and machine code, except: symbolic names for storage locations, namely program locations (branch, subroutine calls) and variable names; macros.

Interpreters. “Execute” the source language directly. Interpreters directly produce the result of a computation, whereas compilers produce executable code that can produce this result. Each language construct executes by invoking a subroutine of the interpreter, rather than a machine instruction. Examples of interpreters?

Properties of Interpreters. “Execution” is immediate; elaborate error checking is possible; bookkeeping is possible, e.g. for garbage collection; can change program on-the-fly, e.g., switch libraries, dynamic change of data types; machine independence. -
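
The remark that each language construct executes by invoking a subroutine of the interpreter can be illustrated with a small sketch. The three-construct toy language below (`num`, `add`, `mul`), the tuple encoding of programs, and all function names are assumptions made for illustration; none of them come from the course slides.

```python
# Hypothetical toy language: a program is a nested tuple such as
# ("add", ("num", 2), ("num", 3)). Each construct has its own subroutine.

def eval_num(node):
    # ("num", value): a literal evaluates to itself.
    return node[1]

def eval_add(node):
    # ("add", left, right): evaluate both operands, then add.
    return interpret(node[1]) + interpret(node[2])

def eval_mul(node):
    # ("mul", left, right): evaluate both operands, then multiply.
    return interpret(node[1]) * interpret(node[2])

# Dispatch table: one interpreter subroutine per language construct.
HANDLERS = {"num": eval_num, "add": eval_add, "mul": eval_mul}

def interpret(node):
    """Produce the result directly, without generating any target code."""
    return HANDLERS[node[0]](node)

program = ("add", ("num", 2), ("mul", ("num", 3), ("num", 4)))
result = interpret(program)  # 2 + 3 * 4
```

A compiler for the same toy language would instead walk the tuple once and emit code that computes the value later; here the value is produced during the walk itself.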

Modern Coding Theory: the Statistical Mechanics and Computer Science Point of View

Modern Coding Theory: The Statistical Mechanics and Computer Science Point of View. Andrea Montanari (Stanford University, [email protected]) and Rüdiger Urbanke (EPFL, ruediger.urbanke@epfl.ch). February 12, 2007. arXiv:0704.2857v1 [cs.IT] 22 Apr 2007. Abstract: These are the notes for a set of lectures delivered by the two authors at the Les Houches Summer School on ‘Complex Systems’ in July 2006. They provide an introduction to the basic concepts in modern (probabilistic) coding theory, highlighting connections with statistical mechanics. We also stress common concepts with other disciplines dealing with similar problems that can be generically referred to as ‘large graphical models’. While most of the lectures are devoted to the classical channel coding problem over simple memoryless channels, we present a discussion of more complex channel models. We conclude with an overview of the main open challenges in the field. 1 Introduction and Outline: The last few years have witnessed an impressive convergence of interests between disciplines which are a priori well separated: coding and information theory, statistical inference, statistical mechanics (in particular, mean field disordered systems), as well as theoretical computer science. The underlying reason for this convergence is the importance of probabilistic models and/or probabilistic techniques in each of these domains. This has long been obvious in information theory [53], statistical mechanics [10], and statistical inference [45]. In the last few years it has also become apparent in coding theory and theoretical computer science. In the first case, the invention of Turbo codes [7] and the re-invention of Low-Density Parity-Check (LDPC) codes [30, 28] has motivated the use of random constructions for coding information in robust/compact ways [50]. -

Chapter 2 Basics of Scanning And

Chapter 2 Basics of Scanning and Conventional Programming in Java. In this chapter, we will introduce you to an initial set of Java features, the equivalent of which you should have seen in your CS-1 class; the separation of problem, representation, algorithm and program – four concepts you have probably seen in your CS-1 class; style rules with which you are probably familiar, and scanning - a general class of problems we see in both computer science and other fields. Each chapter is associated with an animated, recorded PowerPoint presentation and a YouTube video created from the presentation. It is meant to be a transcript of the associated presentation that contains few graphics and thus can be read even on a small device. You should refer to the associated material if you feel the need for a different instruction medium. Also associated with each chapter are hyperlinked code examples presented here. References to previously presented code modules are links that can be traversed to remind you of the details. The resources for this chapter are: PowerPoint Presentation, YouTube Video, Code Examples. Algorithms and Representation: Four concepts we explicitly or implicitly encounter while programming are problems, representations, algorithms and programs. Programs, of course, are instructions executed by the computer. Problems are what we try to solve when we write programs. Usually we do not go directly from problems to programs. Two intermediate steps are creating algorithms and identifying representations. Algorithms are sequences of steps to solve problems. So are programs. Thus, all programs are algorithms but the reverse is not true. -

Scripting: Higher-Level Programming for the 21st Century

John K. Ousterhout, Sun Microsystems Laboratories. Scripting: Higher-Level Programming for the 21st Century. Increases in computer speed and changes in the application mix are making scripting languages more and more important for the applications of the future. Scripting languages differ from system programming languages in that they are designed for “gluing” applications together. They use typeless approaches to achieve a higher level of programming and more rapid application development than system programming languages. For the past 15 years, a fundamental change has been occurring in the way people write computer programs. The change is a transition from system programming languages such as C or C++ to scripting languages such as Perl or Tcl. Although many people are participating in the change, few realize that the change is occurring and even fewer know why it is happening. This article explains why scripting languages will handle many of the programming tasks in the next century better than system programming languages. […]ated with system programming languages and glued together with scripting languages. However, several recent trends, such as faster machines, better scripting languages, the increasing importance of graphical user interfaces (GUIs) and component architectures, and the growth of the Internet, have greatly expanded the applicability of scripting languages. These trends will continue over the next decade, with more and more new applications written entirely in scripting languages and system programming -

The Packing Problem in Statistics, Coding Theory and Finite Projective Spaces: Update 2001

The packing problem in statistics, coding theory and finite projective spaces: update 2001. J.W.P. Hirschfeld, School of Mathematical Sciences, University of Sussex, Brighton BN1 9QH, United Kingdom, http://www.maths.sussex.ac.uk/Staff/JWPH, [email protected]. L. Storme, Department of Pure Mathematics, Krijgslaan 281, Ghent University, B-9000 Gent, Belgium, http://cage.rug.ac.be/~ls, [email protected]. Abstract: This article updates the authors’ 1998 survey [133] on the same theme that was written for the Bose Memorial Conference (Colorado, June 7-11, 1995). That article contained the principal results on the packing problem, up to 1995. Since then, considerable progress has been made on different kinds of subconfigurations. 1 Introduction. 1.1 The packing problem: The packing problem in statistics, coding theory and finite projective spaces regards the determination of the maximal or minimal sizes of given subconfigurations of finite projective spaces. This problem is not only interesting from a geometrical point of view; it also arises when coding-theoretical problems and problems from the design of experiments are translated into equivalent geometrical problems. The geometrical interest in the packing problem and the links with problems investigated in other research fields have given this problem a central place in Galois geometries, that is, the study of finite projective spaces. In 1983, a historical survey on the packing problem was written by the first author [126] for the 9th British Combinatorial Conference. A new survey article stating the principal results up to 1995 was written by the authors for the Bose Memorial Conference [133]. -

Adinkras for Mathematicians (arXiv:1111.6055v1 [math.CO], 25 Nov 2011)

Adinkras for Mathematicians. Yan X Zhang, Massachusetts Institute of Technology. November 28, 2011. Abstract: Adinkras are graphical tools created for the study of representations in supersymmetry. Besides having inherent interest for physicists, adinkras offer many easy-to-state and accessible mathematical problems of algebraic, combinatorial, and computational nature. We use a more mathematically natural language to survey these topics, suggest new definitions, and present original results. 1 Introduction: In a series of papers starting with [8], different subsets of the “DFGHILM collaboration” (Doran, Faux, Gates, Hübsch, Iga, Landweber, Miller) have built and extended the machinery of adinkras. Following the spirit of Feynman diagrams, adinkras are combinatorial objects that encode information about the representation theory of supersymmetry algebras. Adinkras have many intricate links with other fields such as graph theory, Clifford theory, and coding theory. Each of these connections provides many problems that can be compactly communicated to a (non-specialist) mathematician. This paper is a humble attempt to bridge the language gap and generate communication. We redevelop the foundations in a self-contained manner in Sections 2 and 4, using different definitions and constructions that we consider to be more mathematically natural for our purposes. Using our new setup, we prove some original results and make new interpretations in Sections 5 and 6. We hope that these purely combinatorial discussions will equip the readers with a mental model that allows them to appreciate (or to solve!) the original representation-theoretic problems in the physics literature. We return to these problems in Section 7 and reconsider some of the foundational questions of the theory. -

The Use of Coding Theory in Computational Complexity

The Use of Coding Theory in Computational Complexity. Joan Feigenbaum, AT&T Bell Laboratories, Murray Hill, NJ, USA, jfresearchattcom. Abstract: The interplay of coding theory and computational complexity theory is a rich source of results and problems. This article surveys three of the major themes in this area: the use of codes to improve algorithmic efficiency; the theory of program testing and correcting, which is a complexity-theoretic analogue of error detection and correction; the use of codes to obtain characterizations of traditional complexity classes such as NP and PSPACE; these new characterizations are in turn used to show that certain combinatorial optimization problems are as hard to approximate closely as they are to solve exactly. Introduction: Complexity theory is the study of efficient computation. Faced with a computational problem that can be modelled formally, a complexity theorist seeks first to find a solution that is provably efficient and, if such a solution is not found, to prove that none exists. Coding theory, which provides techniques for robust representation of information, is valuable both in designing efficient solutions and in proving that efficient solutions do not exist. This article surveys the use of codes in complexity theory. The improved upper bounds obtained with coding techniques include bounds on the number of random bits used by probabilistic algorithms and the communication complexity of cryptographic protocols. In these constructions, codes are used to design small sample spaces that approximate the behavior -

Algorithmic Coding Theory

Algorithmic Coding Theory. Atri Rudra, State University of New York at Buffalo. To appear as a book chapter in the CRC Handbook on Algorithms and Complexity Theory edited by M. Atallah and M. Blanton. Please send your comments to [email protected]. 1 Introduction: Error-correcting codes (or just codes) are clever ways of representing data so that one can recover the original information even if parts of it are corrupted. The basic idea is to judiciously introduce redundancy so that the original information can be recovered when parts of the (redundant) data have been corrupted. Perhaps the most natural and common application of error correcting codes is for communication. For example, when packets are transmitted over the Internet, some of the packets get corrupted or dropped. To deal with this, multiple layers of the TCP/IP stack use a form of error correction called CRC Checksum [Peterson and Davis, 1996]. Codes are used when transmitting data over the telephone line or via cell phones. They are also used in deep space communication and in satellite broadcast. Codes also have applications in areas not directly related to communication. For example, codes are used heavily in data storage. CDs and DVDs can work even in the presence of scratches precisely because they use codes. Codes are used in Redundant Array of Inexpensive Disks (RAID) [Chen et al., 1994] and error correcting memory [Chen and Hsiao, 1984]. Codes are also deployed in other applications such as paper bar codes, for example, the bar code used by UPS called MaxiCode [Chandler et al., 1989]. -
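
The CRC checksum mentioned in the excerpt can be sketched with Python's standard `zlib.crc32`. This is an illustration under stated assumptions: CRC-32 stands in for whichever CRC a given protocol layer actually uses, the payload bytes are made up, and the helper names `attach_checksum` and `is_intact` are hypothetical. A CRC on its own detects corruption; recovery then typically relies on retransmission or on a separate error-correcting code.

```python
import zlib

def attach_checksum(payload: bytes) -> tuple[bytes, int]:
    """Sender side: compute a CRC-32 over the payload and send both."""
    return payload, zlib.crc32(payload)

def is_intact(payload: bytes, checksum: int) -> bool:
    """Receiver side: recompute the CRC-32 and compare with the one received."""
    return zlib.crc32(payload) == checksum

# Hypothetical packet contents, chosen only for illustration.
packet, crc = attach_checksum(b"hello, world")

# Simulate corruption of a single byte in transit.
corrupted = b"hellp, world"

print(is_intact(packet, crc))     # the untouched packet passes the check
print(is_intact(corrupted, crc))  # the corrupted packet fails the check
```

The redundancy here is only four bytes per packet, which is why the receiver can tell that something went wrong but not which byte changed.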

An Introduction to Algorithmic Coding Theory

An Introduction to Algorithmic Coding Theory. M. Amin Shokrollahi, Bell Laboratories.

Part 1: Codes. A puzzle: What do the following problems have in common?

Problem 1: Information Transmission. [Figure: a grid of scattered letters in which MESSAGE is received as MASSAGE ??]

Problem 2: Football Pool Problem. Bayern München : 1. FC Kaiserslautern 0; Homburg : Hannover 96 1; 1860 München : FC Karlsruhe 2; 1. FC Köln : Hertha BSC 0; Wolfsburg : Stuttgart 0; Bremen : Unterhaching 2; Frankfurt : Rostock 0; Bielefeld : Duisburg 1; Freiburg : Schalke 04 2.

Problem 3: In-Memory Database Systems. [Figure: user memory and code memory with malloc calls; “Database Corrupted!”]

Problem 4: Bulk Data Distribution.

What do they have in common? They all can be solved using (algorithmic) coding theory.

Basic Idea of Coding. Adding redundancy to be able to correct! MESSAGE MESSAGE MESSAGE MASSAGE. Objectives: add as little redundancy as possible; correct as many errors as possible.

Codes. A code of block-length n over the alphabet GF(q) is a set of vectors in GF(q)^n. If the set forms a vector space over GF(q), then the code is called linear. [n, k]_q-code.

Encoding Problem. [Figure: source mapped to codewords] Efficiency!

Linear Codes: Generator Matrix. [Figure: source (length k) times generator matrix (k x n) gives codeword (length n)] O(n^2).

Linear Codes: Parity Check Matrix. [Figure: parity check matrix times codeword gives 0] O(n^2) after O(n^3) preprocessing.

The Decoding Problem: Maximum Likelihood Decoding. abcd ↦ abcd | abcd | abcd. Received: a x c d | a b z d | abcd. -
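
The closing example in the excerpt (abcd sent three times, received with one symbol wrong in each of two copies) can be worked through in a few lines. This is a minimal sketch, assuming a position-by-position majority vote as a simple stand-in for the maximum likelihood decoder named on the slide; the function names `encode` and `decode` are hypothetical.

```python
from collections import Counter

def encode(message: str, copies: int = 3) -> list[str]:
    """Repetition code: send the same message `copies` times."""
    return [message] * copies

def decode(received: list[str]) -> str:
    """Majority vote: keep the most common symbol at each position."""
    return "".join(
        Counter(column).most_common(1)[0][0]  # winning symbol in this column
        for column in zip(*received)          # one column per message position
    )

sent = encode("abcd")                 # three identical copies of "abcd"
received = ["axcd", "abzd", "abcd"]   # the corrupted copies from the excerpt
decoded = decode(received)
print(decoded)
```

Each corrupted position is outvoted by the two clean copies, so the original message is recovered, at the cost of tripling the transmitted length. That cost is the tension behind the two objectives listed earlier in the excerpt: little redundancy versus many corrected errors.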

The Future of DNA Data Storage

The Future of DNA Data Storage. September 2018. A Potomac Institute for Policy Studies report.

NOTICE: This report is a product of the Potomac Institute for Policy Studies. The conclusions of this report are our own, and do not necessarily represent the views of our sponsors or participants. Many thanks to the Potomac Institute staff and experts who reviewed and provided comments on this report. © 2018 Potomac Institute for Policy Studies. Cover image: Alex Taliesen. Potomac Institute for Policy Studies, 901 North Stuart St., Suite 1200 | Arlington, VA 22203 | 703-525-0770 | www.potomacinstitute.org

Contents: Executive Summary (Findings); Background (Data Storage Crisis; DNA as a Data Storage Medium; Advantages; History); Current State of DNA Data Storage (Technology of DNA Data Storage; Writing Data to DNA; Reading Data from DNA; Key Players in DNA Data Storage; Academia; Research Consortium; Industry; Start-ups; Government); Forecast of DNA Data Storage (DNA Synthesis Cost Forecast; Forecast for DNA Data Storage Tech Advancement; Increasing Data Storage Density in DNA; Advanced Coding Schemes; DNA Sequencing Methods; DNA Data Retrieval); Conclusions; Endnotes.

Executive Summary. The demand for digital data storage is currently outpacing the world’s storage capabilities, and the gap is widening as the amount of digital […] has been developed to support applications in the life sciences industry and not for data storage purposes. -

Voting Rules as Error-Correcting Codes

Artificial Intelligence 231 (2016) 1–16. www.elsevier.com/locate/artint. Voting rules as error-correcting codes. Ariel D. Procaccia, Nisarg Shah, Yair Zick. Computer Science Department, Carnegie Mellon University, Pittsburgh, PA 15213, USA.

Article history: Received 21 January 2015; received in revised form 13 October 2015; accepted 20 October 2015; available online 27 October 2015.

Keywords: Social choice; Voting; Ground truth; Adversarial noise; Error-correcting codes.

Abstract: We present the first model of optimal voting under adversarial noise. From this viewpoint, voting rules are seen as error-correcting codes: their goal is to correct errors in the input rankings and recover a ranking that is close to the ground truth. We derive worst-case bounds on the relation between the average accuracy of the input votes, and the accuracy of the output ranking. Empirical results from real data show that our approach produces significantly more accurate rankings than alternative approaches. © 2015 Elsevier B.V. All rights reserved.

1. Introduction. Social choice theory develops and analyzes methods for aggregating the opinions of individuals into a collective decision. The prevalent approach is motivated by situations in which opinions are subjective, such as political elections, and focuses on the design of voting rules that satisfy normative properties [1]. An alternative approach, which was proposed by the marquis de Condorcet in the 18th century, had confounded scholars for centuries (due to Condorcet’s ambiguous writing) until it was finally elucidated by Young [34].