Towards Automatic Face Recognition in Unconstrained Scenarios

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Trip Hazzard 1.7 Contents

Trip Hazzard 1.7 Contents Contents............................................ 1 I Got You (I Feel Good) ................... 18 Dakota .............................................. 2 Venus .............................................. 19 Human .............................................. 3 Ooh La La ....................................... 20 Inside Out .......................................... 4 Boot Scootin Boogie ........................ 21 Have A Nice Day ............................... 5 Redneck Woman ............................. 22 One Way or Another .......................... 6 Trashy Women ................................ 23 Sunday Girl ....................................... 7 Sweet Dreams (are made of this) .... 24 Shakin All Over ................................. 8 Strict Machine.................................. 25 Tainted Love ..................................... 9 Hanging on the Telephone .............. 26 Under the Moon of Love ...................10 Denis ............................................... 27 Mr Rock N Roll .................................11 Stupid Girl ....................................... 28 If You Were A Sailboat .....................12 Molly's Chambers ............................ 29 Thinking Out Loud ............................13 Sharp Dressed Man ......................... 30 Run ..................................................14 Fire .................................................. 31 Play that funky music white boy ........15 Mustang Sally .................................. 32 Maneater -

Songs by Artist 08/29/21

Songs by Artist 09/24/21 As Sung By Song Title Track # Alexander’s Ragtime Band DK−M02−244 All Of Me PM−XK−10−08 Aloha ’Oe SC−2419−04 Alphabet Song KV−354−96 Amazing Grace DK−M02−722 KV−354−80 America (My Country, ’Tis Of Thee) ASK−PAT−01 America The Beautiful ASK−PAT−02 Anchors Aweigh ASK−PAT−03 Angelitos Negros {Spanish} MM−6166−13 Au Clair De La Lune {French} KV−355−68 Auld Lang Syne SC−2430−07 LP−203−A−01 DK−M02−260 THMX−01−03 Auprès De Ma Blonde {French} KV−355−79 Autumn Leaves SBI−G208−41 Baby Face LP−203−B−07 Beer Barrel Polka (Roll Out The Barrel) DK−3070−13 MM−6189−07 Beyond The Sunset DK−77−16 Bill Bailey, Won’t You Please Come Home? DK−M02−240 CB−5039−3−13 B−I−N−G−O CB−DEMO−12 Caisson Song ASK−PAT−05 Clementine DK−M02−234 Come Rain Or Come Shine SAVP−37−06 Cotton Fields DK−2034−04 Cry Like A Baby LAS−06−B−06 Crying In The Rain LAS−06−B−09 Danny Boy DK−M02−704 DK−70−16 CB−5039−2−15 Day By Day DK−77−13 Deep In The Heart Of Texas DK−M02−245 Dixie DK−2034−05 ASK−PAT−06 Do Your Ears Hang Low PM−XK−04−07 Down By The Riverside DK−3070−11 Down In My Heart CB−5039−2−06 Down In The Valley CB−5039−2−01 For He’s A Jolly Good Fellow CB−5039−2−07 Frère Jacques {English−French} CB−E9−30−01 Girl From Ipanema PM−XK−10−04 God Save The Queen KV−355−72 Green Grass Grows PM−XK−04−06 − 1 − Songs by Artist 09/24/21 As Sung By Song Title Track # Greensleeves DK−M02−235 KV−355−67 Happy Birthday To You DK−M02−706 CB−5039−2−03 SAVP−01−19 Happy Days Are Here Again CB−5039−1−01 Hava Nagilah {Hebrew−English} MM−6110−06 He’s Got The Whole World In His Hands -

On “Strict Machine” by Goldfrapp

On “Strict Machine” by Goldfrapp Sam Jowett Four months, fifteen days in. Honeymoon Phase meant to mellow out. Passion ought to neutralize. But how fucking wrong is that. Chrysalis shredding—not just clothes—but those thoughts. True selves emerge. Polyester veneer of politeness is now scraped clean. Goldfrapp The culprit: a single nail, obsidian sharp. Goldfrapp’s satin clad, ebony-gloved fingers. Needle digs into vinyl. You dig into my skin. “Wonderful Electriccccccc.” The lyric “Strict Machine” sashays over the bridge. We saunter past discarded inhibitions. The chorus awaits, an Black Cherry eruption with a single revelation: 04/2003 I’m in love! Mute I’m in love! I’m in love! “Felt Mountain,” their debut, was the bait. But just like us, hints flirted beneath the cabaret instrument gloss. Even masquerades can only hide appearances for so long. A truth awaited. Not to be whispered, but rather gasped aloud. “Strict Machine” dictates: Dressed in white noise. Little else. This is the inverse of a sophomore slump. Former trip-hop ambience is usurped by electroclash riptide. Siren synths draw us closer. Entrapping me to you. Lyrics our parents warned us about. Trent Reznor wishes he could be this lethal. Dance. It does not ask. It demands. Sitting is heresy. Head thrashing, body sweating. This is not some damn waltz. Hands do not clasp in graceful arcs. Rather, bodies meet perpendicular. Entwine inversely. Arms twist. Disorientation until we can’t tell each other apart. You tell me you can tie cherry stems with your tongue. I tell you I can whisper “Black Cherry” nothings into your ear. -

Proceedings of the Demonstrations at the 14Th Conference of the European Chapter of the Association for Computational Linguistics

EACL 2014 Proceedings of the Demonstrations at the 14th Conference of the European Chapter of the Association for Computational Linguistics April 26-30, 2014 Gothenburg, Sweden GOLD SPONSORS SILVER SPONSOR BRONZE SPONSORS MASTER'S PROGRAMME IN LANGUAGE SUPPORTERS TECHNOLOGY EXHIBITORS OTHER SPONSORS HOSTS c 2014 The Association for Computational Linguistics ii Order copies of this and other ACL proceedings from: Association for Computational Linguistics (ACL) 209 N. Eighth Street Stroudsburg, PA 18360 USA Tel: +1-570-476-8006 Fax: +1-570-476-0860 [email protected] ISBN 978-1-937284-75-6 iii Preface: General Chair Dear readers, Welcome to EACL 2014, the 14th Conference of the European Chapter of the Association for Computational Linguistics! This is the largest EACL meeting ever: with eighty long papers, almost fifty short ones, thirteen student research papers, twenty-six demos, fourteen workshops and six tutorials, we expect to bring to Gothenburg up to five hundred participants, for a week of excellent science interspersed with entertaining social events. It is hard to imagine how much work is involved in the preparation of such an event. It takes about three years, from the day the EACL board starts discussing the location and nominating the chairs, until the final details of the budget are resolved. The number of people involved is also huge, and I was fortunate to work with an excellent, dedicated and efficient team, to which I am enormously grateful. The scientific program was very ably composed by the Program Committee Chairs, Sharon Goldwater and Stefan Riezler, presiding over a team of twenty-four area chairs. -

Deriving Practical Implementations of First-Class Functions

Deriving Practical Implementations of First-Class Functions ZACHARY J. SULLIVAN This document explores the ways in which a practical implementation is derived from Church’s calculus of _-conversion, i.e. the core of functional programming languages today like Haskell, Ocaml, Racket, and even Javascript. Despite the implementations being based on the calculus, the languages and their semantics that are used in compilers today vary greatly from their original inspiration. I show how incremental changes to Church’s semantics yield the intermediate theories of explicit substitutions, operational semantics, abstract machines, and compilation through intermediate languages, e.g. CPS, on the way to what is seen in modern functional language implementations. Throughout, a particular focus is given to the effect of evaluation strategy which separates Haskell and its implementation from the other languages listed above. 1 INTRODUCTION The gap between theory and practice can be a large one, both in terms of the gap in time between the creation of a theory and its implementation and the appearance of ideas in one versus the other. The difference in time between the presentation of Church’s _-calculus—i.e. the core of functional programming languages today like Haskell, Ocaml, Racket—and its mechanization was a period of 20 years. In the following 60 years till now, there has been further improvements in both the efficiency of the implementation, but also in reasoning about how implementations are related to the original calculus. To compute in the calculus is flexible (even non-deterministic), but real machines are both more complex and require restrictions on the allowable computation to be tractable. -

Karaoke Catalog Updated On: 09/04/2018 Sing Online on Entire Catalog

Karaoke catalog Updated on: 09/04/2018 Sing online on www.karafun.com Entire catalog TOP 50 Tennessee Whiskey - Chris Stapleton My Way - Frank Sinatra Wannabe - Spice Girls Perfect - Ed Sheeran Take Me Home, Country Roads - John Denver Broken Halos - Chris Stapleton Sweet Caroline - Neil Diamond All Of Me - John Legend Sweet Child O'Mine - Guns N' Roses Don't Stop Believing - Journey Jackson - Johnny Cash Thinking Out Loud - Ed Sheeran Uptown Funk - Bruno Mars Wagon Wheel - Darius Rucker Neon Moon - Brooks & Dunn Friends In Low Places - Garth Brooks Fly Me To The Moon - Frank Sinatra Always On My Mind - Willie Nelson Girl Crush - Little Big Town Zombie - The Cranberries Ice Ice Baby - Vanilla Ice Folsom Prison Blues - Johnny Cash Piano Man - Billy Joel (Sittin' On) The Dock Of The Bay - Otis Redding Bohemian Rhapsody - Queen Turn The Page - Bob Seger Total Eclipse Of The Heart - Bonnie Tyler Ring Of Fire - Johnny Cash Me And Bobby McGee - Janis Joplin Man! I Feel Like A Woman! - Shania Twain Summer Nights - Grease House Of The Rising Sun - The Animals Strawberry Wine - Deana Carter Can't Help Falling In Love - Elvis Presley At Last - Etta James I Will Survive - Gloria Gaynor My Girl - The Temptations Killing Me Softly - The Fugees Jolene - Dolly Parton Before He Cheats - Carrie Underwood Amarillo By Morning - George Strait Love Shack - The B-52's Crazy - Patsy Cline I Want It That Way - Backstreet Boys In Case You Didn't Know - Brett Young Let It Go - Idina Menzel These Boots Are Made For Walkin' - Nancy Sinatra Livin' On A Prayer - Bon -

Nightlife Features Mattunleashed on Nightlife :: Bananarama Or

EDGE New York City :: Gay New York City :: Nightlife :: Gay Bars, Gay Clubs, Lesbian Bars, Lesbian ... Page 1 of 2 Nightlife Features MattUnleashed on Nightlife :: Bananarama or Goldfrapp? by Matt Kalkhoff EDGE Nightlife Contributor Thursday May 18, 2006 On Tuesday night, I had to pick between an in-store CD signing at the Columbus Circle Borders where Sara Dallin and Keren Woodward were celebrating the U.S. release of their new album, "Drama" (which is excellent, by the way) or an in-studio mini-concert by Goldfrapp in Tribeca that was to be broadcast live on Seattle’s KEXP radio station and website. As much as I love Bananarama (yes, they’re still recording, but it was just an appearance, no Bananarama signed CDs at Columbus Circle Borders performance), I think I Tuesday night. made the right decision by going with that other English dame. After a lovely little happy hour excursion (see below), we made our way over to Gigantic Studios at Franklin and Broadway to catch Goldfrapp perform live. Shortly after 8:00 about 20 of us were ushered up to the 4th floor and into a very tiny room where we stood about 8 feet from Alison & Co., separated only by a large sound-proof window. An interview with a KEXP personality split the four-song set, which included "Number 1," "Ooh La La" and two other selections from the "Supernature" CD. I was hoping to hear some older hits like "Strict Machine" as well, but alas, it wasn’t meant to be. (I’m sure they performed that one and others at their Irving Plaza concert the previous night, though.) Granted, there wasn’t a whole lot she could do in that cramped, carpeted space with all the equipment and several band members surrounding her, but the diminutive diva looked and sounded terrific, sporting a simple black number, retro-fab blond locks and some serious shades that were either shielding her from the blinding studio lights or covering up evidence of whatever post-concert partying might have gone on the night before. -

Songs by Artist

Songs by Artist Title Title (Hed) Planet Earth 2 Live Crew Bartender We Want Some Pussy Blackout 2 Pistols Other Side She Got It +44 You Know Me When Your Heart Stops Beating 20 Fingers 10 Years Short Dick Man Beautiful 21 Demands Through The Iris Give Me A Minute Wasteland 3 Doors Down 10,000 Maniacs Away From The Sun Because The Night Be Like That Candy Everybody Wants Behind Those Eyes More Than This Better Life, The These Are The Days Citizen Soldier Trouble Me Duck & Run 100 Proof Aged In Soul Every Time You Go Somebody's Been Sleeping Here By Me 10CC Here Without You I'm Not In Love It's Not My Time Things We Do For Love, The Kryptonite 112 Landing In London Come See Me Let Me Be Myself Cupid Let Me Go Dance With Me Live For Today Hot & Wet Loser It's Over Now Road I'm On, The Na Na Na So I Need You Peaches & Cream Train Right Here For You When I'm Gone U Already Know When You're Young 12 Gauge 3 Of Hearts Dunkie Butt Arizona Rain 12 Stones Love Is Enough Far Away 30 Seconds To Mars Way I Fell, The Closer To The Edge We Are One Kill, The 1910 Fruitgum Co. Kings And Queens 1, 2, 3 Red Light This Is War Simon Says Up In The Air (Explicit) 2 Chainz Yesterday Birthday Song (Explicit) 311 I'm Different (Explicit) All Mixed Up Spend It Amber 2 Live Crew Beyond The Grey Sky Doo Wah Diddy Creatures (For A While) Me So Horny Don't Tread On Me Song List Generator® Printed 5/12/2021 Page 1 of 334 Licensed to Chris Avis Songs by Artist Title Title 311 4Him First Straw Sacred Hideaway Hey You Where There Is Faith I'll Be Here Awhile Who You Are Love Song 5 Stairsteps, The You Wouldn't Believe O-O-H Child 38 Special 50 Cent Back Where You Belong 21 Questions Caught Up In You Baby By Me Hold On Loosely Best Friend If I'd Been The One Candy Shop Rockin' Into The Night Disco Inferno Second Chance Hustler's Ambition Teacher, Teacher If I Can't Wild-Eyed Southern Boys In Da Club 3LW Just A Lil' Bit I Do (Wanna Get Close To You) Outlaw No More (Baby I'ma Do Right) Outta Control Playas Gon' Play Outta Control (Remix Version) 3OH!3 P.I.M.P. -

2 Column Indented

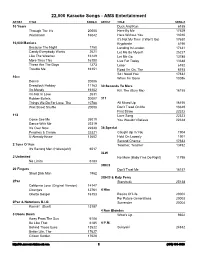

22,000 Karaoke Songs - AMS Entertainment ARTIST TITLE SONG # ARTIST TITLE SONG # 10 Years Duck And Run 6188 Through The Iris 20005 Here By Me 17629 Wasteland 16042 Here Without You 13010 It's Not My Time (I Won't Go) 17630 10,000 Maniacs Kryptonite 6190 Because The Night 1750 Landing In London 17631 Candy Everybody Wants 2621 Let Me Be Myself 25227 Like The Weather 16149 Let Me Go 13785 More Than This 16150 Live For Today 13648 These Are The Days 1273 Loser 6192 Trouble Me 16151 Road I'm On, The 6193 So I Need You 17632 10cc When I'm Gone 13086 Donna 20006 Dreadlock Holiday 11163 30 Seconds To Mars I'm Mandy 16152 Kill, The (Bury Me) 16155 I'm Not In Love 2631 Rubber Bullets 20007 311 Things We Do For Love, The 10788 All Mixed Up 16156 Wall Street Shuffle 20008 Don't Tread On Me 13649 First Straw 22322 112 Love Song 22323 Come See Me 25019 You Wouldn't Believe 22324 Dance With Me 22319 It's Over Now 22320 38 Special Peaches & Cream 22321 Caught Up In You 1904 U Already Know 13602 Hold On Loosely 1901 Second Chance 17633 2 Tons O' Fun Teacher, Teacher 13492 It's Raining Men (Hallelujah!) 6017 3LW 2 Unlimited No More (Baby I'ma Do Right) 11795 No Limits 6183 3Oh!3 20 Fingers Don't Trust Me 16157 Short Dick Man 1962 3OH!3 & Katy Perry 2Pac Starstrukk 25138 California Love (Original Version) 14147 Changes 12761 4 Him Ghetto Gospel 16153 Basics Of Life 20002 For Future Generations 20003 2Pac & Notorious B.I.G. -

Alison Goldfrapp and Will Gregory Speak to Sarah

16 CITYLIFE MUSIC FRIDAY, FEBRUARY 28, 2014 M.E.N. ‘THE MUSIC BECOMES A CREATIVE GENE POOL, A STORm’ ALISON GOLDFRAPP AND WILL GREGORY SPEAK TO SARAH WALTERS ABOUT THEIR NEW ALBUM AND TOUR AND THEIR FIRST VENTURE ONTO THE SILVER SCREEN FOR A ONE-OFF SHOWCASE OF MINI MOVIES ●●Alison Goldfrapp and below with musical partner Will Gregory ERFECTION- “I demanded I had my in a trilogy of records for the band to visualise remain unfazed. “It’s a UK, Europe, America and lover ‘Dave’ – a story that ISM has bedroom painted red. linked by mood and the songs and their way to do something we Australia. resulted in song Clay. been Alison My dad did paint it red theme that started with stories. “These cinema can’t do on tour,” Will In Manchester, the “On past albums, I’ve Goldfrapp’s for me – ceilings and all. first album Felt Mountain events are becoming a adds. “Something that’s Odeon Printworks, Vue probably referred more to most Christ,” she laughs, “what and then 2008’s Seventh popular way for people visually interesting.” Manchester at the Lowry personal experience and controversial a nightmare child I was.” Tree. Nor is it the first to see things they Alison agrees: “The music Outlet Mall in Salford made them into some Ptrait. On one hand, her Will chuckles. “I’ve got spiritually stirring place wouldn’t normally have becomes a creative gene Quays and at Cineworld other sort of story,” Alison clear and rounded artistic a youngster now and the they’ve played it; the the opportunity to see,” pool, a storm.” Didsbury will show it. -

The Ultimate Playlist This List Comprises More Than 3000 Songs (Song Titles), Which Have Been Released Between 1931 and 2018, Ei

The Ultimate Playlist This list comprises more than 3000 songs (song titles), which have been released between 1931 and 2021, either as a single or as a track of an album. However, one has to keep in mind that more than 300000 songs have been listed in music charts worldwide since the beginning of the 20th century [web: http://tsort.info/music/charts.htm]. Therefore, the present selection of songs is obviously solely a small and subjective cross-section of the most successful songs in the history of modern music. Band / Musician Song Title Released A Flock of Seagulls I ran 1982 Wishing 1983 Aaliyah Are you that somebody 1998 Back and forth 1994 More than a woman 2001 One in a million 1996 Rock the boat 2001 Try again 2000 ABBA Chiquitita 1979 Dancing queen 1976 Does your mother know? 1979 Eagle 1978 Fernando 1976 Gimme! Gimme! Gimme! 1979 Honey, honey 1974 Knowing me knowing you 1977 Lay all your love on me 1980 Mamma mia 1975 Money, money, money 1976 People need love 1973 Ring ring 1973 S.O.S. 1975 Super trouper 1980 Take a chance on me 1977 Thank you for the music 1983 The winner takes it all 1980 Voulez-Vous 1979 Waterloo 1974 ABC The look of love 1980 AC/DC Baby please don’t go 1975 Back in black 1980 Down payment blues 1978 Hells bells 1980 Highway to hell 1979 It’s a long way to the top 1975 Jail break 1976 Let me put my love into you 1980 Let there be rock 1977 Live wire 1975 Love hungry man 1979 Night prowler 1979 Ride on 1976 Rock’n roll damnation 1978 Author: Thomas Jüstel -1- Rock’n roll train 2008 Rock or bust 2014 Sin city 1978 Soul stripper 1974 Squealer 1976 T.N.T. -

![Statistical Machine Translation Arxiv:1709.07809V1 [Cs.CL]](https://docslib.b-cdn.net/cover/8091/statistical-machine-translation-arxiv-1709-07809v1-cs-cl-4318091.webp)

Statistical Machine Translation Arxiv:1709.07809V1 [Cs.CL]

Statistical Machine Translation Draft of Chapter 13: Neural Machine Translation Philipp Koehn Center for Speech and Language Processing Department of Computer Science Johns Hopkins University arXiv:1709.07809v1 [cs.CL] 22 Sep 2017 1st public draft August 7, 2015 2nd public draft September 25, 2017 2 Contents 13 Neural Machine Translation 5 13.1 A Short History . .5 13.2 Introduction to Neural Networks . .6 13.2.1 Linear Models . .7 13.2.2 Multiple Layers . .8 13.2.3 Non-Linearity . .9 13.2.4 Inference . 10 13.2.5 Back-Propagation Training . 11 13.2.6 Refinements . 18 13.3 Computation Graphs . 23 13.3.1 Neural Networks as Computation Graphs . 24 13.3.2 Gradient Computations . 25 13.3.3 Deep Learning Frameworks . 29 13.4 Neural Language Models . 31 13.4.1 Feed-Forward Neural Language Models . 32 13.4.2 Word Embedding . 35 13.4.3 Efficient Inference and Training . 37 13.4.4 Recurrent Neural Language Models . 39 13.4.5 Long Short-Term Memory Models . 41 13.4.6 Gated Recurrent Units . 43 13.4.7 Deep Models . 44 13.5 Neural Translation Models . 47 13.5.1 Encoder-Decoder Approach . 47 13.5.2 Adding an Alignment Model . 48 13.5.3 Training . 52 13.5.4 Beam Search . 54 3 4 CONTENTS 13.6 Refinements . 58 13.6.1 Ensemble Decoding . 59 13.6.2 Large Vocabularies . 61 13.6.3 Using Monolingual Data . 63 13.6.4 Deep Models . 66 13.6.5 Guided Alignment Training . 69 13.6.6 Modeling Coverage .