Shedding Light on GIS: a 3D Immersive Approach to Urban Lightscape Integration Into GIS

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Integrated Parametric Urban Design in Grasshopper / Rhinoceros 3D Demonstrated on a Master Plan in Vienna

Integrated Parametric Urban Design in Grasshopper / Rhinoceros 3D Demonstrated on a Master Plan in Vienna Theresa Fink1, Reinhard Koenig2 1AIT Austrian Institute of Technology 2AIT Austrian Institute of Technology, Bauhaus-Universität Weimar [email protected] [email protected] By 2050 an estimated 70 percent of the world's population will live in megacities with more than 10 million citizens (Renner 2018). This growth calls for new target-oriented, interdisciplinary methods in urban planning and design in cities to meet sustainable development targets. In response, this paper exemplifies an integrated urban design process on a master plan project in Vienna. The objective is to investigate the potential towards a holistic, digital, urban design process aimed at the development of a practical methodology for future designs. The presented urban design process includes analyses and simulation tools within Rhinoceros 3D and its plug-in Grasshopper as quality-enhancing mediums that facilitate the creative approaches in the course of the project. The increase in efficiency and variety of design variants shows a promising future for the practical suitability of this approach. Keywords: urban design, parametric modeling, urban simulation, design evaluation, environmental performance INTRODUCTION A contemporary urban design process demands human-centered development where future technologies intertwine with traditional approaches to generate a sustainable, livable environment while saving time and resources. Frey defines urban design as a discipline that deals with issues that ex- [...] buildings. The term public realm has broader significance than public space because it includes building facades and anything that is visible from eye level (Karssenberg 2016). In general, the collection of this data is rather simple in comparison with the capacity of processing (Feng 2009). -

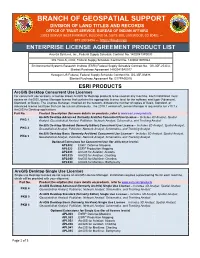

Branch of Geospatial Support

BRANCH OF GEOSPATIAL SUPPORT DIVISION OF LAND TITLES AND RECORDS OFFICE OF TRUST SERVICE, BUREAU OF INDIAN AFFAIRS 13922 DENVER WEST PARKWAY, BUILDING 54, SUITE 300, LAKEWOOD, CO 80401, 877.293.9494, https://bia.gov/gis ENTERPRISE LICENSE AGREEMENT PRODUCT LIST Avenza Systems, Inc., Federal Supply Schedule Contract No. 140D0419P0030 IKS TUULS, OOO, Federal Supply Schedule Contract No. 140D0418P0044 Environmental Systems Research Institute (ESRI) Federal Supply Schedule Contract No. GS-35F-253CA, Blanket Purchase Agreement 140D0419A0017 Hexagon US Federal, Federal Supply Schedule Contract No. GS-35F-0383K Blanket Purchase Agreement No. G17PA00016 ESRI PRODUCTS ArcGIS Desktop Concurrent Use Licenses For concurrent use versions, a license allows ArcGIS for Desktop products to be used on any machine. Each installation must access an ArcGIS License Manager license that contains the appropriate license level for the software seat type (Advanced, Standard, or Basic). The License Manager, installed on the network, dictates the number of copies of Basic, Standard, or Advanced license seat type that can be run simultaneously. The 2019.1 version of License Manager is required to run v10.7.x ArcGIS for Desktop applications. Part No. Product Description (for more details on products, refer to www.esri.com/products) PKG 1 ArcGIS Desktop Advanced (formerly ArcInfo) Concurrent Use License – Includes 3D Analyst, Spatial Analyst, Geostatistical Analyst, Publisher, Network Analyst, Schematics, and Tracking Analyst ArcGIS Desktop Standard (formerly Editor) -

STATE of NEW YORK PRICELIST August 2015

STATE OF NEW YORK PRICELIST August 2015 CLIN Esri Part Net Price FOB Number Number Section Product Description Price NY 1 ArcGIS for Desktop Concurrent Use Licenses GIS0001 52382 ArcGIS for Desktop Advanced Concurrent Use License $7,295.00 $7,295.00 GIS0100 86353 ArcGIS for Desktop Standard Concurrent Use License $5,713.00 $5,713.00 GIS0100 86353 ArcGIS for Desktop Standard Concurrent Use License 6+ $5,142.00 $5,142.00 GIS0200 87143 ArcGIS for Desktop Basic Concurrent Use License $2,857.00 $2,857.00 GIS0200 87143 ArcGIS for Desktop Basic Concurrent Use License 6+ $2,571.00 $2,571.00 1 ArcGIS for Desktop Concurrent Use Licenses Maintenance GIS0001MP 52384 Primary Maintenance for ArcGIS for Desktop Advanced Concurrent Use License (primary 1st, 11th, 21st, 31st…) $2,406.00 $2,406.00 GIS0001MS 52385 Secondary Maintenance for ArcGIS for Desktop Advanced Concurrent Use License (secondary 2–10, 12–20...) $1,184.00 $1,184.00 GIS0100MP 86497 Primary Maintenance for ArcGIS for Desktop Standard Concurrent Use License $1,480.00 $1,480.00 GIS0100MS 86500 Secondary Maintenance for ArcGIS for Desktop Standard Concurrent Use License $1,184.00 $1,184.00 GIS0200MP 87194 Primary Maintenance for ArcGIS for Desktop Basic Concurrent Use License $691.00 $691.00 GIS0200MS 87195 Secondary Maintenance for ArcGIS for Desktop Basic Concurrent Use License $493.00 $493.00 1 ArcGIS Extensions for Desktop Concurrent Use Licenses GIS0400 88282 ArcGIS Spatial Analyst for Desktop Concurrent Use License $2,040.00 $2,040.00 GIS0400 88282 ArcGIS Spatial Analyst for Desktop -

Esri Product Lifecycle Support Policy

May 2021 Esri Product Lifecycle Support Policy Table of Contents: Esri Product Lifecycle Support Policy Overview; Product Lifecycle Phases; Software Products; Software Products: ArcGIS Pro; Software Products: ArcGIS Desktop; Software Products: ArcGIS Enterprise; ArcGIS Enterprise on Kubernetes; ArcGIS Online; Online Content Services; Developers; ArcGIS API for JavaScript; Apps; Data Products; ArcGIS Solutions; Transition Announcements; Life Cycle Support Status for Esri Products. Esri Product Lifecycle Support Policy Overview The Esri Product Lifecycle Support Policy provides customers with information regarding the level of technical and software support Esri will provide customers during the lifespan of a software product. The Esri Product Lifecycle is a progression of life cycle phases starting with the initial release of a new software product (or a new version of a software product) and ending with the retirement of that version of the product. Each phase of the life cycle includes specific, but different, technical and software support. Product life cycles also vary by the type of product. For example, most on-premises software products go through a four-phase life cycle, whereas online services and content products typically have a two-phase life cycle. The Product Lifecycle Phases are described below as well as the technical and software support that is provided for products during each phase of the product life cycle. Product Lifecycle Phases There are up to four phases to the product lifecycle. 1. General Availability (all) 2. Extended (four-phase life cycles only) 3. -

GIS Strategic Plan

City of Tigard GIS Strategic Plan GIS Coordinating Group Public Works, John Goodrich Public Works, Paul Izatt Public Works, Sara Doughty Library, Joyce Niewendorp Community Development, Tom McGuire Community Development, Agnes Kowacz Community Development, Tim Lehrbach Police, Gayla Shillitto City Management, Loreen Mills Finance & Information Services, Toby LaFrance Finance & Information Services, Louis Sears Finance & Information Services, Nathan Shaub Finance & Information Services, Preston Beck Document prepared by Finance and Information Services. Reviewed and endorsed by the GIS Coordinating Group. Approved by Tigard Executive Staff July 8, 2014. OREGON Table of Contents: Executive Summary; GIS Strategic Plan (Introduction; Process; Background; GIS Program Overview); Trends Impacting GIS; Policy Decisions Impacting GIS; Organizational Evaluation; Analysis of Interviews – Major Issues; GIS Vision, Goals, Objectives; Appendix: GIS Executive Team/GIS Technical Team; References. Executive Summary Purpose The purpose of this GIS (Geographic Information System) Strategic Plan -

3D GIS Modeling Using ESRI's CityEngine: A Case Study from the University Jaume I in Castellón de la Plana, Spain

3D GIS modeling using ESRI's CityEngine: A case study from the University Jaume I in Castellón de la Plana, Spain. Kristinn Nikulás Edvardsson. Dissertation supervised by PhD Michael Gould and co-supervised by PhD Joaquin Huerta and PhD Marco Painho. March 2013. Acknowledgments I wish to thank my supervisors Michael Gould, Joaquin Huerta and Marco Painho for making this exciting project a reality and for the opportunity to work with CityEngine. I would like to thank my fellow master's students for the time spent together working on our projects and for experiences that I will never forget. Last but not least I want to express my gratitude to Andres Munos and all the staff working at the University Jaume I for their support and patience. ABSTRACT The field of GIS has in recent years increasingly demanded better 3D functionality, and ever more resources are being spent on researching the concept. The work presented here is on the integration and use of a procedural modeling language for generating a realistic 3D model of the University Jaume I in Castellón de la Plana, Spain. The language used is a modified version of shape grammars combined with split grammars in the software CityEngine. The work is conducted with the use of CityEngine and is intended as a contribution to the currently active research on the use of shape grammar in GI science. -

3D Modelling and Virtual Reality for the Management of Public Buildings

3D Modelling and Virtual Reality for the Management of Public Buildings Ioannis PISPIDIKIS, Kitsakis DIMITRIOS, Eftychia KALOGIANNI, Katerina ATHANASIOU, Anastasios LAMPROPOULOS and Efi DIMOPOULOU, Greece Key words: Building Information Model (BIM), Virtual Reality (VR), Public Buildings, 3D Visualisation SUMMARY Virtual Reality (VR) technologies have become incredibly popular in the era of Industry 4.0, considered to be one step higher than 3D visualisation. VR is described as a computer-generated environment that provides users with the sense of being surrounded by the real world. Visualisation through VR has made it possible to approach a range of issues in the Architecture, Engineering, Construction and Facility Management (AEC/FM) industry, as well as in the real estate domain and urban planning, under a new sustainable perspective. Moreover, as an interactive platform, VR enables smart and sustainable cities to identify and expand their potential by getting smarter. To make VR experience accessible and independent of the device used, the WebVR approach is researched and implemented. This approach mainly relies on the Three.js graphic engine based on Web Graphics Library (WebGL) which has been supported by most of the mainstream web browsers’ latest version with no embedded plugin. As a result, some of the barriers obstructing the use of VR have been removed and hence, VR has become more accessible and cheaper. VR combined with Building Information Modelling (BIM) has the potential to improve collaboration in the AEC/FM industry, offering a supportive environment for conducting analysis and decision-making process. Depending on the scale of each project, the requirements and the budget, the source data that is used to create 3D models to be represented in VR may vary. -

Tutorial 17: Desert City Tutorial

Tutorial 17: Desert City Tutorial Copyright © 1995-2013 Esri. All rights reserved. In this tutorial: • Tutorial Steps • Recreating the full scene • Focus Intervention: New School Download: • Tutorial Data • Tutorial PDF Prerequisites: • Installed CityEngine (Version 2012 Advanced or later) • Pay attention to user interface information (especially how to navigate) when installing • Download the Desert City Example via Help > Download Tutorials and Examples, or via the CityEngine Resource Center (http://resources.arcgis.com/content/cityengine/about). Importing the downloaded Example If you have downloaded the Example via the Resource Center, import the Example_Desert_City__2012_1.zip project into your workspace: • Click File > Import Zipped Project into Workspace, then point CityEngine to the corresponding .zip file. Tutorial Steps Exploring the Desert City scene file Steps: 1. Double-click the Desert City scene file, which is located in the scene folder. 2. Click Generate in the first dialog that opens. This triggers model generation for all elements. 3. Navigate through the scene and explore the buildings, streets and vegetation. You’ll notice that only a fraction of all buildings are generated by default; this saves memory. 4. Edit some rule parameters, such as Building_Height, Building_Usage, or the Level of Detail. 5. Select some more building footprints or street shapes (left mouse button click or draw a selection rectangle). Note: The behavior differs depending on whether you draw the selection rectangle ‘left-right’ or ‘right-left’! -

CHIAMAKA OYEKWE H: 614-598-7845 | Email: [email protected] | No Sponsorship Required and Willing to Travel 100%

CHIAMAKA OYEKWE H: 614-598-7845 | Email: [email protected] | No sponsorship required and willing to travel 100% Professional Summary An integrity and result-driven GIS/Geoscience data Analyst seeking full-time opportunity to apply my knowledge/experience and committed to continuous training/development. Proficient in analyzing and solving problems, creating maps, building web-based GIS applications, geocoding, geo-referencing, creating web apps, digitizing/extracting both 2D and 3D features, building data models, research, transferring data models into database designs, data accuracy assessment, data entry and database management. Education University of Texas at Dallas MS Geoscience 2017 – Aug. 2019 MS Geospatial Information Science (GIS) 2015 – Dec. 2017 University of Jos, Nigeria B.S. Geology & Mining 2005 – Nov. 2009 Technical Skills Proficient in FIM, Esri ArcGIS Platform, QGIS, Citrix Web, Esri ArcGIS API, Erdas Imagine, ArcGIS Collector, Agisoft Photoscan, LiDAR Module, RiScan, Trimble & Topcon GPS, Tripod, data analysis and linear referencing Proficient in creating map designs and creating web applications, analysis, data collection, data processing, Proficient in ArcGIS Pro, CityEngine 2017.0, ArcSDE, Arc Scene, ArcMap 10.5, ArcCatalog, Geopack, Small world, AutoCAD, Bentley Microstation V8i Proficient in Web GIS and Mobile GIS, TOPCON & TRIMBLE GPS, Granite, Salesforce, Dock Proficient in JavaScript, HTML, Python, Revit, PowerShell, R, JSON, PJSON, SQL, Oracle, Tethys, Jupyter notebook, Hydroshare apps, debugging -

Procedural Runtime 2.3 Architecture

PROCEDURAL RUNTIME 2.3 ARCHITECTURE Abstract ArcGIS CityEngine is based on the procedural runtime, the underlying engine that also supports two GP tools in ArcGIS 10.X and drives procedural symbology in ArcGIS Pro. The CityEngine SDK enables you as a 3rd party developer to integrate the procedural runtime in your own client applications (such as DCC or GIS applications), taking full advantage of the procedural core without running CityEngine or ArcGIS. CityEngine is then needed only to author the procedural modeling rules. Moreover, using the CityEngine SDK, you can extend CityEngine with additional import and export formats. This document gives an overview of the procedural runtime architecture, capabilities, its API, and usage. Esri R&D Center Zurich, Förrlibuckstr. 110, 8005 Zurich, Switzerland Copyright © 2013-2020 Esri, Inc. All rights reserved. The information contained in this document is the exclusive property of Environmental Systems Research Institute, Inc. This work is protected under United States copyright law and other international copyright treaties and conventions. No part of this work may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying and recording, or by any information storage or retrieval system, except as expressly permitted in writing by Environmental Systems Research Institute, Inc. All requests should be sent to Attention: Contracts Manager, Environmental Systems Research Institute, Inc., 380 New York Street, Redlands, CA 92373-8100 USA. The information contained in this document is subject to change without notice. U.S. GOVERNMENT RESTRICTED/LIMITED RIGHTS Any software, documentation, and/or data delivered hereunder is subject to the terms of the License Agreement. -

Procedural 3D Modeling and Visualization of Geotypical Bavarian Rural Buildings in Esri CityEngine Software

Technische Universität München Faculty of Civil, Geo and Environmental Engineering Department of Cartography Prof. Dr.-Ing. Liqiu Meng Procedural 3D modeling and visualization of geotypical Bavarian rural buildings in Esri CityEngine software Ieva Dobraja Master’s Thesis Submitted: January, 2015 Study Program: Cartography (Master) Supervisors: Dr.-Ing. Mathias Jahnke (TUM) Dr.-Ing. Özgür Ertac (Esri Deutschland GmbH) Cooperation: ESRI Deutschland GmbH 2015 Declaration of Authorship I hereby declare that the thesis submitted is my own unaided work. All direct or indirect sources used are acknowledged as references. This paper was not previously presented to another examination board and has not been published. Place and date (Signature) Abstract The aim of the Master’s Thesis “Procedural 3D modeling and visualization of geotypical Bavarian rural buildings in Esri CityEngine software” is to establish a 3D model library for rural Bavarian buildings and to create a 3D model of geotypical buildings for the case study area. The purpose of this thesis is to test whether the software CityEngine, which is based on procedural modeling and mainly used for creating 3D content of urban areas, can be applied to the 3D modeling of rural areas. The area of interest with typical Bavarian buildings chosen for this thesis was Seefeld, a municipality in the district of Starnberg, located southwest of Munich. It lies on the northern coast of the Pilsen Lake. The task of the thesis is to create a 3D model of geotypical Bavarian rural buildings for the case study area by using procedural modeling. This task includes four main steps. -

Maintaining Semantic Information Across Generic 3D Model Editing Operations

Research Collection Journal Article Maintaining semantic information across generic 3D model editing operations Author(s): Yao, Sidan; Ling, Xiao; Nueesch, Fiona; Schrotter, Gerhard; Schubiger, Simon; Fang, Zheng; Ma, Long; Tian, Zhen Publication Date: 2020-01 Permanent Link: https://doi.org/10.3929/ethz-b-000405041 Originally published in: Remote Sensing 12(2), http://doi.org/10.3390/rs12020335 Rights / License: Creative Commons Attribution 4.0 International This page was generated automatically upon download from the ETH Zurich Research Collection. For more information please consult the Terms of use. ETH Library remote sensing Article Maintaining Semantic Information across Generic 3D Model Editing Operations Sidan Yao 1, Xiao Ling 1, Fiona Nueesch 1, Gerhard Schrotter 2, Simon Schubiger 3,*, Zheng Fang 4, Long Ma 4 and Zhen Tian 5 1 ETH Zurich, Future Cities Laboratory, Singapore-ETH Centre, Singapore 138602, Singapore; [email protected] (S.Y.); [email protected] (X.L.); [email protected] (F.N.) 2 Geomatics Engineering (GeoZ) City of Zurich, 8022 Zurich, Switzerland; [email protected] 3 Esri R&D Center Zurich, 8005 Zurich, Switzerland 4 Nanyang Technological University, School of Computer Science and Engineering, Singapore 639798, Singapore; [email protected] (Z.F.); [email protected] (L.M.) 5 Department of Computer Science & Engineering, Ohio State University, Columbus, OH 43210, USA; [email protected] * Correspondence: [email protected] Received: 10 December 2019; Accepted: 15 January 2020; Published: 20 January 2020 Abstract: Many of today’s data models for 3D applications, such as City Geography Markup Language (CityGML) or Industry Foundation Classes (IFC), encode rich semantic information in addition to the traditional geometry and materials representation.