Evaluation of Mobile ARM-Based Socs for High Performance

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

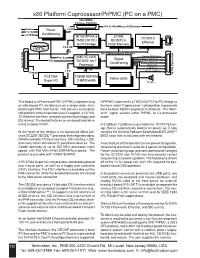

X86 Platform Coprocessor/Prpmc (PC on a PMC)

x86 Platform Coprocessor/PrPMC (PC on a PMC) 32b/33MHz PCI bus PN1/PN2 +5V to Kbd/Mouse/USB power Vcore Power +3.3V +2.5v Conditioning +3.3VIO 1K100 FPGA & 512KB 10/100TX TMS2250 PCI BIOS/PLA Ethernet RJ45 Compact to PCI bridge Flash Memory PLA I/O Flash site 8 32b/33MHz Internal PCI bus Analog SVGA Video Pwr Seq AMD SC2200 Signal COM 1 (RXD/TXD only) IDE "GEODE (tm)" Conditioning COM 2 (RXD/TXD only) Processor USB Port 1 Rear I/O PN4 I/O Rear 64 USB Port 2 LPC Keyboard/Mouse Floppy 36 Pin 36 Pin Connector PC87364 128MB SDRAM Status LEDs Super I/O (16MWx64b) PC Spkr This board is a Processor PMC (PrPMC) implementing A PrPMC implements a TMS2250 PCI-to-PCI bridge to an x86-based PC architecture on a single-wide, stan- the host, and a “Coprocessor” cofniguration implements dard height PMC form factor. This delivers a complete back-to-back 16550 compatible COM ports. The “Mon- x86 platform with comprehensive I/O support, a 10/100- arch” signal selects either PrPMC or Co-processor TX Ethernet interface, and disk access (both floppy and mode. IDE drives). The board features an on-board hard drive using Compact Flash. A 512Kbyte FLASH memory holds the 1K100 PLA im- age that is automatically loaded on power up. It also At the heart of the design is an Advanced Micro De- contains the General Software Embedded BIOS 2000™ vices SC2200 GEODE™ processor that integrates video, BIOS code that is included with each board. -

Convey Overview

THE WORLD’S FIRST HYBRID-CORE COMPUTER. CONVEY HYBRID-CORE COMPUTING Hybrid-core Computing Convey HC-1 High Performance of application- specific hardware Heterogenous solutions • can be much more efficient Performance/ • still hard to program Programmability and Power efficiency deployment ease of an x86 server Application Multicore solutions • don’t always scale well • parallel programming is hard Low Difficult Ease of Deployment Easy 1/22/2010 3 Hybrid-Core Computing Application-Specific Personalities Applications • Extend the x86 instruction set • Implement key operations in Convey Compilers hardware Life Sciences x86-64 ISA Custom ISA CAE Custom Financial Oil & Gas Shared Virtual Memory Cache-coherent, shared memory • Both ISAs address common memory *ISA: Instruction Set Architecture 7/12/2010 4 HC-1 Hardware PCI I/O FPGA FPGA Intel Personalities Chipset FPGA FPGA 8 GB/s 80 GB/s Memory Memory Cache Coherent, Shared Virtual Memory 1/22/2010 5 Using Personalities C/C++ Fortran • Personalities are user specifies reloadable personality at instruction sets Convey Software compile time Development Suite • Compiler instruction generates x86 descriptions and coprocessor instructions from Hybrid-Core Executable P ANSI standard x86-64 and Coprocessor Personalities C/C++ & Fortran Instructions • Executable can run on x86 nodes FPGA Convey HC-1 or Convey Hybrid- bitfiles Core nodes Intel x86 Coprocessor personality loaded at runtime by OS 1/22/2010 6 SYSTEM ARCHITECTURE HC-1 Architecture “Commodity” Intel Server Convey FPGA-based coprocessor Direct -

Accelerating HPL Using the Intel Xeon Phi 7120P Coprocessors

Accelerating HPL using the Intel Xeon Phi 7120P Coprocessors Authors: Saeed Iqbal and Deepthi Cherlopalle The Intel Xeon Phi Series can be used to accelerate HPC applications in the C4130. The highly parallel architecture on Phi Coprocessors can boost the parallel applications. These coprocessors work seamlessly with the standard Xeon E5 processors series to provide additional parallel hardware to boost parallel applications. A key benefit of the Xeon Phi series is that these don’t require redesigning the application, only compiler directives are required to be able to use the Xeon Phi coprocessor. Fundamentally, the Intel Xeon series are many-core parallel processors, with each core having a dedicated L2 cache. The cores are connected through a bi-directional ring interconnects. Intel offers a complete set of development, performance monitoring and tuning tools through its Parallel Studio and VTune. The goal is to enable HPC users to get advantage from the parallel hardware with minimal changes to the code. The Xeon Phi has two modes of operation, the offload mode and native mode. In the offload mode designed parts of the application are “offloaded” to the Xeon Phi, if available in the server. Required code and data is copied from a host to the coprocessor, processing is done parallel in the Phi coprocessor and results move back to the host. There are two kinds of offload modes, non-shared and virtual-shared memory modes. Each offload mode offers different levels of user control on data movement to and from the coprocessor and incurs different types of overheads. In the native mode, the application runs on both host and Xeon Phi simultaneously, communication required data among themselves as need. -

GPU Developments 2018

GPU Developments 2018 2018 GPU Developments 2018 © Copyright Jon Peddie Research 2019. All rights reserved. Reproduction in whole or in part is prohibited without written permission from Jon Peddie Research. This report is the property of Jon Peddie Research (JPR) and made available to a restricted number of clients only upon these terms and conditions. Agreement not to copy or disclose. This report and all future reports or other materials provided by JPR pursuant to this subscription (collectively, “Reports”) are protected by: (i) federal copyright, pursuant to the Copyright Act of 1976; and (ii) the nondisclosure provisions set forth immediately following. License, exclusive use, and agreement not to disclose. Reports are the trade secret property exclusively of JPR and are made available to a restricted number of clients, for their exclusive use and only upon the following terms and conditions. JPR grants site-wide license to read and utilize the information in the Reports, exclusively to the initial subscriber to the Reports, its subsidiaries, divisions, and employees (collectively, “Subscriber”). The Reports shall, at all times, be treated by Subscriber as proprietary and confidential documents, for internal use only. Subscriber agrees that it will not reproduce for or share any of the material in the Reports (“Material”) with any entity or individual other than Subscriber (“Shared Third Party”) (collectively, “Share” or “Sharing”), without the advance written permission of JPR. Subscriber shall be liable for any breach of this agreement and shall be subject to cancellation of its subscription to Reports. Without limiting this liability, Subscriber shall be liable for any damages suffered by JPR as a result of any Sharing of any Material, without advance written permission of JPR. -

Mali-400 MP: a Scalable GPU for Mobile Devices

Mali-400 MP: A Scalable GPU for Mobile Devices Tom Olson Director, Graphics Research, ARM Outline . ARM and Mobile Graphics . Design Constraints for Mobile GPUs . Mali Architecture Overview . Multicore Scaling in Mali-400 MP . Results 2 About ARM . World’s leading supplier of semiconductor IP . Processor Architectures and Implementations . Related IP: buses, caches, debug & trace, physical IP . Software tools and infrastructure . Business Model . License fees . Per-chip royalties . Graphics at ARM . Acquired Falanx in 2006 . ARM Mali is now the world’s most widely licensed GPU family 3 Challenges for Mobile GPUs . Size . Power . Memory Bandwidth 4 More Challenges . Graphics is going into “anything that has a screen” . Mobile . Navigation . Set Top Box/DTV . Automotive . Video telephony . Cameras . Printers . Huge range of form factors, screen sizes, power budgets, and performance requirements . In some applications, a huge difference between peak and average performance requirements 5 Solution: Scalability . Address a wide variety of performance points and applications with a single IP and a single software stack. Need static scalability to adapt to different peak requirements in different platforms / markets . Need dynamic scalability to reduce power when peak performance isn’t needed 6 Options for Scalability . Fine-grained: Multiple pipes, wide SIMD, etc . Proven approach, efficient and effective . But, adding pipes / lanes is invasive . Hard for IP licensees to do on their own . And, hard to partition to provide dynamic scalability . Coarse-grained: Multicore . Easy for licensees to select desired performance . Putting cores on separate power islands allows dynamic scaling 7 Mali 400-MP Top Level Architecture Asynch Mali-400 MP Top-Level APB Geometry Pixel Processor Pixel Processor Pixel Processor Pixel Processor Processor #1 #2 #3 #4 CLKs MaliMMUs RESETs IRQs IDLEs MaliL2 AXI . -

Intel Cirrascale and Petrobras Case Study

Case Study Intel® Xeon Phi™ Coprocessor Intel® Xeon® Processor E5 Family Big Data Analytics High-Performance Computing Energy Accelerating Energy Exploration with Intel® Xeon Phi™ Coprocessors Cirrascale delivers scalable performance by combining its innovative PCIe switch riser with Intel® processors and coprocessors To find new oil and gas reservoirs, organizations are focusing exploration in the deep sea and in complex geological formations. As energy companies such as Petrobras work to locate and map those reservoirs, they need powerful IT resources that can process multiple iterations of seismic models and quickly deliver precise results. IT solution provider Cirrascale began building systems with Intel® Xeon Phi™ coprocessors to provide the scalable performance Petrobras and other customers need while holding down costs. Challenges • Enable deep-sea exploration. Improve reservoir mapping accuracy with detailed seismic processing. • Accelerate performance of seismic applications. Speed time to results while controlling costs. • Improve scalability. Enable server performance and density to scale as data volumes grow and workloads become more demanding. Solution • Custom Cirrascale servers with Intel Xeon Phi coprocessors. Employ new compute blades with the Intel® Xeon® processor E5 family and Intel Xeon Phi coprocessors. Cirrascale uses custom PCIe switch risers for fast, peer-to-peer communication among coprocessors. Technology Results • Linear scaling. Performance increases linearly as Intel Xeon Phi coprocessors “Working together, the are added to the system. Intel® Xeon® processors • Simplified development model. Developers no longer need to spend time optimizing data placement. and Intel® Xeon Phi™ coprocessors help Business Value • Faster, better analysis. More detailed and accurate modeling in less time HPC applications shed improves oil and gas exploration. -

Intel Desktop Board DG41MJ Product Guide

Intel® Desktop Board DG41MJ Product Guide Order Number: E59138-001 Revision History Revision Revision History Date -001 First release of the Intel® Desktop Board DG41MJ Product Guide February 2009 If an FCC declaration of conformity marking is present on the board, the following statement applies: FCC Declaration of Conformity This device complies with Part 15 of the FCC Rules. Operation is subject to the following two conditions: (1) this device may not cause harmful interference, and (2) this device must accept any interference received, including interference that may cause undesired operation. For questions related to the EMC performance of this product, contact: Intel Corporation, 5200 N.E. Elam Young Parkway, Hillsboro, OR 97124 1-800-628-8686 This equipment has been tested and found to comply with the limits for a Class B digital device, pursuant to Part 15 of the FCC Rules. These limits are designed to provide reasonable protection against harmful interference in a residential installation. This equipment generates, uses, and can radiate radio frequency energy and, if not installed and used in accordance with the instructions, may cause harmful interference to radio communications. However, there is no guarantee that interference will not occur in a particular installation. If this equipment does cause harmful interference to radio or television reception, which can be determined by turning the equipment off and on, the user is encouraged to try to correct the interference by one or more of the following measures: • Reorient or relocate the receiving antenna. • Increase the separation between the equipment and the receiver. • Connect the equipment to an outlet on a circuit other than the one to which the receiver is connected. -

UNIT 8B a Full Adder

UNIT 8B Computer Organization: Levels of Abstraction 15110 Principles of Computing, 1 Carnegie Mellon University - CORTINA A Full Adder C ABCin Cout S in 0 0 0 A 0 0 1 0 1 0 B 0 1 1 1 0 0 1 0 1 C S out 1 1 0 1 1 1 15110 Principles of Computing, 2 Carnegie Mellon University - CORTINA 1 A Full Adder C ABCin Cout S in 0 0 0 0 0 A 0 0 1 0 1 0 1 0 0 1 B 0 1 1 1 0 1 0 0 0 1 1 0 1 1 0 C S out 1 1 0 1 0 1 1 1 1 1 ⊕ ⊕ S = A B Cin ⊕ ∧ ∨ ∧ Cout = ((A B) C) (A B) 15110 Principles of Computing, 3 Carnegie Mellon University - CORTINA Full Adder (FA) AB 1-bit Cout Full Cin Adder S 15110 Principles of Computing, 4 Carnegie Mellon University - CORTINA 2 Another Full Adder (FA) http://students.cs.tamu.edu/wanglei/csce350/handout/lab6.html AB 1-bit Cout Full Cin Adder S 15110 Principles of Computing, 5 Carnegie Mellon University - CORTINA 8-bit Full Adder A7 B7 A2 B2 A1 B1 A0 B0 1-bit 1-bit 1-bit 1-bit ... Cout Full Full Full Full Cin Adder Adder Adder Adder S7 S2 S1 S0 AB 8 ⁄ ⁄ 8 C 8-bit C out FA in ⁄ 8 S 15110 Principles of Computing, 6 Carnegie Mellon University - CORTINA 3 Multiplexer (MUX) • A multiplexer chooses between a set of inputs. D1 D 2 MUX F D3 D ABF 4 0 0 D1 AB 0 1 D2 1 0 D3 1 1 D4 http://www.cise.ufl.edu/~mssz/CompOrg/CDAintro.html 15110 Principles of Computing, 7 Carnegie Mellon University - CORTINA Arithmetic Logic Unit (ALU) OP 1OP 0 Carry In & OP OP 0 OP 1 F 0 0 A ∧ B 0 1 A ∨ B 1 0 A 1 1 A + B http://cs-alb-pc3.massey.ac.nz/notes/59304/l4.html 15110 Principles of Computing, 8 Carnegie Mellon University - CORTINA 4 Flip Flop • A flip flop is a sequential circuit that is able to maintain (save) a state. -

Exploiting Free Silicon for Energy-Efficient Computing Directly

Exploiting Free Silicon for Energy-Efficient Computing Directly in NAND Flash-based Solid-State Storage Systems Peng Li Kevin Gomez David J. Lilja Seagate Technology Seagate Technology University of Minnesota, Twin Cities Shakopee, MN, 55379 Shakopee, MN, 55379 Minneapolis, MN, 55455 [email protected] [email protected] [email protected] Abstract—Energy consumption is a fundamental issue in today’s data A well-known solution to the memory wall issue is moving centers as data continue growing dramatically. How to process these computing closer to the data. For example, Gokhale et al [2] data in an energy-efficient way becomes more and more important. proposed a processor-in-memory (PIM) chip by adding a processor Prior work had proposed several methods to build an energy-efficient system. The basic idea is to attack the memory wall issue (i.e., the into the main memory for computing. Riedel et al [9] proposed performance gap between CPUs and main memory) by moving com- an active disk by using the processor inside the hard disk drive puting closer to the data. However, these methods have not been widely (HDD) for computing. With the evolution of other non-volatile adopted due to high cost and limited performance improvements. In memories (NVMs), such as phase-change memory (PCM) and spin- this paper, we propose the storage processing unit (SPU) which adds computing power into NAND flash memories at standard solid-state transfer torque (STT)-RAM, researchers also proposed to use these drive (SSD) cost. By pre-processing the data using the SPU, the data NVMs as the main memory for data-intensive applications [10] to that needs to be transferred to host CPUs for further processing improve the system energy-efficiency. -

Power Measurement Tutorial for the Green500 List

Power Measurement Tutorial for the Green500 List R. Ge, X. Feng, H. Pyla, K. Cameron, W. Feng June 27, 2007 Contents 1 The Metric for Energy-Efficiency Evaluation 1 2 How to Obtain P¯(Rmax)? 2 2.1 The Definition of P¯(Rmax)...................................... 2 2.2 Deriving P¯(Rmax) from Unit Power . 2 2.3 Measuring Unit Power . 3 3 The Measurement Procedure 3 3.1 Equipment Check List . 4 3.2 Software Installation . 4 3.3 Hardware Connection . 4 3.4 Power Measurement Procedure . 5 4 Appendix 6 4.1 Frequently Asked Questions . 6 4.2 Resources . 6 1 The Metric for Energy-Efficiency Evaluation This tutorial serves as a practical guide for measuring the computer system power that is required as part of a Green500 submission. It describes the basic procedures to be followed in order to measure the power consumption of a supercomputer. A supercomputer that appears on The TOP500 List can easily consume megawatts of electric power. This power consumption may lead to operating costs that exceed acquisition costs as well as intolerable system failure rates. In recent years, we have witnessed an increasingly stronger movement towards energy-efficient computing systems in academia, government, and industry. Thus, the purpose of the Green500 List is to provide a ranking of the most energy-efficient supercomputers in the world and serve as a complementary view to the TOP500 List. However, as pointed out in [1, 2], identifying a single objective metric for energy efficiency in supercom- puters is a difficult task. Based on [1, 2] and given the already existing use of the “performance per watt” metric, the Green500 List uses “performance per watt” (PPW) as its metric to rank the energy efficiency of supercomputers. -

CUDA What Is GPGPU

What is GPGPU ? • General Purpose computation using GPU in applications other than 3D graphics CUDA – GPU accelerates critical path of application • Data parallel algorithms leverage GPU attributes – Large data arrays, streaming throughput Slides by David Kirk – Fine-grain SIMD parallelism – Low-latency floating point (FP) computation • Applications – see //GPGPU.org – Game effects (FX) physics, image processing – Physical modeling, computational engineering, matrix algebra, convolution, correlation, sorting Previous GPGPU Constraints CUDA • Dealing with graphics API per thread per Shader Input Registers • “Compute Unified Device Architecture” – Working with the corner cases per Context • General purpose programming model of the graphics API Fragment Program Texture – User kicks off batches of threads on the GPU • Addressing modes Constants – GPU = dedicated super-threaded, massively data parallel co-processor – Limited texture size/dimension Temp Registers • Targeted software stack – Compute oriented drivers, language, and tools Output Registers • Shader capabilities • Driver for loading computation programs into GPU FB Memory – Limited outputs – Standalone Driver - Optimized for computation • Instruction sets – Interface designed for compute - graphics free API – Data sharing with OpenGL buffer objects – Lack of Integer & bit ops – Guaranteed maximum download & readback speeds • Communication limited – Explicit GPU memory management – Between pixels – Scatter a[i] = p 1 Parallel Computing on a GPU Extended C • Declspecs • NVIDIA GPU Computing Architecture – global, device, shared, __device__ float filter[N]; – Via a separate HW interface local, constant __global__ void convolve (float *image) { – In laptops, desktops, workstations, servers GeForce 8800 __shared__ float region[M]; ... • Keywords • 8-series GPUs deliver 50 to 200 GFLOPS region[threadIdx] = image[i]; on compiled parallel C applications – threadIdx, blockIdx • Intrinsics __syncthreads() – __syncthreads .. -

Towards Better Performance Per Watt in Virtual Environments on Asymmetric Single-ISA Multi-Core Systems

Towards Better Performance Per Watt in Virtual Environments on Asymmetric Single-ISA Multi-core Systems Viren Kumar Alexandra Fedorova Simon Fraser University Simon Fraser University 8888 University Dr 8888 University Dr Vancouver, Canada Vancouver, Canada [email protected] [email protected] ABSTRACT performance per watt than homogeneous multicore proces- Single-ISA heterogeneous multicore architectures promise to sors. As power consumption in data centers becomes a grow- deliver plenty of cores with varying complexity, speed and ing concern [3], deploying ASISA multicore systems is an performance in the near future. Virtualization enables mul- increasingly attractive opportunity. These systems perform tiple operating systems to run concurrently as distinct, in- at their best if application workloads are assigned to het- dependent guest domains, thereby reducing core idle time erogeneous cores in consideration of their runtime proper- and maximizing throughput. This paper seeks to identify a ties [4][13][12][18][24][21]. Therefore, understanding how to heuristic that can aid in intelligently scheduling these vir- schedule data-center workloads on ASISA systems is an im- tualized workloads to maximize performance while reducing portant problem. This paper takes the first step towards power consumption. understanding the properties of data center workloads that determine how they should be scheduled on ASISA multi- We propose that the controlling domain in a Virtual Ma- core processors. Since virtual machine technology is a de chine Monitor or hypervisor is relatively insensitive to changes facto standard for data centers, we study virtual machine in core frequency, and thus scheduling it on a slower core (VM) workloads. saves power while only slightly affecting guest domain per- formance.