When Less Is More: Data and Power in Advertising Experiments

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Terminology of Retail Pricing

Retail pricing terminology defined for “Calculating Markup: A Merchandising Tool”.
Billed cost of goods: gross wholesale cost of goods after deduction for trade and quantity discounts but before cash discounts are calculated; invoiced cost of goods
Competition: firms, organizations or retail formats with which the retailer must compete for business and the same target consumers in the marketplace
Industry: group of firms which offer products that are identical, similar, or close substitutes of each other
Market: products/services which seek to satisfy the same consumer need or serve the same customer group
Cost: wholesale, billed cost, invoiced cost charged by vendor for merchandise purchased by retailer
Discounts: at retail, price reduction in the current retail price of goods (i.e., customer allowance and returns, employee discounts)
Customer Allowances and Returns: reduction, usually after the completion of sale, in the retail price due to soiled, damaged, or incorrect style, color, size of merchandise
Employee Discounts: reduction in price on employee purchases; an employee benefit and incentive for employee to become familiar with stock
Discounts: at manufacturing level, a reduction in cost allowed by the vendor
Cash Discount: predetermined discount percentage deductible from invoiced cost or billed cost of goods on invoice if invoice is paid on or before the designated payment date
Quantity Discount: discount given to retailer based on quantity of purchase bought of specific product classification or

Why Is Sales and Marketing Alignment Important in the Age of the Customer? IT’S SALES and MARKETING, NOT SALES Vs

Why is Sales and Marketing Alignment Important in the Age of the Customer? IT’S SALES and MARKETING, NOT SALES vs. MARKETING. By Cate Gutowski, Vice President, GE Commercial Sales & digitalTHREAD. In my role at GE, I lead our Sales function and drive the digital transformation of our Sales force. As I work inside the company with a variety of business units, and I spend time with other firms outside of GE to learn about new best practices, one of the opportunities that I see is the untapped value that affects many organizations: creating alignment between Sales and Marketing. There’s an “and” between those two words for a reason. Too often, it feels like it can be Sales vs. Marketing, but the truth is that neither can succeed without the other. For GE, this alignment is especially important, because we are undergoing the transformation from an Industrial company to a Digital Industrial one. We recognize that in order to sell digital, we have to be digital. To achieve this goal, we all have to work together and put the customer first in everything that we do. While we’ve made great progress in this endeavor, there is always room for improvement. I’ll share some of what I’ve learned on our journey thus far: Let marketing be our guide. Our Marketing teams are the intelligence arm of our organization. They can see the future. Marketing can see what’s around the corner: they know where the biggest opportunities are located and where the hidden profit pools reside.

8 Challenges of Sales and Marketing Alignment

Guide: 8 Challenges of Sales and Marketing Alignment. Introduction: Sales and marketing teams have an interesting relationship. In today’s selling environment, where customers expect a personalized interaction every time, sales simply cannot exist without marketing. According to Forrester research, 78% of executive buyers claim that salespeople do not have relevant examples or case studies to share with them. Clearly, cold calls and impersonal emails aren’t cutting it anymore, and sales must rely on the content produced by marketing to make sales conversations more valuable for prospects. And it goes without saying that marketing wouldn’t have much of a job to do without sales; marketing collateral would be lost and lonely without anyone to share it with. While these symbiotic teams are very closely entwined, it doesn’t mean that their relationship is flawless. In fact, because of their closeness, these two can have some difficulty seeing eye to eye. Especially when it comes to content creation and dispersion, there are a number of challenges that arise when trying to align sales and marketing. But there are ways to combat these challenges that will lead to even better sales and marketing alignment, allowing these two teams to function as one synergistic entity. Below are eight of the most common pain points that marketing and sales teams often report as a result of misaligned sales and marketing processes and priorities, and surefire ways to bring the two teams back together. Marketing Challenges: Many of marketing’s challenges have to do with helping sales function flawlessly. But the main function of marketing is not just to make the sales team’s job easier; marketing has its own responsibilities and goals that are independent of sales support.

Dynamic Pricing: Building an Advantage in B2B Sales

Pricing leaders use volatility to their advantage, capturing opportunities in market fluctuations. By Ron Kermisch, David Burns and Chuck Davenport. Ron Kermisch and David Burns are partners with Bain & Company’s Customer Strategy & Marketing practice. Ron is a leader of Bain’s pricing work, and David is an expert in building pricing capabilities. Chuck Davenport is an expert vice principal specializing in pricing. They are based, respectively, in Boston, Chicago and Atlanta. The authors would like to thank Nate Hamilton, a principal in Boston; Monica Oliver, a manager in Boston; and Paulina Celedon, a consultant in Atlanta, for their contributions to this work. Copyright © 2019 Bain & Company, Inc. All rights reserved. At a Glance: Nimble pricing behavior from Amazon and other online sellers has raised the imperative for everyone else to develop dynamic pricing capabilities. But dynamic pricing is more than just a defensive action. Pricing leaders use volatility to their advantage, capturing opportunities in market fluctuations and forcing competitors to chase their pricing moves. Building better pricing capabilities is about more than improving processes, technology and communication. Pricing leadership requires improving your understanding of customer needs, competitors’ behavior and market economics. Dynamic pricing is not a new strategy. For decades, companies in travel and transportation have systematically set and modified prices based on shifting market and customer factors. Anyone who buys plane tickets should be familiar with this type of dynamic pricing, but what about other industries? Does dynamic pricing have a role? Increasingly, the answer is yes.

The Impact of Sexuality in the Media

Pittsburg State University Pittsburg State University Digital Commons Electronic Thesis Collection 11-2013 The impact of sexuality in the media Kasey Jean Hockman Follow this and additional works at: https://digitalcommons.pittstate.edu/etd Part of the Communication Commons Recommended Citation Hockman, Kasey Jean, "The impact of sexuality in the media" (2013). Electronic Thesis Collection. 126. https://digitalcommons.pittstate.edu/etd/126 This Thesis is brought to you for free and open access by Pittsburg State University Digital Commons. It has been accepted for inclusion in Electronic Thesis Collection by an authorized administrator of Pittsburg State University Digital Commons. For more information, please contact [email protected]. THE IMPACT OF SEXUALITY IN THE MEDIA A Thesis Submitted to the Graduate School in Partial Fulfillment of the Requirements for the degree of Master of Arts Kasey Jean Hockman Pittsburg State University Pittsburg, Kansas December 2013 THE IMPACT OF SEXUALITY IN THE MEDIA Kasey Hockman APPROVED Thesis Advisor: Dr. Alicia Mason, Department of Communication; Committee Member: Dr. Joey Pogue, Department of Communication; Committee Member: Dr. Harriet Bachner, Department of Psychology and Counseling. THE THESIS PROCESS FOR A GRADUATE STUDENT ATTENDING PITTSBURG STATE UNIVERSITY An Abstract of the Thesis by Kasey Jean Hockman The overall goal of this study was to determine three things: 1. Does sexuality in the media appear to have a negative effect on participants’ self-concept in terms of body image, 2. Does the nature of the content as sexually implicit or sexually explicit material contribute to negative self-concepts, in terms of body image, and 3.

The Power of Points: Strategies for Making Loyalty Programs Work

Consumer and Shopper Insights, April 2013. The Power of Points: Strategies for making loyalty programs work. By Liz Hilton Segel, Phil Auerbach and Ido Segev. Americans love loyalty cards. Three-quarters of households are enrolled in at least one frequent customer account, such as airline miles, hotel points and grocery cards. Many shoppers have a whole stack of cards to their name – the average household has signed up for no less than 18 memberships. And while participation in loyalty programs has been growing over the past six years, usage of them hasn’t. (Exhibit 2) Most loyalty cards never actually get used at all. American households are active in less than 50 percent of the programs they have signed up for. What’s worse, most of these programs, even when used, are not driving sales or boosting customer satisfaction. Fewer than 20 percent of loyalty members say their memberships are influential in purchasing decisions and only 33 percent of loyalty customers feel that those programs are addressing their needs.

[Exhibit 1: On average, US households are now enrolled in over 18 loyalty programs; although financial services, airline, and specialty retail are the largest, enrollment in other sectors is substantial as well. Panels: average membership per US household; US household loyalty membership by sector (2010 programs per household and 2008-2010 CAGR).]

The True Value of Pricing: From Pricing Strategy to Sales Excellence

The True Value of Pricing: From pricing strategy to sales excellence. The challenge of value in price management.

The world’s current political, economic and social scenario poses major challenges, including the political uncertainties brought by the intensification of nationalist speeches and the increase of barriers to foreign trade. On the business side, the economic recession has affected organizations which, in order to continue to optimize earnings, need to take into consideration drivers such as Gross Domestic Product unpredictability, high inflation, interest hikes and fiscal adjustments, besides unemployment growth, and the customers’ higher indebtedness and reduced income resulting from that. At the same time, the integration of the ...

In this context, price management becomes a powerful advantage to guide organizations throughout the changes, to boost their performance and to maximize their earnings. With regard to strategic alignment, the concept of Pricing is particularly relevant in the business world as it consolidates itself as a charging practice intended to capture the highest value from each customer by addressing issues from strategy to sales excellence, and aimed to maximizing profitability. “The True Value of Pricing – From pricing strategy to sales excellence” presents data about how companies from different ...

In order to define a pricing strategy aligned with the organization’s goals, the Pricing function must take into consideration not only economic trends but also product differences and specificities in consumer habits in each region. This is the case of the end-of-the-month discount policy, quite common in the Brazilian market, and that has a direct impact on the pricing and the margin of each product. Even though many organizations see Pricing as a back office function, there is a trend in Brazil to boost its importance and its reach from a strategic point of view, while differencing customer segments.

Customer Loyalty Marketing Strategies

Essential Strategies for Customer Loyalty Marketing. Ignite Guide. An eleven-minute read.

Introduction: Deepening customer relationships is key to unlocking revenue potential. Loyal customers spend more.¹ That fact alone should be reason enough for brands to leverage loyalty marketing programs. But the benefits extend far beyond increased revenue: loyalty programs help brands increase customer retention, customer lifetime value (CLTV), brand awareness, and customer satisfaction. They also provide companies with greater opportunities to capture rich first-party customer data. This data not only powers personalized customer experiences but allows for better, more-informed business decisions.

In short, loyalty marketing programs should be an essential part of every company’s customer acquisition and retention strategy. But many companies are unsure how to launch a loyalty initiative. From choosing the right type of program to determining the level of investment to figuring out how to promote it to the audience, customer loyalty marketing is a big undertaking, but a worthwhile one. When brands take the necessary steps to launch a program that resonates with their customers and engages with them across all touchpoints, it creates an opportunity to become more customer-focused and omnichannel.

This guide will help marketing leaders responsible for loyalty, branding, customer relationships, and customer retention and acquisition to better understand how to deepen customer relationships through loyalty marketing, and how to launch and optimize loyalty programs.

What’s inside? Customer loyalty marketing, defined; Build your foundation; Five steps to get started in customer loyalty marketing; The power of customer loyalty marketing; Use Oracle to form deeper connections with your customers.

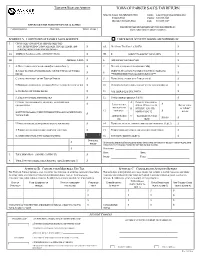

Sales and Use Tax Return and Instructions

TOWN OF PARKER SALES TAX RETURN
TAXPAYER NAME AND ADDRESS
MAIL TO: SALES TAX ADMINISTRATION, PO BOX 5602, DENVER, CO 80217-5602
EMAIL: [email protected] PHONE: 303.805.3228 FAX: 303.805.3219
RETURN MUST BE FILED EVEN IF LINE 16 IS ZERO
THIS RETURN MAY BE FILED AND PAID OVER THE INTERNET AT: WWW.PARKERONLINE.ORG/FILEANDPAY
PERIOD COVERED / DUE DATE / TOWN LICENSE #
CHECK BOX IF ACCOUNT CHANGES ARE NOTED BELOW

SCHEDULE A – COMPUTATION OF TAXABLE SALES & SERVICE
1. GROSS SALES AND SERVICES: (BEFORE SALES TAX) MUST BE REPORTED INCLUDING ALL SALES, RENTALS, LEASES, AND SERVICES, BOTH TAXABLE AND NON-TAXABLE $
2A. ADDITIONS TO GROSS SALES (SEE INSTRUCTIONS) $
2B. ADD LINES 1 & 2A $
3. A. NON-TAXABLE SERVICES OR LABOR (INCLUDED IN LINE 1) $
B. SALES TO OTHER LICENSED DEALERS FOR THE PURPOSE OF TAXABLE RESALE $
C. SALES SHIPPED OUT OF THE TOWN OF PARKER $
D. BAD DEBTS CHARGED OFF (ON WHICH TOWN TAX WAS PREVIOUSLY PAID) $
E. TRADE-INS FOR TAXABLE RESALE $
F. SALES OF GASOLINE AND CIGARETTES $
G.

5A. NET SALES TAX (LINE 4 X 3.00%) $
5B. $_____________ SUBJECT TO LODGING TAX X 3.00% $
6. ADD: EXCESS TAX COLLECTED $
7. NET ADJUSTED SALES TAX (ADD LINES 5&6) $
8. DEDUCT 3.33% OF LINE 7 (ENTER 0 IF RETURN IS FILED LATE) ***MAXIMUM DEDUCTION ALLOWED IS $200.00*** $
9. TOTAL SALES TAX DUE (LINE 7 MINUS LINE 8) $
10. CONSTRUCTION MATERIALS SUBJECT TO USE TAX (SCHEDULE B) $
11. USE TAX DUE (LINE 10 X 3.00%) $
12. TOTAL TAX DUE (ADD LINES 9 & 11) $
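The arithmetic on lines 5A through 12 of the return above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `compute_return` and its parameters are hypothetical, line 4 (whose definition is cut off in this excerpt) is taken as a given input, "ADD LINES 5&6" is read as 5A + 5B + 6, and rounding to the cent with half-up rounding is an assumption the form does not state.

```python
from decimal import Decimal, ROUND_HALF_UP

TAX_RATE = Decimal("0.03")           # 3.00%, as printed on lines 5A, 5B and 11
VENDOR_FEE_RATE = Decimal("0.0333")  # 3.33% deduction printed on line 8
VENDOR_FEE_CAP = Decimal("200.00")   # maximum deduction printed on line 8


def to_cents(amount: Decimal) -> Decimal:
    """Round to whole cents (half-up rounding is an assumption)."""
    return amount.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def compute_return(line4, lodging_base="0", line6="0", line10="0", filed_late=False):
    """Hypothetical helper: derive lines 5A through 12 from the filer's inputs."""
    line4, lodging_base, line6, line10 = (
        Decimal(str(value)) for value in (line4, lodging_base, line6, line10)
    )
    line5a = to_cents(line4 * TAX_RATE)         # net sales tax
    line5b = to_cents(lodging_base * TAX_RATE)  # lodging tax
    line7 = line5a + line5b + to_cents(line6)   # assumes "5&6" means 5A + 5B + 6
    if filed_late:
        line8 = Decimal("0.00")                 # no deduction on a late return
    else:
        line8 = min(to_cents(line7 * VENDOR_FEE_RATE), VENDOR_FEE_CAP)
    line9 = line7 - line8                       # total sales tax due
    line11 = to_cents(line10 * TAX_RATE)        # use tax due
    line12 = line9 + line11                     # total tax due
    return {"5A": line5a, "5B": line5b, "7": line7, "8": line8,
            "9": line9, "11": line11, "12": line12}
```

For example, with 10,000.00 on line 4 and 1,000.00 on line 10, filed on time, the sketch gives 9.99 on line 8 and 320.01 on line 12. Decimal arithmetic is used instead of floats so that cent amounts stay exact.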

Advertising "In These Times:" How Historical Context Influenced Advertisements for Willa Cather's Fiction

University of Nebraska - Lincoln DigitalCommons@University of Nebraska - Lincoln Dissertations, Theses, and Student Research: English, Department of Department of English Spring 5-2014 Advertising "In These Times:" How Historical Context Influenced Advertisements for Willa Cather's Fiction Erika K. Hamilton University of Nebraska-Lincoln Follow this and additional works at: http://digitalcommons.unl.edu/englishdiss Part of the American Literature Commons Hamilton, Erika K., "Advertising "In These Times:" How Historical Context Influenced Advertisements for Willa Cather's Fiction" (2014). Dissertations, Theses, and Student Research: Department of English. 87. http://digitalcommons.unl.edu/englishdiss/87 This Article is brought to you for free and open access by the English, Department of at DigitalCommons@University of Nebraska - Lincoln. It has been accepted for inclusion in Dissertations, Theses, and Student Research: Department of English by an authorized administrator of DigitalCommons@University of Nebraska - Lincoln. ADVERTISING “IN THESE TIMES:” HOW HISTORICAL CONTEXT INFLUENCED ADVERTISEMENTS FOR WILLA CATHER’S FICTION by Erika K. Hamilton A DISSERTATION Presented to the Faculty of The Graduate College at the University of Nebraska In Partial Fulfillment of Requirements For the Degree of Doctor of Philosophy Major: English Under the Supervision of Professor Guy Reynolds Lincoln, Nebraska May, 2014 ADVERTISING “IN THESE TIMES:” HOW HISTORICAL CONTEXT INFLUENCED ADVERTISEMENTS FOR WILLA CATHER’S FICTION Erika K. Hamilton, Ph.D. University of Nebraska, 2014 Adviser: Guy Reynolds Willa Cather’s novels were published during a time of upheaval. In the three decades between Alexander’s Bridge and Sapphira and the Slave Girl, America’s optimism, social mores, culture, literature and advertising trends were shaken and changed by World War One, the “Roaring Twenties,” and the Great Depression.

Debt Relief Services & the Telemarketing Sales Rule

DEBT RELIEF SERVICES & THE TELEMARKETING SALES RULE: A Guide for Business. Federal Trade Commission | ftc.gov

Many Americans struggle to pay their credit card bills. Some turn to businesses offering “debt relief services” – for-profit companies that say they can renegotiate what consumers owe or get their interest rates reduced. The Federal Trade Commission (FTC), the nation’s consumer protection agency, has amended the Telemarketing Sales Rule (TSR) to add specific provisions to curb deceptive and abusive practices associated with debt relief services. One key change is that many more businesses will now be subject to the TSR. Debt relief companies that use telemarketing to contact potential customers or hire someone to call people on their behalf have always been covered by the TSR. The new Rule expands the scope to cover not only outbound calls – calls you place to potential customers – but in-bound calls as well – calls they place to you in response to advertisements and other solicitations. If your business is involved in debt relief services, here are three key principles of the new Rule:

● It’s illegal to charge upfront fees. You can’t collect any fees from a customer before you have settled or otherwise resolved the consumer’s debts. If you renegotiate a customer’s debts one after the other, you can collect a fee for each debt you’ve renegotiated, but you can’t front-load payments. You can require customers to set aside money in a dedicated account for your fees and for payments to creditors and debt collectors, but the new Rule places restrictions on those accounts to make sure customers are protected.

Sales and Use Tax Exemptions

West Virginia State Tax TSD-300 (Rev. March 2018) SALES AND USE TAX EXEMPTIONS

The West Virginia sales and use tax laws contain many exemptions from the tax. The same exemptions apply to municipal sales and use taxes. This publication provides general information. It is not a substitute for tax laws and legislative regulations. It does not discuss special rules regarding sales of gasoline and special fuels.

GENERAL PRINCIPLES
• When discussing sales/use tax exemptions, it is important to consider the following general principles:
➢ All sales of tangible personal property or taxable services are presumed to be subject to tax.
➢ Tax must be collected unless a specific exemption applies to the transaction and proper documentation of the exempt status of the transaction is established by the purchaser and retained by the seller.
➢ Most individuals, businesses and organizations must pay tax on their purchases.
➢ The burden of proving that a transaction is exempt is on the person claiming the exemption and the vendor making the sale.
➢ Vendors who fail to collect and remit sales tax on taxable transactions or who fail to maintain proper records and documents with respect to taxable transactions are personally liable for payment of the amount of tax.
➢ Intentional disregard of the sales/use tax law or regulations is a serious matter and will result in monetary fines or criminal penalties.
• For a more complete discussion regarding West Virginia sales tax responsibilities, see Publication TSD-407.
• There are several distinct types of exemptions and prescribed methods by which exemptions must be claimed. Failure to follow the proper method in claiming an exemption may result in a transaction being taxable.