Computational Modelling and Analysis of Vibrato and Portamento in Expressive Music Performance

Recommended publications

Of Gods and Monsters: Signification in Franz Waxman's Film Score Bride of Frankenstein

This is a repository copy of Of Gods and Monsters: Signification in Franz Waxman’s film score Bride of Frankenstein. White Rose Research Online URL for this paper: http://eprints.whiterose.ac.uk/118268/ Version: Accepted Version Article: McClelland, C (Cover date: 2014) Of Gods and Monsters: Signification in Franz Waxman’s film score Bride of Frankenstein. Journal of Film Music, 7 (1). pp. 5-19. ISSN 1087-7142 https://doi.org/10.1558/jfm.27224 © Copyright the International Film Music Society, published by Equinox Publishing Ltd 2017, This is an author produced version of a paper published in the Journal of Film Music. Uploaded in accordance with the publisher's self-archiving policy. Reuse Items deposited in White Rose Research Online are protected by copyright, with all rights reserved unless indicated otherwise. They may be downloaded and/or printed for private study, or other acts as permitted by national copyright laws. The publisher or other rights holders may allow further reproduction and re-use of the full text version. This is indicated by the licence information on the White Rose Research Online record for the item. Takedown If you consider content in White Rose Research Online to be in breach of UK law, please notify us by emailing [email protected] including the URL of the record and the reason for the withdrawal request. [email protected] https://eprints.whiterose.ac.uk/ Paper for the Journal of Film Music Of Gods and Monsters: Signification in Franz Waxman’s film score Bride of Frankenstein Universal’s horror classic Bride of Frankenstein (1935) directed by James Whale is iconic not just because of its enduring images and acting, but also because of the high quality of its score by Franz Waxman. -

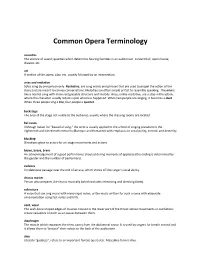

Common Opera Terminology

Common Opera Terminology. acoustics: The science of sound; qualities which determine hearing facilities in an auditorium, concert hall, opera house, theater, etc. act: A section of the opera, play, etc., usually followed by an intermission. arias and recitative: Solos sung by one person only. Recitatives are sung words and phrases that are used to propel the action of the story and are meant to convey conversations. Melodies are often simple or fast to resemble speaking. The aria is like a normal song with more recognizable structure and melody. Arias, unlike recitative, are a stop in the action, where the character usually reflects upon what has happened. When two people are singing, it becomes a duet; three people sing a trio, and four people a quartet. backstage: The area of the stage not visible to the audience, usually where the dressing rooms are located. bel canto: Although Italian for “beautiful song,” the term is usually applied to the school of singing prevalent in the eighteenth and nineteenth centuries (Baroque and Romantic) with emphasis on vocal purity, control, and dexterity. blocking: Directions given to actors for on-stage movements and actions. bravo, brava, bravi: An acknowledgement of a good performance shouted during moments of applause (the ending is determined by the gender and the number of performers). cadenza: An elaborate passage near the end of an aria, which shows off the singer’s vocal ability. chorus master: Person who prepares the chorus musically (which includes rehearsing and directing them). coloratura: A voice that can sing music with many rapid notes, or the music written for such a voice with elaborate ornamentation using fast notes and trills. -

The Application of Bel Canto Concepts and Principles to Trumpet Pedagogy and Performance

Louisiana State University LSU Digital Commons LSU Historical Dissertations and Theses Graduate School 1980 The Application of Bel Canto Concepts and Principles to Trumpet Pedagogy and Performance. Malcolm Eugene Beauchamp Louisiana State University and Agricultural & Mechanical College Follow this and additional works at: https://digitalcommons.lsu.edu/gradschool_disstheses Recommended Citation Beauchamp, Malcolm Eugene, "The Application of Bel Canto Concepts and Principles to Trumpet Pedagogy and Performance." (1980). LSU Historical Dissertations and Theses. 3474. https://digitalcommons.lsu.edu/gradschool_disstheses/3474 This Dissertation is brought to you for free and open access by the Graduate School at LSU Digital Commons. It has been accepted for inclusion in LSU Historical Dissertations and Theses by an authorized administrator of LSU Digital Commons. For more information, please contact [email protected]. INFORMATION TO USERS This was produced from a copy of a document sent to us for microfilming. While the most advanced technological means to photograph and reproduce this document have been used, the quality is heavily dependent upon the quality of the material submitted. The following explanation of techniques is provided to help you understand markings or notations which may appear on this reproduction. 1. The sign or “target” for pages apparently lacking from the document photographed is “Missing Page(s)”. If it was possible to obtain the missing page(s) or section, they are spliced into the film along with adjacent pages. This may have necessitated cutting through an image and duplicating adjacent pages to assure you of complete continuity. 2. When an image on the film is obliterated with a round black mark it is an indication that the film inspector noticed either blurred copy because of movement during exposure, or duplicate copy. -

That World of Somewhere in Between: the History of Cabaret and the Cabaret Songs of Richard Pearson Thomas, Volume I

That World of Somewhere In Between: The History of Cabaret and the Cabaret Songs of Richard Pearson Thomas, Volume I D.M.A. DOCUMENT Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Musical Arts in the Graduate School of The Ohio State University By Rebecca Mullins Graduate Program in Music The Ohio State University 2013 D.M.A. Document Committee: Dr. Scott McCoy, advisor Dr. Graeme Boone Professor Loretta Robinson Copyright by Rebecca Mullins 2013 Abstract Cabaret songs have become a delightful and popular addition to the art song recital, yet there is no concise definition in the lexicon of classical music to explain precisely what cabaret songs are; indeed, they exist, as composer Richard Pearson Thomas says, “in that world that’s somewhere in between” other genres. So what exactly makes a cabaret song a cabaret song? This document will explore the topic first by tracing historical antecedents to and the evolution of artistic cabaret from its inception in Paris at the end of the 19th century, subsequent flourish throughout Europe, and progression into the United States. This document then aims to provide a stylistic analysis to the first volume of the cabaret songs of American composer Richard Pearson Thomas. Dedication This document is dedicated to the person who has been most greatly impacted by its writing, however unknowingly—my son Jack. I hope you grow up to be as proud of your mom as she is of you, and remember that the things in life most worth having are the things for which we must work the hardest. -

Microkorg Owner's Manual

Precautions. Location: Using the unit in the following locations can result in a malfunction. • In direct sunlight • Locations of extreme temperature or humidity • Excessively dusty or dirty locations • Locations of excessive vibration • Close to magnetic fields. Power supply: Please connect the designated AC adapter to an AC outlet of the correct voltage. Do not connect it to an AC outlet of voltage other than that for which your unit is intended. Data handling: Unexpected malfunctions can result in the loss of memory contents. Please be sure to save important data on an external data filer (storage device). Korg cannot accept any responsibility for any loss or damage which you may incur as a result of data loss. Printing conventions in this manual: Knobs and keys printed in BOLD TYPE. Knobs and keys on the panel of the microKORG are printed in BOLD TYPE. THE FCC REGULATION WARNING (for U.S.A.): This equipment has been tested and found to comply with the limits for a Class B digital device, pursuant to Part 15 of the FCC Rules. These limits are designed to provide reasonable protection against harmful interference in a residential installation. This equipment generates, uses, and can radiate radio frequency energy and, if not installed and used in accordance with the instructions, may cause harmful interference to radio communications. However, there is no guarantee that interference will not occur in a particular installation. If this equipment does cause harmful interference to radio or television reception, which can be determined by turning the equipment off and on, the user is encouraged to -

Portamento in Romantic Opera Deborah Kauffman

Performance Practice Review, Volume 5, Number 2 (Fall), Article 3. Portamento in Romantic Opera. Deborah Kauffman. Follow this and additional works at: http://scholarship.claremont.edu/ppr Part of the Music Practice Commons. Kauffman, Deborah (1992) "Portamento in Romantic Opera," Performance Practice Review: Vol. 5: No. 2, Article 3. DOI: 10.5642/perfpr.199205.02.03 Available at: http://scholarship.claremont.edu/ppr/vol5/iss2/3 This Article is brought to you for free and open access by the Journals at Claremont at Scholarship @ Claremont. It has been accepted for inclusion in Performance Practice Review by an authorized administrator of Scholarship @ Claremont. For more information, please contact [email protected]. Romantic Ornamentation: Portamento in Romantic Opera. Deborah Kauffman. Present day singing differs from that of the nineteenth century in a number of ways. One of the most apparent is in the use of portamento, the "carrying" of the tone from one note to another. In our own century portamento has been viewed with suspicion; as one author wrote in 1938, it is "capable of much expression when judiciously employed, but when it becomes a habit it is deplorable, because then it leads to scooping." [1] The attitude of more recent authors is difficult to ascertain, since portamento has all but disappeared as a topic for discussion in more recent texts on singing. Authors seem to prefer providing detailed technical and physiological descriptions of vocal production than offering discussions of style. But even a cursory listening of recordings from the turn of the century reveals an entirely different attitude toward portamento. -

Using Bel Canto Pedagogical Principles to Inform Vocal Exercises Repertoire Choices for Beginning University Singers

Using Bel Canto Pedagogical Principles to Inform Vocal Exercises Repertoire Choices for Beginning University Singers by Steven M. Groth B.M. University of Wisconsin, 2013 M.M. University of Missouri, 2017 A thesis submitted to the Faculty of the Graduate School of the University of Colorado in partial fulfillment of the requirement for the degree of Doctor of Musical Arts College of Music 2020 Abstract The purpose of this document is to identify and explain the key ideals of bel canto singing and provide reasoned suggestions of exercises, vocalises, and repertoire choices that are readily available both to teachers and students. I provide a critical evaluation of the fundamental tenets of classic bel canto pedagogues, Manuel Garcia, Mathilde Marchesi, and Julius Stockhausen. I then offer suggested exercises to develop breath, tone, and legato, all based on classic bel canto principles and more recent insights of voice science and physiology. Finally, I will explore and perform a brief survey into the vast expanse of Italian repertoire that fits more congruently with the concepts found in bel canto singing technique in order to equip teachers with the best materials for more rapid student achievement and success in legato singing. For each of these pieces, I will provide the text and a brief analysis of the characteristics that make each piece well-suited for beginning university students. Acknowledgements Ever since my first vocal pedagogy class in my undergraduate degree at the University of Wisconsin-Madison, I have been interested in how vocal pedagogy can best be applied to repertoire choices in order to maximize students’ achievement in the studio environment. -

Volume 60, Number 07 (July 1942) James Francis Cooke

Gardner-Webb University Digital Commons @ Gardner-Webb University The Etude Magazine: 1883-1957 John R. Dover Memorial Library 7-1-1942 Volume 60, Number 07 (July 1942) James Francis Cooke Follow this and additional works at: https://digitalcommons.gardner-webb.edu/etude Part of the Composition Commons, Music Pedagogy Commons, and the Music Performance Commons Recommended Citation Cooke, James Francis. "Volume 60, Number 07 (July 1942)." (1942). https://digitalcommons.gardner-webb.edu/etude/237 This Book is brought to you for free and open access by the John R. Dover Memorial Library at Digital Commons @ Gardner-Webb University. It has been accepted for inclusion in The Etude Magazine: 1883-1957 by an authorized administrator of Digital Commons @ Gardner-Webb University. For more information, please contact [email protected]. The World of Music: Here, There, and Everywhere in the Musical World. DR. ALFRED HOLLINS, eminent blind organist and composer, who had held the position as organist at St. George's West Church, Edinburgh, since 1897, died there on May 17. Dr. Hollins was born September 11, 1865, in Hull, England. He had made many concert appearances in the United States and Canada. DR. CHARLES HEINROTH, Chairman of the Music Department of City College, New York, and for twenty-five years organist and director of music at Carnegie Institute of Technology, Pittsburgh, has retired. A former president of the American Association of Organists, Prof. Heinroth is said to be the first man to play organ music over the radio. -

The German School of Singing: a Compendium of German Treatises 1848-1965

THE GERMAN SCHOOL OF SINGING: A COMPENDIUM OF GERMAN TREATISES 1848-1965 by Joshua J. Whitener Submitted to the faculty of the Jacobs School of Music in partial fulfillment of the requirements for the degree, Doctor of Music Indiana University May 2016 Accepted by the faculty of the Indiana University Jacobs School of Music, in partial fulfillment of the requirements for the degree Doctor of Music. Doctoral Committee: Costanza Cuccaro, Research Director and Chair; Ray Fellman; Brian Horne; Patricia Stiles. Date of Approval: April 5, 2016. Copyright © 2016 Joshua J. Whitener. To My Mother, Dr. Louise Miller. Acknowledgements I would like to thank Professor Costanza Cuccaro, my chair and teacher, and my committee members, Professors Fellman, Horne, and Stiles, for guiding me through the completion of my doctoral education. Their support and patience has been invaluable as I learned about and experienced true scholarship in the study of singing and vocal pedagogy. Thanks too to my friends who were also right there with me as I completed many classes, sang in many productions, survived many auditions and grew as a person. Finally, I would like to thank my international colleagues with whom I have had the opportunity to meet and work over the past 15 years. Their friendship and encouragement has been invaluable, as have the unparalleled, unimagined experiences I have had while living and performing in Germany. It has been this cultural awareness that led me to the topic of my dissertation - to define a German School of Singing. Vielen Dank! I would like to give a special thanks to my mother without whom this paper would not have been possible. -

An Interactive System for Visual and Quantitative Analyses of Vibrato

AVA: An Interactive System for Visual and Quantitative Analyses of Vibrato and Portamento Performance Styles. YANG, L; Rajab, SAYID-KHALID; Chew, E; the 17th International Society for Music Information Retrieval Conference. © Luwei Yang, Khalid Z. Rajab and Elaine Chew. Licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0). For additional information about this publication click this link. http://qmro.qmul.ac.uk/xmlui/handle/123456789/16059 Information about this research object was correct at the time of download; we occasionally make corrections to records, please therefore check the published record when citing. For more information contact [email protected] AVA: AN INTERACTIVE SYSTEM FOR VISUAL AND QUANTITATIVE ANALYSES OF VIBRATO AND PORTAMENTO PERFORMANCE STYLES. Luwei Yang (1), Khalid Z. Rajab (2), Elaine Chew (1). (1) Centre for Digital Music, Queen Mary University of London; (2) Antennas & Electromagnetics Group, Queen Mary University of London. {l.yang, k.rajab, elaine.chew}@qmul.ac.uk ABSTRACT Vibratos and portamenti are important expressive features for characterizing performance style on instruments capable of continuous pitch variation such as strings and voice. Accurate study of these features is impeded by time consuming manual annotations. [From the introduction:] While vibrato analysis and detection methods have been in existence for several decades [2, 10, 11, 13], there is currently no widely available software tool for interactive analysis of vibrato features to assist in performance and musicological research. Portamenti have received far less attention than vibratos due to the inherent ambiguity in -

AVA: A Graphical User Interface for Automatic Vibrato and Portamento Detection and Analysis

AVA: A Graphical User Interface for Automatic Vibrato and Portamento Detection and Analysis. Luwei Yang (1), Khalid Z. Rajab (2), Elaine Chew (1). (1) Centre for Digital Music, Queen Mary University of London; (2) Antennas & Electromagnetics Group, Queen Mary University of London. {l.yang, k.rajab, elaine.chew}@qmul.ac.uk ABSTRACT Musicians are able to create different expressive performances of the same piece of music by varying expressive features. It is challenging to mathematically model and represent musical expressivity in a general manner. Vibrato and portamento are two important expressive features in singing, as well as in string, woodwind, and brass instrumental playing. We present AVA, an off-line system for automatic vibrato and portamento analysis. The system detects vibratos and extracts their parameters from audio input using a Filter Diagonalization Method, then detects portamenti using a Hidden Markov Model and presents the [From the introduction:] performance styles, and performance variation among different musicians [4, 5, 6, 7, 8]. This paper presents an off-line system, AVA, which accepts raw audio and automatically tracks the vibrato and portamento to display their expressive parameters for inspection and further statistical analysis. We employ the Filter Diagonalization Method (FDM) to detect vibrato [9]. The FDM decomposes the local fundamental frequency into sinusoids and returns their frequencies and amplitudes, which the system uses to determine vibrato presence and vibrato parameter values. A fully connected three-state Hidden Markov Model (HMM) is applied to identify portamento. -
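
The three-state HMM mentioned in the AVA excerpt can be illustrated with a small Viterbi decoder. This is only a sketch under stated assumptions: the excerpt does not name the states, the observations, or any parameters, so the state labels (`steady`, `up`, `down`), the Gaussian emission model over frame-to-frame pitch changes, and the values of `slope`, `std`, and `stay` are all hypothetical choices for illustration, not the authors' method.

```python
import math

# Hypothetical state labels; the excerpt only says "three-state".
STATES = ("steady", "up", "down")


def gaussian_logpdf(x, mean, std):
    """Log density of a normal distribution, used as an assumed emission model."""
    return -0.5 * math.log(2 * math.pi * std * std) - (x - mean) ** 2 / (2 * std * std)


def viterbi_pitch_states(deltas, slope=0.3, std=0.1, stay=0.9):
    """Label each frame-to-frame pitch change (in semitones) with its most likely state.

    slope, std and stay are illustrative values, not taken from the source.
    """
    if not deltas:
        return []
    means = {"steady": 0.0, "up": slope, "down": -slope}
    # Fully connected model: every state can move to every other state.
    switch = (1.0 - stay) / (len(STATES) - 1)
    log_trans = {
        a: {b: math.log(stay if a == b else switch) for b in STATES} for a in STATES
    }
    log_init = math.log(1.0 / len(STATES))

    # Initialise with the first observation.
    score = {s: log_init + gaussian_logpdf(deltas[0], means[s], std) for s in STATES}
    backptrs = []
    for d in deltas[1:]:
        new_score, ptr = {}, {}
        for s in STATES:
            best_prev = max(STATES, key=lambda p: score[p] + log_trans[p][s])
            ptr[s] = best_prev
            new_score[s] = (
                score[best_prev]
                + log_trans[best_prev][s]
                + gaussian_logpdf(d, means[s], std)
            )
        backptrs.append(ptr)
        score = new_score

    # Trace the best path backwards from the best final state.
    state = max(STATES, key=lambda s: score[s])
    path = [state]
    for ptr in reversed(backptrs):
        state = ptr[state]
        path.append(state)
    path.reverse()
    return path
```

With these assumed settings, a sustained three-frame rise is labelled `up`, while an isolated single-frame jump is smoothed over as `steady`, which is the kind of robustness to noisy pitch tracks that an HMM offers over a plain threshold.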

The Application of Bel Canto Principles to Violin Performance

THE APPLICATION OF BEL CANTO PRINCIPLES TO VIOLIN PERFORMANCE A Monograph Submitted to the Temple University Graduate Board In Partial Fulfillment of the Requirements for the Degree of DOCTOR OF MUSICAL ARTS by Vladimir Dyo May, 2012 Examining Committee Members: Charles Abramovic, Advisory Chair, Professor of Piano; Eduard Schmieder, Professor of Violin, Artistic Director for Strings; Richard Brodhead, Associate Professor of Composition; Christine Anderson, External Member, Associate Professor of Voice, Chair Department of Voice and Opera. © Copyright 2012 by Vladimir Dyo All Rights Reserved ABSTRACT The Application of Bel Canto Principles to Violin Performance Vladimir Dyo Doctor of Musical Arts Temple University, 2012 Doctoral Advisory Committee Chair: Dr. Charles Abramovic Bel canto is “the best in singing of all time.” Authors on vocal literature (Miller, Celletti, Duey, Reid, Dmitriev, Stark, Whitlock among others) agree that bel canto singing requires complete mastery of vocal technique. The "best in singing" means that the singer should possess immaculate cantilena, smooth legato, a beautiful singing tone that exhibits a full palette of colors, and evenness of tone throughout the entire vocal range. Furthermore, the singer should be able to "carry the tone" expressively from one note to another, maintain long lasting breath, flexibility, and brilliant virtuosity. Without these elements, the singer's mastery is not complete. In addition, proper mastery of bel canto technique prolongs the longevity of the voice. For centuries, emulating beautiful singing has been a model for violin performers. Since the late Renaissance and early Baroque periods, a trend toward homophonic style, melodious songs, and arias had a tremendous influence on pre-violin and violin performers, and even on luthiers.