Chapter 4 Constructing Structures

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Pennsylvania Univ., Philadelphia

DOCUMENT RESUME ED 104 663 SE 018 747 AUTHOR Hudson, Janet, Ed. TITLE Resource Guide to School Mathematics. INSTITUTION Pennsylvania Univ., Philadelphia. Graduate School of Education. PUB DATE 74 NOTE 129p.; Updated and enlarged version of ED 086 547. Best copy available; Not available in hard copy due to marginal legibility of original document EDRS PRICE MF-$0.76 HC Not Available from EDRS. PLUS POSTAGE DESCRIPTORS *Annotated Bibliographies; Curriculum; Educational Games; Elementary School Mathematics; Guides; *Instructional Materials; Intermediate Grades; Manipulative Materials; Mathematical Enrichment; Mathematics; *Mathematics Education; Resource Materials; Secondary School Mathematics; *Textbooks; Units of Study (Subject Fields) ABSTRACT A wide variety of materials are listed and described in this resource guide. Listings include textbooks, enrichment materials, manipulative materials, and study units appropriate for students in grades K-12. Materials reviewed cover the full range of mathematical subject matter, and vary widely in their approach. Listings are arranged according to the company from which the resource is available; materials for which teacher's guides are available are starred and those carrying the recommendation of Mu Alpha Theta are also indicated. Addresses are provided for the 100 companies whose materials are listed. Detailed reviews of the Stretchers and Shrinkers materials and three texts of Dolciani, et al., are also included. (SD) U.S. DEPARTMENT OF HEALTH, EDUCATION & WELFARE NATIONAL INSTITUTE OF EDUCATION THIS DOCUMENT HAS BEEN REPRODUCED EXACTLY AS RECEIVED FROM THE PERSON OR ORGANIZATION ORIGINATING IT. POINTS OF VIEW OR OPINIONS STATED DO NOT NECESSARILY REPRESENT OFFICIAL NATIONAL INSTITUTE OF EDUCATION POSITION OR POLICY. UNIVERSITY OF PENNSYLVANIA RESOURCE GUIDE TO SCHOOL MATHEMATICS Graduate School of Education University of Pennsylvania Philadelphia, Pennsylvania 19174 THE UNIVERSITY OF PENNSYLVANIA RESOURCE GUIDE TO SCHOOL MATHEMATICS 1974 Editor Janet Hudson 1973 Editor Gail Garber Dr. -

Aaboe, Asger Episodes from the Early History of Mathematics QA22 .A13 Abbott, Edwin Abbott Flatland: a Romance of Many Dimensions QA699 .A13 1953 Abbott, J

James J. Gehrig Memorial Library. Table of Contents. Section I: Cover Page, i; Table of Contents, ii; Biography of James Gehrig, iii. Section II, Library: Author’s Last Name beginning with ‘A’, 1; Author’s Last Name beginning with ‘B’, 3; Author’s Last Name beginning with ‘C’, 7; Author’s Last Name beginning with ‘D’, 10; Author’s Last Name beginning with ‘E’, 13; Author’s Last Name beginning with ‘F’, 14; Author’s Last Name beginning with ‘G’, 16; Author’s Last Name beginning with ‘H’, 18; Author’s Last Name beginning with ‘I’, 22; Author’s Last Name beginning with ‘J’, 23; Author’s Last Name beginning with ‘K’, 24; Author’s Last Name beginning with ‘L’, 27; Author’s Last Name beginning with ‘M’, 29; Author’s Last Name beginning with ‘N’, 33; Author’s Last Name beginning with ‘O’, 34; Author’s Last Name beginning with ‘P’, 35; Author’s Last Name beginning with ‘Q’, 38; Author’s Last Name beginning with ‘R’, 39; Author’s Last Name beginning with ‘S’, 41; Author’s Last Name beginning with ‘T’, 45; Author’s Last Name beginning with ‘U’, 47; Author’s Last Name beginning -

PDF # Playing with Infinity: Mathematical Explorations And

Playing with Infinity: Mathematical Explorations and Excursions. By Rozsa Peter. Dover Publications Inc., United States, 2010. Paperback. Book Condition: New. New edition. 211 x 137 mm. Language: English. Brand New Book. This popular account of the many mathematical concepts relating to infinity is one of the best introductions to this subject and to the entire field of mathematics. Dividing her book into three parts (The Sorcerer's Apprentice, The Creative Role of Form, and The Self-Critique of Pure Reason), Peter develops her material in twenty-two chapters that sound almost too appealing to be true: playing with fingers, coloring the grey number series, we catch infinity again, the line is filled up, some workshop secrets, the building rocks, and so on. Yet, within this structure, the author discusses many important mathematical concepts with complete accuracy: number systems, arithmetical progression, diagonals of convex polygons, the theory of combinations, the law of prime numbers, equations, negative numbers, vectors, operations with fractions, infinite series, irrational numbers, Pythagoras' Theorem, logarithm tables, analytical geometry, the line at infinity, indefinite and definite integrals, the squaring of the circle, transcendental numbers, the theory of groups, the theory of sets, metamathematics, and much more. Numerous illustrations and examples make all the material readily comprehensible. Without being technical or... Reviews: Simply no phrases to explain. It is definitely simplistic but shocks from the fifty percent from the pdf. You may like the way the blogger writes this ebook. -- Antonetta Tremblay. This book will never be easy to start on looking at but quite entertaining to read. -

Ed 076 428 Author Title Institution Pub Date

DOCUMENT RESUME ED 076 428 SE 016 104 AUTHOR Schaaf, William L. TITLE The High School Mathematics Library. Fifth Edition. INSTITUTION National Council of Teachers of Mathematics, Inc., Washington, D.C. PUB DATE 73 NOTE 81p. AVAILABLE FROM National Council of Teachers of Mathematics, 1906 Association Drive, Reston, Virginia 22091 ($1.50) EDRS PRICE MF-$0.65 HC Not Available from EDRS. DESCRIPTORS *Annotated Bibliographies; Booklists; *Instruction; Instructional Materials; *Mathematical Enrichment; Mathematics; Mathematics Education; *Secondary School Mathematics; Teacher Education ABSTRACT The scope of this booklist includes books for students of average ability, for the mathematically talented, for the professional interests of mathematics teachers, and for those concerned with general mathematics at the junior college level. About 950 titles are listed, many with brief annotations. Starring of 200 titles indicates a priority choice as viewed by the author. Books are classified under topics which include popular reading, foundations of mathematics, history, recreations, mathematics content areas, professional books for teachers, mathematics for parents, dictionaries and handbooks, paperback series, and NCTM publications. Periodicals and journals are listed also. The appendix includes a directory of publishers. A related document is SE 015 978. (DT) FILMED FROM BEST AVAILABLE COPY U.S. DEPARTMENT OF HEALTH, EDUCATION & WELFARE OFFICE OF EDUCATION THIS DOCUMENT HAS BEEN REPRODUCED EXACTLY AS RECEIVED FROM THE PERSON OR ORGANIZATION ORIGINATING IT POINTS OF -

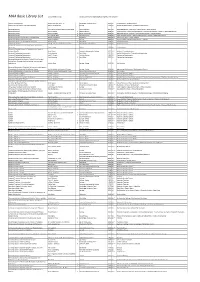

MAA Basic Library List Last Updated 1/23/18 Contact Darren Glass ([email protected]) with Questions

MAA Basic Library List. Last updated 1/23/18. Contact Darren Glass ([email protected]) with questions.

| Title | Author | Edition | Publisher | Publication Year | BLL Rating | Topics |
|---|---|---|---|---|---|---|
| Additive Combinatorics | Terence Tao and Van H. Vu | | Cambridge University Press | 2010 | BLL | Combinatorics; Number Theory |
| Additive Number Theory: The Classical Bases | Melvyn B. Nathanson | | Springer | 1996 | BLL | Analytic Number Theory; Additive Number Theory |
| Advanced Calculus | Lynn Harold Loomis and Shlomo Sternberg | | World Scientific | 2014 | BLL | Advanced Calculus; Manifolds; Real Analysis; Vector Calculus |
| Advanced Calculus | Wilfred Kaplan | 5 | Addison Wesley | 2002 | BLL | Vector Calculus; Single Variable Calculus; Multivariable Calculus; Calculus; Advanced Calculus |
| Advanced Calculus | David V. Widder | 2 | Dover Publications | 1989 | BLL | Advanced Calculus; Calculus; Multivariable Calculus; Vector Calculus |
| Advanced Calculus | R. Creighton Buck | 3 | Waveland Press | 2003 | BLL* | Single Variable Calculus; Multivariable Calculus; Calculus; Advanced Calculus |
| Advanced Calculus: A Differential Forms Approach | Harold M. Edwards | | Birkhauser | 2013 | BLL** | Differential Forms; Vector Calculus |
| Advanced Calculus: A Geometric View | James J. Callahan | | Springer | 2010 | BLL | Vector Calculus; Multivariable Calculus; Calculus |
| Advanced Calculus: An Introduction to Analysis | Watson Fulks | 3 | John Wiley | 1978 | BLL | Advanced Calculus |
| Advanced Calculus | Lynn H. Loomis and Shlomo Sternberg | | Jones & Bartlett Publishers | 1989 | BLL | Advanced Calculus |
| Advanced Combinatorics: The Art of Finite and Infinite Expansions | Louis Comtet | | Kluwer | 1974 | BLL | Combinatorics |
| Advanced Complex Analysis: A Comprehensive Course in Analysis, - | | | | | | |

MAA Basic Library List Last Updated 2/1/18 Contact Darren Glass ([email protected]) with Questions

MAA Basic Library List. Last updated 2/1/18. Contact Darren Glass ([email protected]) with questions.

| Title | Author | Edition | Publisher | Publication Year | BLL Rating | Topics |
|---|---|---|---|---|---|---|
| 1001 Problems in High School Mathematics | E Barbeau | | Canadian Mathematical Congress | 1976 | BLL*** | Problems Olympiad Level; Problems |
| 101 Careers in Mathematics | Andrew Sterrett, editor | 3 | Mathematical Association of America | 2014 | BLL* | Mathematical Careers; Mathematics as a Profession |
| 104 Number Theory Problems: From the Training of the USA IMO Team | Titu Andreescu, Dorin Andrica, and Zuming Feng | | Birkhäuser | 2007 | BLL* | Number Theory; Problems Olympiad Level |
| 13 Lectures on Fermat's Last Theorem | Paulo Ribenboim | | Springer Verlag | 1979 | BLL | Algebraic Number Theory; Diophantine Equations |
| 3.1416 and All That | Philip J. Davis and William G. Chinn | | Dover Publications | 2007 | BLL* | Mathematics for the General Reader |
| 5000 Years of Geometry | Christoph J. Scriba and Peter Schreiber | | Birkhäuser | 2015 | BLL* | Geometry; History of Mathematics |
| 50th IMO — 50 Years of International Mathematical Olympiads | Hans-Dietrich Gronau, Hanns-Heirich Langmann, and Dierk Schleicher, editors | | Springer | 2011 | BLL | Problems Olympiad Level; History of Mathematics |
| 536 Puzzles and Curious Problems | Henry E. Dudeney | | Dover Publications | 1983 | BLL*** | Recreational Mathematics |
| A = B | Marko Petkovšek, Herbert S. Wilf, and Doron Zeilberger | | A K Peters/CRC Press | 1996 | BLL | Algorithms; Mathematical Software; Special Functions |
| A Basic Course in Algebraic Topology | William S. Massey | | Springer Verlag | 1991 | BLL | Algebraic Topology |
| A Beautiful Mind | Sylvia Nasar | | Simon & Schuster | 2011 | BLL** | Biography; History of Mathematics |
| A Benchmark Approach to Quantitative Finance | E. Platen and D. Heath | | Springer Verlag | 2007 | BLL | Finance; Stochastic Calculus; Stochastic Differential Equations |
| A Biologist's Guide to Mathematical Modeling in Ecology and Evolution | Sarah P. | | | | | |