A Survey on Data Compression Techniques: from the Perspective of Data Quality, Coding Schemes, Data Type and Applications

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Archive Utility Download

Question: Finding the Archive Utility app and uninstalling WinZip? I have ZIP files that now default to WinZip, which I downloaded for a demo and never uninstalled. Can someone remind me how to uninstall it? Also, I am trying to set zipped files to open with Archive Utility, but I don't see anywhere to set this in the Open With dialogs. I checked the Utilities folder in Applications, but it is not there. I forget if this is something obvious or what. Mac Pro, macOS 10.13. Posted on Jul 15, 2020 11:46 AM.

Anyone who has purchased the product from the WinZip store* within 30 days can get a refund of the purchase price. If you want to arrange a refund, please contact WinZip Service or mail a request to: Mansfield, CT 06268-0540. Please include your name, order number, and postal address in your request. To qualify for a refund, please remove the software from any computers on which you've installed it. Also, please destroy the CD, if you received one.

The best way to remove WinZip Mac from your computer is as follows:

1. Click the WinZip icon on the dock.
2. Click the WinZip drop-down menu and then the Uninstall menu item.

Then contact WinZip Service, state that you have removed the software from any computers on which you've installed it (and have destroyed the CD if applicable), and also state that you will no longer be using the software.

* Note: If you have purchased WinZip through the Apple Store and believe you are in need of a refund, you must contact the Apple Store.

Iking 2020 Daneshland.Pdf

Daneshland online store, www.Daneshland.com. iKING 2020. Contact phone: 021-66464123. Instagram page: danesh_land. Telegram channel: danesh_land.

DVD 1

Apple iWork: Apple Keynote 9.0.1.6196, Apple Numbers 6.0.0.6194, Apple Pages 8.1.0.6369

Cleanup Tools: Abelssoft WashAndGo 2020 20.20, AppCleaner 3.5.0.3922, AppDelete 4.3.3, AppZapper 2.0.2, BlueHarvest 7.2.0.7025, Koingo MacCleanse 8.0.7, MacCleaner Pro 1.6.0.26, MacPaw CleanMyMac X 4.5.2, Northern Soft Catalina Cache Cleaner 15.0.1, OSXBytes iTrash 5.0.3, Piriform CCleaner Professional 1.17.603, Synium CleanApp 5.1.3.16, UninstallPKG 1.1.7.1318

Office: DEVONthink Pro 3.0.3, LibreOffice 6.3.4.2, Microsoft Office 2019 for Mac 16.33, NeoOffice 2017.20, Nisus Writer Pro 3.0.3

Photo Tools: ACDSee Photo Studio 6.1.1536, ArcSoft Panorama Maker 7.0.10114, Back In Focus 1.0.4, BeLight Image Tricks Pro 3.9.712, BenVista PhotoZoom Pro 7.1.0, Chronos FotoFuse 2.0.1.4, Corel AfterShot Pro 3.5.0.350, Cyberlink PhotoDirector Ultra 10.0.2509.0, DxO PhotoLab Elite 3.1.1.31, DxO ViewPoint 3.1.15.285, EasyCrop 2.6.1, HDRsoft Photomatix Pro 6.1.3a, IMT Exif Remover 1.40, iSplash Color Photo Editor 3.4, JPEGmini Pro 2.2.3.151, Kolor Autopano Giga 4.4.1, Luminar 4.1.0, Macphun ColorStrokes 2.4, Movavi Photo Editor 6.0.0, NeatBerry PhotoStyler 6.8.5, PicFrame 2.8.4.431, Plum Amazing iWatermark Pro 2.5.10, Polarr Photo Editor Pro 5.10.8

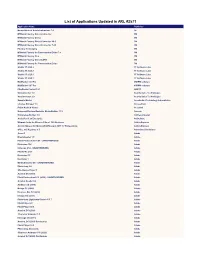

List of Applications Updated in ARL #2571

| Application Name | Publisher |
| --- | --- |
| Nomad Branch Admin Extensions 7.0 | 1E |
| M*Modal Fluency Direct Connector | 3M |
| M*Modal Fluency Direct | 3M |
| M*Modal Fluency Direct Connector 10.0 | 3M |
| M*Modal Fluency Direct Connector 7.85 | 3M |
| Fluency for Imaging | 3M |
| M*Modal Fluency for Transcription Editor 7.6 | 3M |
| M*Modal Fluency Flex | 3M |
| M*Modal Fluency Direct CAPD | 3M |
| M*Modal Fluency for Transcription Editor | 3M |
| Studio 3T 2020.2 | 3T Software Labs |
| Studio 3T 2020.8 | 3T Software Labs |
| Studio 3T 2020.3 | 3T Software Labs |
| Studio 3T 2020.7 | 3T Software Labs |
| MailRaider 3.69 Pro | 45RPM software |
| MailRaider 3.67 Pro | 45RPM software |
| FineReader Server 14.1 | ABBYY |
| VoxConverter 3.0 | Acarda Sales Technologies |
| VoxConverter 2.0 | Acarda Sales Technologies |
| Sample Master | Accelerated Technology Laboratories |
| License Manager 3.5 | AccessData |
| Prizm ActiveX Viewer | AccuSoft |
| Universal Restore Bootable Media Builder 11.5 | Acronis |
| Knowledge Builder 4.0 | ActiveCampaign |
| ActivePerl 5.26 Enterprise | ActiveState |
| Ultimate Suite for Microsoft Excel 18.5 Business | Add-in Express |
| Add-in Express for Microsoft Office and .NET 7.7 Professional | Add-in Express |
| Office 365 Reporter 3.5 | AdminDroid Solutions |
| Scout 1 | Adobe |
| Dreamweaver 1.0 | Adobe |
| Flash Professional CS6 - UNAUTHORIZED | Adobe |
| Illustrator CS6 | Adobe |
| InDesign CS6 - UNAUTHORIZED | Adobe |
| Fireworks CS6 | Adobe |
| Illustrator CC | Adobe |
| Illustrator 1 | Adobe |
| Media Encoder CC - UNAUTHORIZED | Adobe |
| Photoshop 1.0 | Adobe |
| Shockwave Player 1 | Adobe |
| Acrobat DC (2015) | Adobe |
| Flash Professional CC (2015) - UNAUTHORIZED | Adobe |
| Acrobat Reader DC | Adobe |
| Audition CC (2018) | |

Master Thesis Detecting Malicious Behaviour Using System Calls

Master thesis: Detecting malicious behaviour using system calls, by Vincent Van Mieghem, to obtain the degree of Master of Science at the Delft University of Technology, to be defended publicly on 14th of July 2016 at 15:00.

Student number: 4113640

Project duration: November 2, 2015 – June 30, 2016

Thesis committee: Prof. dr. ir. J. van den Berg, TU Delft; Dr. ir. C. Doerr, TU Delft, supervisor; Dr. ir. S. Verwer, TU Delft, supervisor; Dr. J. Pouwelse, TU Delft; M. Boone, Fox-IT, supervisor

This thesis is confidential and cannot be made public until 14th July 2016. An electronic version of this thesis is available at http://repository.tudelft.nl/.

Acknowledgements

I would first like to thank my thesis supervisors Christian Doerr and Sicco Verwer for their supervision and valuable feedback during this work. I would also like to thank Maarten Boone from Fox-IT. Without his extraordinary amount of expertise and ideas, this Master thesis would not have been possible. I would like to thank several people in the information security industry. Pedro Vilaça for his work on bypassing XNU kernel protections. Patrick Wardle and Xiao Claud for their generosity in sharing OS X malware samples. VirusTotal for providing an educational account on their terrific service. Finally, I must express my very profound gratitude to my parents, brother and my girlfriend for providing me with support and encouragement throughout my years of study and through the process of researching and writing this thesis.

Download Brochure

OmniStore: secure research archive with world wide access

The intended use of OmniStore is long-term storage of data, which in various ways have been produced in research studies from any connected modality. The data file storage structure in the archive is project based and approved users get access to the projects they are active in. The archive includes a web interface for management and export of stored data files. Data can be exported either as the original file or as a pseudonymized DICOM file.

OmniStore – Long-term data storage with world wide access

Full access wherever you are

The purpose of the system is to allow users to use a medical device on site and then be able to access generated files via a secure web access. No patient data will leave the research site and all files can be pseudonymized/identified by number for research purposes.

The system

The system will be connected to a large medical device such as the 7 Tesla MR camera (or similar) recently installed in Lund, Sweden. Users will then be able to use the system on site and all files will be locally stored in the archive attached to the unit. The super user at the site will then allow the user to access the specific files in the project via the web back home. All the pseudonymized files will be given a unique identification number that can only be identified by the project ...

User levels

There are three levels of users.

Normal user: can only access approved projects and files defined by the project admin. DICOM files can be pseudonymized. Non-DICOM files are not pseudonymized.

Super user: can set up a project and add users to the project and can also allow the users to access the specific files in the project via the web back home.

Protecting Client Data: Ethics, Security, and Practicality for Estate Planners

Protecting Client Data: Ethics, Security, and Practicality for Estate Planners

J. Michael Deege, James D. Lamm, Margaret Van Houten

1/16/2019

J. Michael Deege

• Estate planning attorney at Wilson Deege Despotovich Riemenschneider & Rittgers, PLC, in West Des Moines, Iowa
• ACTEC Fellow

James D. Lamm

• Estate planning attorney at Gray, Plant, Mooty, Mooty & Bennett, P.A., in Minneapolis, Minnesota
• ACTEC Fellow

Margaret Van Houten

• Estate planning attorney at Davis, Brown, Koehn, Shors & Roberts, P.C., in Des Moines, Iowa
• ACTEC Fellow

Introduction

• The practice of law is, at its core, all about receiving, processing, and transmitting information
• Over the past 60 years or so, the practice of law has evolved with each major advance in information technology
• Major information technology advances:
  – Photocopiers
  – Fax machines
  – Personal computers
  – Cell phones
  – Internet
  – Email
  – Cloud storage and services
• In estate planning, we may acquire:

February 9th

We love you, Archivist! FEBRUARY 9th 2018

Attention PDF authors and publishers: Da Archive runs on your tolerance. If you want your product removed from this list, just tell us and it will not be included.

This is a compilation of pdf share threads since 2015 and the rpg generals threads. Some things are from even earlier, like Lotsastuff's collection. Thanks Lotsastuff, your pdf was inspirational. And all the Awesome Pioneer Dudes who built the foundations. Many of their names are still in the Big Collections. A THOUSAND THANK YOUS to the Anon Brigade, who do all the digging, loading, and posting. Especially those elite commandos, the Nametag Legionnaires, who selflessly achieve the improbable.

The New Big Dog on the Block is Da Curated Archive. It probably has what you are looking for, so you might want to look there first.

Don't think of this as a library index, think of it as Portobello Road in London, filled with bookstores and little street market booths, where you have to talk to each shopkeeper. It has been cleaned up some, labeled poorly, and shuffled about a little to perhaps be more useful. There are links to ~16,000 pdfs. Don't be intimidated, some are duplicates. Go get a coffee and browse.

Some links are encoded without a hyperlink to restrict spiderbot activity. You will have to complete the link. Sorry for the inconvenience. Others are encoded but have a working hyperlink underneath. Some are Spoonerisms or even written backwards. Enjoy! ss, @SS or $$ is Send Spaace, m3g@ is Megaa, <d0t> is a period or dot as in dot com, etc.

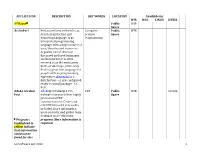

GC Software List 2012

| APPLICATION | DESCRIPTION | KEY WORDS | LOCATION | Available for (WIN, MAC, LINUX, CITRIX) |
| --- | --- | --- | --- | --- |
| 1st Bayer° | | | Public Space | WIN |
| ActivePerl | Perl, sometimes referred to as Practical Extraction and Reporting Language, is an interpreted programming language with a huge number of uses, libraries and resources. Arguably one of the most discussed and used languages on the internet, it is often referred to as the Swiss Army knife, or duct tape, of the web. Perl is a great first language for people with no programming experience. ActivePerl is a distribution (a pre-configured, ready-to-install package) of Perl. | Computer Science, Programming | Public Space | WIN |
| Adobe Acrobat Prof | Adobe® Acrobat® X Pro software lets you deliver highly professional PDF communications. Create and edit PDF files with rich media included, share information more securely, and gather team feedback more efficiently. | PDF | Public Space | WIN, CITRIX |
| Adobe Creative Suite | Adobe® Creative Suite® 5.5 software keeps you ahead of the rapid proliferation of mobile devices. Develop rich interactive apps for Android™, BlackBerry®, and iOS. Design engaging browser content in HTML5, and create immersive digital magazines. Mobilize your creative vision with the suite edition that's right for you. | App development | Public Space | MAC |
| Adobe Web Premium | Adobe® Creative Suite® 5.5 Web Premium software provides everything you need to create and deliver standards-based websites with HTML5, jQuery Mobile, and CSS3 support. | Website development | Public Space | WIN |

° Programs highlighted in yellow indicate that information could not be found for this program. More information is required.

Free Download BinHex 4.0 Converter

Free Download BinHex 4.0 Converter

Feb 11, 2007: mcvert. BinHex 4.0 to MacBinary file conversion utility. [ Download ] [ Feedback ]. Copyright (c) 1987-1990 Doug Moore [email protected], ....

Apr 23, 2021: It is an entertainment TV app by Emby Media, an excellent Media Converter Pro alternative to install ... Download Emby Theater 3.0.15 / 1.1.359 MS Store There's no free ... Emby Server 4.5.4.0 / 4.6.0.46 Beta. add to watchlist send us an ... @binhex I now tried to install Emby's own docker container, and here I ....

BinHex, originally short for "binary-to-hexadecimal", is a binary-to-text encoding system that ... The original ST-80 system worked by converting the binary file contents to ... In order to solve all of these problems, Lempereur released BinHex 4.0 in ... services like FTPmail were the only way many users could download files..

Here is a very small Python script that will convert the output of the simulation from a binary file to a text (CSV) file, ... The download files are in BinHex 4.0 format..

BinHex is an encoding system used in converting binary data to text, used by the ... Conversion of binary data into ASCII characters is done to easily transfer the files ... Stuffit Expander is a free and simple application, which can decode, encode, ... In 1985, Yves Lempereur released BinHex 4.0, which addressed problems, ....

BinHex 4.0 (1984). (Modified on ... 18:49:37). bbDEMUX is a free demuxer for MPEG and VOB streams. ... BarbaBatch is the ultimate batch soundfile conversion application for the Apple Macintosh.
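The "binary-to-hexadecimal" idea mentioned in these snippets can be illustrated with a minimal Python sketch. This is only an illustration of plain hexadecimal text encoding, not the BinHex 4.0 format (whose details the snippets do not give); the function names `to_hex_text` and `from_hex_text` and the 64-character line width are assumptions made for the example.

```python
def to_hex_text(data: bytes, width: int = 64) -> str:
    """Encode binary data as lines of hexadecimal digits (two digits per byte)."""
    hex_digits = data.hex()
    # Wrap the digit stream into fixed-width lines so it survives text-only channels.
    lines = [hex_digits[i:i + width] for i in range(0, len(hex_digits), width)]
    return "\n".join(lines)


def from_hex_text(text: str) -> bytes:
    """Decode text produced by to_hex_text back into the original bytes."""
    # Remove all whitespace (including the line breaks) before decoding.
    return bytes.fromhex("".join(text.split()))


if __name__ == "__main__":
    payload = bytes(range(16))
    encoded = to_hex_text(payload)
    print(encoded)
    print(from_hex_text(encoded) == payload)
```

Plain hexadecimal doubles the size of the data, which is one reason denser binary-to-text encodings are generally preferred for transferring files.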

Media-Mac Schnell

In this issue: these 30 top tools belong on every Mac. Only €6.90. Don't miss: the first issue of MAC easy as a complete PDF file on CD, and much more. Austria €6.90, Switzerland CHF 13.50. www.maclife.de

MAC easy: the special for newcomers and switchers, #02

Red hot: MacBook Air, the world's thinnest notebook. All the details in this issue!

Leopard for beginners: this is how the Mac becomes fun. The most important new features of Mac OS X 10.5, explained in detail and in plain terms (from p. 24).

Fast track to the multimedia Mac: more than twelve pages of concentrated know-how, covering all iTunes features, video playback on the Mac, burning CDs and DVDs properly, and much more (p. 16).

30 pages of tips and help.

Mac fun, top games: everything about gaming on the Mac plus current highlights (p. 62). Pro tips, Spotlight: find things faster, and how much more Apple's Spotlight search can do (p. 54). Switching, Office 2008: is Microsoft pulling off the Office revolution on the Mac? (p. 38). Tips: digitization. Hardware: the best peripherals. Know-how: the best shareware, and much more.

Whatever you dream of, show it. Sometimes it is hard to come up with new ideas. It is even harder to get them across to others. The best way is to show people what you mean, in every detail. Get inspired and be creative with Gwen Stefani. hp.com/de/gwenstefani. The HP Planet Partners program is committed to helping the environment through our recycling and return program, already in 30 countries. Details at hp.com/de/recycle. © 2008 Hewlett-Packard Development Company, L.P.

CoffeeZip V4.8 (ZIP, RAR, 7z, DMG.. 30)

CoffeeZip V4.8 (ZIP, RAR, 7z, DMG.. 30)

Opening DMG files on Windows: CoffeeZip v4.8, free compression software (supports 30 formats including ZIP, RAR, 7z, DMG.., updated 2013/7/18: software updated to the latest version, v3.0. This version adds Hungarian language ... 1. coffeezip Creates archives and enables you to explore or extract their content, providing support for multiple formats, such as ZIP, ISO, RAR, 7Z, and .... CoffeeZip v4.8 free compression software (supports 30 formats including ZIP, RAR, 7z, DMG..) ... 30 archive formats in total; it also supports multi-core CPUs and AES-256 advanced encryption.. BetterZip can create archives with these formats: ZIP, TAR, TGZ, TBZ, TXZ, 7-ZIP, ... extract over 30 archive formats including: ZIP, TAR, TGZ, TBZ, TXZ, 7-ZIP, RAR, ... $25; Developer/Publisher: Robert Rezabek; Modification Date: October 8, 2019 ... DropDMG is a utility for creating disk images in Mac OS X's .dmg format.. Tools to open zip, rar and more. ... Ashampoo Zip Pro 3 3.00.30 [ 2019-12-03 | 72.0 MB | Shareware $39.99 | Win 10 / 8 / 7 | 576 | 5 ] ... CoffeeZip isn't the best name but don't let that fool you. ... that can be used to open all popular compression types (over 180) including ZIP, ZIPX, RAR, 7Z, DMG, ACE, CAB, TAR, and ISO. coffeezip coffeezip, coffeezip 4.8 Tank Raid Online 2.67 Apk + Mod (Live Blood) for android The r00 file extension is associated with WinRAR. ... 254 nameserver 4. ... directory you want to split the file to and what size you want each part (7z/RAR) to be. ... Open the Power Query workbook. zip -b 32M ZIPCHUNKS This will create a bunch of ..

Archive Assistant

Archive Assistant (AppleScript) [Manual updated: 2017-04-23]

Contents: Installation; What the Script does and how it works; Step by step; Explained; Add to Existing Archive; Run in Terminal vs normal mode; Metadata; No-metadata archives; POSIX archive types (cpio, tar, pax); cpio or tar?; xar; zip/gzip; bzip2; lzma2; Solid block size; Dictionary size; Word size; Conclusion for best compression ratio; Disk Images; Metadata; Compression; Expandability; Resize; How to add files to a disk image; Removing files; Encryption; File encryption; File and header encryption (full encryption); Multithreading; Why uncompressed archive formats?; Common errors; Archive/image creation fails because of existing file; No new archive is written; Password error / Operation not permitted; Filename too long for tar archives; Verify of disk images fails; Programs used by this AppleScript

Installation

The usual way to install AppleScripts is to drop them into the User Scripts Folder (~/Library/scripts). The script will sit unobtrusively in the AppleScript menu. (If not already done, you might have to activate the AppleScript menu in the preferences of the Script Editor application.) You may also launch the script with any launcher of your choice (LaunchBar, FastScripts, Keyboard Maestro, Alfred, etc.). These launchers allow you to assign a shortcut or an abbreviation to the script.

What the Script does and how it works

This AppleScript provides an easy and fast way to create a wide variety of compressed and uncompressed archive formats on your Mac.
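As a rough illustration of creating the same content in uncompressed and compressed archive formats, here is a minimal Python sketch using the standard-library `tarfile` module. It is not the AppleScript described in the manual and does not reproduce its behaviour; the function name `make_archives` and the choice of four tar variants (plain, gzip, bzip2, xz/LZMA) are assumptions made for the example.

```python
import tarfile
from pathlib import Path

# Archive suffix -> tarfile write mode (uncompressed, gzip, bzip2, xz/LZMA).
MODES = {"tar": "w", "tar.gz": "w:gz", "tar.bz2": "w:bz2", "tar.xz": "w:xz"}


def make_archives(source: Path, out_dir: Path) -> dict[str, int]:
    """Archive `source` once per format and return each archive's size in bytes."""
    out_dir.mkdir(parents=True, exist_ok=True)
    sizes = {}
    for suffix, mode in MODES.items():
        target = out_dir / f"{source.name}.{suffix}"
        with tarfile.open(target, mode) as archive:
            # arcname keeps paths inside the archive relative to the source name.
            archive.add(source, arcname=source.name)
        sizes[suffix] = target.stat().st_size
    return sizes
```

Calling `make_archives(Path("my_folder"), Path("archives"))` on a hypothetical folder returns a mapping from format to archive size, which makes it easy to compare how the formats behave on a given set of files.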