Risk Measures in Quantitative Finance

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Fact Sheet 2021

Q2 2021 GCI Select Equity™

Globescan Capital was founded on the principle that investing in high-quality companies at attractive prices is the best strategy to achieve long-run risk-adjusted performance. As such, our portfolio is concentrated and focused solely on the long-term, moat-protected future free cash flows of the companies we invest in.

Returns (Average Annual)

| Period | GCI Select Equity | S&P 500TR | +/- |
|---|---|---|---|
| Year to Date | 18.12 | 15.25 | 2.87 |
| 1-year | 43.88 | 40.79 | 3.08 |
| 3-year | 22.94 | 18.67 | 4.27 |
| Since Inception (01/01/17) | 20.94 | 17.82 | 3.12 |

Morningstar Rating (as of 3/31/21), © 2021 Morningstar

| Period | Percentile Rank | Quartile Rank | Funds in Category |
|---|---|---|---|
| Q1 2021 | Top 30% | 2nd | 582 |
| 1 year | Top 19% | 1st | 582 |
| 3 year | Top 3% | 1st | 582 |

TOP 10 HOLDINGS (6/30/2021)

| Holding | Weight |
|---|---|
| Facebook Inc A | 6.76% |
| Microsoft Corp | 6.10% |
| American Tower Corp | 5.68% |
| Crown Castle International Corp | 5.56% |
| Charles Schwab Corp | 5.53% |
| United Parcel Service Inc Class B | 5.44% |
| Air Products & Chemicals Inc | 5.34% |
| Booking Holding Inc | 5.04% |
| Mastercard Inc A | 4.74% |
| First American Financial Corp | 4.50% |

PORTFOLIO CHARACTERISTICS (6/30/2021)

| Characteristic | Value |
|---|---|
| Number of Holdings | 22 |
| Total Net Assets | $44.22M |
| Total Firm Assets | $119.32M |
| EV/EBITDA (ex fincls/reits) | 17.06x |
| P/E FY1 (ex fincls/reits) | 29.0x |
| EPS Growth (ex fincls/reits) | 25.4% |
| ROIC (ex fincls/reits) | 14.1% |
| Standard Deviation (3-year) | 18.9% |
| % of assets in top 5 holdings | 29.6% |
| % of assets in top 10 holdings | 54.7% |
| Dividend Yield | 0.70% |

Morningstar Performance MPT, © 2021 Morningstar

| Measure | Value |
|---|---|
| Return 3 yr | 19.49 |
| Standard Deviation 3 yr | 18.57 |
| Alpha 3 yr | 3.63 |
| Upside Capture 3 yr | 105.46 |
| Downside Capture 3 yr | 91.53 |
| Sharpe Ratio 3 yr | 1.04 |

Core Principles

- Invest in businesses, don't trade stocks
- Think long term, don't try to time markets
- Be concentrated, don't overdiversify
- Use the market, don't rely on it

[Figure: Performance vs S&P 500 (Average Annual Returns), GCI Select Equity and S&P 500 TR, for YTD, 1 YR, 3 YR, and Inception (01/01/2017)]

Disclosures: Globescan Capital Inc., d/b/a GCI-Investors, is an investment advisor registered with the SEC.

Incorporating Extreme Events Into Risk Measurement

Lecture notes on risk management, public policy, and the financial system: Incorporating extreme events into risk measurement. Allan M. Malz, Columbia University. © 2021 Allan M. Malz. Last updated: July 25, 2021.

Outline

- Stress testing and scenario analysis
- Expected shortfall
- Extreme value theory

Stress testing and scenario analysis

What are stress tests?

- Stress tests analyze performance under extreme loss scenarios
- Heuristic portfolio analysis

Steps in carrying out a stress test

1. Determine appropriate scenarios
2. Calculate shocks to risk factors in each scenario
3. Value the portfolio in each scenario

Objectives of stress testing

- Address tail risk
- Reduce model risk by reducing reliance on models
- “Know the book”: stress tests can reveal vulnerabilities in specific positions or groups of positions

Criteria for appropriate stress scenarios

- Should be tailored to firm’s specific key vulnerabilities
- And avoid assumptions that favor the firm, e.g. competitive advantages in a crisis
- Should be extreme but not implausible

Approaches to formulating stress scenarios

- Historical
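The three steps in carrying out a stress test can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the book is described by hypothetical linear sensitivities to two risk factors, the scenario names and shock sizes are invented, and valuation is a first-order approximation rather than a full revaluation. None of these names or numbers come from the lecture notes.

```python
# Hypothetical linear sensitivities of a small book to two risk factors.
# Keys and numbers are illustrative assumptions, not taken from the lecture notes.
SENSITIVITIES = {
    "equity_return": 1_000_000.0,  # P&L in dollars per unit (100%) equity return
    "rate_move_bp": -500.0,        # P&L in dollars per +1 basis point rate move
}

# Step 1: determine scenarios. Step 2: express each one as shocks to the risk factors.
SCENARIOS = {
    "equity_crash": {"equity_return": -0.30, "rate_move_bp": -50.0},
    "rate_spike": {"equity_return": -0.05, "rate_move_bp": 200.0},
}


def scenario_pnl(sensitivities, shocks):
    """Step 3: value the portfolio under one scenario (first-order approximation)."""
    return sum(sens * shocks.get(factor, 0.0) for factor, sens in sensitivities.items())


def run_stress_test(sensitivities, scenarios):
    """Return the P&L of every scenario, keyed by scenario name."""
    return {name: scenario_pnl(sensitivities, shocks) for name, shocks in scenarios.items()}


results = run_stress_test(SENSITIVITIES, SCENARIOS)
worst = min(results, key=results.get)  # scenario with the largest loss
print(results, worst)
```

A real stress test would typically reprice each position under the shocked factors instead of relying on linear sensitivities, which can understate losses on option-like positions.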

Var and Other Risk Measures

VaR and other Risk Measures. Francisco Ramírez Calixto, International Actuarial Association. November 27th, 2018.

Outline

1. What is Risk?
2. Risk Measures
3. Methods of estimating risk measures

What is Risk?

Risk ≠ size of loss or size of a cost. Risk lies in the unexpected losses.

Types of Financial Risk

In Basel III, there are three major broad risk categories:

- Credit Risk
- Operational Risk
- Market Risk

Each one of these risks must be measured in order to allocate economic capital as a buffer so that if a catastrophic event happens, the bank won't go bankrupt.

Risk Measures

Def. A risk measure is used to determine the amount of an asset or assets (traditionally currency) to be kept in reserve in order to cover for unexpected losses.

University of Regina Lecture Notes Michael Kozdron

University of Regina, Statistics 441 – Stochastic Calculus with Applications to Finance. Lecture Notes, Winter 2009. Michael Kozdron, [email protected], http://stat.math.uregina.ca/~kozdron

List of Lectures and Handouts

- Lecture #1: Introduction to Financial Derivatives
- Lecture #2: Financial Option Valuation Preliminaries
- Lecture #3: Introduction to MATLAB and Computer Simulation
- Lecture #4: Normal and Lognormal Random Variables
- Lecture #5: Discrete-Time Martingales
- Lecture #6: Continuous-Time Martingales
- Lecture #7: Brownian Motion as a Model of a Fair Game
- Lecture #8: Riemann Integration
- Lecture #9: The Riemann Integral of Brownian Motion
- Lecture #10: Wiener Integration
- Lecture #11: Calculating Wiener Integrals
- Lecture #12: Further Properties of the Wiener Integral
- Lecture #13: Itô Integration (Part I)
- Lecture #14: Itô Integration (Part II)
- Lecture #15: Itô’s Formula (Part I)
- Lecture #16: Itô’s Formula (Part II)
- Lecture #17: Deriving the Black–Scholes Partial Differential Equation
- Lecture #18: Solving the Black–Scholes Partial Differential Equation
- Lecture #19: The Greeks
- Lecture #20: Implied Volatility
- Lecture #21: The Ornstein-Uhlenbeck Process as a Model of Volatility
- Lecture #22: The Characteristic Function for a Diffusion
- Lecture #23: The Characteristic Function for Heston’s Model
- Lecture #24: Review
- Lecture #25: Review
- Lecture #26: Review
- Lecture #27: Risk Neutrality
- Lecture #28: A Numerical Approach to Option Pricing Using Characteristic Functions
- Lecture #29: An Introduction to Functional Analysis for Financial Applications
- Lecture #30: A Linear Space of Random Variables
- Lecture #31: Value at Risk
- Lecture #32: Monetary Risk Measures
- Lecture #33: Risk Measures and their Acceptance Sets
- Lecture #34: A Representation of Coherent Risk Measures
- Lecture #35: Further Remarks on Value at Risk
- Lecture #36: Midterm Review

Statistics 441 (Winter 2009), January 5, 2009. Prof.

Lapis Global Top 50 Dividend Yield Index Ratios

MARKET RATIOS

| Ratio | Index | 2012 | 2013 | 2014 | 2015 | 2016 | 2017 | 2018 | 2019 | 2020 |
|---|---|---|---|---|---|---|---|---|---|---|
| P/E | Lapis Global Top 50 DY Index | 14,45 | 16,07 | 16,71 | 17,83 | 21,06 | 22,51 | 14,81 | 16,96 | 19,08 |
| P/E | MSCI ACWI Index (Benchmark) | 15,42 | 16,78 | 17,22 | 19,45 | 20,91 | 20,48 | 14,98 | 19,75 | 31,97 |
| P/E Estimated | Lapis Global Top 50 DY Index | 12,75 | 15,01 | 16,34 | 16,29 | 16,50 | 17,48 | 13,18 | 14,88 | 14,72 |
| P/E Estimated | MSCI ACWI Index (Benchmark) | 12,19 | 14,20 | 14,94 | 15,16 | 15,62 | 16,23 | 13,01 | 16,33 | 19,85 |
| P/B | Lapis Global Top 50 DY Index | 2,52 | 2,85 | 2,76 | 2,52 | 2,59 | 2,92 | 2,28 | 2,74 | 2,43 |
| P/B | MSCI ACWI Index (Benchmark) | 1,74 | 2,02 | 2,08 | 2,05 | 2,06 | 2,35 | 2,02 | 2,43 | 2,80 |
| P/S | Lapis Global Top 50 DY Index | 1,49 | 1,70 | 1,72 | 1,65 | 1,71 | 1,93 | 1,44 | 1,65 | 1,60 |
| P/S | MSCI ACWI Index (Benchmark) | 1,08 | 1,31 | 1,35 | 1,43 | 1,49 | 1,71 | 1,41 | 1,72 | 2,14 |
| EV/EBITDA | Lapis Global Top 50 DY Index | 9,52 | 10,45 | 10,77 | 11,19 | 13,07 | 13,01 | 9,92 | 11,82 | 12,83 |
| EV/EBITDA | MSCI ACWI Index (Benchmark) | 8,93 | 9,80 | 10,10 | 11,18 | 11,84 | 11,80 | 9,99 | 12,22 | 16,24 |

FINANCIAL RATIOS

| Ratio | Index | 2012 | 2013 | 2014 | 2015 | 2016 | 2017 | 2018 | 2019 | 2020 |
|---|---|---|---|---|---|---|---|---|---|---|
| Debt/Equity | Lapis Global Top 50 DY Index | 89,71 | 93,46 | 91,08 | 95,51 | 96,68 | 100,66 | 97,56 | 112,24 | 127,34 |
| Debt/Equity | MSCI ACWI Index (Benchmark) | 155,55 | 137,23 | 133,62 | 131,08 | 134,68 | 130,33 | 125,65 | 129,79 | 140,13 |

PERFORMANCE MEASURES

| Measure | Index | 2012 | 2013 | 2014 | 2015 | 2016 | 2017 | 2018 | 2019 | 2020 |
|---|---|---|---|---|---|---|---|---|---|---|
| Sharpe Ratio | Lapis Global Top 50 DY Index | 1,48 | 2,26 | 1,05 | -0,11 | 0,84 | 3,49 | -1,19 | 2,35 | -0,15 |
| Sharpe Ratio | MSCI ACWI Index (Benchmark) | 1,23 | 2,22 | 0,53 | -0,15 | 0,62 | 3,78 | -0,93 | 2,27 | 0,56 |
| Jensen Alpha | Lapis Global Top 50 DY Index | 3,2 % | 2,2 % | 4,3 % | 0,3 % | 2,9 % | 2,3 % | -3,9 % | 2,4 % | -18,6 % |

Information Ratio, Lapis Global Top 50 DY Index (2012): -0,24

Comparative Analyses of Expected Shortfall and Value-At-Risk Under Market Stress

Yasuhiro Yamai and Toshinao Yoshiba, Bank of Japan

Abstract

In this paper, we compare value-at-risk (VaR) and expected shortfall under market stress. Assuming that the multivariate extreme value distribution represents asset returns under market stress, we simulate asset returns with this distribution. With these simulated asset returns, we examine whether market stress affects the properties of VaR and expected shortfall. Our findings are as follows. First, VaR and expected shortfall may underestimate the risk of securities with fat-tailed properties and a high potential for large losses. Second, VaR and expected shortfall may both disregard the tail dependence of asset returns. Third, expected shortfall has less of a problem in disregarding the fat tails and the tail dependence than VaR does.

1. Introduction

It is a well known fact that value-at-risk (VaR) models do not work under market stress. VaR models are usually based on normal asset returns and do not work under extreme price fluctuations. The case in point is the financial market crisis of autumn 1998. Concerning this crisis, CGFS (1999) notes that “a large majority of interviewees admitted that last autumn’s events were in the “tails” of distributions and that VaR models were useless for measuring and monitoring market risk”. Our question is this: Is this a problem of the estimation methods, or of VaR as a risk measure? The estimation methods used for standard VaR models have problems for measuring extreme price movements. They assume that the asset returns follow a normal distribution.
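The abstract's third finding, that expected shortfall is less prone than VaR to disregarding fat tails, can be illustrated with a small empirical sketch. It is not the paper's method (the authors simulate from a multivariate extreme value distribution); it assumes two made-up loss samples that differ only in their single worst loss, and a plain historical-simulation estimator named `var_es`, which is hypothetical.

```python
import math


def var_es(losses, alpha):
    """Empirical VaR and expected shortfall at confidence level alpha.

    VaR is the smallest observed loss x whose empirical CDF satisfies
    F(x) >= alpha. Expected shortfall is the mean of the observations ranked
    above the VaR observation (or VaR itself if there are none).
    """
    ordered = sorted(losses)
    n = len(ordered)
    # round() guards against floating-point noise in alpha * n before ceil().
    idx = max(0, math.ceil(round(alpha * n, 9)) - 1)
    var = ordered[idx]
    tail = ordered[idx + 1:]
    es = sum(tail) / len(tail) if tail else var
    return var, es


# Two hypothetical loss samples: identical except for the single worst loss.
thin = [float(x) for x in range(1, 101)]  # losses 1, 2, ..., 100
fat = thin[:-1] + [1000.0]                # worst loss is 1000 instead of 100

print(var_es(thin, 0.95))  # VaR 95.0, ES 98.0
print(var_es(fat, 0.95))   # VaR 95.0, ES 278.0
```

Both samples have the same 95% VaR because VaR only looks at the quantile, while expected shortfall moves with the size of the losses beyond it.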

Arbitrage Pricing Theory: Theory and Applications to Financial Data Analysis Basic Investment Equation

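Risk and Portfolio Management, Spring 2010. Arbitrage Pricing Theory: Theory and Applications to Financial Data Analysis.

Basic investment equation

- $E_t$ = equity in a trading account at time $t$ (liquidation value)
- $R_{it}$ = return on stock $i$ from time $t$ to time $t+\Delta t$ (includes dividend income)
- $Q_{it}$ = dollars invested in stock $i$ at time $t$
- $r$ = interest rate
- $\varepsilon$ = transaction cost (as percentage of stock price)

Before rebalancing, at time $t+\Delta t$:

$$E_{t+\Delta t} = E_t + E_t\, r\,\Delta t + \sum_{i=1}^{N} Q_{it} R_{it} - \left(\sum_{i=1}^{N} Q_{it}\right) r\,\Delta t$$

After rebalancing, at time $t+\Delta t$:

$$E_{t+\Delta t} = E_t + E_t\, r\,\Delta t + \sum_{i=1}^{N} Q_{it} R_{it} - \left(\sum_{i=1}^{N} Q_{it}\right) r\,\Delta t - \varepsilon \sum_{i=1}^{N} \left| Q_{i(t+\Delta t)} - Q_{it} \right|$$

Leverage

$$E_{t+\Delta t} = E_t + E_t\, r\,\Delta t + \sum_{i=1}^{N} Q_{it} R_{it} - \left(\sum_{i=1}^{N} Q_{it}\right) r\,\Delta t$$

$$\text{Leverage} = \frac{\sum_{i=1}^{N} |Q_{it}|}{E_t} \quad \text{(ratio of (gross) investments to equity)}$$

- $Q_{it} \ge 0$: "long-only position"
- $Q_{it} \ge 0$, $\sum_{i=1}^{N} Q_{it} = E_t$: Leverage = 1, long-only position
- Reg-T: Leverage ≤ 2 (margin accounts for retail investors)
- Day traders: Leverage ≤ 4
- Professionals & institutions: risk-based leverage

Portfolio Theory

Introduce dimensionless quantities and view returns as random variables.

$$\theta_i = \frac{Q_i}{E} \quad \text{(dimensionless "portfolio weights")}, \qquad \text{Leverage} = \sum_{i=1}^{N} |\theta_i|$$

$$\frac{\Delta\Pi}{\Pi} = \frac{E_{t+\Delta t} - E_t - E_t\, r\,\Delta t}{E_t} = \frac{\Delta E}{E} - r\,\Delta t$$

$$\tilde{R}_i = R_i - r\,\Delta t \quad \text{(all investments financed at known interest rate)}$$

$$\frac{\Delta\Pi}{\Pi} = \sum_{i=1}^{N} \theta_i \tilde{R}_i$$

With $\mathbb{E}$ denoting expectation:

$$\mathbb{E}\!\left(\frac{\Delta\Pi}{\Pi}\right) = \sum_{i=1}^{N} \theta_i\, \mathbb{E}\big(\tilde{R}_i\big); \qquad \sigma^2\!\left(\frac{\Delta\Pi}{\Pi}\right) = \sum_{i,j=1}^{N} \theta_i \theta_j \operatorname{Cov}\big(\tilde{R}_i, \tilde{R}_j\big) = \sum_{i,j=1}^{N} \theta_i \theta_j \sigma_i \sigma_j \rho_{ij}$$

Sharpe Ratio

$$s = s(\theta_1, \ldots, \theta_N) = \frac{\mathbb{E}\!\left(\frac{\Delta\Pi}{\Pi}\right)}{\sigma\!\left(\frac{\Delta\Pi}{\Pi}\right)} = \frac{\sum_{i=1}^{N} \theta_i\, \mathbb{E}\big(\tilde{R}_i\big)}{\sqrt{\sum_{i,j=1}^{N} \theta_i \theta_j \sigma_i \sigma_j \rho_{ij}}}$$

Sharpe ratio is homogeneous of degree zero in the portfolio weights.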
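The closing remark, that the Sharpe ratio is homogeneous of degree zero in the portfolio weights, can be checked numerically. The sketch below assumes a hypothetical two-asset universe; the expected excess returns, volatilities, and correlation are invented for illustration, and `sharpe_ratio` is just a direct transcription of the ratio of expected excess return to its standard deviation.

```python
import math


def sharpe_ratio(theta, mu, sigma, rho):
    """Sharpe ratio of a portfolio with weights theta.

    mu[i] is the expected excess return of asset i, sigma[i] its volatility,
    and rho[i][j] the correlation between assets i and j.
    """
    n = len(theta)
    expected = sum(theta[i] * mu[i] for i in range(n))
    variance = sum(
        theta[i] * theta[j] * sigma[i] * sigma[j] * rho[i][j]
        for i in range(n)
        for j in range(n)
    )
    return expected / math.sqrt(variance)


# Hypothetical inputs (assumptions for illustration only).
mu = [0.04, 0.06]
sigma = [0.15, 0.25]
rho = [[1.0, 0.3], [0.3, 1.0]]
theta = [0.6, 0.4]

base = sharpe_ratio(theta, mu, sigma, rho)
levered = sharpe_ratio([3.0 * t for t in theta], mu, sigma, rho)  # 3x leverage
print(base, levered)
```

Scaling every weight by the same positive factor multiplies both the numerator and the denominator by that factor, so the two printed values agree up to floating-point rounding: leverage changes the scale of risk and return but not the Sharpe ratio.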

Sharpe Ratio

StatFACTS: Sharpe Ratio

The most famous return-versus-risk measurement, the Sharpe ratio, represents the added value over the risk-free rate per unit of volatility risk.

How Is It Useful?

The Sharpe ratio simplifies the options facing the investor by separating investments into one of two choices, the risk-free rate or anything else. Thus, the Sharpe ratio allows investors to compare very different investments by the same criteria. Anything that isn’t the risk-free investment can be compared against any other investment. The Sharpe ratio allows for apples-to-oranges comparisons.

What Do the Graphs Show Me?

The graphs below illustrate the two halves of the Sharpe ratio. The upper graph shows the numerator, the excess return over the risk-free rate. The blue line is the investment. The red line is the risk-free rate on a rolling, three-year basis. More often than not, the investment’s return exceeds that of the risk-free rate, leading to a positive numerator. The lower graph shows the risk metric used in the denominator, standard deviation. Standard deviation measures how volatile an investment’s returns have been.

What Is a Good Number?

Sharpe ratios should be high, with the larger the number, the better. This would imply significant outperformance versus the risk-free rate and/or a low standard deviation. However, there is no set-in-stone breakpoint above which is good and below which is bad.

[Figure: Rolling Three Year Return]
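The two halves described above, excess return over the risk-free rate in the numerator and standard deviation in the denominator, translate directly into a short calculation. The sketch below assumes a made-up series of periodic returns and a constant per-period risk-free rate; `sharpe_from_returns` is a hypothetical helper, and the result is not annualized.

```python
import statistics


def sharpe_from_returns(returns, risk_free):
    """Sharpe ratio from periodic returns and a constant per-period risk-free rate."""
    excess = statistics.mean(returns) - risk_free  # numerator: excess return
    volatility = statistics.stdev(returns)         # denominator: sample standard deviation
    return excess / volatility


# Hypothetical periodic returns (assumption for illustration only).
returns = [0.02, 0.00, 0.04, -0.02, 0.06]
ratio = sharpe_from_returns(returns, risk_free=0.005)
print(round(ratio, 4))
```

With a constant risk-free rate, the standard deviation of the returns equals that of the excess returns, so either convention for the denominator gives the same figure here.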

A Sharper Ratio: a General Measure for Correctly Ranking Non-Normal Investment Risks

A Sharper Ratio: A General Measure for Correctly Ranking Non-Normal Investment Risks

Kent Smetters, Xingtan Zhang. This Version: February 3, 2014.

Abstract

While the Sharpe ratio is still the dominant measure for ranking risky investments, much effort has been made over the past three decades to find more robust measures that accommodate non-Normal risks (e.g., “fat tails”). But these measures have failed to map to the actual investor problem except under strong restrictions; numerous ad-hoc measures have arisen to fill the void. We derive a generalized ranking measure that correctly ranks risks relative to the original investor problem for a broad utility-and-probability space. Like the Sharpe ratio, the generalized measure maintains wealth separation for the broad HARA utility class. The generalized measure can also correctly rank risks following different probability distributions, making it a foundation for multi-asset class optimization. This paper also explores the theoretical foundations of risk ranking, including proving a key impossibility theorem: any ranking measure that is valid for non-Normal distributions cannot generically be free from investor preferences. Finally, we show that approximation measures, which have sometimes been used in the past, fail to closely approximate the generalized ratio, even if those approximations are extended to an infinite number of higher moments.

Keywords: Sharpe Ratio, portfolio ranking, infinitely divisible distributions, generalized ranking measure, Maclaurin expansions

JEL Code: G11

Kent Smetters: Professor, The Wharton School at The University of Pennsylvania, Faculty Research Associate at the NBER, and affiliated faculty member of the Penn Graduate Group in Applied Mathematics and Computational Science.

Generating Potential Income in a Low Interest Rate World

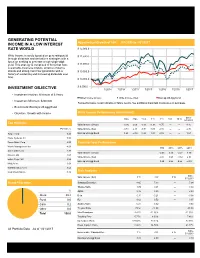

Write Income is solely focused on generating yield through dividends and derivatives strategies with a focus on seeking to generate a high single-digit yield. This strategy is comprised of firms that have sustainable business models, attractive balance sheets and strong cash flow generation with a history of sustaining and increasing dividends over time.

[Figure: Hypothetical Growth of 10K, 5/1/2013 to 1/31/2017; series: Write Income (Gross), Write Income (Net), BarCap US Agg Bond]

Past performance is not indicative of future results. See additional important disclosures on next page.

INVESTMENT OBJECTIVE

- Investment Horizon: Minimum of 3 Years
- Investment Minimum: $200,000
- Benchmark: Barclays US Agg Bond
- Objective: Growth with Income

Write Income Performance (Annualized)

| | 1 Mo | 3 Mo | YTD | 1 Yr | 3 Yr | 5 Yr | 10 Yr | Since 5/1/2013 |
|---|---|---|---|---|---|---|---|---|
| Write Income (Gross) | 2.66 | -0.36 | -0.36 | 11.08 | 4.75 | n/a | n/a | 4.16 |
| Write Income (Net) | 2.19 | -0.51 | -0.51 | 9.09 | 2.88 | n/a | n/a | 2.30 |
| BarCap US Agg Bond | -2.04 | 0.20 | 0.20 | 1.45 | 2.59 | n/a | n/a | 1.67 |

Calendar Year Performance

| | YTD | 2016 | 2015 | 2013 |
|---|---|---|---|---|
| Write Income (Gross) | -0.36 | 6.53 | -2.07 | 4.10 |
| Write Income (Net) | -0.51 | 4.63 | -3.82 | 2.85 |
| BarCap US Agg Bond | 0.20 | 2.65 | 0.55 | -2.89 |

Top Holdings

| Holding | Portfolio % |
|---|---|
| Target Corp | 5.40 |
| Cisco Systems Inc | 5.38 |
| Exxon Mobil Corp | 4.39 |
| Waste Management Inc | 4.03 |
| General Mills Inc | 3.87 |
| Invesco Ltd | 3.63 |
| Eaton Corp PLC | 3.62 |
| MetLife Inc | 3.54 |
| Wal-Mart Stores Inc | 3.50 |
| Dow Chemical Co | 3.49 |

Risk Analysis

A Framework for Dynamic Hedging Under Convex Risk Measures

A Framework for Dynamic Hedging under Convex Risk Measures

Antoine Toussaint, Ronnie Sircar. November 2008; revised August 2009.

Abstract

We consider the problem of minimizing the risk of a financial position (hedging) in an incomplete market. It is well-known that the industry standard for risk measure, the Value-at-Risk, does not take into account the natural idea that risk should be minimized through diversification. This observation led to the recent theory of coherent and convex risk measures. But, as a theory on bounded financial positions, it is not ideally suited for the problem of hedging because simple strategies such as buy-hold strategies may not be bounded. Therefore, we propose as an alternative to use convex risk measures defined as functionals on $L^2$ (or by simple extension $L^p$, $p > 1$). This framework is more suitable for optimal hedging with $L^2$ valued financial markets. A dual representation is given for this minimum risk or market adjusted risk when the risk measure is real-valued. In the general case, we introduce constrained hedging and prove that the market adjusted risk is still an $L^2$ convex risk measure and the existence of the optimal hedge. We illustrate the practical advantage in the shortfall risk measure by showing how minimizing risk in this framework can lead to a HJB equation and we give an example of computation in a stochastic volatility model with the shortfall risk measure.

1 Introduction

We are interested in the problem of hedging in an incomplete market: an investor decides to buy a contract with a non-replicable payoff X at time T but has the opportunity to invest in a financial market to cover his risk.

Mersberger Financial Group, Inc. Investment Policy Statement

A Fiduciary Approach to Investing

Table of Contents

- Firm Investment Policy Statement
- Model 1: MFG Individual Bond Strategy
- Model 2: MFG Preferred Stock Strategy
- Model 3: MFG Tactical Equity Model
- Model 4: MFG Passive Equity Model
- Frequently Asked Questions

Firm Investment Policy Statement

Executive Summary

The advisors at Mersberger Financial Group, Inc. (which may be referred to as “MFG”, “Us” or “We” throughout this document) have developed an investment policy statement in order to outline the investment philosophy and the investment processes of the advisors. This document also seeks to ensure that the advisors of MFG act in a fiduciary capacity for all clients. MFG believes it is critical in planning for its clients’ futures to form a repeatable and documentable portfolio management process. In addition, MFG believes it is important to have all financial advisors and staff members educated and cognizant of MFG’s investment strategies and philosophy. This approach allows MFG to maintain consistency in its investment advice, as well as to always act in the clients’ best interest.

Purpose

The purpose of this document is to outline Mersberger Financial Group’s investment philosophy, strategies and procedures. This document will attempt to create a set of standards to hold MFG accountable to, as well as outline a disciplined investment approach for the advisors to follow.
We believe having formal investment processes and strategies is crucial, especially in times of market volatility when investment managers may become tempted to deviate from their core strategies.