Applied Perl

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Word to LaTeX for a Large, Multi-Author Scientific Paper

The PracTeX Journal, TPJ 2005 No 03, 2005-07-15, Rev. 2005-07-16. Word to LaTeX for a Large, Multi-Author Scientific Paper. D. W. Ignat. © 2005 DW Ignat and IAEA, Vienna. Abstract: Numerous co-authors from diverse locations submitted to a scientific journal a manuscript for a large review article in many sections, each formatted in MS Word. Journal policy for reviews, which attract no page charges, required a translation to LaTeX, including the transformation of section-based references to a non-repetitive article-based list. Saving Word files in RTF format and using rtf2latex2e accomplished the basic translation, and then a Perl program was used to get the references into acceptable condition. This approach to conversion succeeded and may be useful to others. 1 Introduction: Twelve authors from five countries and ten research institutions proposed to the Nuclear Fusion journal (NF) of the International Atomic Energy Agency (IAEA) in Vienna, Austria, a review paper with six sections plus a glossary. This unusually large manuscript had some hundred thousand words and a thousand references. The sections had different lead authors, so that the references of each section were independent of those in other sections, while often repetitive among sections. The IAEA gave review papers the privilege of waived publication charges ($150/page), but required authors to ease the publisher’s costs by submitting manuscripts of reviews in LaTeX, the journal’s typesetting system. Therefore, a considerable financial incentive appeared for finding a somewhat automated transformation of all the Word sources into a unified LaTeX source. -
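The abstract above mentions turning section-based reference lists into one non-repetitive, article-wide list. The following is only a minimal sketch of that idea, not the author's actual program: the section names, the reference strings, and all variable names are hypothetical, and real references would first need normalizing before exact string comparison could detect duplicates.

```perl
#!/usr/bin/perl
use strict;
use warnings;

# Hypothetical input: each section carries its own reference list,
# and some references repeat across sections.
my @sections = (
    [ intro   => [ 'Smith 2001', 'Jones 1999' ] ],
    [ heating => [ 'Jones 1999', 'Lee 2003'   ] ],
);

my %global_number;   # reference text => article-wide number
my @global_list;     # article-wide list, in order of first appearance
my %renumber;        # section name => article-wide numbers of its references

for my $entry (@sections) {
    my ($section, $refs) = @$entry;
    for my $ref (@$refs) {
        # Assign a new article-wide number only on first sight.
        unless (exists $global_number{$ref}) {
            push @global_list, $ref;
            $global_number{$ref} = scalar @global_list;
        }
        push @{ $renumber{$section} }, $global_number{$ref};
    }
}

# Print the merged list; each reference appears exactly once.
print "$_. $global_list[$_ - 1]\n" for 1 .. @global_list;
```

The `%renumber` table records, for each section, which article-wide number replaces each local citation, which is the information a later pass would need to rewrite the `\cite`-style markers in the text.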

Intermediate Perl

SECOND EDITION Intermediate Perl Randal L. Schwartz, brian d foy, and Tom Phoenix Beijing • Cambridge • Farnham • Köln • Sebastopol • Tokyo Intermediate Perl, Second Edition by Randal L. Schwartz, brian d foy, and Tom Phoenix Copyright © 2012 Randal Schwartz, brian d foy, Tom Phoenix. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472. O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://my.safaribooksonline.com). For more information, contact our corporate/institutional sales department: 800-998-9938 or [email protected]. Editors: Simon St. Laurent and Shawn Wallace Indexer: Lucie Haskins Production Editor: Kristen Borg Cover Designer: Karen Montgomery Copyeditor: Absolute Service, Inc. Interior Designer: David Futato Proofreader: Absolute Service, Inc. Illustrator: Rebecca Demarest March 2006: First Edition. August 2012: Second Edition. Revision History for the Second Edition: 2012-07-20 First release See http://oreilly.com/catalog/errata.csp?isbn=9781449393090 for release details. Nutshell Handbook, the Nutshell Handbook logo, and the O’Reilly logo are registered trademarks of O’Reilly Media, Inc. Intermediate Perl, the image of an alpaca, and related trade dress are trademarks of O’Reilly Media, Inc. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and O’Reilly Media, Inc., was aware of a trademark claim, the designations have been printed in caps or initial caps. While every precaution has been taken in the preparation of this book, the publisher and authors assume no responsibility for errors or omissions, or for damages resulting from the use of the information contained herein. -

![Learning Perl. 5Th Edition [PDF]](https://docslib.b-cdn.net/cover/6878/learning-perl-5th-edition-pdf-1776878.webp)

Learning Perl, 5th Edition [PDF]

Learning Perl. Other Perl resources from O’Reilly. Related titles: Advanced Perl Programming; Intermediate Perl; Mastering Perl; Perl 6 and Parrot Essentials; Perl Best Practices; Perl Cookbook; Perl Debugger Pocket Reference; Perl in a Nutshell; Perl Testing: A Developer’s Notebook; Practical mod_perl. Perl Books Resource Center: perl.oreilly.com is a complete catalog of O’Reilly’s books on Perl and related technologies, including sample chapters and code examples. Perl.com is the central web site for the Perl community. It is the perfect starting place for finding out everything there is to know about Perl. Conferences: O’Reilly brings diverse innovators together to nurture the ideas that spark revolutionary industries. We specialize in documenting the latest tools and systems, translating the innovator’s knowledge into useful skills for those in the trenches. Visit conferences.oreilly.com for our upcoming events. Safari Bookshelf (safari.oreilly.com) is the premier online reference library for programmers and IT professionals. Conduct searches across more than 1,000 books. Subscribers can zero in on answers to time-critical questions in a matter of seconds. Read the books on your Bookshelf from cover to cover or simply flip to the page you need. Try it today with a free trial. FIFTH EDITION Learning Perl Randal L. Schwartz, Tom Phoenix, and brian d foy Beijing • Cambridge • Farnham • Köln • Sebastopol • Taipei • Tokyo Learning Perl, Fifth Edition by Randal L. -

Read Book Perl Cookbook Ebook, Epub

PERL COOKBOOK PDF, EPUB, EBOOK Tom Christiansen,Nathan Torkington | 900 pages | 02 Sep 2003 | O'Reilly Media, Inc, USA | 9780596003135 | English | Sebastopol, United States Perl Cookbook PDF Book There are several recipes here. I greatly enjoyed the examples in this book. Executing Commands Without Shell Escapes Escaping Characters 1. Program: urlify 6. Picking a Random Line from a File 8. Su-shee rated it liked it Oct 21, Matching Shell Globs as Regular Expressions 6. With a foreword by Larry Wall, the Commenting Regular Expressions 6. Not as good as taking a class from Tom, but I've been known to browse this book and write test programs. I was impressed by the breadth of this book, and the coverage of its topics was well satisfying. POE, in turn, is distributed under the same terms as Perl. Related Searches. Invoking Methods Indirectly Just a moment while we sign you in to your Goodreads account. Jim rated it really liked it Jul 20, Want to Read saving…. Nathan Torkington Goodreads Author. Writing Bidirectional Clients Trimming Blanks from the Ends of a String 1. Operating on a Series of Integers 2. If you have JavaScript experience—with knowledge of It's just shy of lines, including whitespace and comments. Pretending a String Is a File 8. Find a Perl programmer, and you'll find a copy of Perl Cookbook nearby. Matching Words 6. Reliable bots should accept this and work around it robustly. Whether you're a novice or veteran Perl programmer, you'll find Perl Cookbook , 2nd Edition to be one of the most useful books on Perl available. -

Programming Perl, 3rd Edition

Programming Perl Programming Perl Third Edition Larry Wall, Tom Christiansen & Jon Orwant Beijing • Cambridge • Farnham • Köln • Paris • Sebastopol • Taipei • Tokyo Programming Perl, Third Edition by Larry Wall, Tom Christiansen, and Jon Orwant Copyright © 2000, 1996, 1991 O’Reilly & Associates, Inc. All rights reserved. Printed in the United States of America. Published by O’Reilly & Associates, Inc., 101 Morris Street, Sebastopol, CA 95472. Editor, First Edition: Tim O’Reilly Editor, Second Edition: Steve Talbott Editor, Third Edition: Linda Mui Technical Editor: Nathan Torkington Production Editor: Melanie Wang Cover Designer: Edie Freedman Printing History: January 1991: First Edition. September 1996: Second Edition. July 2000: Third Edition. Nutshell Handbook, the Nutshell Handbook logo, and the O’Reilly logo are registered trademarks of O’Reilly & Associates, Inc. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and O’Reilly & Associates, Inc. was aware of a trademark claim, the designations have been printed in caps or initial caps. The association between the image of a camel and the Perl language is a trademark of O’Reilly & Associates, Inc. Permission may be granted for non-commercial use; please inquire by sending mail to [email protected]. While every precaution has been taken in the preparation of this book, the publisher assumes no responsibility for errors or omissions, or for damages resulting from the use of the information contained herein. Library of Congress Cataloging-in-Publication Data Wall, Larry. Programming Perl/Larry Wall, Tom Christiansen & Jon Orwant.--3rd ed. p. cm. ISBN 0-596-00027-8 1. -

Perl Cookbook

Perl Cookbook By Tom Christiansen & Nathan Torkington; ISBN 1-56592-243-3, 794 pages. First Edition, August 1998. Table of Contents Foreword Preface Chapter 1: Strings Chapter 2: Numbers Chapter 3: Dates and Times Chapter 4: Arrays Chapter 5: Hashes Chapter 6: Pattern Matching Chapter 7: File Access Chapter 8: File Contents Chapter 9: Directories Chapter 10: Subroutines Chapter 11: References and Records Chapter 12: Packages, Libraries, and Modules Chapter 13: Classes, Objects, and Ties Chapter 14: Database Access Chapter 15: User Interfaces Chapter 16: Process Management and Communication Chapter 17: Sockets Chapter 18: Internet Services Chapter 19: CGI Programming Chapter 20: Web Automation Copyright © 1999 O'Reilly & Associates. All Rights Reserved. Foreword They say that it's easy to get trapped by a metaphor. But some metaphors are so magnificent that you don't mind getting trapped in them. Perhaps the cooking metaphor is one such, at least in this case. The only problem I have with it is a personal one - I feel a bit like Betty Crocker's mother. The work in question is so monumental that anything I could say here would be either redundant or irrelevant. However, that never stopped me before. Cooking is perhaps the humblest of the arts; but to me humility is a strength, not a weakness. -

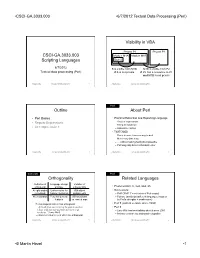

Textual Data Processing (Perl)

Textual Data Processing (Perl). CSCI-GA.3033.003 Scripting Languages, 6/7/2012. © Martin Hirzel, NYU.

Visibility in VBA (diagram labels: Project P1, Project P2, Module M1A, Module M1B, Sub S)
• S is visible from M1B if S is not private
• M1B is visible from P2 if P2 has a reference to P1 and M1B is not private

Outline
• Perl Basics
• Regular Expressions
• At 7:30pm: Quiz 1

About Perl
• Practical Extraction and Report Language
– Regular expressions
– String interpolation
– Associative arrays
• TIMTOWTDI
– There is more than one way to do it
– Make easy jobs easy
– … without making hard jobs impossible
– Pathologically Eclectic Rubbish Lister

Related Languages
• Predecessors: C, sed, awk, sh
• Successors:
– PHP (“PHP 1” = collection of Perl scripts)
– Python, JavaScript (different languages, inspired by Perl’s strengths + weaknesses)
• Perl 5 (current version, since 1994)
• Perl 6
– Larry Wall has been talking about it since 2001

Orthogonality
• Definition “at right angles (unrelated)”: the language design principle is uniform rules for feature interaction; violation: VBA object assignments
• Definition “not redundant”: the language design principle is few, but general, features; violation: VBA positional vs. named args
• Perl is diagonal rather than orthogonal: “If I walk from one corner of the park to another, I don’t walk due east and then due north. I go northeast.” [Larry -

Learning Perl.Pdf

Learning Perl SIXTH EDITION Learning Perl Randal L. Schwartz, brian d foy, and Tom Phoenix Beijing • Cambridge • Farnham • Köln • Sebastopol • Tokyo Learning Perl, Sixth Edition by Randal L. Schwartz, brian d foy, and Tom Phoenix Copyright © 2011 Randal L. Schwartz, brian d foy, and Tom Phoenix. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472. O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://my.safaribooksonline.com). For more information, contact our corporate/institutional sales department: (800) 998-9938 or [email protected]. Editor: Simon St.Laurent Indexer: John Bickelhaupt Production Editor: Kristen Borg Cover Designer: Karen Montgomery Copyeditor: Audrey Doyle Interior Designer: David Futato Proofreader: Kiel Van Horn Illustrator: Robert Romano Printing History: November 1993: First Edition. July 1997: Second Edition. July 2001: Third Edition. July 2005: Fourth Edition. July 2008: Fifth Edition. June 2011: Sixth Edition. Nutshell Handbook, the Nutshell Handbook logo, and the O’Reilly logo are registered trademarks of O’Reilly Media, Inc. Learning Perl, the image of a llama, and related trade dress are trademarks of O’Reilly Media, Inc. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and O’Reilly Media, Inc., was aware of a trademark claim, the designations have been printed in caps or initial caps. -

Learning Perl

Learning Perl SIXTH EDITION Learning Perl Randal L. Schwartz, brian d foy, and Tom Phoenix Beijing • Cambridge • Farnham • Köln • Sebastopol • Tokyo Learning Perl, Sixth Edition by Randal L. Schwartz, brian d foy, and Tom Phoenix Copyright © 2011 Randal L. Schwartz, brian d foy, and Tom Phoenix. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472. O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://my.safaribooksonline.com). For more information, contact our corporate/institutional sales department: (800) 998-9938 or [email protected]. Editor: Simon St.Laurent Indexer: John Bickelhaupt Production Editor: Kristen Borg Cover Designer: Karen Montgomery Copyeditor: Audrey Doyle Interior Designer: David Futato Proofreader: Kiel Van Horn Illustrator: Robert Romano Printing History: November 1993: First Edition. July 1997: Second Edition. July 2001: Third Edition. July 2005: Fourth Edition. July 2008: Fifth Edition. June 2011: Sixth Edition. Nutshell Handbook, the Nutshell Handbook logo, and the O’Reilly logo are registered trademarks of O’Reilly Media, Inc. Learning Perl, the image of a llama, and related trade dress are trademarks of O’Reilly Media, Inc. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and O’Reilly Media, Inc., was aware of a trademark claim, the designations have been printed in caps or initial caps. While every precaution has been taken in the preparation of this book, the publisher and authors assume no responsibility for errors or omissions, or for damages resulting from the use of the information contained herein. -

Intermediate Perl

Intermediate Perl. Boston University Information Services & Technology. Course Coordinator: Timothy Kohl. Last Modified: 09/19/13. Outline: • explore further the data types introduced before • introduce more advanced types • special variables • references (i.e. pointers) • multidimensional arrays • arrays of hashes • introduce functions and local variables • dig deeper into regular expressions • show how to interact with Unix, including how to process files and conduct other I/O operations. Data Types. Scalars revisited: As we saw, scalars consist of either string or number values, and for strings, the usage of " versus ' makes a difference. Ex: $name="Fred"; $wrong_greeting='Hello $name!'; $right_greeting="Hello $name!"; print "$wrong_greeting\n"; print "$right_greeting\n"; yields Hello $name! Hello Fred! If one wishes to include characters like $ , @ , \ , or " (called meta-characters) in a double-quoted string, they need to be preceded with a \ to be printed correctly. Ex: print "The coffee costs \$1.20\n"; which yields The coffee costs $1.20 The rule of thumb is that if the character has some usage inside a double-quoted string, escape it with a \ to print it literally. Sometimes we need to insert variable names in such a way that there might be some ambiguity in how they get interpreted. Suppose $x="day" or $x="night" and we wish to say "It is daytime" or "It is nighttime" using this variable. Incorrect: $x="day"; print "It is $xtime\n"; (this is interpreted as a variable called $xtime). Correct: $x="day"; print "It is ${x}time\n"; (putting { } around the name inserts $x properly). Arrays revisited: For any array @X, there is a related scalar variable $#X which gives the index of the last element of the array. -
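The quoting, escaping, and `$#X` rules described in the excerpt above can be tried in one short script. This is a minimal sketch: `$name`, `$x`, and `@X` follow the excerpt, while `$single`, `$double`, `$price`, `$phrase`, and `$last_index` are names introduced here purely for illustration.

```perl
#!/usr/bin/perl
use strict;
use warnings;

my $name   = "Fred";
my $single = 'Hello $name!';   # single quotes: $name is left as literal text
my $double = "Hello $name!";   # double quotes: $name is interpolated
print "$single\n";             # prints: Hello $name!
print "$double\n";             # prints: Hello Fred!

# A backslash keeps $ from starting a variable name in a double-quoted string.
my $price = "The coffee costs \$1.20";
print "$price\n";              # prints: The coffee costs $1.20

# Braces mark where the variable name ends; "$xtime" would instead refer to
# a different variable named $xtime (a compile error under "use strict").
my $x      = "day";
my $phrase = "It is ${x}time";
print "$phrase\n";             # prints: It is daytime

# $#X is the index of the last element, one less than the element count.
my @X          = (10, 20, 30);
my $last_index = $#X;
print "last index: $last_index, count: ", scalar(@X), "\n";
```

With `use strict` in effect, the ambiguous `"It is $xtime\n"` form is rejected at compile time rather than silently printing an empty value, which is one reason to enable it even in small scripts.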

Introduction

Introduction: A Potted History. Perl was originally written by Larry Wall while he was working at System Development Corporation (later part of Unisys). Larry is an Internet legend: he is well known not only for Perl, but also as the author of the UNIX utilities rn, one of the original Usenet newsreaders, and patch, a tremendously useful utility that takes a list of differences between two files and allows you to turn one into the other. The word 'patch' used for this activity is now widespread. Perl started life as a 'glue' language, for the use of Larry and his officemates, allowing one to 'stick' different tools together by converting between their various data formats. It pulled together the best features of several languages: the powerful regular expressions from sed (the UNIX stream editor), the pattern-scanning language awk, and a few other languages and utilities. The syntax was further made up out of C, Pascal, Basic, UNIX shell languages, English and maybe a few other things along the way. Version 1 of Perl hit the world on December 18, 1987, and the language has been steadily developing since then, with contributions from innumerable people. Perl 2 expanded the regular expression support, while Perl 3 allowed Perl to deal with binary data. Perl 4 was released so that the Camel Book (see the Resources section at the end of this chapter) could refer to a new version of Perl. Perl 5 has seen some rather drastic changes in syntax and some pretty fantastic extensions to the language. Perl 5 is (more or less) backward compatible with previous versions of the language, but at the same time, makes a lot of the old code obsolete. -

Programming Perl

Programming Perl #!/usr/bin/perl −w use strict; $_=’ev al("seek\040D ATA,0, 0;");foreach(1..2) {<DATA>;}my @camel1hump;my$camel; my$Camel ;while( <DATA>){$_=sprintf("%−6 9s",$_);my@dromedary 1=split(//);if(defined($ _=<DATA>)){@camel1hum p=split(//);}while(@dromeda ry1){my$camel1hump=0 ;my$CAMEL=3;if(defined($_=shif t(@dromedary1 ))&&/\S/){$camel1hump+=1<<$CAMEL;} $CAMEL−−;if(d efined($_=shift(@dromedary1))&&/\S/){ $camel1hump+=1 <<$CAMEL;}$CAMEL−−;if(defined($_=shift( @camel1hump))&&/\S/){$camel1hump+=1<<$CAMEL;}$CAMEL−−;if( defined($_=shift(@camel1hump))&&/\S/){$camel1hump+=1<<$CAME L;;}$camel.=(split(//,"\040..m‘{/J\047\134}L^7FX"))[$camel1h ump];}$camel.="\n";}@camel1hump=split(/\n/,$camel);foreach(@ camel1hump){chomp;$Camel=$_;tr/LJF7\173\175‘\047/\061\062\063 45678/;tr/12345678/JL7F\175\173\047‘/;$_=reverse;print"$_\040 $Camel\n";}foreach(@camel1hump){chomp;$Camel=$_;y/LJF7\173\17 5‘\047/12345678/;tr/12345678/JL7F\175\173\047‘/;$_=reverse;p rint"\040$_$Camel\n";}#japh−Erudil’;;s;\s*;;g;;eval; eval ("seek\040DATA,0,0;");undef$/;$_=<DATA>;s$\s*$$g;( );;s ;^.*_;;;map{eval"print\"$_\"";}/.{4}/g; __DATA__ \124 \1 50\145\040\165\163\145\040\157\1 46\040\1 41\0 40\143\141 \155\145\1 54\040\1 51\155\ 141 \147\145\0 40\151\156 \040\141 \163\16 3\ 157\143\ 151\141\16 4\151\1 57\156 \040\167 \151\164\1 50\040\ 120\1 45\162\ 154\040\15 1\163\ 040\14 1\040\1 64\162\1 41\144 \145\ 155\14 1\162\ 153\04 0\157 \146\ 040\11 7\047\ 122\1 45\15 1\154\1 54\171 \040 \046\ 012\101\16 3\16 3\15 7\143\15 1\14 1\16 4\145\163 \054 \040 \111\156\14 3\056 \040\