Final Copy 2021 06 24 Foyer

Total Pages: 16

File Type: PDF, Size: 1020Kb

Recommended publications

A Taxonomy of Accelerator Architectures and Their

A taxonomy of accelerator architectures and their programming models. C. Caşcaval, S. Chatterjee, H. Franke, K. J. Gildea, P. Pattnaik. As the clock frequency of silicon chips is leveling off, the computer architecture community is looking for different solutions to continue application performance scaling. One such solution is the multicore approach, i.e., using multiple simple cores that enable higher performance than wide superscalar processors, provided that the workload can exploit the parallelism. Another emerging alternative is the use of customized designs (accelerators) at different levels within the system. These are specialized functional units integrated with the core, specialized cores, attached processors, or attached appliances. The design tradeoff is quite compelling because current processor chips have billions of transistors, but they cannot all be activated or switched at the same time at high frequencies. Specialized designs provide increased power efficiency but cannot be used as general-purpose compute engines. Therefore, architects trade area for power efficiency by placing in the design additional units that are known to be active at different times. The resulting system is a heterogeneous architecture, with the potential of specialized execution that accelerates different workloads. While designing and building such hardware systems is attractive, writing and porting software to a heterogeneous platform is even more challenging than parallelism for homogeneous multicore systems. In this paper, we propose a taxonomy that allows us to define classes of accelerators, with the goal of focusing on a small set of programming models for accelerators. We discuss several types of currently popular accelerators and identify challenges to exploiting such accelerators in current software stacks.

ARTIFICIAL INTELLIGENCE and ALGORITHMIC LIABILITY a Technology and Risk Engineering Perspective from Zurich Insurance Group and Microsoft Corp

White Paper. ARTIFICIAL INTELLIGENCE AND ALGORITHMIC LIABILITY: A technology and risk engineering perspective from Zurich Insurance Group and Microsoft Corp. July 2021

This paper introduces the growing notion of AI algorithmic risk, explores the drivers and implications of algorithmic liability, and provides practical guidance as to the successful mitigation of such risk to enable the ethical and responsible use of AI.

Authors: Zurich Insurance Group: Elisabeth Bechtold, Rui Manuel Melo Da Silva Ferreira. Microsoft Corp.: Rachel Azafrani, Christian Bucher, Franziska-Juliette Klebôn.

TABLE OF CONTENTS

1. Executive summary
2. Introduction
   A. What is algorithmic risk and why is it so complex? ‘Because the computer says so!’
   B. Microsoft and Zurich: Market-leading Cyber Security and Risk Expertise
3. Algorithmic risk: Intended or not, AI can foster discrimination
   A. Creating bias through intended negative externalities
   B. Bias as a result of unintended negative externalities
4. Data and design flaws as key triggers of algorithmic liability
   A. Model input phase
   B. Model design and development phase
   C. Model operation and output phase
   D. Potential complications can cloud liability, make remedies difficult
5. How to determine algorithmic liability?
   A. General legal approaches: Caution in a fast-changing field
   B. Challenges and limitations of existing legal approaches
   C. AI-specific best practice standards and emerging regulation
   D. New approaches to tackle algorithmic liability risk?
6. Principles and tools to manage algorithmic liability risk
   A. Tools and methodologies for responsible AI and data usage
   B. Governance and principles for responsible AI and data usage
   C.

Memory Management and Garbage Collection

Compilers: Memory Management (CSE 304/504)

Memory Management. Stack: Data on the stack (local variables in activation records) have a lifetime that coincides with the life of a procedure call. Memory for stack data is allocated on entry to procedures and de-allocated on return. Heap: Data on the heap have lifetimes that may differ from the life of a procedure call. Memory for heap data is allocated on demand (e.g. malloc, new, etc.) and released either manually (e.g. using free) or automatically (e.g. using a garbage collector).

Memory Allocation. Heap memory is divided into free and used. Free memory is kept in a data structure, usually a free list. When a new chunk of memory is needed, a chunk from the free list is returned (after marking it as used). When a chunk of memory is freed, it is added to the free list (after marking it as free).

Fragmentation. Free space is said to be fragmented when free chunks are not contiguous. Fragmentation is reduced by: maintaining different-sized free lists (e.g. free 8-byte cells, free 16-byte cells, etc.) and allocating out of the appropriate list. If a small chunk is not available (e.g. no free 8-byte cells), grab a larger chunk (say, a 32-byte chunk), subdivide it (into 4 smaller chunks) and allocate. When a small chunk is freed, check if it can be merged with adjacent areas to make a larger chunk.

Manual Memory Management. The programmer has full control over memory, with the responsibility to manage it well. Premature frees lead to dangling references. Overly conservative frees lead to memory leaks. With manual frees it is virtually impossible to ensure that a program is correct and secure.
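The size-segregated free lists described in the excerpt above (allocate from the matching list, subdivide a larger chunk when no small one is free, merge on free) can be illustrated with a minimal sketch. It is not taken from the slides: the class name `SegregatedFreeLists`, its methods, and the 64-byte toy heap are hypothetical, and it assumes buddy-style halving with power-of-two size classes rather than the four-way split used in the slide's example.

```python
class SegregatedFreeLists:
    """Toy allocator over addresses [0, heap_size); hypothetical illustration only.

    Assumes heap_size is min_size times a power of two, so every chunk
    size is a power-of-two multiple of min_size.
    """

    def __init__(self, heap_size=64, min_size=8):
        self.heap_size = heap_size
        self.min_size = min_size
        # One free list per size class: min_size, 2*min_size, ..., heap_size.
        self.free = {}
        size = min_size
        while size <= heap_size:
            self.free[size] = []
            size *= 2
        self.free[heap_size].append(0)  # initially one free chunk covering the heap
        self.used = {}                  # address -> size of each allocated chunk

    def _size_class(self, nbytes):
        # Round the request up to the nearest size class.
        size = self.min_size
        while size < nbytes:
            size *= 2
        return size

    def alloc(self, nbytes):
        want = self._size_class(nbytes)
        # Find the smallest size class >= want that has a free chunk.
        size = want
        while size <= self.heap_size and not self.free[size]:
            size *= 2
        if size > self.heap_size:
            return None  # no chunk large enough is free
        addr = self.free[size].pop()
        # Subdivide: keep the lower half, put the upper half on its free list.
        while size > want:
            size //= 2
            self.free[size].append(addr + size)
        self.used[addr] = want  # mark as used
        return addr

    def free_chunk(self, addr):
        size = self.used.pop(addr)
        # Merge with the adjacent "buddy" chunk for as long as it is also free.
        while size < self.heap_size:
            buddy = addr ^ size
            if buddy not in self.free[size]:
                break
            self.free[size].remove(buddy)
            addr = min(addr, buddy)
            size *= 2
        self.free[size].append(addr)


heap = SegregatedFreeLists(heap_size=64, min_size=8)
p = heap.alloc(8)    # splits the 64-byte chunk down to an 8-byte chunk
q = heap.alloc(8)    # served directly from the 8-byte free list
r = heap.alloc(16)   # served directly from the 16-byte free list
for chunk in (p, q, r):
    heap.free_chunk(chunk)  # freed chunks merge back into one 64-byte chunk
```

Halving keeps the merge check cheap, because the only candidate neighbour of a chunk is found by flipping one bit of its address; a real allocator would also need headers, alignment handling, and a faster membership test than a list scan.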

Project Snowflake: Non-Blocking Safe Manual Memory Management in .NET

Project Snowflake: Non-blocking Safe Manual Memory Management in .NET. Matthew Parkinson, Dimitrios Vytiniotis, Kapil Vaswani, Manuel Costa, Pantazis Deligiannis (Microsoft Research); Dylan McDermott (University of Cambridge); Aaron Blankstein, Jonathan Balkind (Princeton University). July 26, 2017.

Abstract. Garbage collection greatly improves programmer productivity and ensures memory safety. Manual memory management on the other hand often delivers better performance but is typically unsafe and can lead to system crashes or security vulnerabilities. We propose integrating safe manual memory management with garbage collection in the .NET runtime to get the best of both worlds. In our design, programmers can choose between allocating objects in the garbage collected heap or the manual heap. All existing applications run unmodified, and without any performance degradation, using the garbage collected heap. Our programming model for manual memory management is flexible: although objects in the manual heap can have a single owning pointer, we allow deallocation at any program point and concurrent sharing of these objects amongst all the threads in the program. Experimental results from our .NET CoreCLR implementation on real-world applications show substantial performance gains especially in multithreaded scenarios: up to 3x savings in peak working sets and 2x improvements in runtime.

1 Introduction. The importance of garbage collection (GC) in modern software cannot be overstated. GC greatly improves programmer productivity because it frees programmers from the burden of thinking about object lifetimes and freeing memory. Even more importantly, GC prevents temporal memory safety errors, i.e., uses of memory after it has been freed, which often lead to security breaches. Modern generational collectors, such as the .NET GC, deliver great throughput through a combination of fast thread-local bump allocation and cheap collection of young objects [63, 18, 61].

Practical Homomorphic Encryption and Cryptanalysis

Practical Homomorphic Encryption and Cryptanalysis. Dissertation for the degree of Doctor of Natural Sciences (Dr. rer. nat.) at the Faculty of Mathematics of Ruhr-Universität Bochum, submitted by Dipl. Ing. Matthias Minihold under the supervision of Prof. Dr. Alexander May. Bochum, April 2019. First reviewer: Prof. Dr. Alexander May. Second reviewer: Prof. Dr. Gregor Leander. Date of oral examination (Defense): 3rd May 2019.

Author's declaration. The work presented in this thesis is the result of original research carried out by the candidate, partly in collaboration with others, whilst enrolled in and carried out in accordance with the requirements of the Department of Mathematics at Ruhr-University Bochum as a candidate for the degree of doctor rerum naturalium (Dr. rer. nat.). Except where indicated by reference in the text, the work is the candidate's own work and has not been submitted for any other degree or award in any other university or educational establishment. Views expressed in this dissertation are those of the author. Place, Date, Signature.

Chapter 1: Abstract. My thesis on Practical Homomorphic Encryption and Cryptanalysis is dedicated to efficient homomorphic constructions, underlying primitives, and their practical security vetted by cryptanalytic methods. The widespread RSA cryptosystem serves as an early (partially) homomorphic example of a public-key encryption scheme, whose security reduction leads to problems believed to have lower solution complexity on average than those on which today's fully homomorphic encryption schemes are based. The reader goes on a journey towards designing a practical fully homomorphic encryption scheme, and one exemplary application of growing importance: privacy-preserving use of machine learning.

Techniques for Improving the Performance of Software Transactional Memory

Techniques for Improving the Performance of Software Transactional Memory. Srdan Stipić, Department of Computer Architecture, Universitat Politècnica de Catalunya. A thesis submitted for the degree of Doctor of Philosophy in Computer Architecture, July 2014. Advisor: Adrián Cristal. Co-Advisor: Osman S. Unsal. Tutor: Mateo Valero.

Academic year: ____. Doctoral thesis assessment record. Full name. Doctoral programme. Structural unit responsible for the programme. Decision of the examination committee: The committee appointed for this purpose having convened, the doctoral candidate presents the topic of his or her doctoral thesis entitled ____. After the presentation, and after the candidate has answered the questions raised by the full members of the committee, the committee awards the grade: FAIL / PASS / GOOD / EXCELLENT. (Full name and signature) Chair; (Full name and signature) Secretary; (Full name and signature) Member; (Full name and signature) Member; (Full name and signature) Member. ____, ____ of ____ of ____. The result of the count of the votes cast by the full members of the committee, carried out by the Doctoral School at the request of the Doctoral Committee of the UPC, awards the CUM LAUDE DISTINCTION: YES / NO. (Full name and signature) Chair of the Standing Committee of the Doctoral School; (Full name and signature) Secretary of the Standing Committee of the Doctoral School. Barcelona, ____ of ____ of ____.

To my parents.

Acknowledgements. I am thankful to a lot of people without whom I would not have been able to complete my PhD studies. While it is not possible to make an exhaustive list of names, I would like to mention a few.

Information Guide

INFORMATION GUIDE: 2020 RAVENS DRAFT GUIDE

ALL-PRO, NFL MVP: LAMAR JACKSON, 2018 - 1ST ROUND (32ND PICK). RONNIE STANLEY, 2016 - 1ST ROUND (6TH PICK). ALL-PRO: MARLON HUMPHREY, 2017 - 1ST ROUND (16TH PICK).

2020 BALTIMORE DRAFT PICKS: FIRST 28TH; SECOND 55TH (VIA ATL.); SECOND 60TH; THIRD 92ND; THIRD 106TH (COMP); FOURTH 129TH (VIA NE); FOURTH 143RD (COMP); FIFTH 170TH (VIA MIN.); SEVENTH 225TH (VIA NYJ).

“[The Draft] is the lifeblood of this organization, and we take it very seriously. We try to make it a science, we really do. But in the end, it’s probably more of an art than a science. There’s a lot of nuance involved. It’s a big-picture thing. It’s a lot of bits and pieces of information. It’s gut instinct. It’s experience, which I think is really, really important.”

• Ozzie Newsome, Executive Vice President (25th Season w/ Ravens)
• Joe Hortiz, Director of Player Personnel (23rd Season w/ Ravens)
• Eric DeCosta, Executive VP & General Manager (25th Season w/ Ravens, 2nd as EVP/GM)
• George Kokinis, Director of Player Personnel (24th Season w/ Ravens)
• Pat Moriarty, Sr. VP of Football Operations
• Brandon Berning, MW/SW Area Scout
• Bobby Vega, East Area Scout
• “Q” Attenoukon, Player Personnel Assistant
• Sarah Mallepalle, Player Personnel Analyst
• Vincent Newsome, Sr. Player Personnel Exec.
• David Blackburn, West Area Scout
• Kevin Weidl, SE/SW Area Scout
• Patrick McDonough, Player Personnel Assistant
• Derrick Yam, Quantitative Analyst
• Nick Matteo, Director of Football Admin.
• Joey Cleary, Northeast Area Scout
• Corey Frazier, Pro Scout
• Chas Stallard, Player Personnel Assistant
• David McDonald, Dwaune Jones, Patrick Williams, Jenn Werner: Dir.

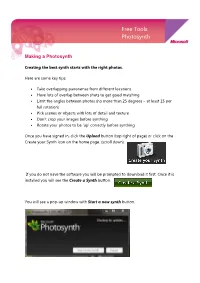

Free Tools Photosynth

Free Tools: Photosynth

Making a Photosynth. Creating the best synth starts with the right photos. Here are some key tips:

• Take overlapping panoramas from different locations
• Have lots of overlap between shots to get good matching
• Limit the angles between photos (no more than 25 degrees, i.e. at least 15 photos per full rotation)
• Pick scenes or objects with lots of detail and texture
• Don’t crop your images before synthing
• Rotate your photos to be ‘up’ correctly before synthing

Once you have signed in, click the Upload button (top right of the page), or scroll down and click the Create your Synth icon on the home page. If you do not have the software, you will be prompted to download it first. Once it is installed, you will see the Create a Synth button. You will see a pop-up window with a Start a new synth button. Give your synth a name, tags (descriptive words) and a description. Click Add Photos, browse to your files, and add them. Then click the Synth button at the bottom of the page. Photosynth will do the rest for you.

Making a Panorama. Many photosynths consist of photos shot from a single location. Our friends in Microsoft Research have developed a free, world-class panoramic image stitcher called Microsoft Image Composite Editor (ICE for short). ICE takes a set of overlapping photographs of a scene shot from a single camera location and creates a single high-resolution image. Photosynth now has support for uploading, exploring and viewing ICE panoramas alongside normal synths. Here’s how to create a panorama in ICE and upload it to Photosynth: 1.

Proceedings of the Linux Symposium

Proceedings of the Linux Symposium, Volume One. June 27th–30th, 2007, Ottawa, Ontario, Canada

Contents

• The Price of Safety: Evaluating IOMMU Performance (Ben-Yehuda, Xenidis, Mostrows, Rister, Bruemmer, Van Doorn)
• Linux on Cell Broadband Engine status update (Arnd Bergmann)
• Linux Kernel Debugging on Google-sized clusters (M. Bligh, M. Desnoyers, & R. Schultz)
• Ltrace Internals (Rodrigo Rubira Branco)
• Evaluating effects of cache memory compression on embedded systems (Anderson Briglia, Allan Bezerra, Leonid Moiseichuk, & Nitin Gupta)
• ACPI in Linux – Myths vs. Reality (Len Brown)
• Cool Hand Linux – Handheld Thermal Extensions (Len Brown)
• Asynchronous System Calls (Zach Brown)
• Frysk 1, Kernel 0? (Andrew Cagney)
• Keeping Kernel Performance from Regressions (T. Chen, L. Ananiev, and A. Tikhonov)
• Breaking the Chains—Using LinuxBIOS to Liberate Embedded x86 Processors (J. Crouse, M. Jones, & R. Minnich)
• GANESHA, a multi-usage with large cache NFSv4 server (P. Deniel, T. Leibovici, & J.-C. Lafoucrière)
• Why Virtualization Fragmentation Sucks (Justin M. Forbes)
• A New Network File System is Born: Comparison of SMB2, CIFS, and NFS (Steven French)
• Supporting the Allocation of Large Contiguous Regions of Memory (Mel Gorman)
• Kernel Scalability—Expanding the Horizon Beyond Fine Grain Locks (Corey Gough, Suresh Siddha, & Ken Chen)
• Kdump: Smarter, Easier, Trustier (Vivek Goyal)
• Using KVM to run Xen guests without Xen (R.A. Harper, A.N. Aliguori & M.D. Day)
• Djprobe—Kernel probing with the smallest overhead (M. Hiramatsu and S. Oshima)
• Desktop integration of Bluetooth (Marcel Holtmann)
• How virtualization makes power management different (Yu Ke)
• Ptrace, Utrace, Uprobes: Lightweight, Dynamic Tracing of User Apps (J.

WWW 2013 22nd International World Wide Web Conference

WWW 2013: 22nd International World Wide Web Conference

• General Chairs: Daniel Schwabe (PUC-Rio – Brazil), Virgílio Almeida (UFMG – Brazil), Hartmut Glaser (CGI.br – Brazil)
• Research Track: Ricardo Baeza-Yates (Yahoo! Labs – Spain & Chile), Sue Moon (KAIST – South Korea)
• Practice and Experience Track: Alejandro Jaimes (Yahoo! Labs – Spain), Haixun Wang (MSR – China)
• Developers Track: Denny Vrandečić (Wikimedia – Germany), Marcus Fontoura (Google – USA)
• Demos Track: Bernadette F. Lóscio (UFPE – Brazil), Irwin King (CUHK – Hong Kong)
• W3C Track: Marie-Claire Forgue (W3C Training, USA)
• Workshops Track: Alberto Laender (UFMG – Brazil), Les Carr (U. of Southampton – UK)
• Posters Track: Erik Wilde (EMC – USA), Fernanda Lima (UNB – Brazil)
• Tutorials Track: Bebo White (SLAC – USA), Maria Luiza M. Campos (UFRJ – Brazil)
• Industry Track: Marden S. Neubert (UOL – Brazil)
• Proceedings and Metadata Chair: Altigran Soares da Silva (UFAM – Brazil)

Local Arrangements Committee:

• Chair: Hartmut Glaser
• Executive Secretary: Vagner Diniz
• PCO Liaison: Adriana Góes, Caroline D’Avo, and Renato Costa
• Conference Organization Assistant: Selma Morais
• International Relations: Caroline Burle
• Technology Liaison: Reinaldo Ferraz
• UX Designer / Web Developer: Yasodara Córdova, Ariadne Mello
• Internet infrastructure: Marcelo Gardini, Felipe Agnelli Barbosa
• Administration: Ana Paula Conte, Maria de Lourdes Carvalho, Beatriz Iossi, Carla Christiny de Mello
• Legal Issues: Kelli Angelini
• Press Relations and Social Network: Everton T. Rodrigues, S2Publicom and EntreNós
• PCO: SKL Eventos

Mobilizing (Ourselves) for a Critical Digital Archaeology Adam Rabinowitz University of Texas at Austin, [email protected]

University of Wisconsin Milwaukee, UWM Digital Commons. Mobilizing the Past, Art History. 10-21-2016. 5.2. Response: Mobilizing (Ourselves) for a Critical Digital Archaeology. Adam Rabinowitz, University of Texas at Austin, [email protected]

Follow this and additional works at: https://dc.uwm.edu/arthist_mobilizingthepast
Part of the Classical Archaeology and Art History Commons.

Recommended Citation: Rabinowitz, Adam. “Response: Mobilizing (Ourselves) for a Critical Digital Archaeology.” In Mobilizing the Past for a Digital Future: The Potential of Digital Archaeology, edited by Erin Walcek Averett, Jody Michael Gordon, and Derek B. Counts, 493-518. Grand Forks, ND: The Digital Press at the University of North Dakota, 2016.

This Book is brought to you for free and open access by UWM Digital Commons. It has been accepted for inclusion in Mobilizing the Past by an authorized administrator of UWM Digital Commons. For more information, please contact [email protected].

MOBILIZING the PAST for a DIGITAL FUTURE: The Potential of Digital Archaeology. Edited by Erin Walcek Averett, Jody Michael Gordon, and Derek B. Counts. The Digital Press @ The University of North Dakota, Grand Forks.

Creative Commons License: This work is licensed under a Creative Commons By Attribution 4.0 International License. 2016 The Digital Press @ The University of North Dakota. Book Design: Daniel Coslett and William Caraher. Cover Design: Daniel Coslett. Library of Congress Control Number: 2016917316. The Digital Press at the University of North Dakota, Grand Forks, North Dakota. ISBN-13: 978-062790137. ISBN-10: 062790137. Version 1.1 (updated November 5, 2016).

Table of Contents: Preface & Acknowledgments; How to Use This Book; Abbreviations; Introduction: Mobile Computing in Archaeology: Exploring and Interpreting Current Practices (Jody Michael Gordon, Erin Walcek Averett, and Derek B.

There Are No Limits to Learning! Academic and High School

Brick and Click Libraries: An Academic Library Symposium. Northwest Missouri State University, Friday, November 5, 2010.

Managing Editors: Frank Baudino, Connie Jo Ury, Sarah G. Park. Co-Editor: Carolyn Johnson, Vicki Wainscott, Pat Wyatt. Technical Editor: Kathy Ferguson. Cover Design: Sean Callahan. Northwest Missouri State University, Maryville, Missouri.

Brick & Click Libraries Team

• Director of Libraries: Leslie Galbreath
• Co-Coordinators: Carolyn Johnson and Kathy Ferguson
• Executive Secretary & Check-in Assistant: Beverly Ruckman
• Proposal Reviewers: Frank Baudino, Sara Duff, Kathy Ferguson, Hong Gyu Han, Lisa Jennings, Carolyn Johnson, Sarah G. Park, Connie Jo Ury, Vicki Wainscott and Pat Wyatt
• Technology Coordinators: Sarah G. Park and Hong Gyu Han
• Union & Food Coordinator: Pat Wyatt
• Web Page Editors: Lori Mardis, Sarah G. Park and Vicki Wainscott
• Graphic Designer: Sean Callahan

Table of Contents

• Quick & Dirty Library Promotions That Really Work! Eric Jennings, Reference & Instruction Librarian; Kathryn Tvaruzka, Education Reference Librarian; University of Wisconsin
• Leveraging Technology, Improving Service: Streamlining Student Billing Procedures. Colleen S. Harris, Head of Access Services; University of Tennessee – Chattanooga
• Powerful Partnerships & Great Opportunities: Promoting Archival Resources and Optimizing Outreach to Public and K12 Community. Lea Worcester, Public Services Librarian; Evelyn Barker, Instruction & Information Literacy Librarian; University of Texas at Arlington
• Mobile Patrons: Better Services on the Go. Vincci Kwong,