XR Association Developers Guide: An Industry-Wide Collaboration for Better XR

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Using Smartphone Technology to Simulate Human Behaviour Within an Indoor Environment

Department of Computer Science, BSc (Hons) Computer Science (Software Engineering), Academic Year 2016-2017. Using Smartphone Technology to Simulate Human Behaviour Within an Indoor Environment. Michael Beaseley, 1407118. A report submitted in partial fulfilment of the requirements for the degree of Bachelor of Science. Brunel University, Department of Computer Science, Uxbridge, Middlesex UB8 3PH, United Kingdom. T: +44 1895 203397, F: +44 (0) 1895 251686.

Abstract: The purpose of this project is to research, design and develop an Android application that enables a user to track and monitor their behaviour within an indoor environment. Apart from tracking an individual, the project also researches other smartphone technologies, such as NFC and Bluetooth. GPS is a great way of tracking an individual outdoors but is not very good for indoor navigation. There are multiple examples of software applications for business, especially within healthcare, where they are used for asset tracking and staff locating, but there is a real need for an Android application that the public can use for indoor navigation within a complex building. The approach used for this project is the waterfall model, which serves as a mechanism to facilitate the development of the indoor navigation Android application. The author shows why this model helps in developing the application, and evaluates the approach taken and the result of the project to determine whether the aim and objectives have been met.

Quick Guide for Mobile Devices

EON Reality AVR Global Classroom Challenge: Quick Guide for Mobile Devices.

Contents: 1) Creating an Account for AVR Platform through Creator AVR Mobile App; 2) Introduction to Creator AVR Mobile App; 3) Creating a 3D Lesson: Step-by-Step Instructions; 4) Submission Steps for Competition.

1) Creating an Account for AVR Platform through Creator AVR Mobile App

Mobile Device Installation: To get started creating an account on AVR Platform, first download the Creator AVR App. Either scan the QR code below with your phone, or search for "Creator AVR" in the Apple App Store or Google Play Store. Look out for the Creator AVR logo as seen in the screenshots.

Signing Up: Next, follow the steps below on the signup process for your AVR Platform Account.

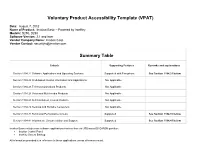

Voluntary Product Accessibility Template (VPAT)

Voluntary Product Accessibility Template (VPAT). Date: August 7, 2012. Name of Product: Imation Basic – Powered by IronKey. Models: S250, D250. Software Version: 3.1 and later. Vendor Company Name: Imation Corp. Vendor Contact: [email protected]

Summary Table

| Criteria | Supporting Features | Remarks and explanations |
| --- | --- | --- |
| Section 1194.21 Software Applications and Operating Systems | Supported with Exceptions | See Section 1194.21 below |
| Section 1194.22 Web-based Internet Information and Applications | Not Applicable | |
| Section 1194.23 Telecommunications Products | Not Applicable | |
| Section 1194.24 Video and Multi-media Products | Not Applicable | |
| Section 1194.25 Self-Contained, Closed Products | Not Applicable | |
| Section 1194.26 Desktop and Portable Computers | Not Applicable | |
| Section 1194.31 Functional Performance Criteria | Supported | See Section 1194.31 below |
| Section 1194.41 Information, Documentation and Support | Supported | See Section 1194.41 below |

Imation Basic includes two software applications that run from its USB-based DVD-ROM partition: Imation Control Panel and IronKey Secure Backup. All information provided is in reference to these applications unless otherwise noted.

Section 1194.21 Software Applications and Operating Systems - Detail

| Criteria | Supporting Features | Remarks and explanations |
| --- | --- | --- |
| (a) When software is designed to run on a system that has a keyboard, product functions shall be executable from a keyboard where the function itself or the result of performing a function can be discerned textually. | Supported | |

(b) Applications

MA900 Operator's Guide

C-989-100-12 (1). Multi-Application Cell Sorter Operator's Guide, LE-MA900 Series, Software Version 3.0.

Chapters: Chapter 1 Overview; Chapter 2 Preparation; Chapter 3 Basic Operation; Chapter 4 Configuring Experiments; Chapter 5 Analysis; Chapter 6 Sorting; Chapter 7 Window Description; Chapter 8 Maintenance; Appendix A Operating Principles; Appendix B Miscellaneous; Index.

Table of Contents: Using the PDF Manual; Model Name and Function Table; Components and Documentation. Chapter 1 Overview: Main Features; Cell Sorter Block Diagram; System Configuration; Name and Function of Parts (Front Panel; Internal View (Front); Internal View (Side); Rear Panel; Fluidics Cart; Sorting Chip; Main Window).

The FEPS V 1.1 Tutorial

FEPS Tutorial: Student Workbook. Land Management Tool Training Package. Fire and Environmental Applications Team, USFS - PNW Research Station, Pacific Wildland Fire Sciences Laboratory, 400 North 34th Street, Suite 201, Seattle, Washington 98103.

Table of Contents: Introduction; Instructions for Installing FEPS Software; FEPS Tutorial; Welcome to the FEPS v 1.1 Tutorial. Part 1: Background (What is FEPS?; History of EPM and FEPS; Potential Applications). Part 2: FEPS Basics (Basic Steps to Using FEPS; Installing FEPS; Upgrading and Reinstalling FEPS; Starting FEPS).

User Guide for the District of Colorado

Case Management / Electronic Case Files: Filing a Notice of Appeal and Pay.gov. User Guide for the District of Colorado, February 2012.

Table of Contents: Notice of Appeal and Pay.gov Overview; Notice of Appeal Event; Case Number Entry; Verification Screen; Attaching the Notice of Appeal; Select the Filer; Notice of Appeal and Linkage Message Screen; Linkage Prompt Screen; Selecting the Document to Link; Pay.gov Prompt; Notice of Appeal Fee Notice Screen; Pay.gov Loading Screen Message; Pay.gov Payment Screen; Credit Card Payment Example; Authorization Screen; Final Verification Screen; Final Review Screen; Notice of

Work from Home

Let's Work: A Step-by-Step Guide for Working from Home for Rush.

Table of Contents: Let's Get Started (Computer; Phone; Internet). Before You Leave Rush (Step 1: Move your files to the H: Drive; Step 2: Forward your phone; Step 3: Register for Duo Security). While You're Working at Home (Getting connected; Connecting to Wi-Fi on your laptop/desktop computer; Start working).

How to Download and Install Sharpdesk

How to download and install Sharpdesk. First, put the Sharpdesk CD into your PC's CD drive. If you cannot find the CD, or your computer does not have a CD drive, you can download the software online using this link: https://drive.google.com/file/d/1jCu4LAOSkhBSuuz53LA3oh6wc4lT8jze/view?usp=sharing

The autoplay window should pop up when you put the CD into the CD drive. Click on "Run setup.exe". Choose the language you want to use, then click "OK". On the first two screens you see, click "Next". On the third screen, click "I Agree" and then click "Next". On the following screen, enter your chosen user name, company name and the serial number. The serial number can be found on the outside of the Sharpdesk installation guide book that came with your machine. All letters in it, such as "A", are upper case. On the next three screens, click "Next". Click "Install". You will now see a loading screen, which may take several minutes.

Double-click the Sharpdesk icon on the desktop. When the first window pops up, click "Cancel", then click "Finish" and double-click the Sharpdesk icon again. When the next window pops up, click "OK". At the top of the screen, click "Tools", hover the arrow over "Product Configuration", then click "Network Scanner Tool". On this screen, click "New", and the following window will appear as seen on the. On this screen, type "[your name] – desktop" in the profile and description blanks.

Center for Instructional Innovation

Center for Instructional Innovation, www.brandman.edu/cii. Adobe Connect: Solutions to Common Problems. These tips are provided to help solve the most common types of problems encountered in Adobe Connect. If you have any suggestions for other tips, please send them to [email protected].

Adobe Connect room appears to freeze. Solution: Quit Adobe Connect and log back in to refresh the connection.

Sound cuts out entirely. Cause: Adobe Connect failed to establish a strong VoIP connection, or the connection has been disrupted. NOTE: If you have the phone line turned on and no one has called in, the software is set to cut out audio after 15 minutes to avoid unnecessary phone charges to the university. To avoid this issue, only turn on the phone line if you have someone calling in. Solution: First, try turning off the audio completely. Wait 10 seconds and then turn it back on to reestablish the connection. If no one is calling in, uncheck the box next to the phone line. Second, if turning off the audio does not help, close the Adobe Connect session and your browser, then reopen both to rejoin the session.

Audio is cutting in and out. Cause: This is a connection speed issue. Solution: Use a wired internet connection (plug directly into your router instead of using wireless), or work as close to the wireless router as you can. Make sure you have set your connection speed in Adobe Connect to the type of connection you are using. You can also turn on the phone line to call into the meeting.

STAR-PAN™ II Powering Soldier Connectivity and C4ISR Mission Success QUICK-START USER’S GUIDE

Mission-Critical Interconnect Solutions. JTAC-TOUGH™ STAR-PAN™ II: Powering Soldier Connectivity and C4ISR Mission Success. Quick-Start User's Guide, November 2020. STAR-PAN II: The Battle-Tested, Multiport USB Hub, Cable, and Power Management Solution.

Contents: Powering-up STAR-PAN II (Battery Power); Connecting STAR-PAN II to the EUD/Host; Launching STAR-PAN Android Remote (SPAR) Power Management App (SPAR Loading Screen); SPAR Basic Operation; Home Screen Operations (System Status; Battery Power Status; Enabling and Disabling Devices; Enabled-Device Power Consumption); Detailed Home Screen Operations (Power Usage Stats; Accessing Detailed Data; Changing Port Names); Menu Options (Settings; Quitting App).

Powering-up STAR-PAN II Connectorized Hub, Main Battery Power. Components: General-Purpose cable (808-047), STAR-PAN II (808-194 connectorized hub), Main Battery with Adapter. Connect one end of the General-Purpose cable to the battery adapter and the other end to either of the two "BATTERY" ports on the STAR-PAN II hub (located on the right-hand side). Red indicators on cable and hub connectors will align.

Powering-up STAR-PAN II Cabled Hub, Main Battery Power. Components: General-Purpose cable (808-047), STAR-PAN II (808-057-1 cabled hub), Main Battery with Adapter. Connect one end of the General-Purpose cable to the battery adapter and the other end to either of the two "BATT" cables on the STAR-PAN II hub (located on the right-hand side). Red indicators on cable and hub connectors will align. Note that only the "BATT 1" port has SMBus capability.

ZEISS ZEN (Blue Edition)

Quick Guide: ZEISS ZEN (blue edition). Original Manual. Carl Zeiss Microscopy GmbH, Carl-Zeiss-Promenade 10, 07745 Jena, Germany. [email protected], www.zeiss.com/microscopy. Carl Zeiss Microscopy GmbH, ZEISS Group, Kistlerhofstr. 75, 81379 München. Document Name: ZEISS ZEN (blue edition) Quick Guide. Revision: 2. Language: en-US. Effective from: 04/2021.

© 2021. Without the prior written consent of ZEISS, this document or any part of it must neither be translated nor reproduced or transmitted in any form or by any means, including electronic or mechanic methods, by photocopying, recording or by any information or filing system. The right to make backup copies for archiving purposes shall remain unaffected thereby. Any violations may be prosecuted as copyright infringements. The use of general descriptive names, registered names, trademarks, etc. in this document does not imply that such names are exempt from the relevant intellectual property laws and regulations and therefore free for general use. This shall also apply if this is not specifically referred to. Software programs shall entirely remain the property of ZEISS. No program or subsequent upgrade thereof may be disclosed to any third party, copied or reproduced in any other form without the prior written consent of ZEISS, even if these copies or reproductions are destined for internal use at the customer's only, the only exception being one single backup copy for archiving purposes. ZEISS reserves the right to make modifications to this document without notice.

Contents: 1 Welcome; 2 Starting Software; 3 User Interface.

Simoa Semi-Quantitative SARS-CoV-2 IgG Antibody Test

Simoa Semi-Quantitative SARS-CoV-2 IgG Antibody Test. Instructions for Use. 103750, April 2021. Quanterix Corporation, 900 Middlesex Turnpike, Billerica, MA 01821. Customer Support: 1-877-786-8749, [email protected]. For use under an Emergency Use Authorization (EUA) only. Prescription use only. For in vitro diagnostic use only.

Read this package insert prior to use. Package insert instructions must be carefully followed. Reliability of assay results cannot be assured if there are deviations from the instructions in this package insert. The clinical applicability of a quantitative or semi-quantitative result is currently unknown and cannot be interpreted as an indication or degree of immunity nor protection from reinfection, nor compared to other SARS-CoV-2 antibody assays.

NAME: Simoa™ Semi-Quantitative SARS-CoV-2 IgG Antibody Test.

INTENDED USE: The Simoa Semi-Quantitative SARS-CoV-2 IgG Antibody Test is an automated paramagnetic microbead-based immunoassay intended for qualitative and semi-quantitative detection of IgG antibodies to SARS-CoV-2 in human serum

(Simoa) technology.[2] In the first step, sample is drawn from a sample tube or microtiter plate by the instrument pipettor and mixed with COVID-19 spike protein coated paramagnetic capture beads in a reaction cuvette. IgG antibodies in the sample that are specific to COVID-19 spike protein are bound by the capture beads. After an incubation, capture beads are collected with a magnet and washed. Biotinylated anti-human IgG detector antibodies are then mixed with the capture beads, and the detector antibodies bind to the captured sample IgG. Following a second wash, a conjugate of streptavidin-β-galactosidase (SBG) is mixed with the capture beads. SBG binds to the biotinylated detector antibodies, resulting in enzyme labeling of captured sample IgG.