Architectural Requirements for Autonomous Human-Like Agents

Aaron Sloman, School of Computer Science, The University of Birmingham, UK

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Read the Games Transport Plan

GAMES TRANSPORT PLAN

Contents: Foreword; Introduction; Purpose of Document; Policy and Strategy Background; The Games Birmingham 2022; The Transport Strategy; Transport during the Games; Games Family Transportation; Creating a Transport Legacy for All; Consultation and Engagement; Appendix A; Appendix B.

1. FOREWORD

The West Midlands is the largest urban area outside Greater London with a population of over 4 million people. The region has a rich history and a diverse economy with specialisms in creative industries, finance and manufacturing. In recent years, the West Midlands has been going through a renaissance, with significant investment in housing, transport and jobs. The region has real ambition to play its part on the world stage to tackle climate change and has already set challenging targets.

With the eyes of the world on Birmingham, our key priority will be to ensure that the region is always kept moving and that every athlete and spectator arrives at their event in plenty of time. Our aim is that the Games are fully inclusive, accessible and as sustainable as possible. We are investing in measures to get as many people walking, cycling or using public transport as their preferred and available means of transport, both to the event and in the longer term as a positive legacy from these Games. This includes rebuilding confidence in sustainable travel and encouraging as many people as possible to take active travel forms of transport (such as walking and cycling) to increase their levels of physical activity and wellbeing as we emerge from Covid-19 restrictions.

Economic-Impact-Of-University-Of-Birmingham-Full-Report.Pdf

The impact of the University of Birmingham. A report for the University of Birmingham, April 2013.

Contents: Executive Summary; 1 Introduction; 2 The University as an educator; 3 The University as an employer; 4 The economic impact of the University; 5 The University as a research hub; 6 The University as an international gateway; 7 The University as a neighbour; Bibliography.

Executive Summary

The University as an educator... The University of Birmingham draws students from all over the UK and the rest of the world to study at its Edgbaston campus. In 2011/12, its 27,800 students represented over 150 nationalities. The attraction of the University led over 20,700 students to move to or remain in Birmingham to study. At a regional level, it is estimated that the University attracted 22,400 people to either move to,

Birmingham Commonwealth Games 2022: Cultural Programme

Birmingham Commonwealth Games 2022: Cultural Programme

Chair: Alan Heap, Purple Monster. Christina Boxer, Warwick District Council. Tim Hodgson & Louisa Davies, Senior Producers (Cultural Programme & Live Sites) for Birmingham 2022.

Christina Boxer, Warwick District Council. Bowls & Para Bowls: Warwick District Venue. 2022 Commonwealth Games Project: Introduction. Spark Symposium, 14.02.2020. Enhanced environment, physical activity & wellbeing. Maximising opportunities to showcase local enterprise, culture, tourism & events. Birmingham 2022 presentation, www.warwickdc.gov.uk, 26/01/2018.

Venues – A Regional Showcase

Lawn Bowls & Para Bowls
o Matches on 9 days of competition
o Minimum 2 sessions a day
o 5,000 – 6,000 visitors to the District daily: spectators, competitors, officials, volunteers, media
o 240 lawn bowls competitors (2018)
o Integrated Para Bowls
o 28 nations (2018)

WDC Commonwealth Games Project Objectives (Jan 2020)
o Successful CG2022 Bowls & Para Bowls Competition
o Improved Bowls Venue, Participation & Diversity
o Enhanced Wider Victoria Park Facilities, Access & Riverside Links
o Raised Awareness of the Wellbeing Benefits of an Active Lifestyle
o Maximised Opportunities for Local Enterprise, Culture, Tourism and Showcasing WDC’s Reputation for Events Delivery

April 2017 – March 2023

Louisa Davies and Tim Hodgson, Senior Producers, Cultural Programme & Live Sites, Birmingham 2022. Birmingham 2022 Cultural Programme: Introduction. Spark, 14 February 2020.

INTRODUCTION

British Early Career Mathematicians' Colloquium 2020 Abstract Booklet

British Early Career Mathematicians' Colloquium 2020 Abstract Booklet, 14th - 15th July 2020

Plenary Speakers (Pure Mathematics and Applied Mathematics): Jonathan Hickman (University of Edinburgh), Adam Townsend (Imperial College London), Liana Yepremyan (The London School of Economics and Political Science), Gabriella Mosca (University of Zürich), Jaroslav Fowkes (University of Oxford), Anitha Thillaisundaram (University of Lincoln).

Contact: Website: http://web.mat.bham.ac.uk/BYMC/BECMC20/ E-mail: [email protected]

Organising Committee: Constantin Bilz, Alexander Brune, Matthew Clowe, Joseph Hyde, Amarja Kathapurkar (Chair), Cara Neal, Euan Smithers. With special thanks to the University of Birmingham, MAGIC and Olivia Renshaw.

Tuesday 14th July 2020
9.30-9.50 Welcome session
10.00-10.50 On convergence of Fourier integrals, Jonathan Hickman (Plenary Speaker); Microscale to macroscale in suspension mechanics, Adam Townsend (Plenary Speaker)
10.55-11.30 Group networking session
11.35-12.00 Strong components of random digraphs from the configuration model: the barely subcritical regime, Matthew Coulson; The evolution of a three dimensional microbubble in non-Newtonian fluid, Eoin O'Brien; Blocks of finite groups of tame type, Norman MacGregor
12.10-12.35 Large trees in tournaments, Alistair Benford; Donovan's conjecture and the classification of blocks, Cesare Giulio Ardito; Order from disorder: chaos, turbulence and recurrent flow, Edward Redfern
Lunch break
14.00-14.50 Ryser's conjecture and more; MorphoMecanX: mixing (plant) biology with physics, mathematics

Rising to Real World Challenges – from the Lab to Changing Lives

The Universities of the West Midlands. Rising to real world challenges – from the lab to changing lives.

How the Universities of the West Midlands are coming together to realise the grand challenges facing the UK and the world

Introduction

Universities are economic engines contributing £2.9 billion GVA to the West Midlands and creating 55,000 jobs (directly and indirectly) across all skills levels. While many are recognised for their impact in talent and innovation generated through teaching and research, it can be difficult to understand the link between the work happening in their institutions and how it will affect everyday lives. The Universities of the West Midlands – Aston University, Birmingham City University, Coventry University, University of Birmingham, University of Warwick and the University of Wolverhampton – have come together to demonstrate how they are making their mark by rising to the grand challenges set out by the Government. Addressing these challenges will improve people’s lives and influence productivity. The Universities are providing life-changing solutions to make us healthier, wealthier and more productive. Their research and development reaches far beyond the laboratory and lecture theatre, creating real-world solutions to the grand challenges. Each university makes a unique contribution to specialist sectors within the West Midlands’ economy. It is their collective strength that makes the region distinctive in its ability to accelerate business growth and innovation.

The West Midlands Local Industrial Strategy

Building on the strengths and research specialisms of its universities, the West Midlands is set to unveil a trailblazing Local Industrial Strategy.

The Beginners Guide to Brum Making the Most of Your Time in Birmingham

International Development Department (IDD), School of Government and Society

The Beginners Guide to Brum: Making the most of your time in Birmingham

Birmingham City

Birmingham is a vibrant city that has lots to see and do for almost everyone. The heart of the city offers more than 1000 shops, great hotels, the tastes of many of the world’s cuisines, performing arts, world-class museum collections and various sporting arenas. It’s a great place to take a break from the books mid-day or enjoy an evening out, and getting around is very simple. The Bullring is a very popular destination for shoppers, with over 140 stores spread across three levels, and is located right across from New Street train station. On certain days you can browse the indoor market and the Rag Market behind the Bullring, where you can find collections of vibrant fabrics and a variety of fresh produce. Located on the canal side is the Mailbox, with many restaurants and designer stores to choose from. Many of the most popular retail stores and chain restaurants can also be found on High Street and Corporation Street. Brindley Place is definitely worth a visit as it is home to some of the best eating in the city, with a beautiful view of the canal.

Birmingham University

The University of Birmingham has been leading the way in research and education since the 1900s. Birmingham is a great place to study, with lots to offer its students: brilliant sporting facilities, a wide assortment of social events, a global reputation for teaching, and a diverse student body from over 150 different countries.

Report of the RIBA Full Visiting Board to Birmingham City University

Royal Institute of British Architects
Report of the RIBA Full Visiting Board to Birmingham City University
Date of visiting board: 18-19 October 2018
Confirmed by RIBA Education Committee: 13 February

1 Details of institution hosting course/s (report part A): Birmingham City University, Birmingham Institute of Art & Design, The Parkside Building, 5 Cardigan Street, Birmingham B4 7BD
2 Head of Architecture Group: Head of Department, Professor Kevin Singh; Deputy Head of Department, Hannah Vowles
3 Course/s offered for validation: BA (Hons) Architecture, Part 1; Master of Architecture (M Arch), Part 2; Postgraduate Diploma in Architectural Practice, Part 3
4 Course leader/s: BA (Hons) Architecture, Victoria Farrow; Master of Architecture (M Arch), Michael Dring; Postgraduate Diploma in Architectural Practice, Ian Shepherd
5 Awarding body: Birmingham City University
6 The visiting board: Matt Gaskin, academic / chair; Jane McAllister, academic; Toby Blackman, academic; Negar Mihanyar, practitioner; Lucia Medina, student; Sophie Bailey, RIBA validation manager
7 Procedures and criteria for the visit: The visiting board was carried out under the RIBA procedures for validation and validation criteria for UK and international courses and examinations in architecture (published July 2011, and effective from September 2011); this document is available at www.architecture.com.
8 Recommendation of the Visiting Board: On 13 February 2019 the RIBA Education Committee confirmed that the following courses and qualifications are awarded full validation BA (Hons) Architecture Part 1 Master

Birmingham University Campus Tour Booklet

Campus tour booklet

I loved the campus – the atmosphere and vibe were brilliant and I could really see myself fitting in there. (Campus visitor)

Challenge what you know. Student Recruitment

Welcome

Welcome to the University of Birmingham. This guide has been produced to provide information about the University and its facilities for visitors who wish to conduct their own ‘self-guided’ tour around the University campus. A map of the campus is provided on the inside back cover and the following pages provide information about the sights you will see on your tour.

The University

The University of Birmingham has a long history of academic excellence and innovation. We were the first civic university, where students from all religions and backgrounds were accepted on an equal basis. Our spirit of innovation continues today with groundbreaking research in areas ranging from cancer studies to nanotechnology. Our students receive a first-class academic experience with us during their studies, as well as becoming equipped for life beyond university.

Today you will see some of the attractions of our campus, and you may also visit the city centre of which we are rightly proud. There are great social and recreational opportunities for students. The University, with its own campus train station, is only two stops from the city centre. Birmingham has an illustrious history of industry and invention, and continues to attract significant business investment today. The city centre has had over £9 billion spent on regeneration over the past few years and is home to the Bullring, one of Europe’s largest shopping centres.

Winterbourne Events Programme

www.winterbourne.org.uk

Only minutes from Birmingham city centre, Winterbourne is a hidden gem – home to beautiful antiques and over 6,000 plant species from around the world. Wander along the woodland walk, stroll through the hazelnut tunnel, cross the 1930s Japanese Bridge or simply soak up the tranquillity of this perfectly English Edwardian home.

Offering colour and interest throughout the year, the Grade II listed garden is home to a beautiful walled garden, striking colour themed borders, glasshouses, original sandstone rock garden and stream side planting. The botanic garden also displays plants from around the globe with collections from China, North and South America and the alpine areas of the world.

An exhibition room highlights the importance of Winterbourne within the community via interactive exhibits, which tell the stories of the house and its occupants through the people who lived and worked here over the years. In the Study, learn more about John Nettlefold's pioneering work as the chair of the first housing committee in Birmingham and discover how he revolutionised the living conditions of many of the city’s residents during the early 20th century.

traditional home would have looked during Edwardian times.

Teacher Education at the University of Birmingham

SCHOOL OF EDUCATION: TEACHER EDUCATION AT THE UNIVERSITY OF BIRMINGHAM

INSPIRING LEARNING, TRANSFORMING LIVES. @UOBTEACH WWW.BIRMINGHAM.AC.UK/ITE

Welcome to the School of Education

Our Primary and Secondary initial teacher education pathways in Birmingham prepare top graduates for a career inspiring learning and transforming lives. Our highly acclaimed programmes have been rated ‘Outstanding’ by Ofsted and offer the supportive professional and academic education needed to enable both recent graduates and career changers to succeed. Ranked 5th in the Times Good University Guide 2020, the School of Education has a long-standing reputation as an international centre of excellence for teaching and research. You will be taught in a school which is doing pioneering research in diverse specialisms from character education and race inequities, to innovative technology for children on the autism spectrum. The School of Education is continually expanding and developing, with new provision for teacher education at the University of Birmingham Dubai. The School also benefits from multiple links with the University of Birmingham School.

CONTENTS: Becoming a teacher; School Direct; Life as a student teacher; Entry requirements and fees; Careers and support; Be part of something special; Studying in Birmingham; What to do next.

Becoming a teacher

Consecutive inspections by Ofsted have graded our teacher education courses

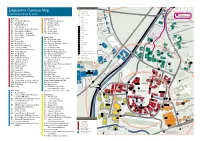

Edgbaston Campus Map (PDF)

Edgbaston Campus Map

Key: Building name; Information point; Level access entrance; Footpath; Steps; Visitors car park; Hospital; 24 hour security; Bus stops; Library; Museum; Sport facilities; First aid; Food and drink; Retail; Toilets; ATM; Canal bridge; Sculpture trail; Rail; Lift; Electric Vehicle Charge Point.

Index to buildings by zone

Red Zone: R0 The Harding Building; R1 Law Building; R2 Frankland Building; R3 Hills Building; R4 Aston Webb – Lapworth Museum; R5 Aston Webb – B Block; R6 Aston Webb – Great Hall; R7 Aston Webb – Student Hub; R8 Physics West; R9 Nuffield; R10 Physics East; R11 Medical Physics; R12 Bramall Music Building; R13 Poynting Building; R14 Barber Institute of Fine Arts; R15 Watson Building

Orange Zone: O1 The Guild of Students; O2 St Francis Hall; O3 University House; O4 Ash House; O5 Beech House; O6 Cedar House; O7 Sport & Fitness

Green Zone: G1 32 Pritchatts Road; G2 31 Pritchatts Road; G3 European Research Institute; G4 3 Elms Road; G5 Computer Centre; G6 Metallurgy and Materials

Sustainability at Snow Hill Wharf

SNOW HILL WHARF

A stunning new collection of canal-side apartments from St Joseph. Moments from the bustling city centre, this is truly a place where you can shine.

Contents: Your place to shine; World-class facilities; City living; All walks of life; Your stage to shine.

Nearby: HS2 (Coming 2026); Birmingham New Street Station; Colmore Business District; Paradise Circus & Victoria Square; Jewellery Quarter; The Bullring; Snow Hill Station; Brindley Place.

A CITY ON TOP

Welcome to Snow Hill Wharf

On a quiet stretch of the canal in the heart of Britain’s booming second city, Snow Hill Wharf is a new collection of stylish apartments from St Joseph, part of the Berkeley Group. Located in the iconic ‘Gun Quarter’ and less than a 5-minute walk to Snow Hill Station, this central area of the city is all set to benefit from the arrival of HS2 in Birmingham.

YOUR PLACE TO SHINE

Live life to the full

With every home at Snow Hill Wharf built to the Berkeley Group’s exacting standards, life here includes exclusive access to a 24-hour concierge service, on-site residents’ cinema and gym plus a series of beautiful podium gardens that offer tranquil communal spaces. Just moments from the bustling city centre, this is the place where you can live life to the full.