BABS Project Book 1

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Game Information

GAME INFORMATION DOUBLE-A SOUTHERN LEAGUE AFFILIATE OF THE SEATTLE MARINERS For Information contact Chris Harris at [email protected] 4 Fun Place • Jackson, TN 38305 • (Phone) 731.988.5299 • (Fax) 731.988.5246 GAME #3 • FIRST HALF #3 • SERIES #1 • ROAD GAME #3 Jackson Generals (0-2) at Jacksonville Suns (2-0) RHP Hector Noesi (0-0, 0.00) vs. LHP Adam Conley (0-0, 0.00) Saturday, April 6, 2013 • 6:05 p.m. • Baseball Grounds of Jacksonville • Jacksonville, Fla UPCOMING GAMES AND PROBABLE PITCHERS: ALL TIMES CDT Today’s game broadcasted on 96.5 ESPN RADIO, Date Time Opponent Pitcher jacksongeneralsbaseball.com & TuneIn Radio App. April 7 2:05 at Jacksonville RHP Chance Ruffin (0-0, 0.00) vs. RHP Matthew Neil (0-0, 0.00) April 8 11:05 at Jacksonville LHP James Gillheeney (0-0, 0.00) vs. RHP Sam Dyson (0-0, 0.00) April 10 7:05 vs. Montgomery RHP Taijuan Walker (0-1, 7.20) vs. RHP Matt Buschman (0-1, 12.27) April 11 7:05 vs. Montgomery LHP Roenis Elias (0-0, 0.00) vs. RHP Albert Suarez (0-0, 0.00) TODAY’S GAME: After dropping the first two games of the series by the score of 5-3, Jackson looks to get its first win of - BY THE NUMBERS - the season tonight in game three of the five-game set in Jacksonville. RHP Hector Noesi makes his first Double-A start since 2010 tonight. Noesi has appeared in 52 Major League games over the past two seasons, including 18 starts for Seattle last season. -

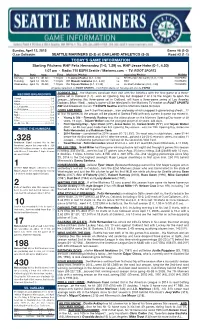

04.12.15 Game Notes.Indd

Sunday, April 12, 2015 Game #6 (2-3) O.co Coliseum SEATTLE MARINERS (2-3) at OAKLAND ATHLETICS (3-3) Road #3 (1-1) TODAY’S GAME INFORMATION Starting Pitchers: RHP Felix Hernandez (1-0, 1.29) vs. RHP Jesse Hahn (0-1, 4.50) 1:07 pm • Radio: 710 ESPN Seattle / Mariners.com • TV: ROOT SPORTS Day Date Opp. Time Mariners Pitcher Opposing Pitcher RADIO Monday April 13 @LAD 7:10 pm LH James Paxton (0-1, 3.00) vs. RH Brandon McCarthy (1-0, 7.20) 710 ESPN Tuesday April 14 @LAD 7:10 pm RH Hisashi Iwakuma (0-1, 6.00) vs. TBA 710 ESPN Wednesday April 15 @LAD 7:10 pm RH Taijuan Walker (0-1, 24.30) vs. LH Brett Anderson (0-0, 4.50) 710 ESPN All games televised on ROOT SPORTS…Civil Rights Game on Tuesday will also be ESPN2 …the Mariners conclude their visit with the Athletics with the fi nal game of a three- RECORD BREAKDOWN TODAY’S TILT game set in Oakland (1-1)…won on Opening Day but dropped 2 of 3 to the Angels to open the Overall .............................................. 2-3 season…following this three-game set in Oakland, will have a three-game series at Los Angeles Home ................................................ 1-2 ROOT SPORTS Road ................................................. 1-1 Dodgers (Mon- Wed)…today’s game will be televised in the Mariners TV market on vs. LH Starter.................................... 0-2 NW and broadcast live on 710 ESPN Seattle and the Mariners Radio Network. vs. RH Starter ...................................2-1 ODDS AND ENDS…are 2-3 on the season…won yesterday which snapped 3-game losing streak…12 Day .................................................. -

BASE 1 Miguel Cabrera Detroit Tigers® 2

BASE 1 Miguel Cabrera Detroit Tigers® 2 Michael Brantley Cleveland Indians® 3 Yasmani Grandal Los Angeles Dodgers® 4 Byron Buxton Minnesota Twins® RC 5 Daniel Murphy New York Mets® 6 Clay Buchholz Boston Red Sox® 7 James Loney Tampa Bay Rays™ 8 Dee Gordon Miami Marlins® 9 Khris Davis Milwaukee Brewers™ 10 Trevor Rosenthal St. Louis Cardinals® 11 Jered Weaver Angels® 12 Lucas Duda New York Mets® 13 James Shields San Diego Padres™ 14 Jacob Lindgren New York Yankees® RC 15 Michael Bourn Cleveland Indians® 16 Yunel Escobar Washington Nationals® 17 George Springer Houston Astros® 18 Ryan Howard Philadelphia Phillies® 19 Justin Upton San Diego Padres™ 20 Zach Britton Baltimore Orioles® 21 Santiago Casilla San Francisco Giants® 22 Max Scherzer Washington Nationals® 23 Carlos Carrasco Cleveland Indians® 24 Angel Pagan San Francisco Giants® 25 Wade Miley Boston Red Sox® 26 Ryan Braun Milwaukee Brewers™ 27 Carlos Gonzalez Colorado Rockies™ 28 Chase Utley Philadelphia Phillies® 29 Brandon Moss Cleveland Indians® 30 Juan Lagares New York Mets® 31 David Robertson Chicago White Sox® 32 Carlos Santana Cleveland Indians® 33 Ender Inciarte Arizona Diamondbacks® 34 Jimmy Rollins Los Angeles Dodgers® 35 J.D. Martinez Detroit Tigers® 36 Yadier Molina St. Louis Cardinals® 37 Ryan Zimmerman Washington Nationals® 38 Stephen Strasburg Washington Nationals® 39 Torii Hunter Minnesota Twins® 40 Anibal Sanchez Detroit Tigers® 41 Michael Cuddyer New York Mets® 42 Jorge De La Rosa Colorado Rockies™ 43 Shane Greene Detroit Tigers® 44 John Lackey St. Louis Cardinals® 45 Hyun-Jin Ryu Los Angeles Dodgers® 46 Lance Lynn St. Louis Cardinals® 47 David Freese Angels® 48 Russell Martin Toronto Blue Jays® 49 Jose Iglesias Detroit Tigers® 50 Pablo Sandoval Boston Red Sox® 51 Will Middlebrooks San Diego Padres™ 52 Joe Mauer Minnesota Twins® 53 Chris Archer Tampa Bay Rays™ 54 Starling Marte Pittsburgh Pirates® 55 Jason Heyward St. -

2018 Topps Heritage High Number Baseball BASE 501 Jordan

2018 Topps Heritage High Number Baseball BASE 501 Jordan Zimmermann Detroit Tigers® 502 Juan Soto Washington Nationals® Rookie 503 Franchy Cordero San Diego Padres™ 504 Ketel Marte Arizona Diamondbacks® 505 Mallex Smith Tampa Bay Rays™ 506 Braxton Lee Miami Marlins® Rookie 507 Jacob Barnes Milwaukee Brewers™ Rookie 508 Pedro Alvarez Baltimore Orioles® 509 Alex Blandino Cincinnati Reds® Rookie 510 Pablo Sandoval San Francisco Giants® 511 Scott Kingery Philadelphia Phillies® Rookie 512 Yoshihisa Hirano Arizona Diamondbacks® Rookie 513 Jaime Garcia Toronto Blue Jays® 514 Matt Duffy Tampa Bay Rays™ 515 Hunter Strickland San Francisco Giants® 516 Hector Velazquez Boston Red Sox® 517 Jonathan Lucroy Oakland Athletics™ 518 John Axford Toronto Blue Jays® 519 Eduardo Nunez Boston Red Sox® 520 Tony Cingrani Los Angeles Dodgers® 521 Seth Lugo New York Mets® 522 Chris Iannetta Colorado Rockies™ 523 Danny Farquhar Chicago White Sox® 524 Tyler Beede San Francisco Giants® Rookie 525 Daniel Mengden Oakland Athletics™ 526 Steven Souza Jr. Arizona Diamondbacks® 527 Corey Dickerson Pittsburgh Pirates® 528 Matt Szczur San Diego Padres™ 529 Mitch Garver Minnesota Twins® Rookie 530 Trayce Thompson Chicago White Sox® 531 Blake Swihart Boston Red Sox® 532 J.D. Davis Houston Astros® Rookie 533 Trevor Cahill Oakland Athletics™ 534 Niko Goodrum Detroit Tigers® Rookie 535 Pedro Severino Washington Nationals® 536 Asdrubal Cabrera New York Mets® 537 Matt Adams Washington Nationals® 538 Eduardo Escobar Minnesota Twins® 539 Jakob Junis Kansas City Royals® 540 David Bote Chicago Cubs® Rookie 541 Freddy Peralta Milwaukee Brewers™ Rookie 542 Marco Gonzales Seattle Mariners™ 543 Ryan Yarbrough Tampa Bay Rays™ Rookie 544 Fernando Rodney Minnesota Twins® 545 Preston Tucker Atlanta Braves™ 546 Tommy La Stella Chicago Cubs® 547 Clayton Richard San Diego Padres™ 548 Dixon Machado Detroit Tigers® 549 Jose Martinez St. -

Southland Cross Country Southland Crosouthlandss Country BASEBALL

SOUTHLAND CROSS COUNTRY SOUTHLAND CROSOUTHLANDSS COUNTRY BASEBALL Regular Season Conference Champions 2009..............................................................................................Texas State 2010..............................................................................................Texas State 1964...................................................................................................... Trinity 2011..............................................................................................Texas State 1965...................................................................................................... Trinity 2012..................................................................................Sam Houston State 1966...................................................................................................... Trinity 2013..................................................................................Sam Houston State 1967........................................................................................ Arkansas State 2014..................................................................................Sam Houston State 1968........................................................................................ Arkansas State 2015........................................................................... Southeastern Louisiana 1969...................................................................................................... Trinity 2016..................................................................................Sam -

Boston Red Sox Spring Training Game Notes

BOSTON RED SOX SPRING TRAINING GAME NOTES Boston Red Sox (4-3) at Pittsburgh Pirates (2-5-1) Wednesday, March 9, 2016 • 1:05 p.m. • McKechnie Field • Bradenton, FL GRAPEFRUIT STATUS: Today the Red Sox continue their FARRELL’S RETURN: John Farrell returns for his 4th sea- MEDIA GUIDE: The 2016 Boston Red Grapefruit League slate with a road game against the Pi- son as Red Sox Manger in 2016...He missed from August rates...This is the 8th contest (4-3 thus far) in a stretch of 14 through the end of the 2015 regular season on medical Sox Media Guide is available for down- 31 Grapefruit League games over 30 days, which began leave after being diagnosed with stage 1 non-Hodgkin’s load at http://pressroom.redsox.com. Wednesday and continues through March 31...The Sox Burkitt lymphoma...In his absence, Bench Coach Torey Members of the media may see a mem- conclude their exhibition schedule with 2 additional games Lovullo served as interim manager. ber of the Red Sox Media Relations De- against Toronto in Montreal from April 1-2. partment to request a print copy. RUBEN ADDED: The only change to the Red Sox’ major The Sox break for split-squad action on March 18 (vs. league coaching staff this offseason was the hiring of Ru- MIN, at TB) and March 30 (at PIT, at TB)...Boston’s IN CAMP: Boston has 56 players in ben Amaro Jr. as first base coach in October...The upcoming only off-day from today until the last of 2 games in Major League Spring Training Camp, season will be the first coaching baseball at any level for Montreal comes on March 23. -

JUNE 22Nd SYRACUSE (29-41) at PAWTUCKET (35-36) 7:05 Pm

JUNE 22nd SYRACUSE (29-41) at PAWTUCKET (35-36) 7:05 pm Syracuse Chiefs RHP Brady Dragmire (0-1, 14.40) vs. Pawtucket Red Sox RHP William Cuevas (4-4, 3.70) We Finally Welcome the ‘Cuse to McCoy - The Pawtucket Red Sox host the Syracuse Chiefs (AAA-Washington) - as Syracuse finally makes their first appearance at McCoy Stadium this season (the Chiefs the last team from the IL North to visit) – when the two long-time rivals open a 3-game weekend series this evening at 7:05 pm. Just over a week ago the PawSox swept a 3-game series in Syracuse from June 11-13 by scores of 3-1, 5-3, and 5-1. The teams also met in Syracuse from May 10-13 with the Chiefs winning 3 of 4 during that series. Their next two series against each other will both come here at McCoy – this weekend and then for a 4-game set just after the All-Star break from July 12-15. This Homestand - This 7-game homestand (3-1 thus far after the Sox took 3 of 4 from Buffalo this week) will continue with another exciting weekend on tap at McCoy to conclude Pawtucket’s second of two homestands here in June. Tonight at 7:05 pm (live on YurView on COX throughout Rhode Island and Spectrum 3 in Worcester) will be Pedro Martinez replica jersey night, tomorrow (Saturday) at 6:15 pm will feature Beatles post-game fireworks (and a pre-game free youth clinic from 2:00 – 3:15 pm), and then Sunday at 1:35 pm will be highlighted by a tribute to the Armed Services before the game and Run the Bases after. -

Oklahoma Redhawks (WL Record: 74-70)

SEATTLE MARINERS 2013 MINOR LEAGUE SEASON IN REVIEW Chris Taylor Player of the Year Taijuan Walker Starting Pitcher of the Year Carson Smith Relief Pitcher of the Year Abraham Almonte Heart and Soul Award 2013 SEATTLE MARINERS MINOR LEAGUE REVIEW AFFILIATES UPDATE: The Mariners 9 minor league affiliates wrapped up the 2013 season combining for a 461-433 (.516) record. The organization finished 3rd among all Major League farm On the Farm: systems in wins (461), while finishing 10th in winning percentage (.516). Tacoma (AAA) .............................76-68 Jackson (AA) ...............................62-73 OPERATION PLAYOFFS: Four of the Mariners 9 affiliates advanced to the playoffs in 2013. Pulaski High Desert (High-A) ...................64-76 (Appalachian League) and VSL Mariners (Venezuelan League) won their league titles. Clinton Clinton (A) ...................................67-72 (Midwest League) and Everett (Northwest League) advanced to the playoffs, but lost in the first round. Everett (short-season A) ..............44-32 Pulaski (rookie) ............................41-27 YOUTH MOVEMENT: The Mariners had 11 minor league players make their Major League debut this AZL Mariners (rookie) ..................22-32 season, including 9 homegrown players (OF Abraham Almonte, C Brandon Bantz, INF Nick DSL Mariners ..............................37-33 Franklin, LHP Bobby LaFromboise, RHP Brandon Maurer, INF Brad Miller, RHP Yoervis VSL Mariners ...............................48-20 Medina, LHP James Paxton, C Jesus Sucre and RHP Taijuan Walker). The 11 debuts were tied Totals 461-433 for 4th-most in club history, and 2nd-most by an American League club in 2013 (HOU – 15). Best MiLB AL Team Records MARINERS MILB AWARDS: Infielder Chris Taylor (High Desert/Jackson) was named Player of the Year, while right-hander Taijuan Walker (Jackson/Tacoma/Seattle) was named the Starting Pitcher 1. -

WEDNESDAY, APRIL 6, 2016 Vs. TORONTO BLUE JAYS LH Matt Moore (3-4, 5.43 in 2015) Vs

WEDNESDAY, APRIL 6, 2016 vs. TORONTO BLUE JAYS LH Matt Moore (3-4, 5.43 in 2015) vs. LH J.A. Happ (11-8, 3.61 in 2015) First Pitch: 1:10 p.m. | Location: Tropicana Field, St. Petersburg, Fla. | TV: Fox Sports Sun | Radio: WDAE 620 AM Game No.: 4 (1-2) | Home Game No.: 4 (1-2) | All-Time Game No.: 2,918 (1,353-1,564) | All-Time Home Game No.: 1,463 (745-717) THAT’S A FIRST—Last night the Rays won their first game of the season in 39 games are against Toronto (through May 18), then they don’t meet again unprecedented fashion when Jose Bautista’s attempt to break up a double until Aug 8-10 in Toronto…the Rays 166 wins vs. the Blue Jays are their play in the 9th inning was challenged by the Rays and overturned as a viola- most against an opponent and their all-time mark of 166-151 (.524) is their tion of the new slide rule [6.01(j), that the runner’s actions hindered and im- 2nd best against an AL opponent behind their 15-6 record vs. Houston (AL peded 2B Logan Forsythe]…it was also determined he did not engage in a only)…the Rays are 80-52 since 2009…Tampa Bay is 94-65 at home vs. bona-fide slide, as he did not attempt to remain on the base…it was the first the Jays, including 54-25 since Sep 8, 2007. game ever to end due to an overturn of the new slide rule…after the over- Ê In 2015, the Rays went 10-9 vs. -

Tonight's Game Information

Saturday, May 16, 2015 Game #36 (16-19) Safeco Field BOSTON ROYAL GIANTS (17-19) vs. SEATTLE STEELHEADS (16-19) Home #20 (10-9) TONIGHT’S GAME INFORMATION Starting Pitchers: RHP Rick Porcello (3-2, 4.50) vs. RHP Felix Hernandez (6-0, 1.85) 6:10 pm • Radio: 710 ESPN Seattle / Mariners.com • TV: ROOT SPORTS NW / MLB Network Day Date Opp. Time (PT) Mariners Pitcher Opposing Pitcher RADIO Sunday 5/17 BOS 1:10 pm LH James Paxton (1-2, 4.31) vs. RH Steven Wright (1-0, 4.22) 710 ESPN/97.3 FM Monday 5/18 Off Day in Baltimore All games televised on ROOT SPORTS with home games on Spanish Audio via TV SAP TONIGHT’S TILT…the Mariners continue a 9-game homestand tonight with the third (1-1) of four RECORD BREAKDOWN games vs. the Boston Red Sox…are 5-2 on the homestand, sweeping Oakland (3-0) and splitting Overall .......................................... 16-19 a pair with San Diego (1-1)…following this series, Seattle will travel to Baltimore (3 games), Toronto Home .............................................. 10-9 (3 games) and Tampa Bay (3 games)…tonight’s game will be televised in the Mariners TV market on Road ............................................... 6-10 vs. LH Starter.................................... 3-5 ROOT SPORTS NW and broadcast live on 710 ESPN Seattle and the Mariners Radio Network. vs. RH Starter ............................... 13-14 ODDS AND ENDS…won last night to snap mini 2-game losing streak, and have won 5 of last 7 Day ................................................... 5-2 games…season-high 4-game winning streak May 8-12…are 9-8 in last 17 games…15-15 record Night ............................................ -

05.27.17 Game Notes.Indd

Saturday, May 27, 2017 Game #50 (21-28) Fenway Park SEATTLE MARINERS (21-28) at BOSTON RED SOX (26-21) Road #28 (8-19) TODAY’S GAME INFORMATION Starting Pitchers: RHP Rob Whalen (1st ‘17 GS) at LHP Brian Johnson (1-0, 7.20) 4:05pm ET / 1:05pm PT • Radio: 710 ESPN / Mariners.com • TV: ROOT SPORTS Day Date Opp. Time (PT) Mariners Pitcher Opposing Pitcher RADIO Sunday May 28 at BOS 10:35 am RH Christian Bergman (1-2, 6.30) at RH Rick Porcello (3-5, 4.35) 710 ESPN Monday May 29 at COL 12:10 pm RH Sam Gaviglio (0-1,1.38) at RH Tyler Chatwood (4-6, 4.50) 710 ESPN Tuesday May 30 at COL 4:10 pm LH Ariel Miranda (4-2, 4.22) at LH Tyler Anderson (3-4, 5.40) 710 ESPN All games televised on ROOT SPORTS with home games featuring Spanish Audio via TV SAP TODAY’S TILT…the Mariners, who’ve split their last two games following a season-high 5-game losing ON THIS DATE streak, play the 2nd (0-1) of 3 games at the Boston Red Sox…trip began in Washington (1-2) and also 1981: Mariners third baseman Lenny includes a 2-game set at Colorado (Mon.-Tue.)…Seattle was 3-4 on its just-completed homestand against Randle got down on his hands and ROOT SPORTS NW knees to blow a bunt foul against the Oakland (2-1) and Chicago (1-3)...today’s game will be televised live in HD on and Kansas City Royals at the Kingdome… broadcast live on 710 ESPN Seattle and the Mariners Radio Network. -

04.27.15 Game Notes.Indd

Monday, April 27, 2015 Game #19 (7-11) Globe Life Park SEATTLE MARINERS (7-11) at TEXAS RANGERS (7-11) Road #7 (2-4) TONIGHT’S GAME INFORMATION Starting Pitchers: RHP Taijuan Walker (0-2, 10.66) vs. RHP Yovani Gallardo (2-2, 3.92) 5:05 pm PT/7:05 pm CT • Radio: 710 ESPN Seattle / Mariners.com • TV: ROOT SPORTS Day Date Opp. Time (PT) Mariners Pitcher Opposing Pitcher RADIO Tuesday April 28 @TEX 5:05 pm LH J.A. Happ (1-1, 2.61) vs. LH Ross Detwiler (0-2, 10.95) 710 ESPN Wednesday April 29 @TEX 5:05 pm RH Felix Hernandez (3-0, 1.61) vs. LH Wandy Rodriguez (1-0, 1.80) 710 ESPN All games televised on ROOT SPORTS TONIGHT’S TILT…the Mariners open a 10-game, 3-city road trip tonight with the fi rst of three games RECORD BREAKDOWN vs. the Texas Rangers…also travel to Houston (4 G) and Anaheim (3 G) during the second road trip of Overall ............................................ 7-11 the season…went 4-5 on just completed homestand vs. Texas (2-1), Houston (1-2) and Minnesota (1- Home ................................................ 5-7 2)…tonight’s game will be televised in the Mariners TV market on ROOT SPORTS NW and broadcast Road ................................................. 2-4 vs. LH Starter.................................... 1-4 live on 710 ESPN Seattle and the Mariners Radio Network. vs. RH Starter ...................................6-7 ODDS AND ENDS…dropped 2 straight and are 4-6 over last 10 games…have won consecutive Day ................................................... 4-1 games 3 times (last: April 22 & 24), but have yet to string together 3 consecutive wins…7-11 on the Night ..............................................