Information Retrieval and Aggregation to Dialogue For

Recommended publications

March 2015 Seven Seas.Indd

SEVEN SEAS. Monster Musume, vol. 6. Story & Art by OKAYADO. A New York Times manga bestseller. (Cover in development.)

Monster Musume is an ongoing manga series that presents the classic harem comedy with a fantastical twist: the female cast of characters that tempts our male hero is comprised of exotic and enticing supernatural creatures like lamias, centaurs, and harpies! Monster Musume will appeal to fans of supernatural comedies like Inukami! and harem comedies like Love Hina. Each volume is lavishly illustrated and includes a color insert.

What do world governments do when they learn that fantastical beings are not merely fiction, but flesh and blood, not to mention feather, hoof, and fang? Why, they create new regulations, of course! “The Interspecies Cultural Exchange Accord” ensures that these once-mythical creatures assimilate into human society...or else! When hapless human twenty-something Kurusu Kimihito becomes an involuntary “volunteer” in the government homestay program for monster girls, his world is turned upside down. A reptilian lamia named Miia is sent to live with him, and it is Kimihito’s job to tend to her every need and make sure she integrates into his everyday life. While cold-blooded Miia is so sexy she makes Kimihito’s blood boil with desire, the penalties for interspecies breeding are dire. Even worse, when a buxom centaur girl named Centorea and a scantily clad harpy named Papi move into Kimihito’s house, what’s a full-blooded young man with raging hormones to do?!

MARKETING PLANS: • Promotion at Seven Seas website gomanga.com • Promotion on twitter.com/gomanga and facebook.com/gomanga

ALSO AVAILABLE: Monster Musume, vol. 5 (11/14), ISBN: 978-1-626921-06-1, $12.99 ($14.99 CAN) • Monster Musume, vol.

Imōto-Moe: Sexualized Relationships Between Brothers and Sisters in Japanese Animation

Imōto-Moe: Sexualized Relationships Between Brothers and Sisters in Japanese Animation. Tuomas Sibakov. Master’s Thesis, East Asian Studies, Faculty of Humanities, University of Helsinki, November 2020.

Faculty: Faculty of Humanities. Degree Programme: East Asian Studies. Study Track: East Asian Studies. Author: Tuomas Valtteri Sibakov. Title: Imōto-Moe: Sexualized Relationships Between Brothers and Sisters in Japanese Animation. Level: Master’s Thesis. Month and year: November 2020. Number of pages: 83.

Abstract: In this work I examine how imōto-moe, a recent trend in Japanese animation and manga in which incestuous connotations and relationships between brothers and sisters are shown, contributes to the sexualization of girls in Japanese society. This is done by analysing, through visual analysis, four different series from the 2010s in which incest is a major theme. The study concludes that although the series can show sexualization of drawn underage girls, the works should not be read as if they posit either real or fictional little sisters as sexual targets. Instead, the analysis suggests that, following the narrative, the works should be read as depicting fictional underage girls expressing pure feelings and sexuality, unspoiled by adult corruption.

To understand moe, it is necessary to understand the history of Japanese animation. Many of the genres, themes, and styles in manga and anime are due to Tezuka Osamu, the “god of manga” and “god of animation”. From the 1950s on, Tezuka was influenced by Disney and other Western animators of the time.

11Eyes Achannel Accel World Acchi Kocchi Ah! My Goddess Air Gear Air

11eyes AChannel Accel World Acchi Kocchi Ah! My Goddess Air Gear Air Master Amaenaideyo Angel Beats Angelic Layer Another Ao No Exorcist Appleseed XIII Aquarion Arakawa Under The Bridge Argento Soma Asobi ni Iku yo Astarotte no Omocha Asu no Yoichi Asura Cryin' B Gata H Kei Baka to Test Bakemonogatari (and sequels) Baki the Grappler Bakugan Bamboo Blade Banner of Stars Basquash BASToF Syndrome Battle Girls: Time Paradox Beelzebub BenTo Betterman Big O Binbougami ga Black Blood Brothers Black Cat Black Lagoon Blassreiter Blood Lad Blood+ Bludgeoning Angel Dokurochan Blue Drop Bobobo Boku wa Tomodachi Sukunai Brave 10 Btooom Burst Angel Busou Renkin Busou Shinki C3 Campione Cardfight Vanguard Casshern Sins Cat Girl Nuku Nuku Chaos;Head Chobits Chrome Shelled Regios Chuunibyou demo Koi ga Shitai Clannad Claymore Code Geass Cowboy Bebop Coyote Ragtime Show Cuticle Tantei Inaba DFrag Dakara Boku wa, H ga Dekinai Dan Doh Dance in the Vampire Bund Danganronpa Danshi Koukousei no Nichijou Daphne in the Brilliant Blue Darker Than Black Date A Live Deadman Wonderland DearS Death Note Dennou Coil Denpa Onna to Seishun Otoko Densetsu no Yuusha no Densetsu Desert Punk Detroit Metal City Devil May Cry Devil Survivor 2 Diabolik Lovers Disgaea Dna2 Dokkoida Dog Days Dororon EnmaKun Meeramera Ebiten Eden of the East Elemental Gelade Elfen Lied Eureka 7 Eureka 7 AO Excel Saga Eyeshield 21 Fight Ippatsu! JuudenChan Fooly Cooly Fruits Basket Full Metal Alchemist Full Metal Panic Futari Milky Holmes GaRei Zero Gatchaman Crowds Genshiken Getbackers Ghost

Strike Witches: 1937 Fuso Sea Incident, Vol

SEVEN SEAS. Alice in the Country of Joker: The Nightmare Trilogy, vol. 1. Story by QuinRose; Art by Yobu. (Cover not final.)

A three-book tale that focuses on Alice’s romance with the incubus of bad dreams! Seven Seas is pleased to present Alice in the Country of Joker: The Nightmare Trilogy, a three-volume story that highlights the mysterious character of Nightmare Gottschalk. Alice in the Country of Joker: The Nightmare Trilogy chronicles the further adventures of Alice as she goes deeper down the rabbit hole. Like the other New York Times bestselling books in the Alice in the Country of Clover series, this new volume is an oversized edition that features impressive artwork and color pinups.

Alice has been living in the Clover Tower for a while now, and with the coming of April Season, she is able to see Julius again and has met with Joker. Nightmare isn’t very pleased and comes up with ways to keep Alice distracted with all sorts of mini-adventures. While Alice can’t get away from Nightmare’s penetrating gaze, she does not fear him; she may even love him. What will happen when Alice wakes up?

MARKETING PLANS: • Promotion on Seven Seas website gomanga.com • Promotion on twitter.com/gomanga and facebook.com/gomanga

ALSO AVAILABLE: Alice in the Country of Clover: Cheshire Cat Waltz, vol. 4 (3/13), ISBN: 978-1-937867-10-2, $13.99 ($15.99 CAN) • Alice in the Country of Clover: Cheshire Cat Waltz, vol.

Praise for Alice in the Country of Hearts: “It may be impossible to beat Lewis Carroll at his own game,

Aachi Wa Ssipak Afro Samurai Afro Samurai Resurrection Air Air Gear

1001 Nights Burn Up! Excess Dragon Ball Z Movies 3 Busou Renkin Druaga no Tou: the Aegis of Uruk Byousoku 5 Centimeter Druaga no Tou: the Sword of Uruk AA! Megami-sama (2005) Durarara!! Aachi wa Ssipak Dwaejiui Wang Afro Samurai C Afro Samurai Resurrection Canaan Air Card Captor Sakura Edens Bowy Air Gear Casshern Sins El Cazador de la Bruja Akira Chaos;Head Elfen Lied Angel Beats! Chihayafuru Erementar Gerad Animatrix, The Chii's Sweet Home Evangelion Ano Natsu de Matteru Chii's Sweet Home: Atarashii Evangelion Shin Gekijouban: Ha Ao no Exorcist O'uchi Evangelion Shin Gekijouban: Jo Appleseed +(2004) Chobits Appleseed Saga Ex Machina Choujuushin Gravion Argento Soma Choujuushin Gravion Zwei Fate/Stay Night Aria the Animation Chrno Crusade Fate/Stay Night: Unlimited Blade Asobi ni Iku yo! +Ova Chuunibyou demo Koi ga Shitai! Works Ayakashi: Samurai Horror Tales Clannad Figure 17: Tsubasa & Hikaru Azumanga Daioh Clannad After Story Final Fantasy Claymore Final Fantasy Unlimited Code Geass Hangyaku no Lelouch Final Fantasy VII: Advent Children B Gata H Kei Code Geass Hangyaku no Lelouch Final Fantasy: The Spirits Within Baccano! R2 Freedom Baka to Test to Shoukanjuu Colorful Fruits Basket Bakemonogatari Cossette no Shouzou Full Metal Panic! Bakuman. Cowboy Bebop Full Metal Panic? Fumoffu + TSR Bakumatsu Kikansetsu Coyote Ragtime Show Furi Kuri Irohanihoheto Cyber City Oedo 808 Fushigi Yuugi Bakuretsu Tenshi +Ova Bamboo Blade Bartender D.Gray-man Gad Guard Basilisk: Kouga Ninpou Chou D.N. Angel Gakuen Mokushiroku: High School Beck Dance in

John Smith List 17/03/2014 506 1. [K] 2. 11Eyes 3. a Channel

John Smith List, 17/03/2014 (506)

1. [K] 2. 11Eyes 3. A channel - the animation + oav 4. Abenobashi - il quartiere commerciale di magia 5. Accel World 6. Acchi Kocchi 7. Aika r-16 8. Air - Tv 9. Air Gear 10. Aishiteruze Baby 11. Akane-Iro ni Somaru Saka 12. Akikan! + Oav 13. Amaenaide yo! 14. Amaenaide yo! Katsu! 15. Angel Beats! 16. Ano Hana 17. Ano natsu de Matteru 18. Another 19. Ao no Exorcist 20. Aquarion Evol 21. Arakawa Under the Bridge 22. Arakawa Under the Bridge x Bridge 23. Aria the Natural 24. Asatte no Houkou 25. Asobi ni ikuyo! 26. Astarotte no omocha 27. Asu no Yoichi! 28. Asura Cryin 29. Asura Cryin 2

51. Bokurano 52. Brave 10 53. BTOOOM! 54. Bungaku Shoujo - Gekijouban + Memoire 55. Bungaku shoujo: Kyou no oyatsu - Hatsukoi 56. C - Control - The Money of Soul and Possibility Control 57. C^3 58. Campione 59. Canvas - Motif of Sepia 60. Canvas 2 - Niji Iro no Sketch 61. Chaos:Head 62. Chibits - Sumomo & Kotoko todokeru 63. Chihayafuru 64. Chobits 65. Chokotto sister 66. Chou Henshin Cosprayers 67. Choujigen Game Neptune The Animation (Hyperdimension) 68. Chuu Bra!! 69. Chuunibyou demo Koi ga Shitai! + Special 70. Chuunibyou demo Koi ga Shitai! Ren + Special 71. Clannad 72. Clannad - after story 73. Clannad Oav 74. club to death angel dokuro-chan 75. Code Geass - Akito the Exiled 76. Code Geass - Lelouch of the rebellion 77. Code Geass - Lelouch of the Rebellion R2 78. Code Geass Oav - nunally // black rebellion 79. Code-E 80. Colorful 81. Coopelion 82. Copihan 83.

Copy of Anime Licensing Information

| Title | Owner | Rating | Length | ANN score |
|---|---|---|---|---|
| .hack//G.U. Trilogy | Bandai | 13UP | Movie | 7.58655 |
| .hack//Legend of the Twilight | Bandai | 13UP | 12 ep. | 6.43177 |
| .hack//ROOTS | Bandai | 13UP | 26 ep. | 6.60439 |
| .hack//SIGN | Bandai | 13UP | 26 ep. | 6.9994 |
| 0091 | Funimation | TVMA | | |
| 10 Tokyo Warriors | MediaBlasters | 13UP | 6 ep. | 5.03647 |
| 2009 Lost Memories | ADV | R | | |
| 2009 Lost Memories/Yesterday | ADV | R | | |
| 3 x 3 Eyes | Geneon | 16UP | | |
| 801 TTS Airbats | ADV | 15UP | | |
| A Tree of Palme | ADV | TV14 | Movie | 6.72217 |
| Abarashi Family | ADV | MA | | |
| AD Police (TV) | ADV | 15UP | | |
| AD Police Files | Animeigo | 17UP | | |
| Adventures of the MiniGoddess | Geneon | 13UP | 48 ep/7min each | 6.48196 |
| Afro Samurai | Funimation | TVMA | | |
| Afro Samurai: Resurrection | Funimation | TVMA | | |
| Agent Aika | Central Park Media | 16UP | | |
| Ah! My Buddha | MediaBlasters | 13UP | 13 ep. | 6.28279 |
| Ah! My Goddess | Geneon | 13UP | 5 ep. | 7.52072 |
| Ah! My Goddess | MediaBlasters | 13UP | 26 ep. | 7.58773 |
| Ah! My Goddess 2: Flights of Fancy | Funimation | TVPG | 24 ep. | 7.76708 |
| Ai Yori Aoshi | Geneon | 13UP | 24 ep. | 7.25091 |
| Ai Yori Aoshi ~Enishi~ | Geneon | 13UP | 13 ep. | 7.14424 |
| Aika R16 Virgin Mission | Bandai | 16UP | | |
| Air | Funimation | 14UP | Movie | 7.4069 |
| Air | Funimation | TV14 | 13 ep. | 7.99849 |
| Air Gear | Funimation | TVMA | | |
| Akira | Geneon | R | | |
| Alien Nine | Central Park Media | 13UP | 4 ep. | 6.85277 |
| All Purpose Cultural Cat Girl Nuku Nuku Dash! | ADV | 15UP | | |
| All Purpose Cultural Cat Girl Nuku Nuku TV | ADV | 12UP | 14 ep. | 6.23837 |
| Amon Saga | Manga Video | NA | | |
| Angel Links | Bandai | 13UP | 13 ep. | 5.91024 |
| Angel Sanctuary | Central Park Media | 16UP | | |
| Angel Tales | Bandai | 13UP | 14 ep. | |

Aniplex of America Announces March Comes in Like a Lion Season 2 Acquisition and Season 1 Release on Blu-Ray with English Dub

FOR IMMEDIATE RELEASE, September 30, 2017

Aniplex of America Announces March comes in like a lion Season 2 Acquisition and Season 1 Release on Blu-ray with English Dub

© Chica Umino, HAKUSENSHA/March comes in like a lion Animation Committee

The award-winning coming-of-age story finally gets its English dub and Blu-ray box set release!

SANTA MONICA, CA (September 30, 2017) – At their industry panel at Anime Weekend Atlanta (Atlanta, GA), Aniplex of America announced the release of March comes in like a lion season 1 on Blu-ray and the acquisition of season 2. The Volume 1 and 2 Blu-ray Box Sets will feature the highly anticipated English dub, with two of the episodes adapted and voice directed by seasoned veteran Wendee Lee (Blue Exorcist, Your lie in April).

The heartfelt series is based on an award-winning manga by Chica Umino, who is best known as the creator of Honey and Clover. Produced by studio SHAFT (Puella Magi Madoka Magica, KIZUMONOGATARI), March comes in like a lion is helmed by a well-respected creative team including Director Akiyuki Shimbou (Puella Magi Madoka Magica, Monogatari series) and Character Designer Nobuhiro Sugiyama (NISEKOI, BAKEMONOGATARI).

Preorders begin October 2nd for both Volume 1 and 2 of the Blu-ray Box Sets, with Volume 1 available on December 19th and Volume 2 on April 10th in 2018. Season 2 of the series is scheduled to begin streaming on October 14th on Crunchyroll.

The long-awaited English dub of March comes in like a lion will also be included in the Blu-ray Box Sets with an all-star English dub cast featuring Khoi Dao (Magi: The Kingdom of Magic, Sword Art Online the Movie -Ordinal Scale-) as main character Rei Kiriyama, Laura Post (The Asterisk War, KILL la KILL) as Akari Kawamoto, Kayli Mills (Occultic;Nine, Sword Art Online the Movie -Ordinal Scale-) as Hinata Kawamoto, and Xanthe Huynh (anohana – The Flower We Saw That Day - TV Series, Puella Magi Madoka Magica the Movie –Rebellion-) as Momo Kawamoto.

JUN17 World.Com PREVIEWS

PREVIEWS #345 | JUN17 | PREVIEWSworld.com | THE COMIC SHOP’S CATALOG | ORDERS DUE JUNE 18 | CUSTOMER ORDER FORM

- HALO: RISE OF ATRIOX #1 (DARK HORSE COMICS)
- MISTER MIRACLE #1 (DC ENTERTAINMENT)
- TEENAGE MUTANT NINJA TURTLES: DIMENSION X #1-5 (IDW ENTERTAINMENT)
- REDLANDS #1 (IMAGE COMICS)
- FIRST STRIKE #1 & 2 (IDW ENTERTAINMENT)
- DARK NIGHTS: METAL #1 (DC ENTERTAINMENT)
- MR. HIGGINS COMES HOME HC (DARK HORSE COMICS)
- INHUMANS: ONCE AND FUTURE KINGS #1 (MARVEL COMICS)
- MAGE: THE HERO DENIED #1 (IMAGE COMICS)

FEATURED ITEMS: COMIC BOOKS & GRAPHIC NOVELS

- Equilibrium: Deconstruction #1 (AMERICAN MYTHOLOGY PRODUCTIONS)
- Providence Act 3 HC (AVATAR PRESS INC)
- Mech Cadet Yu #1 (BOOM! STUDIOS)
- Adventure Time/Regular Show #1 (BOOM! STUDIOS)
- Sheena #0 (D. E./DYNAMITE ENTERTAINMENT)
- The Shadow #1 (D. E./DYNAMITE ENTERTAINMENT)
- Lark’s Killer #1 (DEVILS DUE/1FIRST COMICS)
- Ghost In Shell Readme 1995-2017 HC (KODANSHA COMICS)
- The Tick #1 (NEW ENGLAND COMICS)
- Dead of Winter #1 (ONI PRESS INC.)
- Captain Harlock Dimensional Voyage Volume 1 GN (SEVEN SEAS ENTERTAINMENT LLC)
- Cutie Honey A-Go-Go Volume 1 GN (SEVEN SEAS ENTERTAINMENT LLC)
- Doctor Who: The Lost Dimension Alpha #1 (TITAN COMICS)
- Quake Champions #1 (TITAN COMICS)
- Back Issue #100 (TWOMORROWS PUBLISHING)
- Robotech Visual Archive Macross Saga HC (UDON ENTERTAINMENT INC)
- War Mother #1 (VALIANT ENTERTAINMENT LLC)
- The Art of My Little Pony: The Movie (VIZ MEDIA LLC)

Reflections of (And On) Otaku and Fujoshi in Anime and Manga

University of Central Florida, STARS: Electronic Theses and Dissertations, 2004-2019

The Great Mirror of Fandom: Reflections of (and on) Otaku and Fujoshi in Anime and Manga. Clarissa Graffeo, University of Central Florida, 2014. Part of the Film and Media Studies Commons.

This Master’s Thesis (Open Access) is brought to you for free and open access by STARS. It has been accepted for inclusion in Electronic Theses and Dissertations, 2004-2019 by an authorized administrator of STARS.

STARS Citation: Graffeo, Clarissa, "The Great Mirror of Fandom: Reflections of (and on) Otaku and Fujoshi in Anime and Manga" (2014). Electronic Theses and Dissertations, 2004-2019. 4695. https://stars.library.ucf.edu/etd/4695

A thesis by Clarissa Graffeo (B.A. University of Central Florida, 2006) submitted in partial fulfillment of the requirements for the degree of Master of Arts in the Department of English in the College of Arts and Humanities at the University of Central Florida, Orlando, Florida, Spring Term 2014. © 2014 Clarissa Graffeo.

ABSTRACT: The focus of this thesis is to examine representations of otaku and fujoshi (i.e., dedicated fans of pop culture) in Japanese anime and manga from 1991 until the present. I analyze how these fictional images of fans participate in larger mass media and academic discourses about otaku and fujoshi, and how even self-produced reflections of fan identity are defined by the combination of larger normative discourses and market demands.

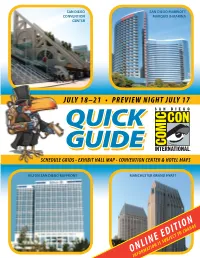

Quick Guide Is Online

COMIC-CON 2013 QUICK GUIDE, ONLINE EDITION: Schedule grids • Exhibit hall map • Convention center & hotel maps. San Diego Convention Center, San Diego Marriott Marquis & Marina, Hilton San Diego Bayfront, Manchester Grand Hyatt. July 18–21 • Preview Night July 17. Information is subject to change.

[Map: Hotels and shuttle stops]

Thank you to our generous shuttle sponsor for Comic-Con 2013.

Shuttle information ©2013 S.E.A.T. Planners Incorporated®. Subject to change and traffic conditions. Tel. 619-921-0173, www.seatplanners.com

MAP KEY (map #, location, route color):

- 1: Andaz San Diego (GREEN)
- 2: Bay Club Hotel and Marina (TEAL)
- 3: Best Western Bayside Inn (GREEN)
- 4: Best Western Island Palms Hotel and Marina (TEAL)
- 18: DoubleTree San Diego Mission Valley (PURPLE)
- 19: Embassy Suites San Diego Bay (PINK)
- 20: Four Points by Sheraton SD Downtown (GREEN)
- 21: Hampton Inn San Diego Downtown (PINK)
- 35: La Quinta Inn Mission Valley (PURPLE)
- 36: Manchester Grand Hyatt (PINK)
- 37: Omni San Diego Hotel (ORANGE)
- 38: One America Plaza | Amtrak (BLUE)
- 50: Sheraton Suites San Diego Symphony Hall (GREEN)
- 51: Tailgate–MTS Parking Lot (ORANGE)
- 52: The Sofia Hotel (BLUE)
- 53: The US Grant San Diego (BLUE)

FOR IMMEDIATE RELEASE Otakon to Feature Voice Actors Jād Saxton

Press Relations Head: Victor Albisharat, 484-264-6787

FOR IMMEDIATE RELEASE: Otakon to Feature Voice Actors Jād Saxton and Micah Solusod for the Wolf Children Dub Premiere

Baltimore, MD (July 16, 2013) – We are pleased to announce that the voice actors featured in the dub of Wolf Children, Jād Saxton and Micah Solusod, will be attending Otakon 20.

Jād Saxton has been acting and singing for Funimation Entertainment consistently for 6 years; singing is actually what landed her first role in anime, in Sasami: Magical Girls Club. After doing bits for Sasami and Hell Girl, she landed her first major role as Masako Hara in Ghost Hunt. She then went on to voice Eve Genoard in Baccano!, Perrine-H. Clostermann in seasons 1 and 2 of Strike Witches, and Jacqueline O. Lantern Dupré in Soul Eater. Her most recently announced releases include: Fairy Tail as Carla, Haganai as Sena Kashiwazaki, Michiko & Hatchin as Hatchin, Eureka Seven AO as Elena Peoples, High School DxD as Koneko Tōjō, Last Exile: Fam, The Silver Wing as Fam Fan Fan, Toriko as Yun, A Certain Scientific Railgun as Komoe, Steins;Gate as Faris Nyannyan, and Freezing as Arnett McMillan.

Micah made his debut in the world of anime as the timid Malek Werner in Blassreiter, and was cast as the cool Soul Evans in Soul Eater shortly after. Since then, he has had the honor of voicing a number of characters in other FUNimation titles, such as Toma Kamijo in A Certain Magical Index, Tsutomu Senkawa in Birdy the Mighty: Decode, Yukitaka Tsuitsui in Level E, Kazuki Makabe in Fafner: Heaven and Earth Movie, Kamui and Subaru in Tsubasa Tokyo Revelations, Liszt Kiriki in Okami-San and Her Seven Companions, and many others.