Development and Characterization of an Iot Network for Agricultural Imaging Applications

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

EML 4840 Robot Design Department of Mechanical and Materials Engineering Florida International University Spring 2018

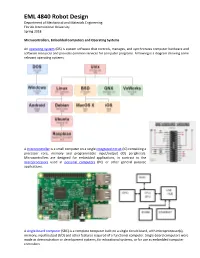

EML 4840 Robot Design Department of Mechanical and Materials Engineering Florida International University Spring 2018 Microcontrollers, Embedded Computers and Operating Systems An operating system (OS) is system software that controls, manages, and synchronizes computer hardware and software resources and provides common services for computer programs. Following is a diagram showing some relevant operating systems. A microcontroller is a small computer on a single integrated circuit (IC) containing a processor core, memory and programmable input/output (IO) peripherals. Microcontrollers are designed for embedded applications, in contrast to the microprocessors used in personal computers (PC) or other general purpose applications. A single-board computer (SBC) is a complete computer built on a single circuit board, with microprocessor(s), memory, input/output (I/O) and other features required of a functional computer. Single-board computers were made as demonstration or development systems, for educational systems, or for use as embedded computer controllers. 0.2 W In an Arduino Uno, if you draw too much current (40mA or more) from an I/O pin, it will damage the pin. There are no fuses on the I/O pins. Model GPIO per pin Power Clock Core RAM Price Arduino Uno 5 v 40 mA 9 - 12 v 45 mA* 500 mA 16 MHz 1 2 KB $25 Arduino Pro Mini 5 V 5 v 40 mA 5 - 12 v 20 mA* 500 mA 16 MHz 1 2 KB $10 Arduino Pro Mini 3.3 V 3.3 v 40 mA 3.35 - 12 v 20 mA* 500 mA 8 MHz 1 2 KB $10 ESP32 3.3 v 12 mA 2.3 - 3.6 v 250 mA 240 MHz 2 520 KB $10 Raspberry Pi 3 3.3 v 16 mA 5 v 400 mA* 2.5 A 1.2 GHz 4 1 GB $35** Raspberry Zero W 3.3 v 16 mA 5 v 150 mA* 1.2 A 1 GHz 1 512 MB $10** ODROID-XU4 1.8 v 10 mA 5 v 4 A* 6 A 15 GHz 8 2 GB $60** * idling. -

IEEE Iot Sketch01 – Blink

Internet of Things Weather Station IEEE Northern Virginia Section Hands-On Professional Development Series October 29, 2016 Montgomery College Unboxing & Sketch 01-Blink 2 10/29/2016 Course Materials All course materials are at: http://w4krl.com/projects/ieee-iot/2016october/ Download the construction slides so that you can follow along: – IEEE IoT Sketch01 – Blink – IEEE IoT Sketch02 – Hello World – IEEE IoT Sketch03 – Standalone Weather Station – IEEE IoT Sketch04 – IoT Weather Station – IEEE IoT Sketch05 – Smartphone Weather Station (if time is available) We will download Arduino sketches and libraries when needed. There are links to software, schematics, data sheets, and tutorials. 3 10/29/2016 Project Road Map 4 10/29/2016 Parts List Jumpers Power Supply Dual Voltage 4-Wire Regulator Jumper Micro USB Liquid Crystal Cable Display NodeMCU Level Shifter BME280 BH1750 LEDs (2) Resistors (2) Breadboard Switch Keep the small parts in the bag for now. 5 10/29/2016 Microcontroller A microcontroller is a System on Chip computer –Processor, memory, analog & digital I/O, & radio frequency circuits Embedded in a device with a dedicated purpose Generally low power and often battery powered Program is stored in firmware & is rarely changed Has multiple digital General Purpose Input / Output, analog-to-digital conversion, pulse width modulation, timers, special purpose I/O 6 10/29/2016 ESP8266 Timeline January 2014 - Introduced by Expressif Systems of Shanghai as a Wi-Fi modem chip. Early adopters used Hayes “AT” commands generated by an Arduino or Raspberry Pi. Not breadboard friendly. No FCC certification. October 2014 - Expressif released the Software Development Kit (SDK) making its use as a slave modem obsolete. -

How to Choose a Microcontroller Created by Mike Stone

How to Choose a Microcontroller Created by mike stone Last updated on 2021-03-16 09:01:47 PM EDT Guide Contents Guide Contents 2 Overview 4 Which Microcontroller is the Best? 4 How to Make a Bad Choice 5 How to Make a Good Choice 6 Sketching out the features you might want 6 A list of design considerations 6 Kinds of Microcontrollers 8 8-bit Microcontrollers 8 32-bit Microcontrollers 9 More About Peripherals with 32-Bit Chips 9 System On Chip Devices (SOC) 9 The microcontrollers in Adafruit products 11 8-bit Microcontrollers: 11 The ATtiny85 11 The ATmega328P 11 The ATmega32u4 12 32-bit Microcontrollers: 13 The SAMD21G 13 The SAMD21E 14 The SAMD51 15 System-On-a-Chip (SOCs) 16 The STM32F205 16 The nRF52832 16 The ESP8266 17 The ESP32 18 Simple Boards 20 Simple is Good 20 Where do I Start? 21 I want to learn microcontrollers, but don't want to buy a lot of extra stuff 21 Resources 22 Arduino 328 Compatibles 24 I want a microcontroller that is Arduino-Compatible 24 I want to build an Arduino-compatible microcontroller into a project 25 I want to build a battery-powered device 26 Next Step - 32u4 Boards 29 The Feather 32u4 Basic: 29 The Feather 32u4 Adalogger: 30 The ItsyBitsy 32u4: 30 Intermediate Boards 33 Branching Out 33 32-bit Boards 34 The Feather M0 Basic: 34 The Feather M0 Adalogger: 34 The ItsyBitsy M0: 35 The Metro M0: 36 CircuitPython Boards 37 The Circuit Playground Express: 37 The Metro M0 Express: 38 The Feather M0 Express: 39 © Adafruit Industries https://learn.adafruit.com/how-to-choose-a-microcontroller Page 2 of 54 The Trinket -

Design Specifications

July 7, 2019 Dr. Craig Scratchley Dr. Andrew Rawicz School of Engineering Science Simon Fraser University Burnaby, British Columbia V5A 1S6 RE: ENSC 405W/440 Design Specifications for Arkriveia Beacon Dear Dr. Scratchley and Dr. Rawicz, The following document contains the Design Specification for Akriveia Beacon - The Indoor Location Rescue System created by TRIWAVE SYSTEMS. The Akriveia Beacon focuses on locating personnel trapped in buildings during small scale disasters such as fires and low magnitude earthquakes. This is achieved by incorporating combinations of advanced Ultra-wide-band radio modules and microcon- trollers to create a dependable indoor positioning system using trilateration. We believe our system allows search and rescue operator to safely and reliably locate victims during an emergency or disaster. The purpose of this document is to provide low and high-level design specifications regarding the overall system architecture, functionality and implementation of the Akriveia Beacon system. The system will be presented according to three different development stages: proof-of-concept, prototype, and final product. This document consists of system overview, system design, hardware design, electrical design, software design and as well as a detailed test plans for the Alpha and Beta products. TRIWAVE SYSTEMS is composed of five dedicated and talented senior engineering students. The members include Keith Leung, Jeffrey Yeung, Scott Checko, Ryne Watterson, and Jerry Liu. Each member came from various engineering concentrations and with a diverse set of skills and experiences, we believe that our product will truly provide a layer of safety and reliability to emergency search and rescue operations. Thank you for taking the time to review our design specifications document. -

Nodemcu-32S Catalogue

INTRODUCTION TO NodeMCU ESP32 DevKIT v1.1 JULY 2017 www.einstronic.com Internet of Things NodeMCU ESP32 Wireless & Bluetooth Development Board NodeMCU is an open source IoT platform. ESP32 is a series of low cost, low power system-on-chip (SoC) microcontrollers with integrated Wi-Fi & dual-mode Bluetooth. The ESP32 series employs a Tensilica Xtensa LX6 microprocessor in both dual-core and single-core variations, with a clock rate of up to 240 MHz. ESP32 is highly integrated with built-in antenna switches, RF balun, power amplifier, low-noise receive amplifier, filters, and power management modules. Features Manufactured by TSMC using their 40 nm process. Able to achieve ultra-low power consumption. Built-in ESP-WROOM-32 chip. Breadboard Friendly module. Light Weight and small size. On-chip Hall and temperature sensor Uses wireless protocol 802.11b/g/n. Built-in wireless connectivity capabilities. Wireless Connectivity Breadboard Friendly USB Compatible Lightweight Built-in PCB antenna on the ESP32-WROOM-32 Capable of PWM, I2C, SPI, UART, 1-wire, 1 analog pin. Uses CP2102 USB Serial Communication interface module. Classic + BLE Low Power Consumption Programmable with ESP-IDF Toolchain, LuaNode SDK supports Eclipse project (C language). Safety Precaution All GPIO runs at 3.3V !! Source https://www.ioxhop.com/product/532/nodemcu-32s-esp32-wifibluetooth-development-board 1 NodeMCU ESP32S Front View Front View Specifications of ESP-WROOM-32 WiFi+BLE BT Module Wireless Standard FCC/CE/IC/TELEC/KCC/SRRC/NCC Wireless Protocol 802.11 b/g/n/d/e/l/k/r -

Adafruit Airlift Shield - ESP32 Wifi Co-Processor Created by Brent Rubell

Adafruit AirLift Shield - ESP32 WiFi Co-Processor Created by Brent Rubell Last updated on 2021-03-29 01:04:51 PM EDT Guide Contents Guide Contents 2 Overview 4 Pinouts 8 Power Pins 8 SPI Interface Pins 9 ESP32 Control Pins 9 SD Card Interface 10 LEDs 10 Prototyping Area 11 Assembly 12 Installing Standard Headers 12 Stack Alert! 16 CircuitPython WiFi 21 CircuitPython Microcontroller Pinout 21 CircuitPython Installation of ESP32SPI Library 21 CircuitPython Usage 22 Internet Connect! 24 What's a secrets file? 24 Connect to WiFi 24 Requests 28 HTTP GET with Requests 30 HTTP POST with Requests 31 Advanced Requests Usage 32 WiFi Manager 33 CircuitPython BLE 36 CircuitPython BLE UART Example 36 Adafruit AirLift ESP32 Shield Wiring 36 Update the AirLift Firmware 36 Install CircuitPython Libraries 36 Install the Adafruit Bluefruit LE Connect App 37 Copy and Adjust the Example Program 37 Talk to the AirLift via the Bluefruit LE Connect App 38 Arduino WiFi 41 Library Install 41 First Test 41 Arduino Microcontroller Pin Definition 42 WiFi Connection Test 43 Secure Connection Example 44 JSON Parsing Example 46 Adapting Other Examples 47 Upgrade External ESP32 Airlift Firmware 48 External AirLift FeatherWing, Shield, or ItsyWing 48 Upload Serial Passthrough code for Feather or ItsyBitsy 49 External AirLift Breakout 50 Code Usage 52 Install esptool.py 53 © Adafruit Industries https://learn.adafruit.com/adafruit-airlift-shield-esp32-wifi-co-processor Page 2 of 56 Burning nina-fw with esptool 53 Verifying the Upgraded Firmware Version 54 Arduino 54 CircuitPython 54 Downloads 55 Files 55 Schematic 55 Fab Print 55 © Adafruit Industries https://learn.adafruit.com/adafruit-airlift-shield-esp32-wifi-co-processor Page 3 of 56 Overview Give your Arduino project a lift with the Adafruit AirLift Shield (https://adafru.it/F6v) - a shield that lets you use the powerful ESP32 as a WiFi or BLE co-processor. -

Nodemcu-32S Datasheet Version V1 Copyright ©2019

Nodemcu-32s WIFI MODULE V1 Nodemcu-32s Datasheet Version V1 Copyright ©2019 Copyright © 2019 Shenzhen Ai-Thinker Technology Co., Ltd All Rights Reserved Nodemcu-32s WIFI MODULE V1 Disclaimer and Copyright Notice Information in this document, including URL references, is subject to change without notice. This document is provided "as is" without warranty of any kind, including any warranties of merchantability, non-infringement, fitness for any particular purpose, or any other proposal, specification or sample. All liability, including liability for infringement of any proprietary rights, relating to use of information in this document is disclaimed. No licenses express or implied, by estoppel or otherwise, to any intellectual property rights are granted herein. The test data obtained in this paper are all tested by Ai-Thinker lab, and the actual results may be slightly different. The Wi-Fi Alliance Member logo is a trademark of the Wi-Fi Alliance. The Bluetooth logo is a registered trademark of Bluetooth SIG. All trade names, trademarks and registered trademarks mentioned in this document are property of their respective owners, and are hereby acknowledged. The final interpretation is owned by Shenzhen Ai-Thinker Technology Co., Ltd. NOTE The contents of this manual are subject to change due to product version upgrades or other reasons. Shenzhen Anxinke Technology Co., Ltd. reserves the right to modify the contents of this manual without any notice or prompt. This manual is for guidance only. Shenzhen Anxinke Technology Co., Ltd. makes Copyright © 2019 Shenzhen Ai-Thinker Technology Co., Ltd All Rights Reserved 第 1 页 共 12 页 Nodemcu-32s WIFI MODULE V1 every effort to provide accurate information in this manual. -

ESP32 Series Datasheet

ESP32 Series Datasheet Including: ESP32-D0WD-V3 ESP32-D0WDQ6-V3 ESP32-D0WD – Not Recommended for New Designs (NRND) ESP32-D0WDQ6 – Not Recommended for New Designs (NRND) ESP32-S0WD ESP32-U4WDH Version 3.7 Espressif Systems Copyright © 2021 www.espressif.com About This Guide This document provides the specifications of ESP32 family of chips. Document Updates Please always refer to the latest version on https://www.espressif.com/en/support/download/documents. Revision History For any changes to this document over time, please refer to the last page. Documentation Change Notification Espressif provides email notifications to keep customers updated on changes to technical documentation. Please subscribe at www.espressif.com/en/subscribe. Note that you need to update your subscription to receive notifications of new products you are not currently subscribed to. Certification Download certificates for Espressif products from www.espressif.com/en/certificates. Contents Contents 1 Overview 8 1.1 Featured Solutions 8 1.1.1 Ultra-Low-Power Solution 8 1.1.2 Complete Integration Solution 8 1.2 Wi-Fi Key Features 8 1.3 BT Key Features 9 1.4 MCU and Advanced Features 9 1.4.1 CPU and Memory 9 1.4.2 Clocks and Timers 10 1.4.3 Advanced Peripheral Interfaces 10 1.4.4 Security 10 1.5 Applications (A Non-exhaustive List) 11 1.6 Block Diagram 12 2 Pin Definitions 13 2.1 Pin Layout 13 2.2 Pin Description 15 2.3 Power Scheme 18 2.4 Strapping Pins 19 3 Functional Description 22 3.1 CPU and Memory 22 3.1.1 CPU 22 3.1.2 Internal Memory 22 3.1.3 External Flash and -

Esp32 Node Mcu

ESP32 NODE MCU www.microelectrónicash.com Autor: Andrés Raúl Bruno Saravia 2019 Microelectrónica Componentes srl ESP32 NODE MCU ESP32 - WiFi & Bluetooth SoC Module Creado por Espressif Systems , ESP32 es un sistema de bajo consumo y bajo costo en un chips SoC (System On Chip) con Wi-Fi y modo dual con Bluetooth! En el fondo, hay un microprocesador Tensilica Xtensa LX6 de doble núcleo o de un solo núcleo con un frecuencia de reloj de hasta 240MHz . ESP32 está altamente integrado con switch de antena , balun para RF, amplificador de potencia, amplificador de recepción con bajo nivel de ruido, filtros y módulos de administración de energía, totalmente integrados dentro del mismo chip!!. Diseñado para dispositivos móviles; tanto en las aplicaciones de electrónica, y las de IoT (Internet de las cosas), ESP32 logran un consumo de energía ultra bajo a través de funciones de ahorro de energía Incluye la sintonización de reloj con una resolución fina, modos de potencia múltiple y escalado de potencia dinámica. Características principales: Procesador principal: Tensilica Xtensa LX6 de 32 bits. Wi-Fi: 802.11 b / g / n / e / i (802.11n @ 2.4 GHz hasta 150 Mbit / s). Bluetooth: v4.2 BR / EDR y Bluetooth Low Energy (BLE). Frecuencia de Clock: Programable, hasta 240MHz. Rendimiento: hasta 600DMIPS. ROM: 448KB, para arranque y funciones básicas. SRAM: 520KiB, para datos e instrucciones. 2 Microelectrónica Componentes srl PIN-OUT Instalando el complemento ESP32 en Arduino IDE Importante: Antes de iniciar el procedimiento de instalación, asegúrese de tener la última versión del IDE de Arduino instalada en su computadora. Si no lo hace, desinstálelo e instálelo de nuevo. -

Analysis of the Performance of the New Generation of 32-Bit Microcontrollers for Iot and Big Data Application

7th International Conference on Information Society and Technology ICIST 2017 Analysis of the Performance of the New Generation of 32-bit Microcontrollers for IoT and Big Data Application Jovan Ivković*, Jelena Lužija Ivković** * ITS Visoka škola strukovnih studija za IT/ Informacione tehnologije, Belgrade, Serbia ** MyITLab, Belgrade, Serbia [email protected], [email protected] Abstract — This paper explores the relationship between progress in the field of transfer functions with SoC / performance of different generations of MCU solutions for FPGA system on these solutions. Previous works [2] [3] classical computing processing, as well as FPU/DSP [4] show, that computer systems based on low power processing of input information. Finally, it questions how multi-core SoC CPUs with HMP can take role of pre- fast software-controlled I/O ports (GPIO) are. By using the processing a very fast local sensor network data prior to battery of standardized computer tests, and custom GPIO, sending them to Big Data system, with new advance in the GPU / DSP tests, the report gives clear and measurable area of MCU’s performance. The central question in this results. The results of measuring carried out in the study researc was whether this new generation of MCUs has clearly show that it is possible to unload the network sufficient processing power to take part of the pre- resources necessary for transmitting large quantities of processing (IoT gateway) role. information if they represent a part of a complex pre- treatment data for Big Data processing systems on the MCU II. CYBER PHYSICAL SYSTEMS (CPS) AND platforms themselves with IoT sensor devices. -

Firebeetle ESP32 Iot Microcontroller (Supports Wi-Fi & Bluetooth)

FireBeetle ESP32 IoT Microcontroller (Supports Wi-Fi & Bluetooth) SKU:DFR0478 INTRODUCTION DFRobot FireBeetle series is the low-power consumption micro-controller intentionally designed for Internet of Things (IoT) projects. FireBeetle Board - ESP32 integrates a Dual-Core ESP-WROOM-32 module, which supports MCU and Wi-Fi &Bluetooth dual-mode communication. The electric current is just 10μA in the deep-sleep mode. The main controller supports two power supply methods: USB and 3.7V external lithium battery. And both USB and external DC can charge the Lipo battery directly. Name WiDo Beetle ESP32 FireBeetle ESP8266 FireBeetle ESP32 SKU DFR0321 DFR0575 DFR0489 DFR0478 Microcontroller CC3000 ESP32 ESP8266 ESP32 Power supply USB or DC2.1 USB USB or 3.7V Lipo USB or 3.7V Lipo interface USB Powered 7 - 12 3.5 - 6.5 3.3 - 5.0 3.3 - 5.0 or External (V) Operating 5V 3.3V 3.3V 3.3V Voltage (V) CPU Frequency 16 240 160 240 (MHz) Flash(M) - 16 16 16 SRAM(KB) - 520 50 520 Analog pins 6 4 1 5 Digital pins 14 4 10 10 Wi-Fi protocol 802.11b/g 802.11b/g/n 802.11b/g/n 802.11b/g/n Frequency 2.4 GHz 2.4 - 2.5 GHz 2.4 - 2.5 GHz 2.4 - 2.5 GHz range UART 1 1 1 1 I2C 1 1 1 1 I2S 1 1 1 1 SPI 1 1 1 1 Compatible Arduino IDE 1.6+ Arduino IDE 1.6+ Arduino IDE 1.6+ Arduino IDE 1.6+ IDE Download Micro USB Micro USB Micro USB Micro USB Mode Arduino UNO √ × × × Compatible Lipo Charger × √ √ √ Support Dimension(mm) 75*54 35*34 58*29 58*29 Weight (g) 30 g 12 g 24 g 24 g Key Features On board 2.4G PCB Integrate a Dual-Core ESP- Arduino、RTOS、 Mobile BLE APP Connection Support. -

ZM1 Datasheet Table of Contents

ZM1 Datasheet Table of Contents Introduction 3 Related documents and links: 3 Overview 3 Key Features 4 PinMap 5 Zerynth Pin Mapping 7 Bootstrap pins 7 Peripherals Overview 8 Functional Description 9 Peripherals 10 GPIO 10 Analog to Digital Converter (ADC) 10 Notes: 10 Universal Asynchronous Receiver Transmitter (UART) 11 I2C Interface 11 Pulse Width Modulation (PWM) 11 Serial Peripheral Interface (SPI) 11 Timers and Watchdogs 11 Security 12 Electrical Characteristics 12 Absolute Maximum rating: 12 Recommended Operating Conditions: 12 DC Characteristics (3.3 V, 25 °C) 13 Wifi Radio 14 Physical Dimensions 16 PCB Footprint 17 Qualifications and Approvals 18 Zerynth SDK 18 Resources 18 Introduction This datasheet provides information on how to use the ZM1 module in your application. The ZM1 is part of the Zerynth hardware family that provides products with various features, different memory sizes, packages and peripherals. Related documents and links: Zerynth Documentation (docs.zerynth.com). Overview The Zerynth Module ZM1 is a dedicated customized version of the ESP32-WROOM-32SE Module. The Module is certified RoHS, CE/RED, e FCC (EU e US). The ZM1 Module mounts onboard the ESP32-D0WD microcontroller, and is designed to be scalable and adaptive. The CPU clock frequency is adjustable from 80 MHz to 240 MHz, With an embedded 16 MB of SPI Flash memory and integrates a rich set of peripherals, ranging from Hall sensors, Capacitive touch sensor, SD interface, Ethernet, High speed SPI, UART, I2S e I2C. Inside the ZM1, the crypto element ATECC608A from Microchip has been integrated to handle secure connections in an easy manner, exchanging certificates and keys, encrypting messages over TLS protocol and using secure authentication procedures.