Computer Vision and Pattern Recognition 2005, Vol 2, Pp:60-65, 2005

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


CCD Inspector, FWHM Monitor and CCDIS Plug-In

CCD Inspector, FWHM Monitor and CCDIS Plug-in Copyright © 2005-2011 by Paul Kanevsky All Rights Reserved. Published by CCDWare under an exclusive license. http://pk.darkhorizons.org http://www.CCDWare.com To purchase CCD Inspector: http://www.ccdware.com/buy Check for product updates: http://www.ccdware.com/downloads/updates.cfm Help and Support Forum: http://ccdware.infopop.cc/eve/forums CCD Inspector Dramatically Improve quality of your images: increase sharpness and resolution Automatically sort many images at once by evaluating star sharpness and tracking quality Pick the best sub-frames for stacking, or for deciding which to keep Compare images by many objective criteria, plot the results for a better visual impact Measure and plot focus variations due to tilt or field curvature Determine how flat the image plane is. Compare performance of field flatteners and focal reducers Collimate your telescope in-focus with your CCD camera or DSLR! Evaluate optical system vignetting characteristics Estimate how well the current optical system will perform on a larger sensor before you buy that expensive new CCD or DSLR! Works with CCDSoft, MaxIm DL, and all other camera control software in real-time mode Focus and collimate your telescope using your favorite DSLR and DSLR control software! Create running charts to monitor seeing conditions, focus shift, tracking problems with your CCD or DSLR in real-time If you use CCDStack software, CCDInspector now ships with an amazing new plug-in, CCDIS. CCDIS completely automates CCDStack registration process, improves registration accuracy, and does so at blazing speeds Astrophotographers, CCD and digital camera users often take many shorter images to later process and stack to simulate one long exposure. -

Digisnap 2000 Manual, Which Is on the CD Rom Supplied with the Equipment

Time-Lapse Package User’s Guide Dual Battery Option Shown. Zoom in for details... Harbortronics Inc 5720 144th St NW, Suite 200 Gig Harbor, WA. USA 98332 253-858-7769 (Phone) 253-858-9517 (Fax) http://www.harbortronics.com/ Sales & Service: [email protected] Technical & Customizing: [email protected] Time-Lapse Package Revision E Contents: Overview; Quick Start; Items included; Configuring the DigiSnap; Connecting to a terminal; Configuring for Advanced Time-Lapse; System Components -

Pentaxslr.Com Camera: K20D Lens: SMCP DA* 16-50Mm F/2.8 ED AL (IF) SDM Body, Lens and Flash Experience the PENTAX DSLR System

pentaxslr.com Camera: K20D Lens: SMCP DA* 16-50mm F/2.8 ED AL (IF) SDM Body, Lens and Flash Experience the PENTAX DSLR System PENTAX digital SLR camera systems combine the latest in digital technology with legendary PENTAX optics, craftsmanship and functionality that are essential to your photography experience. Go ahead and grip the perfectly balanced, compact, lightweight bodies reinforced with a high-rigidity steel chassis, and experience the unmistakably clear, high-precision viewfinder. Connect with the vast heritage of PENTAX Super-multi-coated optics that provide seemingly limitless opportunities to create crystal-clear imagery with enhanced color and definition. Unleash the power of advanced, wireless P-TTL auto flash and high-speed synchronization of powerful PENTAX flash attachments. PENTAX digital SLR camera systems blend perfectly to offer ease of use, performance and portability to satisfy everyone from the novice photographer to the most discerning enthusiast. Shake Reduction K-mount Heritage Center plate The PENTAX-developed “Shake Reduction” technology PENTAX has manufactured over 24 million lenses Image sensor solves one of the oldest photographic problems: blur in the last six decades. For every assignment, we caused by handheld camera shake. The K Series Digital believe there needs to be a lens that matches Back plate SLRs produce sharp photos in challenging situations each photographer’s style – amateur and without having to use a tripod. Hand-held photography professional alike. PENTAX DSLR bodies offer Position support is now possible with long telephoto lenses or when long backward compatibility with each and every ball bearing exposure times are required due to poor lighting. -

Technology Forecasting of Digital Single-Lens Reflex Camera Market: The Impact of Segmentation in TFDEA

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by PDXScholar Portland State University PDXScholar Engineering and Technology Management Faculty Publications and Presentations Engineering and Technology Management 2013 Technology Forecasting of Digital Single-Lens Reflex Camera Market: The Impact of Segmentation in TFDEA Byung Sung Yoon Portland State University Apisit Charoensupyan Portland State University Nan Hu Portland State University Rachanida Koosawangsri Portland State University Mimie Abdulai Portland State University See next page for additional authors Let us know how access to this document benefits you. Follow this and additional works at: http://pdxscholar.library.pdx.edu/etm_fac Part of the Engineering Commons Citation Details Yoon, Byung Sung; Charoensupyan, Apisit; Hu, Nan; Koosawangsri, Rachanida; Abdulai, Mimie; and Wang, Xiaowen, Technology Forecasting of Digital Single-Lens Reflex Camera Market: The Impact of Segmentation in TFDEA, Portland International Conference on Management of Engineering and Technology (PICMET), Portland, OR, 2013. This Article is brought to you for free and open access. It has been accepted for inclusion in Engineering and Technology Management Faculty Publications and Presentations by an authorized administrator of PDXScholar. For more information, please contact [email protected]. Authors Byung Sung Yoon, Apisit Charoensupyan, Nan Hu, Rachanida Koosawangsri, Mimie Abdulai, and Xiaowen Wang This article is available at PDXScholar: http://pdxscholar.library.pdx.edu/etm_fac/36 2013 Proceedings of PICMET '13: Technology Management for Emerging Technologies. Technology Forecasting of Digital Single-Lens Reflex Camera Market: The Impact of Segmentation in TFDEA Byung Sung Yoon, Apisit Charoensupyan, Nan Hu, Rachanida Koosawangsri, Mimie Abdulai, Xiaowen Wang Portland State University, Dept. -

Digital SLR Camera Timeline This Timeline Will Introduce You to All of the Different Digital SLR Cameras Released Since 2005

Digital SLR Camera Timeline This timeline will introduce you to all of the different digital SLR cameras released since 2005. For each camera, I've indicated approximately when it was released (by month) and how many megapixels the camera has. I've also noted which "extra features" the camera includes. As of 2009, there are 5 extra features that can be included in any digital SLR: • Dust Control - dust control sensors repel dust that can stick to the sensor when you change lenses • Built-in Image Stabilization - the camera shifts the sensor back and forth to compensate for camera shake • Live View - you can preview the image you're about to take on the camera's LCD screen (just like a compact) • Dynamic Range - control over dynamic range helps preserve image detail in scenes with a lot of contrast • Video - the camera captures video clips in addition to still images You can use this timeline to clearly see how the digital SLR has evolved, and which major new features have been introduced. • Cameras that represent important milestones in the history of digital SLR development are colored yellow. • A summary presents camera highlights for each year, and identifies important trends and technological advancements. • Click any camera name link to read an in-depth guide. You can also use this timeline to see what older models preceded the ones currently on the shelves. If you are just making the transition from point-and-shoot compact to digital SLR and don't want to spend over $500 on a camera, one great option is to buy an older used camera. -

Agfaphoto DC-833M, Alcatel 5035D, Apple Ipad Pro, Apple Iphone 6

AgfaPhoto DC-833m, Alcatel 5035D, Apple iPad Pro, Apple iPhone 6 plus, Apple iPhone 6s, Apple iPhone 7 plus, Apple iPhone 7, Apple iPhone 8 plus, Apple iPhone 8, Apple iPhone SE, Apple iPhone X, Apple QuickTake 100, Apple QuickTake 150, Apple QuickTake 200, ARRIRAW format, AVT F-080C, AVT F-145C, AVT F-201C, AVT F-510C, AVT F-810C, Baumer TXG14, BlackMagic Cinema Camera, BlackMagic Micro Cinema Camera, BlackMagic Pocket Cinema Camera, BlackMagic Production Camera 4k, BlackMagic URSA Mini 4.6k, BlackMagic URSA Mini 4k, BlackMagic URSA Mini Pro 4.6k, BlackMagic URSA, Canon EOS 1000D / Rebel XS / Kiss Digital F, Canon EOS 100D / Rebel SL1 / Kiss X7, Canon EOS 10D, Canon EOS 1100D / Rebel T3 / Kiss Digital X50, Canon EOS 1200D / Rebel T5 / Kiss X70, Canon EOS 1300D / Rebel T6 / Kiss X80, Canon EOS 200D / Rebel SL2 / Kiss X9, Canon EOS 20D, Canon EOS 20Da, Canon EOS 250D / 200D II / Rebel SL3 / Kiss X10, Canon EOS 3000D / Rebel T100 / 4000D, Canon EOS 300D / Rebel / Kiss Digital, Canon EOS 30D, Canon EOS 350D / Rebel XT / Kiss Digital N, Canon EOS 400D / Rebel XTi / Kiss Digital X, Canon EOS 40D, Canon EOS 450D / Rebel XSi / Kiss Digital X2, Canon EOS 500D / Rebel T1i / Kiss Digital X3, Canon EOS 50D, Canon EOS 550D / Rebel T2i / Kiss Digital X4, Canon EOS 5D Mark II, Canon EOS 5D Mark III, Canon EOS 5D Mark IV, Canon EOS 5D, Canon EOS 5DS R, Canon EOS 5DS, Canon EOS 600D / Rebel T3i / Kiss Digital X5, Canon EOS 60D, Canon EOS 60Da, Canon EOS 650D / Rebel T4i / Kiss Digital X6i, Canon EOS 6D Mark II, Canon EOS 6D, Canon EOS 700D / Rebel T5i -

Cameras Supporting DNG the Following Cameras Are Automatically Supported by Ufraw Since They Write Their Raw Files in the DNG Format

Cameras supporting DNG The following cameras are automatically supported by UFRaw since they write their raw files in the DNG format • Casio Exilim PRO EX-F1 • Casio Exilim EX-FH20 • Hasselblad H2D • Leica Digital Modul R (DMR) for R8/R9 • Leica M8 • Leica M9 • Pentax K10D • Pentax K20D • Pentax K200D • Pentax K-m • Ricoh Digital GR • Ricoh Caplio GX100 • Ricoh Caplio GX200 • Samsung GX-10 • Samsung GX-20 • Samsung Pro815 • Sea&Sea DX-1G • Seitz D3 digital scan back Other Supported Cameras (RAW) • Adobe Digital Negative (DNG) • AgfaPhoto DC-833m • Alcatel 5035D • Apple QuickTake 100 • Apple QuickTake 150 • Apple QuickTake 200 • ARRIRAW format • AVT F-080C • AVT F-145C • AVT F-201C • AVT F-510C • AVT F-810C • Baumer TXG14 • Blackmagic URSA • Canon PowerShot 600 • Canon PowerShot A5 • Canon PowerShot A5 Zoom • Canon PowerShot A50 • Canon PowerShot A460 (CHDK hack) • Canon PowerShot A470 (CHDK hack) • Canon PowerShot A530 (CHDK hack) • Canon PowerShot A570 (CHDK hack) • Canon PowerShot A590 (CHDK hack) • Canon PowerShot A610 (CHDK hack) • Canon PowerShot A620 (CHDK hack) • Canon PowerShot A630 (CHDK hack) • Canon PowerShot A640 (CHDK hack) • Canon PowerShot A650 (CHDK hack) • Canon PowerShot A710 IS (CHDK hack) • Canon PowerShot A720 IS (CHDK hack) • Canon PowerShot A3300 IS (CHDK hack) • Canon PowerShot Pro70 • Canon PowerShot Pro90 IS • Canon PowerShot Pro1 • Canon PowerShot G1 • Canon PowerShot G1 X • Canon PowerShot G1 X Mark II • Canon PowerShot G2 • Canon PowerShot G3 • Canon PowerShot G5 • Canon PowerShot G6 • Canon PowerShot G7 (CHDK -

121 Digital Frames

PHOTOGRAPHY 121 800-947-7785 | 212-444-6635 Digital Frames Digital Photo Frames More than just a digital frame, these are Digital Lifestyle Devices PanImage 7- and 8-inch Digital Photo Frames View pictures, listen to music and watch home videos on these true The PanImage 7” and 8” frames feature 800 x 600 resolution, color LCDs. Insert an SD/SDHC card or USB drive into the frame and hold up to 6400 images on 1GB of internal memory and are pictures automatically start in a slideshow mode. Easily transfer files WiFi/Bluetooth compatible. Built-in stereo speakers allow for a from your computer to the frames’ built-in memory with the included full multimedia experience. They allow you to transfer images USB 2.0 cable. Display on a table top with the included frame stand directly from a memory card via 5-in-1 card reader or from PC or mount on your wall — great for digital signage. They include a with included USB cable. Customize the look of your frame with remote control for easy operation the interchangeable white and charcoal mats. They also feature 8-inch: 512MB Memory, 800 x 600 resolution (ALDPF8) ............................................................52.99 a real time clock, calendar, alarm, audio out port, programmable 8-inch: Same as above, without speakers and no remote (ALDPF8AS) .........................................40.96 On/Off, and image rotate/resize. 
12-inch: 512MB Memory, 800 x 600 resolution (ALDPF12) .........................................................95.00 7-inch Frame (PADPF7) ..........................................................................................................59.95 15-inch: 256MB Memory, 1024 x 768 resolution (ALDPF15) .....................................................159.00 8-inch Frame (PADPF8) ..........................................................................................................64.95 PanImage 10.4” Digital Picture Frame DP356 Stores up to 5000 images on 1GB of internal memory and is WiFi/ 3.5” Digital Photo Album with Alarm Clock Bluetooth compatible. -

Digital Photo Buyer's Guide 2010

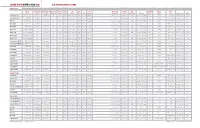

DIGITAL PHOTO BUYER’S GUIDE 2010 SLR SPECIFICATIONS CHART UNDER $1,000

| Camera | Image Sensor | Focal-Length Factor | Max Resolution (Pixels) | Pixel Size (Microns) | Sensor Cleaning | A/D Conv. | LCD Monitor | Live View | Video | AF System | ISO Settings (Expanded) | Shutter Speeds | Max Frame Rate | Metering | Built-In Flash | Storage Media | Power Source | Dimensions | Weight |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Canon EOS Rebel T1i | 15.1 MP CMOS | 1.6x (APS-C) | 4752x3168 | 4.7 | Yes | 14-bit | 3.0-in. 920K-dot | Yes | HD, SD | 9-point | 100-3200 (12,800) | 30-1/4000 | 3.4 fps | 35-zone, CW, spot | Yes | SD/SDHC | LP-E5 Li-Ion | 5.1x3.8x2.4 in. | 16.9 oz. |
| Canon EOS Rebel XSi | 12.2 MP CMOS | 1.6x (APS-C) | 4272x2848 | 5.2 | Yes | 14-bit | 3.0-in. 230K-dot | Yes | None | 9-point | 100-1600 | 30-1/4000 | 3.5 fps | 35-zone, CW, spot | Yes | SD/SDHC | LP-E5 Li-Ion | 5.1x3.8x2.4 in. | 16.8 oz. |
| Nikon D90 | 12.3 MP CMOS | 1.5x (APS-C) | 4288x2848 | 5.5 | Yes | 12-bit | 3.0-in. 920K-dot | Yes | HD, SD | 11-area | 200-3200 (100-6400) | 30-1/4000 | 4.5 fps | 420-pixel, CW, spot | Yes | SD/SDHC | EN-EL3e Li-Ion | 5.2x4.1x3.0 in. | 22 oz. |
| Nikon D3000 | 10.2 MP CCD | 1.5x (APS-C) | 3872x2592 | 6.1 | Yes | 12-bit | 3.0-in. 230K-dot | No | None | 11-area | 100-1600 (3200) | 30-1/4000 | 3 fps | 420-pixel, CW, spot | Yes | SD/SDHC | EN-EL9a Li-Ion | 5.0x3.8x2.5 in. | |

What Digital SLR Features Do You Really Need?

Spending Your Own Money: What Digital SLR Features Do You Really Need? By Joe Kashi Trying to determine which digital SLR cameras have the best image quality and the best value can feel almost impossible. The similarities seem to outweigh the differences and purchase price may not be your best guide. Making a sensible purchase decision may seem overwhelming. What features are actually useful in making good images? I’ll mostly discuss the camera bodies themselves because, in addition to the camera manufacturer’s own lenses, there are several excellent third party lens manufacturers that make lenses for many different camera brands and lens mounts. These vendors include Tamron, Sigma, and Tokina. Often, their consumer and semi-pro lenses are as good as or better than the camera maker’s own lens lines. What Features Do You Need? In most cases, there’s little difference between upper entry level and semi-pro dSLR camera bodies in terms of their imaging quality and electronic features. Instead, the less expensive entry level dSLR cameras are usually built from plastic, perhaps with some metal framework internally. They’re not as rugged nor expected to last as long under heavy use. These are basically d(isposable)SLR cameras. That’s not all bad - you’ve saved enough buying a lower end dSLR camera that you can invest now in sharper lenses that you can use later when you buy an upgraded model. The mechanical features of a dSLR camera can be very important and these are real differences that you should consider - how important are they to your style of photography and are they worth the extra cost? For example, a wedding or candid photographer will prefer a camera with a quiet shutter that operates quickly. -

Book V Camera

Basic Photography in 180 Days Book V - Camera Editor: Ramon F. aeroramon.com Contents 1 Day 1: 1.1 Camera; 1.1.1 Functional description; 1.1.2 History; 1.1.3 Mechanics; 1.1.4 Formats; 1.1.5 Camera accessories; 1.1.6 Camera design history; 1.1.7 Image gallery; 1.1.8 See also; 1.1.9 References; 1.1.10 Bibliography; 1.1.11 External links. 2 Day 2: 2.1 Camera obscura; 2.1.1 Physical explanation; 2.1.2 Technology; 2.1.3 History; 2.1.4 Role in the modern age; 2.1.5 Examples; 2.1.6 Public access; 2.1.7 See also; 2.1.8 Notes; 2.1.9 References; 2.1.10 Sources -

Technology Forecasting of Digital Single-Lens Reflex Camera Market: the Impact of Segmentation in TFDEA

Portland State University PDXScholar Engineering and Technology Management Faculty Publications and Presentations Engineering and Technology Management 2013 Technology Forecasting of Digital Single-Lens Reflex Camera Market: The Impact of Segmentation in TFDEA Byung Sung Yoon Portland State University Apisit Charoensupyanan Portland State University Nan Hu Portland State University Rachanida Koosawangsri Portland State University Mimie Abdulai Portland State University Follow this and additional works at: https://pdxscholar.library.pdx.edu/etm_fac Part of the Engineering Commons See next page for additional authors Let us know how access to this document benefits you. Citation Details Yoon, Byung Sung; Charoensupyan, Apisit; Hu, Nan; Koosawangsri, Rachanida; Abdulai, Mimie; and Wang, Xiaowen, Technology Forecasting of Digital Single-Lens Reflex Camera Market: The Impact of Segmentation in TFDEA, Portland International Conference on Management of Engineering and Technology (PICMET), Portland, OR, 2013. This Article is brought to you for free and open access. It has been accepted for inclusion in Engineering and Technology Management Faculty Publications and Presentations by an authorized administrator of PDXScholar. Please contact us if we can make this document more accessible: [email protected]. Authors Byung Sung Yoon, Apisit Charoensupyanan, Nan Hu, Rachanida Koosawangsri, Mimie Abdulai, and Xiaowen Wang This article is available at PDXScholar: https://pdxscholar.library.pdx.edu/etm_fac/36 2013 Proceedings of PICMET '13: Technology