ASCII, Unicode, and In Between. Douglas A. Kerr. Issue 3, June 15, 2010. ABSTRACT: The standard coded character set ASCII was formall

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Cumberland Tech Ref.Book

Forms Printer 258x/259x Technical Reference DRAFT document - Monday, August 11, 2008 1:59 pm Please note that this is a DRAFT document. More information will be added and a final version will be released at a later date. August 2008 www.lexmark.com Lexmark and Lexmark with diamond design are trademarks of Lexmark International, Inc., registered in the United States and/or other countries. © 2008 Lexmark International, Inc. All rights reserved. 740 West New Circle Road Lexington, Kentucky 40550 Draft document Edition: August 2008 The following paragraph does not apply to any country where such provisions are inconsistent with local law: LEXMARK INTERNATIONAL, INC., PROVIDES THIS PUBLICATION “AS IS” WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. Some states do not allow disclaimer of express or implied warranties in certain transactions; therefore, this statement may not apply to you. This publication could include technical inaccuracies or typographical errors. Changes are periodically made to the information herein; these changes will be incorporated in later editions. Improvements or changes in the products or the programs described may be made at any time. Comments about this publication may be addressed to Lexmark International, Inc., Department F95/032-2, 740 West New Circle Road, Lexington, Kentucky 40550, U.S.A. In the United Kingdom and Eire, send to Lexmark International Ltd., Marketing and Services Department, Westhorpe House, Westhorpe, Marlow Bucks SL7 3RQ. Lexmark may use or distribute any of the information you supply in any way it believes appropriate without incurring any obligation to you.

Hieroglyphs for the Information Age: Images As a Replacement for Characters for Languages Not Written in the Latin-1 Alphabet Akira Hasegawa

Rochester Institute of Technology RIT Scholar Works Theses Thesis/Dissertation Collections 5-1-1999 Hieroglyphs for the information age: Images as a replacement for characters for languages not written in the Latin-1 alphabet Akira Hasegawa Follow this and additional works at: http://scholarworks.rit.edu/theses Recommended Citation Hasegawa, Akira, "Hieroglyphs for the information age: Images as a replacement for characters for languages not written in the Latin-1 alphabet" (1999). Thesis. Rochester Institute of Technology. Accessed from This Thesis is brought to you for free and open access by the Thesis/Dissertation Collections at RIT Scholar Works. It has been accepted for inclusion in Theses by an authorized administrator of RIT Scholar Works. For more information, please contact [email protected]. Hieroglyphs for the Information Age: Images as a Replacement for Characters for Languages not Written in the Latin-1 Alphabet by Akira Hasegawa A thesis project submitted in partial fulfillment of the requirements for the degree of Master of Science in the School of Printing Management and Sciences in the College of Imaging Arts and Sciences of the Rochester Institute of Technology May, 1999 Thesis Advisor: Professor Frank Romano School of Printing Management and Sciences Rochester Institute of Technology Rochester, New York Certificate of Approval Master's Thesis This is to certify that the Master's Thesis of Akira Hasegawa With a major in Graphic Arts Publishing has been approved by the Thesis Committee as satisfactory for the thesis requirement for the Master of Science degree at the convocation of May 1999 Thesis Committee: Frank Romano Thesis Advisor Marie Freckleton Graduate Program Coordinator C.

Unicode Ate My Brain

UNICODE ATE MY BRAIN John Cowan Reuters Health Information Copyright 2001-04 John Cowan under GNU GPL

Copyright • Copyright © 2001 John Cowan • Licensed under the GNU General Public License • ABSOLUTELY NO WARRANTIES; USE AT YOUR OWN RISK • Portions written by Tim Bray; used by permission • Title devised by Smarasderagd; used by permission • Black and white for readability

Abstract Unicode, the universal character set, is one of the foundation technologies of XML. However, it is not as widely understood as it should be, because of the unavoidable complexity of handling all of the world's writing systems, even in a fairly uniform way. This tutorial will provide the basics about using Unicode and XML to save lots of money and achieve world domination at the same time.

Roadmap • Brief introduction (4 slides) • Before Unicode (16 slides) • The Unicode Standard (25 slides) • Encodings (11 slides) • XML (10 slides) • The Programmer's View (27 slides) • Points to Remember (1 slide)

How Many Different Characters? a A à á â ã ä å ā ă ą a a a a a a a a a a a

How Computers Do Text • Characters in computer storage are represented by “small” numbers • The numbers use a small number of bits: from 6 (BCD) to 21 (Unicode) to 32 (wchar_t on some Unix boxes) • Design choices: – Which numbers encode which characters – How to pack the numbers into bytes

Where Does XML Come In? • XML is a textual data format • XML software is required to handle all commercially important characters in the world; a promise to “handle XML” implies a promise to be international • Applications can do what they want; monolingual applications can mostly ignore internationalization

$$$ £££ ¥¥¥ • Extra cost of building-in internationalization to a new computer application: about 20% (assuming XML and Unicode).

A Decision Procedure for String to Code Point Conversion

A Decision Procedure for String to Code Point Conversion. Andrew Reynolds (1), Andres Nötzli (2), Clark Barrett (2), and Cesare Tinelli (1). (1) Department of Computer Science, The University of Iowa, Iowa City, USA; (2) Department of Computer Science, Stanford University, Stanford, USA. Abstract. In text encoding standards such as Unicode, text strings are sequences of code points, each of which can be represented as a natural number. We present a decision procedure for a concatenation-free theory of strings that includes length and a conversion function from strings to integer code points. Furthermore, we show how many common string operations, such as conversions between lowercase and uppercase, can be naturally encoded using this conversion function. We describe our implementation of this approach in the SMT solver CVC4, which contains a high-performance string subsolver, and show that the use of a native procedure for code points significantly improves its performance with respect to other state-of-the-art string solvers. 1 Introduction String processing is an important part of many kinds of software. In particular, strings often serve as a common representation for the exchange of data at interfaces between different programs, between different programming languages, and between programs and users. At such interfaces, strings often represent values of types other than strings, and developers have to be careful to sanitize and parse those strings correctly. This is a challenging task, making the ability to automatically reason about such software and interfaces appealing. Applications of automated reasoning about strings include finding or proving the absence of SQL injections and XSS vulnerabilities in web applications [28, 25, 31], reasoning about access policies in cloud infrastructure [7], and generating database tables from SQL queries for unit testing [29].
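The idea in the abstract above, that case conversion can be expressed through a string-to-code-point function, can be sketched in a few lines of Python. This is only an illustration, not the decision procedure or the CVC4 implementation. It assumes the conversion function returns the code point of a one-character string and -1 for any other string, and it handles ASCII letters only. The names `to_code` and `to_upper_ascii` are hypothetical.

```python
def to_code(s: str) -> int:
    """Code point of a one-character string; -1 otherwise (assumed convention)."""
    return ord(s) if len(s) == 1 else -1


def to_upper_ascii(s: str) -> str:
    """Uppercase ASCII letters by arithmetic on code points (illustrative only)."""
    out = []
    for ch in s:
        c = to_code(ch)
        # 'a'..'z' occupy 0x61..0x7A; the uppercase forms sit 0x20 lower.
        if 0x61 <= c <= 0x7A:
            c -= 0x20
        out.append(chr(c))
    return "".join(out)


if __name__ == "__main__":
    print(to_code("A"), to_code(""), to_code("ab"))
    print(to_upper_ascii("code point"))
```

Restricting the sketch to ASCII keeps the arithmetic to a single fixed offset; case mapping over the rest of Unicode is not a fixed offset and is deliberately left out.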

PCL PC-8, Code Page 437

PCL PC-8, Code Page 437. PCL Symbol Set: 10U. Unicode glyph correspondence tables. Contact:[email protected] http://pcl.to (a) http://www.pclviewer.com (c) RedTitan Technology 2005

| Code | Unicode | Glyph | Description |
|------|---------|-------|-------------|
| $21 | U0021 | ! | Exclamation |
| $22 | U0022 | " | Neutral double quote |
| $23 | U0023 | # | Number |
| $24 | U0024 | $ | Dollar |
| $25 | U0025 | % | Per cent |
| $26 | U0026 | & | Ampersand |
| $27 | U0027 | ' | Neutral single quote |
| $28 | U0028 | ( | Left parenthesis |
| $29 | U0029 | ) | Right parenthesis |
| $2A | U002A | * | Asterisk |
| $2B | U002B | + | Plus |
| $2C | U002C | , | Comma, decimal separator |
| $2D | U002D | - | Hyphen |
| $2E | U002E | . | Period, full stop |
| $2F | U002F | / | Solidus, slash |
| $30 | U0030 | 0 | Numeral zero |
| $31 | U0031 | 1 | Numeral one |
| $32 | U0032 | 2 | Numeral two |
| $33 | U0033 | 3 | Numeral three |
| $34 | U0034 | 4 | Numeral four |
| $35 | U0035 | 5 | Numeral five |
| $36 | U0036 | 6 | Numeral six |
| $37 | U0037 | 7 | Numeral seven |
| $38 | U0038 | | |
| $90 | U00C9 | É | Uppercase e acute |
| $91 | U00E6 | æ | Lowercase ae diphthong |
| $92 | U00C6 | Æ | Uppercase ae diphthong |
| $93 | U00F4 | ô | Lowercase o circumflex |
| $94 | U00F6 | ö | Lowercase o dieresis |
| $95 | U00F2 | ò | Lowercase o grave |
| $96 | U00FB | û | Lowercase u circumflex |
| $97 | U00F9 | ù | Lowercase u grave |
| $98 | U00FF | ÿ | Lowercase y dieresis |
| $99 | U00D6 | Ö | Uppercase o dieresis |
| $9A | U00DC | Ü | Uppercase u dieresis |
| $9B | U00A2 | ¢ | Cent sign |
| $9C | U00A3 | £ | Pound sterling |
| $9D | U00A5 | ¥ | Yen sign |
| $9E | U20A7 | ₧ | Pesetas |
| $9F | U0192 | ƒ | Florin sign |
| $A0 | U00E1 | á | Lowercase a acute |
| $A1 | U00ED | í | Lowercase i acute |
| $A2 | U00F3 | ó | Lowercase o acute |
| $A3 | U00FA | ú | Lowercase u acute |
| $A4 | U00F1 | ñ | Lowercase n tilde |
| $A5 | U00D1 | Ñ | Uppercase n tilde |
| $A6 | U00AA | ª | Female ordinal (a) |
| $A7 | U00BA | º | Male ordinal (o) |

Character Set Migration Best Practices For

Character Set Migration Best Practices An Oracle White Paper October 2002 Server Globalization Technology Oracle Corporation Introduction - Database Character Set Migration Migrating from one database character set to another requires proper strategy and tools. This paper outlines the best practices for database character set migration that have been used successfully on behalf of hundreds of customers. Following these methods will help determine what strategies are best suited for your environment and will help minimize risk and downtime. This paper also highlights migration to Unicode. Many customers today are finding Unicode to be essential to supporting their global businesses. Oracle provides consulting services for very large or complex environments to help minimize the downtime while maximizing the safe migration of business critical data. Why migrate? Database character set migration often occurs from a requirement to support new languages. As companies internationalize their operations and expand services to customers all around the world, they find the need to support data storage of more world languages than are available within their existing database character set. Historically, many legacy systems required support for only one or possibly a few languages; therefore, the original character set chosen had a limited repertoire of characters that could be supported. For example, in America a 7-bit character set called ASCII is satisfactory for supporting English data exclusively. In Europe, a variety of 8-bit European character sets can support specific subsets of European languages together with English. In Asia, multibyte character sets that could support a given Asian language and English were chosen. These were reasonable choices that fulfilled the initial requirements and provided the best combination of economy and performance.

Unicode and Code Page Support

Natural for Mainframes Unicode and Code Page Support Version 4.2.6 for Mainframes October 2009 This document applies to Natural Version 4.2.6 for Mainframes and to all subsequent releases. Specifications contained herein are subject to change and these changes will be reported in subsequent release notes or new editions. Copyright © Software AG 1979-2009. All rights reserved. The name Software AG, webMethods and all Software AG product names are either trademarks or registered trademarks of Software AG and/or Software AG USA, Inc. Other company and product names mentioned herein may be trademarks of their respective owners.

Table of Contents
1 Unicode and Code Page Support
2 Introduction
About Code Pages and Unicode
About Unicode and Code Page Support in Natural
ICU on Mainframe Platforms
3 Unicode and Code Page Support in the Natural Programming Language
Natural Data Format U for Unicode-Based Data
Statements
Logical

Assessment of Options for Handling Full Unicode Character Encodings in MARC21 a Study for the Library of Congress

Assessment of Options for Handling Full Unicode Character Encodings in MARC21 A Study for the Library of Congress Part 1: New Scripts Jack Cain Senior Consultant Trylus Computing, Toronto 1 Purpose This assessment intends to study the issues and make recommendations on the possible expansion of the character set repertoire for bibliographic records in MARC21 format. 1.1 “Encoding Scheme” vs. “Repertoire” An encoding scheme contains codes by which characters are represented in computer memory. These codes are organized according to a certain methodology called an encoding scheme. The list of all characters so encoded is referred to as the “repertoire” of characters in the given encoding scheme. For example, ASCII is one encoding scheme, perhaps the one best known to the average non-technical person in North America. “A”, “B”, & “C” are three characters in the repertoire of this encoding scheme. These three characters are assigned encodings 41, 42 & 43 in ASCII (expressed here in hexadecimal). 1.2 MARC8 "MARC8" is the term commonly used to refer both to the encoding scheme and its repertoire as used in MARC records up to 1998. The ‘8’ refers to the fact that, unlike Unicode which is a multi-byte per character code set, the MARC8 encoding scheme is principally made up of multiple one-byte tables in which each character is encoded using a single 8-bit byte. (It also includes the EACC set which actually uses fixed length 3 bytes per character.) (For details on MARC8 and its specifications see: http://www.loc.gov/marc/.) MARC8 was introduced around 1968 and was initially limited to essentially Latin script only.
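The ASCII example in section 1.1 of the excerpt above (“A”, “B” and “C” encoded as hexadecimal 41, 42 and 43) can be checked with a minimal Python sketch. The helper names `ascii_code_hex` and `in_ascii_repertoire` are hypothetical and exist only for this illustration; the sketch relies on Python's built-in `ascii` codec.

```python
def ascii_code_hex(ch: str) -> str:
    """Return the ASCII code of one character as two uppercase hex digits."""
    # str.encode raises UnicodeEncodeError for characters outside ASCII.
    return ch.encode("ascii").hex().upper()


def in_ascii_repertoire(ch: str) -> bool:
    """True if the character belongs to the ASCII repertoire."""
    try:
        ch.encode("ascii")
        return True
    except UnicodeEncodeError:
        return False


if __name__ == "__main__":
    for ch in "ABC":
        print(ch, ascii_code_hex(ch))
    print(in_ascii_repertoire("A"), in_ascii_repertoire("é"))
```

The second helper separates the two notions the excerpt distinguishes: the encoding scheme assigns the codes, while the repertoire is simply the set of characters for which a code exists.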

Verity Locale Configuration Guide V5.0 for Peoplesoft

Verity® Locale Configuration Guide V 5.0 for PeopleSoft® November 15, 2003 Original Part Number DM0619 Verity, Incorporated 894 Ross Drive Sunnyvale, California 94089 (408) 541-1500 Verity Benelux BV Coltbaan 31 3439 NG Nieuwegein The Netherlands Copyright 2003 Verity, Inc. All rights reserved. No part of this publication may be reproduced, transmitted, stored in a retrieval system, nor translated into any human or computer language, in any form or by any means, electronic, mechanical, magnetic, optical, chemical, manual or otherwise, without the prior written permission of the copyright owner, Verity, Inc., 894 Ross Drive, Sunnyvale, California 94089. The copyrighted software that accompanies this manual is licensed to the End User for use only in strict accordance with the End User License Agreement, which the Licensee should read carefully before commencing use of the software. Verity®, Ultraseek®, TOPIC®, KeyView®, and Knowledge Organizer® are registered trademarks of Verity, Inc. in the United States and other countries. The Verity logo, Verity Portal One™, and Verity® Profiler™ are trademarks of Verity, Inc. Sun, Sun Microsystems, the Sun logo, Sun Workstation, Sun Operating Environment, and Java are trademarks or registered trademarks of Sun Microsystems, Inc. in the United States and other countries. Xerces XML Parser Copyright 1999-2000 The Apache Software Foundation. All rights reserved. Microsoft is a registered trademark, and MS-DOS, Windows, Windows 95, Windows NT, and other Microsoft products referenced herein are trademarks of Microsoft Corporation. IBM is a registered trademark of International Business Machines Corporation. The American Heritage® Concise Dictionary, Third Edition Copyright 1994 by Houghton Mifflin Company. Electronic version licensed from Lernout & Hauspie Speech Products N.V.

B249 Data Transmission Control Unit

B249 DATA TRANSMISSION CONTROL UNIT Burroughs FIELD ENGINEERING Sections: INTRODUCTION AND OPERATION, FUNCTIONAL DETAIL, CIRCUIT DETAIL, ADJUSTMENTS, MAINTENANCE PROCEDURES, INSTALLATION PROCEDURES, RELIABILITY IMPROVEMENT NOTICES, OPTIONAL FEATURES, MODIFICATIONS (BRANCH LIBRARIES) Printed in U.S. America 9-15-66 Form 1026259 Burroughs - B249 Data Transmission Technical Manual

INDEX
INTRODUCTION & OPERATION - SECTION I: B249 Data Transmission Control Unit - General Description; Glossary - Data Transmission Terminal Unit and MCU; Glossary - DTCU & System; Physical Description
FUNCTIONAL DETAIL - SECTION II: B300 Active Interrogate; B300 Data Communications Read; B300 Data Communications Write; B300 Passive Interrogate; B300 Passive Interrogate - ITU (B486) Mode; B300 Read - ITU (B486) Mode; B300 Write - ITU (B486) Mode; B5500 Data Communications Interrogate; B5500 Data Communications Read; B5500 Data Communications Write
CIRCUIT DETAIL - SECTION III: "A" Register Load - (Normal & Reverse); Clock Control; Scan; Translator
ADJUSTMENTS - SECTION IV: Clock Adjustments; Variable Bias Adjustment
MAINTENANCE PROCEDURES - SECTION V: Maintenance Panel
INSTALLATION PROCEDURES - SECTION VI: DTCU Installation; Pluggable Options; Power ON; Special Inquiry Terminal Connection
NOTE: Pages for Sections VII, VIII and IX will be furnished when applicable. Revised 4/1/67 For Form 1026259

B249 DATA TRANSMISSION CONTROL UNIT - GENERAL DESCRIPTION The B249 DTCU is required when: 1. More than one B487 DTTU is used on a single Processing System.

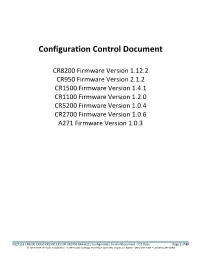

Configuration Control Document

Configuration Control Document CR8200 Firmware Version 1.12.2 CR950 Firmware Version 2.1.2 CR1500 Firmware Version 1.4.1 CR1100 Firmware Version 1.2.0 CR5200 Firmware Version 1.0.4 CR2700 Firmware Version 1.0.6 A271 Firmware Version 1.0.3 D027153 CR8200 CR950 CR1500 CR1100 CR2700 CRA-A271 Configuration Control Document CCD.Docx © 2013-2019 The Code Corporation 12393 South Gateway Park Place Suite 600, Draper, UT 84020 (801) 495-2200 FAX (801) 495-0280

Table of Contents
Keyword Table
Scope
Notations
Reader Command Overview
4.1 Configuration Command Architecture
4.2 Command Format
4.3 Supported Commands
4.3.1 <CF> – Configuration Manager

Relink Eo 3-11-97

CHAPTER 1 COMMUNICATIONS

As an Aviation Electronics Technician, you will be tasked to operate and maintain many different types of airborne communications equipment. These systems may differ in some respects, but they are similar in many ways. As an example, there are various models of AM radios, yet they all serve the same function and operate on the same basic principles. It is beyond the scope of this manual to discuss each and every model of communication equipment used on naval aircraft; therefore, only representative systems will be discussed. Every effort has been made to use not only systems that are common to many of the different platforms, but also have not been used in the other training manuals. It is the intent of this manual to have systems from each and every type of aircraft in use today.

RADIO COMMUNICATIONS

Learning Objective: Recognize the various types of radio communications.

[…] communication circuits. These operations are accomplished through the use of compatible and flexible communication systems.

Radio is the most important means of communicating in the Navy today. There are many methods of transmitting in use throughout the world. This manual will discuss three types. They are radiotelegraph, radiotelephone, and teletypewriter.

Radiotelegraph

Radiotelegraph is commonly called CW (continuous wave) telegraphy. Telegraphy is accomplished by opening and closing a switch to separate a continuously transmitted wave. The resulting “dots” and “dashes” are based on the Morse code. The major disadvantage of this type of communication is the relatively slow speed and the need for experienced operators at both ends.