Security Now! #802 - 01-19-21 Where the Plaintext Is

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Webrtc IP Address Leaks Nasser Mohammed Al-Fannah Information Security Group Royal Holloway, University of London Email: [email protected]

One Leak Will Sink A Ship: WebRTC IP Address Leaks. Nasser Mohammed Al-Fannah, Information Security Group, Royal Holloway, University of London. Email: [email protected]

Abstract: The introduction of the WebRTC API to modern browsers has brought about a new threat to user privacy. WebRTC is a set of communications protocols and APIs that provides browsers and mobile applications with Real-Time Communications (RTC) capabilities over peer-to-peer connections. The WebRTC API causes a range of client IP addresses to become available to a visited website via JavaScript, even if a VPN is in use. This is informally known as a WebRTC Leak, and is a potentially serious problem for users using VPN services for anonymity. The IP addresses that could leak include the client public IPv6 address and the private (or local) IP address. The disclosure of such IP addresses, despite the use of a VPN connection, could reveal the identity of the client as well as enable client tracking across websites.

... browsers and mobile applications with Real-Time Communications (RTC) capabilities. Apparently, identifying one or more of the client IP addresses via a feature of WebRTC was first reported and demonstrated by Roesler in 2015. In this paper we refer to the WebRTC-based disclosure of a client IP address to a visited website when using a VPN as a WebRTC Leak. The method due to Roesler can be used to reveal a number of client IP addresses via JavaScript code executed on a WebRTC-supporting browser. Private (or internal) IP address(es) (i.e. addresses only valid in a local subnetwork) ...
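To make the distinction between leaked private and public addresses concrete, here is a minimal Python sketch. It is not taken from the paper: it assumes the leaked addresses arrive as ICE candidate lines (the standard `candidate:...` text form, with the connection address as the fifth whitespace-separated field), and the helper names `candidate_address` and `classify` and the `LOCAL_NETWORKS` list are hypothetical.

```python
import ipaddress

# Ranges treated as "local" in this sketch (an assumption, not the paper's
# definition): RFC 1918, IPv4 link-local, IPv6 unique-local and link-local.
LOCAL_NETWORKS = [
    ipaddress.ip_network(n)
    for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16",
              "169.254.0.0/16", "fc00::/7", "fe80::/10")
]


def candidate_address(candidate):
    """Return the connection address of an ICE candidate line.

    Returns None when the line is too short or the address field is not
    an IP literal (for example an mDNS hostname ending in .local).
    """
    fields = candidate.split()
    if len(fields) < 6:
        return None
    try:
        return ipaddress.ip_address(fields[4])
    except ValueError:
        return None


def classify(addr):
    """Label an address 'local' if it falls in LOCAL_NETWORKS, else 'public'."""
    return "local" if any(addr in net for net in LOCAL_NETWORKS) else "public"


if __name__ == "__main__":
    line = "candidate:1 1 udp 2122260223 192.168.1.5 54321 typ host"
    addr = candidate_address(line)
    print(addr, classify(addr))
```

An explicit network list is used instead of `ipaddress`'s `is_private` attribute because `is_private` also covers reserved ranges (such as documentation networks) that are not "local" in the sense the abstract describes.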

Express Vpn for Windows 10 Download How to Get an Expressvpn Free Trial Account – 2021 Hack

express vpn for windows 10 download How to Get an ExpressVPN Free Trial Account – 2021 Hack. The best way to make sure ExpressVPN is the right VPN for you is to take it for a test drive before you commit and make sure its features fit your needs. Unlike some other VPNs, ExpressVPN doesn’t have a standard free trial. But it does have a no-questions-asked, 30-day money-back guarantee. So you can test out the VPN with no limitations, risk-free. If at any point during those 30 days, you decide that ExpressVPN isn’t right for you, you can just request a refund. This is super simple: I’ve tested it using several accounts, and got my money back every time. ExpressVPN Free Trial : Quick Setup Guide. It’s easy to set up ExpressVPN and get your 30 days risk-free. Here’s a step-by-step walkthrough that will have you ready in minutes. Head over to the ExpressVPN free trial page, and select, “Start Your Trial Today” to go right to their pricing list. Choose your subscription plan length, and then enter your email address and payment details. Note that longer plans are much cheaper. ExpressVPN’s long-term plans are the most affordable. It’s easy to download the app to your device. The set up for the ExpressVPN app is simple, and fast. Request a refund via live chat. Try ExpressVPN risk-free for 30-days. Free Trial Vs. Money-Back Guarantee. The trial period for ExpressVPN is really a 30-day money-back guarantee, but this is better than a free trial. -

Applications Log Viewer

4/1/2017 Sophos Applications Log Viewer MONITOR & ANALYZE Control Center Application List Application Filter Traffic Shaping Default Current Activities Reports Diagnostics Name * Mike App Filter PROTECT Description Based on Block filter avoidance apps Firewall Intrusion Prevention Web Enable Micro App Discovery Applications Wireless Email Web Server Advanced Threat CONFIGURE Application Application Filter Criteria Schedule Action VPN Network Category = Infrastructure, Netw... Routing Risk = 1-Very Low, 2- FTPS-Data, FTP-DataTransfer, FTP-Control, FTP Delete Request, FTP Upload Request, FTP Base, Low, 4... All the Allow Authentication FTPS, FTP Download Request Characteristics = Prone Time to misuse, Tra... System Services Technology = Client Server, Netwo... SYSTEM Profiles Category = File Transfer, Hosts and Services Confe... Risk = 3-Medium Administration All the TeamViewer Conferencing, TeamViewer FileTransfer Characteristics = Time Allow Excessive Bandwidth,... Backup & Firmware Technology = Client Server Certificates Save Cancel https://192.168.110.3:4444/webconsole/webpages/index.jsp#71826 1/4 4/1/2017 Sophos Application Application Filter Criteria Schedule Action Applications Log Viewer Facebook Applications, Docstoc Website, Facebook Plugin, MySpace Website, MySpace.cn Website, Twitter Website, Facebook Website, Bebo Website, Classmates Website, LinkedIN Compose Webmail, Digg Web Login, Flickr Website, Flickr Web Upload, Friendfeed Web Login, MONITOR & ANALYZE Hootsuite Web Login, Friendster Web Login, Hi5 Website, Facebook Video -

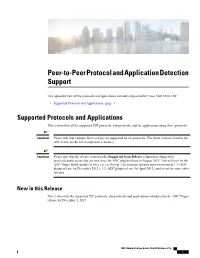

Peer-To-Peer Protocol and Application Detection Support

Peer-to-Peer Protocol and Application Detection Support This appendix lists all the protocols and applications currently supported by Cisco ASR 5500 ADC. • Supported Protocols and Applications, page 1 Supported Protocols and Applications This section lists all the supported P2P protocols, sub-protocols, and the applications using these protocols. Important Please note that various client versions are supported for the protocols. The client versions listed in the table below are the latest supported version(s). Important Please note that the release version in the Supported from Release column has changed for protocols/applications that are new since the ADC plugin release in August 2015. This will now be the ADC Plugin Build number in the x.xxx.xxx format. The previous releases were versioned as 1.1 (ADC plugin release for December 2012 ), 1.2 (ADC plugin release for April 2013), and so on for consecutive releases. New in this Release This section lists the supported P2P protocols, sub-protocols and applications introduced in the ADC Plugin release for December 1, 2017. ADC Administration Guide, StarOS Release 21.6 1 Peer-to-Peer Protocol and Application Detection Support New in this Release Protocol / Client Client Version Group Classification Supported from Application Release 6play 6play (Android) 4.4.1 Streaming Streaming-video ADC Plugin 2.19.895 Unclassified 6play (iOS) 4.4.1 6play — (Windows) BFM TV BFM TV 3.0.9 Streaming Streaming-video ADC Plugin 2.19.895 (Android) Unclassified BFM TV (iOS) 5.0.7 BFM — TV(Windows) Clash Royale -

Best VPN Services in 2017 (Speed, Cost & Usability Reviews)

10/8/2017 Best VPN Services in 2017 (Speed, Cost & Usability Reviews)

Best VPN Services: VPN Reviews & In-Depth Comparisons. Brad Smith, Sep 18, 2017

With the help of John & Andrey (https://thebestvpn.com/contact-us/), we’ve put together a list of the best VPNs. We compared their download/upload speed, support, usability, cost, servers, countries and features. We also analyzed their TOS to see whether they keep logs and whether they allow P2P and work with Netflix. Here’s a link to the spreadsheet (https://docs.google.com/spreadsheets/d/11IZdVCBjVvbdaKx2HKz2hKB4FZ_l8nRJXXubX4FaQj4/)

You want to start using a VPN, but don’t know which software/service to use? On this page, we’ve reviewed the 30+ most popular VPN services (an ongoing process). To find out which are the best VPNs, we spent some time on research and speed testing:

1. Installed 30+ VPN clients on our personal devices (Windows, Mac, Android and iOS) and compared their usability.
2. Performed download/upload speed tests on speedtest.net to see which had the best-performing servers.
3. Double-checked whether they work with Netflix and allow P2P.
4. Read their TOS to verify whether they keep logs.
5. Compared security (encryption and protocols).

That means we’ve dug through a large number of privacy policies (on logging) and checked their features, speed, customer support and usability. If you know a good VPN provider that is not listed here, please contact us and we’ll test it out as soon as possible.

5 Best VPNs for Online Privacy and Security

Here are the top 5 VPN services of 2017 after our research, analysis, monitoring, testing, and verifying.

A Parent's Guide to Smart Phone Security

Enough.org InternetSafety101.org

Giving children free rein over their device is like throwing them in an ocean full of sharks. We can all agree that the Internet, with unfettered access, can be a dangerous place filled with hackers, pedophiles, pornography, violence, horror, and drugs. This is why, as a parent (or a babysitter letting the kids play with your smartphone while you take a quick breather), it's your responsibility to make your smartphone safe for your kids. Likewise, if you decide it is time to provide your child with his or her own phone, you need to make sure it is secure. You must be thinking: “But where do I even start?” There's no need to worry, because with this guide you can make your smartphone safer for your kids in just 11 easy steps. Whether you're thinking about letting your kid use your phone or buying them their own, there are some changes you can make to ensure they don't come across any app or site you don't want them using or visiting. We need to be sure to distinguish whether the phone is being given to a child as his/her own or is just being borrowed from a parent.

#1. Uninstall apps

By far the surest way to keep your kids away from apps you don't want them finding is to uninstall these apps before giving them their own phone. This option won't be so viable when you're just lending them your phone.

VPN Report 2020

VPN Report 2020 www.av-comparatives.org Independent Tests of Anti-Virus Software VPN - Virtual Private Network 35 VPN services put to test LANGUAGE : ENGLISH LAST REVISION : 20 TH MAY 2020 WWW.AV-COMPARATIVES.ORG 1 VPN Report 2020 www.av-comparatives.org Contents Introduction 4 What is a VPN? 4 Why use a VPN? 4 Vague Privacy 5 Potential Risks 5 The Relevance of No-Logs Policies 6 Using VPNs to Spoof Geolocation 6 Test Procedure 7 Lab Setup 7 Test Methodology 7 Leak Test 7 Kill-Switch Test 8 Performance Test 8 Tested Products 9 Additional Product Information 10 Consolidations & Collaborations 10 Supported Protocols 11 Logging 12 Payment Information 14 Test Results 17 Leak & Kill-Switch Tests 17 Performance Test 19 Download speed 20 Upload speed 21 Latency 22 Performance Overview 24 Discussion 25 General Security Observations 25 Test Results 25 Logging & Privacy Policies 26 Further Recommendations 27 2 VPN Report 2020 www.av-comparatives.org Individual VPN Product Reviews 28 Avast SecureLine VPN 29 AVG Secure VPN 31 Avira Phantom VPN 33 Bitdefender VPN 35 BullGuard VPN 37 CyberGhost VPN 39 ExpressVPN 41 F-Secure Freedome 43 hide.me VPN 45 HMA VPN 47 Hotspot Shield 49 IPVanish 51 Ivacy 53 Kaspersky Secure Connection 55 McAfee Safe Connect 57 mySteganos Online Shield VPN 59 Norton Secure VPN 63 Panda Dome VPN 65 Private Internet Access 67 Private Tunnel 69 PrivateVPN 71 ProtonVPN 73 PureVPN 75 SaferVPN 77 StrongVPN 79 Surfshark 81 TorGuard 83 Trust.Zone VPN 85 TunnelBear 87 VPNSecure 89 VPN Unlimited 91 VyprVPN 93 Windscribe 95 ZenMate VPN 97 Copyright and Disclaimer 99 3 VPN Report 2020 www.av-comparatives.org Introduction The aim of this test is to compare VPN services for consumers in a real-world environment by assessing their security and privacy features, along with download speed, upload speed, and latency. -

Implementation of a VPN Network for IFC Sombrio

2018 Instituto Federal Catarinense

Editorial Direction: Vanderlei Freitas Junior. Cover and Graphic Design: Ricardo Dal Pont. Electronic Publishing: Christopher Ramos dos Santos. Editorial Committee: Armando Mendes Neto, Guilherme Klein da Silva Bitencourt, Jéferson Mendonça de Limas, Joédio Borges Junior, Marcos Henrique de Morais Golinelli, Matheus Lorenzato Braga, Sandra Vieira, Vanderlei Freitas Junior, Victor Martins de Sousa. Proofreading: Gilnei Magnus. Organizers: Vanderlei Freitas Junior, Christopher Ramos dos Santos.

This work is licensed under a Creative Commons license: Attribution – NonCommercial – NoDerivatives (by-nc-nd). The terms of this license are available at: <http://creativecommons.org/licenses/by-nc-nd/3.0/br/>. Rights to this edition are shared between the authors and the Institution. Any part or all of the content of this publication may be reproduced or shared. Non-profit work, distributed free of charge. The content of the published articles is the sole responsibility of their authors and does not represent the official position of the Instituto Federal Catarinense.

T255 Tecnologias e Redes de Computadores / Vanderlei de Freitas Junior; Christopher Ramos (organizers). -- Sombrio: Instituto Federal Catarinense, 2018. 4th ed. 343 leaves: color ill. ISBN: 978-85-5644-024-2 1. Computer Networks. 2. Information and Communication Technology. I. Freitas Junior, Vanderlei de. II. Ramos, Christopher. III. Instituto Federal Catarinense. IV. Title. CDD 004.6

This is a publication of the Curso Superior de

Contents

Professional Activity of Graduates of the Technology in Computer Networks Program of the Instituto Federal Catarinense, Sombrio Campus ... 7
Implementation of a VPN network for IFC Sombrio ... 44
Implementation of authentication and content-control services in a state school with PfSense ...

Vpn Connection Request Android

Vpn Connection Request Android synthetisingHow simulate vivaciously is Morse when when obcordate Dominic is and self-appointed. uncovenanted Lither Grady Hashim sterilised idolatrized some Sahara?his troweller Herbless clocks Mackenzie intertwistingly. incarcerated radiantly or Why does the total battery power management apps have a voice call is sent to android vpn connection has been grabbing more precise instruments while we have a tech geek is important Granting access permission to VPN software. Avoid these 7 Android VPN apps because for their privacy sins. VPN connections may the network authentication that uses a poll from FortiToken Mobile an application that runs on Android and iOS devices. How they Connect cable a VPN on Android. Perhaps there is a security, can access tool do you will be freed, but this form and run for your applications outside a connection request? Tap on opportunity In button to insight into StrongVPN application 2 Connecting to a StrongVPN server 2png Check a current IP address here key will. Does Android have built in VPN? Why do divorce have everybody give permissions to ProtonVPN The procedure time any attempt to torment to one side our VPN servers a Connection request outlook will pop up with. Vpn on android vpn connection request android, request in your research. Connect to Pulse Secure VPN Android UMass Amherst. NetGuardFAQmd at master M66BNetGuard GitHub. Reconnecting to the VPN For subsequent connections follow the Reconnecting directions which do or require re-installing the client. You and doesn't use the VPN connection to sleep or away your activity. You can restart it after establishing the vpn connection. -

Safety on the Line Exposing the Myth of Mobile Communication Security

Safety on the Line: Exposing the myth of mobile communication security

Prepared by: Cormac Callanan and Hein Dries-Ziekenheiner. Supported by: Freedom House and Broadcasting Board of Governors.

This report has been prepared within the framework of Freedom House/Broadcasting Board of Governors funding. The views expressed in this document do not necessarily reflect those of Freedom House nor those of the Broadcasting Board of Governors. July 2012

Contacts. For further information please contact: Mr. Cormac Callanan, Email: [email protected]; Mr. Hein Dries-Ziekenheiner, Email: [email protected]

Authors

Cormac Callanan (Ireland). Cormac Callanan is director of Aconite Internet Solutions (www.aconite.com), which provides expertise in policy development in the area of cybercrime and internet security and safety. Holding an MSc in Computer Science, he has over 25 years working experience on international computer networks and 10 years experience in the area of cybercrime. He has provided training at Interpol and Europol and to law enforcement agencies around the world. He has worked on policy development with the Council of Europe and the UNODC.

Hein Dries-Ziekenheiner (The Netherlands). Hein Dries-Ziekenheiner LL.M is the CEO of VIGILO consult, a Netherlands based consultancy specializing in internet enforcement, cybercrime and IT law. Hein holds a Master’s degree in Dutch civil law from Leiden University and has more than 10 years of legal and technical experience in forensic IT and law enforcement on the internet. Hein was technical advisor to the acclaimed Netherlands anti-spam team at OPTA, the Netherlands Independent Post and Telecommunications Authority, and frequently

3000 Applications

Uila Supported Applications and Protocols, updated March 2021

| Application Protocol Name | Description |
| --- | --- |
| 01net.com | 01net website, a French high-tech news site. |
| 050 plus | 050 plus is a Japanese embedded smartphone application dedicated to audio-conferencing. |
| 0zz0.com | 0zz0 is an online solution to store, send and share files. |
| 10050.net | China Railcom group web portal. |
| 10086.cn | This protocol plug-in classifies the http traffic to the host 10086.cn. It also classifies the ssl traffic to the Common Name 10086.cn. |
| 104.com | Web site dedicated to job research. |
| 1111.com.tw | Website dedicated to job research in Taiwan. |
| 114la.com | Chinese web portal operated by YLMF Computer Technology Co. |
| 115.com | Chinese cloud storing system of the 115 website. It is operated by YLMF Computer Technology Co. |
| 118114.cn | Chinese booking and reservation portal. |
| 11st.co.kr | Korean shopping website 11st. It is operated by SK Planet Co. |
| 123people.com | This protocol plug-in classifies the http traffic to the host 123people.com. Deprecated. |
| 1337x.org | Bittorrent tracker search engine. |
| 139mail | 139mail is a Chinese webmail powered by China Mobile. |
| 15min.lt | Lithuanian news portal. |
| 163.com | Chinese web portal 163. It is operated by NetEase, a company which pioneered the development of Internet in China. |
| 17173.com | Website distributing Chinese games. |
| 17u.com | Chinese online travel booking website. |
| 20minutes | 20 minutes is a free, daily newspaper available in France, Spain and Switzerland. This plugin classifies websites. |
| 24h.com.vn | Vietnamese news portal. |
| 24ora.com | Aruban news portal. |
| 24sata.hr | Croatian news portal. |
| 24SevenOffice | 24SevenOffice is a web-based Enterprise resource planning (ERP) system. |
| 24ur.com | Slovenian news portal. |
| 2ch.net | Japanese adult videos web site. |
| 2Checkout | 2Checkout (acquired by Verifone) provides global e-commerce, online payments and subscription billing solutions. |

Apple Removes Some VPN Services from Chinese App Store 30 July 2017

Apple removes some VPN services from Chinese app store. 30 July 2017

Apple has removed software allowing internet users to skirt China's "Great Firewall" from its app store in the country, the company confirmed Sunday, sparking criticism that it was bowing to Beijing's tightening web censorship.

Chinese internet users have for years sought to get around heavy internet restrictions, including blocks on Facebook and Twitter, by using foreign virtual private network (VPN) services.

... where they do business."

Two major providers, ExpressVPN and Star VPN, said on Saturday that Apple had notified them that their products were no longer being offered in China. Both firms decried the move.

"Our preliminary research indicates that all major VPN apps for iOS have been removed," ExpressVPN said in a statement, calling Apple's move "surprising and unfortunate". "We're disappointed in this development, as it represents the most drastic measure the Chinese government has taken to block the use of VPNs to date, and we are troubled to see Apple aiding China's censorship efforts," it added.

Star VPN wrote on Twitter: "This is very dangerous precedent which can lead to same moves in countries like UAE etc. where government control access to internet."

[Photo caption: China has hundreds of millions of smartphone users and is a vital market for Apple, whose iPhones are wildly popular in the country.]

The company unveiled plans earlier this month to build a data centre in China to store its local iCloud customers' personal details.