3. An Analysis of Today's Batch Report

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

A Decryption Process for Android Database Forensics

International Journal of Computer Sciences and Engineering, Open Access Research Paper, Vol. 7, Issue 3, March 2019, E-ISSN: 2347-2693. A Decryption Process for Android Database Forensics. Nibedita Chakraborty (1*), Krishna Punwar (2). (1, 2) Dept. of Information Technology and Telecommunication, Raksha Shakti University, Ahmedabad, India. *Corresponding Author: [email protected], Tel.: 7980118774. DOI: https://doi.org/10.26438/ijcse/v7i3.2326 | Available online at: www.ijcseonline.org. Accepted: 18/Mar/2019, Published: 31/Mar/2019. Abstract: Nowadays, databases are mostly used in business applications and financial transactions in banks. Most database servers store confidential and sensitive information of a mobile device. Database forensics is the part of digital forensics concerned with the investigation of different databases and the sensitive information stored in them. Mobile databases are quite different from the major database and are highly platform independent as well. Even if they are not attached to the central database, they can still link with the major database to pull and change the information stored in it. SQLite is the database most commonly needed in Android application development. SQLite is a freely available database management system used to perform relational functions, and it comes built into Android to perform database functions on Android devices. This paper will show how a message can be decrypted by using block cipher modes, and which mode is more secure and faster. Keywords: Database Forensics, Mobile Device, Android, SQLite, Modes, Tools. I. INTRODUCTION On Android mobile phone devices, SQLite is mainly an ACID-compliant relational database management system. A database is an assembled form of interrelated data which is

Implementing Cisco Cyber Security Operations

2019 CLUS. Implementing Cisco Cyber Security Operations. Paul Ostrowski / Patrick Lao / James Risler, Cisco Security Content Development Engineers. LTRCRT-2222. Cisco Webex Teams: Questions? Use Cisco Webex Teams to chat with the speaker after the session. How: 1. Find this session in the Cisco Live Mobile App. 2. Click “Join the Discussion”. 3. Install Webex Teams or go directly to the team space. 4. Enter messages/questions in the team space. Webex Teams will be moderated by the speaker until June 16, 2019. cs.co/ciscolivebot#LTRCRT-2222 © 2019 Cisco and/or its affiliates. All rights reserved. Cisco Public. Agenda: • Goals and Objectives • Prerequisite Knowledge & Skills (PKS) • Introduction to Security Onion • SECOPS Labs and Topologies • Access SECFND / SECOPS eLearning Lab Training Environment • Lab Evaluation • Cisco Cybersecurity Certification and Education Offerings. Goals and Objectives: • Today's organizations are challenged with rapidly detecting cybersecurity breaches in order to effectively respond to security incidents. Cybersecurity provides the critical foundation organizations require to protect themselves, enable trust, move faster, add greater value and grow. • Teams of cybersecurity analysts within Security Operations Centers (SOC) keep a vigilant eye on network security monitoring systems designed to protect their organizations by detecting and responding to cybersecurity threats. • The goal of Cisco’s CCNA Cyber OPS (SECFND / SECOPS) courses is to teach the fundamental skills required to begin a career working as an associate/entry-level cybersecurity analyst within a threat-centric security operations center. • This session will provide the student with an understanding of Security Onion as an open source network security monitoring (NSM) tool.

Design Document for IP Fabrics

Design Document for IP Fabrics. Author: May06-15 (Network Forensic UI): Andy Heintz (Communication Leader), Abraham Devine (Webmaster), Altay Ozen (Team Leader and Team Key Concept Holder), Dr. Joseph Zambreno (Adviser), Curt Schwaderer (Client). Version history (Version, Date, Author, Change): 1.0, 10/26, AH, Created initial version of design document; 2.0, 11/23, AH, Created final version of design document. Table of Contents: 1 Problem Statement; 2 System Design; 2.1 System Requirements; 2.2 Functional Requirements; 2.3 Functional Decomposition; 2.4 System Analysis; 3 Detailed Design; 3.1 Input / Output Specification

Hands-On Network Forensics, FIRST 2015

FM CERT 2015-04-30 WWW.FORSVARSMAKTEN.SE Hands-on Network Forensics. Erik Hjelmvik, Swedish Armed Forces CERT. FIRST 2015, Berlin. Workshop Preparations: 1. Unzip the virtual machine from NetworkForensics_VirtualBox.zip on your USB thumb drive to your local hard drive. 2. Start VirtualBox and run the Security Onion VM. 3. Log in with: user/password. EXTENSIVE USE OF COMMAND LINE IN THIS WORKSHOP. ”Password” Ned. SysAdmin: Homer. PR/Marketing: Krusty the Clown. Password Ned AB = pwned.se. pwned.se Network (diagram): [INTERNET]; Default Gateway 192.168.0.1; [TAP] to Security Onion; PASSWORD-NED-XP 192.168.0.53; www.pwned.se 192.168.0.2; Homer-xubuntu 192.168.0.51; Krustys-PC 192.168.0.54. Security Onion. Paths (also on Cheat Sheet) • PCAP files: /nsm/sensor_data/securityonion_eth1/dailylogs/ • Argus files:

Network Intell: Enabling the Non-Expert Analysis of Large Volumes of Intercepted Network Traffic

Chapter 1. NETWORK INTELL: ENABLING THE NON-EXPERT ANALYSIS OF LARGE VOLUMES OF INTERCEPTED NETWORK TRAFFIC. Erwin van de Wiel, Mark Scanlon and Nhien-An Le-Khac. arXiv:1712.05727v2 [cs.CR] 27 Jan 2018. Abstract: In criminal investigations, telecommunication wiretaps have become a common technique used by law enforcement. While phone-based wiretapping is well documented and the procedures for its execution are well known, the same cannot be said for Internet taps. Lawfully intercepted network traffic often contains a lot of encrypted traffic, making it increasingly difficult to find useful information inside the captured traffic. The advent of the Internet of Things further complicates the process for non-technical investigators. The current level of complexity of intercepted network traffic is close to a point where data cannot be analysed without supervision of a digital investigator with advanced network knowledge. Current investigations focus on analysing all traffic in a chronological manner and are predominantly conducted on the data contents of the intercepted traffic. This approach often becomes overly arduous when the amount of data to be analysed becomes very large. In this paper, we propose a novel approach to analyse large amounts of intercepted network traffic based on network metadata. Our approach significantly reduces the duration of the analysis and also produces an insightful view of the analysis results for the non-technical investigator. We also test our approach with a large sample of network traffic data. Keywords: Network Investigation, Big Data Forensics, Intercepted Network Traffic, Internet tap, Network Metadata Analysis, Non-Technical Investigator. 1. Introduction: Lawful interception is a method that is used by the police force in some countries in almost all middle- to high-level criminal investigations.

Comparing SSD Forensics with HDD Forensics

St. Cloud State University, theRepository at St. Cloud State. Culminating Projects in Information Assurance, Department of Information Systems, 5-2020. Comparing SSD Forensics with HDD Forensics. Varun Reddy Kondam, [email protected]. Follow this and additional works at: https://repository.stcloudstate.edu/msia_etds Recommended Citation: Kondam, Varun Reddy, "Comparing SSD Forensics with HDD Forensics" (2020). Culminating Projects in Information Assurance. 105. https://repository.stcloudstate.edu/msia_etds/105 This Starred Paper is brought to you for free and open access by the Department of Information Systems at theRepository at St. Cloud State. It has been accepted for inclusion in Culminating Projects in Information Assurance by an authorized administrator of theRepository at St. Cloud State. For more information, please contact [email protected]. Comparing SSD Forensics with HDD Forensics, by Varun Reddy Kondam. A Starred Paper Submitted to the Graduate Faculty of St. Cloud State University in Partial Fulfillment of the Requirements for the Degree Master of Science in Information Assurance, May 2020. Starred Paper Committee: Mark Schmidt, Chairperson; Lynn Collen; Sneh Kalia. Abstract: The technological industry is growing at an unprecedented rate; to adequately evaluate this shift in the fast-paced industry, one would first need to deliberate on the differences between the Hard Disk Drive (HDD) and Solid-State Drive (SSD). The HDD is the drive conventionally used to store data, whereas the SSD is a more modern and compact substitute; SSDs are built on flash memory technology, which is the modern-day method of storing data. Though data storage began with HDDs, they proved to be less accessible and stored less data than present-day SSDs, which can easily store up to 1 Terabyte in a minuscule chip-size frame.

Basic Security Testing with Kali Linux 2

Basic Security Testing with Kali Linux. Cover design and photo provided by Moriah Dieterle. Copyright © 2013 by Daniel W. Dieterle. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means without the prior written permission of the publisher. All trademarks, registered trademarks and logos are the property of their respective owners. ISBN-13: 978-1494861278. Thanks to my family for their unending support and prayer, you are truly a gift from God! Thanks to my friends in the infosec & cybersecurity community for sharing your knowledge and time with me. And thanks to my friends in our local book writers club (especially you Bill!), without your input, companionship and advice, this would have never happened. Daniel Dieterle. “It is said that if you know your enemies and know yourself, you will not be imperiled in a hundred battles” - Sun Tzu. “Behold, I send you forth as sheep in the midst of wolves: be ye therefore wise as serpents, and harmless as doves.” - Matthew 10:16 (KJV). About the Author: Daniel W. Dieterle has worked in the IT field for over 20 years. During this time he worked for a computer support company where he provided computer and network support for hundreds of companies across Upstate New York and throughout Northern Pennsylvania. He also worked in a Fortune 500 corporate data center, briefly worked at an Ivy League school’s computer support department and served as an executive at an electrical engineering company. For about the last 5 years Daniel has been completely focused on security.

Automated Control of Distributed Systems

Summer Research Fellowship Programme 2015, Indian Academy of Sciences, Bangalore. PROJECT REPORT: AUTOMATED CONTROL OF DISTRIBUTED SYSTEMS. UNDER THE GUIDANCE OF Dr. B. M. MEHTRE, Associate Professor, Head, Center for Information Assurance and Management (CIAM), Institute for Development and Research in Banking Technology (IDRBT), Hyderabad - 500 057. Submitted by: S. NIVEADHITHA, II Year, B Tech Computer Science Engineering, SRM University, Kattankulathur, Chennai. SRF- ENGS7327 (2015), Indian Academy of Sciences, Bangalore. CERTIFICATE: This is to certify that Ms S Niveadhitha, Student, Second year B Tech Computer Science Engineering, SRM University, Kattankulathur, Chennai has undertaken the Summer Research Fellowship Programme (2015) conducted by Indian Academy of Sciences, Bangalore at IDRBT, Hyderabad from May 25, 2015 to July 20, 2015. She was assigned the project “Automated Control of Distributed Systems” under my guidance. I wish her all the best for all her future endeavours. Dr. B. M. MEHTRE, Associate Professor, Head, Center for Information Assurance and Management (CIAM), Institute for Development and Research in Banking Technology (IDRBT), Hyderabad - 500 057. ACKNOWLEDGMENT: I express my deep sense of gratitude to my Guide Dr. B. M. Mehtre, Associate Professor, Head, CIAM, IDRBT, Hyderabad - 500 057 for giving me a great opportunity to do this project in CIAM, IDRBT and providing all the support. I am thankful to Prof. Dr. B. L. Deekshatulu, Adjunct Professor, IDRBT for his guidance and valuable feedback. I am grateful to Mr. Hiran V Nath, Miss Shashi Sachan and colleagues of CIAM, IDRBT who constantly encouraged me in my project work and supported me by providing all the necessary information. I am indebted to Indian Academy of Sciences, Bangalore, Director, E & T SRM University, and Head, CSE, SRM University, Kattankulathur, Chennai for giving me this golden opportunity to undertake the Summer Research Fellowship Programme at IDRBT.

Evaluating the Availability of Forensic Evidence from Three Idss: Tool Ability

Evaluating the Availability of Forensic Evidence from Three IDSs: Tool Ability. EMAD ABDULLAH ALSAIARI. A thesis submitted to the Faculty of Design and Creative Technologies, Auckland University of Technology, in partial fulfilment of the requirements for the degree of Masters of Forensic Information Technology. School of Engineering, Computer and Mathematical Sciences, Auckland, New Zealand, 2016. Declaration: I hereby declare that this submission is my own work and that, to the best of my knowledge and belief, it contains no material previously published or written by another person nor material which to a substantial extent has been accepted for the qualification of any other degree or diploma of a University or other institution of higher learning, except where due acknowledgement is made in the acknowledgements. Emad Abdullah Alsaiari. Acknowledgement: First and foremost, the researcher would like to thank almighty Allah. Additionally, I would like to thank everyone who helped me to conduct this thesis, starting from my family, supervisor, all relatives and friends. I would also like to express my thorough appreciation to all the members of Saudi Culture Mission for facilitating the process of studying in a foreign country. I would also like to express my thorough appreciation to all the staff of Saudi Culture Mission for facilitating the process of studying in Auckland University of Technology. Especially, the previous head principal of the Saudi Culture Mission Dr. Satam Al-Otaibi for all his motivation, advice and support to students from Saudi in New Zealand, as well as Saudi Arabia Cultural Attaché Dr. Saud Theyab, the head principal of the Saudi Culture Mission.

Enabling Non-Expert Analysis of Large Volumes of Intercepted Network Traffic

Enabling Non-Expert Analysis of Large Volumes of Intercepted Network Traffic. Erwin Wiel, Mark Scanlon, Nhien-An Le-Khac. To cite this version: Erwin Wiel, Mark Scanlon, Nhien-An Le-Khac. Enabling Non-Expert Analysis of Large Volumes of Intercepted Network Traffic. 14th IFIP International Conference on Digital Forensics (DigitalForensics), Jan 2018, New Delhi, India. pp.183-197, 10.1007/978-3-319-99277-8_11. hal-01988846. HAL Id: hal-01988846 https://hal.inria.fr/hal-01988846 Submitted on 22 Jan 2019. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Distributed under a Creative Commons Attribution 4.0 International License. Chapter 11. ENABLING NON-EXPERT ANALYSIS OF LARGE VOLUMES OF INTERCEPTED NETWORK TRAFFIC. Erwin van de Wiel, Mark Scanlon and Nhien-An Le-Khac. Abstract: Telecommunications wiretaps are commonly used by law enforcement in criminal investigations. While phone-based wiretapping has seen considerable success, the same cannot be said for Internet taps. Large portions of intercepted Internet traffic are often encrypted, making it difficult to obtain useful information. The advent of the Internet of Things further complicates network wiretapping. In fact, the current level of complexity of intercepted network traffic is almost at the point where data cannot be analyzed without the active involvement of experts.

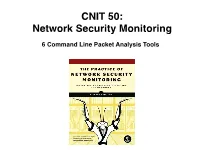

Argus and the Ra Client SO Tool Categories Three Types of Tools

CNIT 50: Network Security Monitoring. 6 Command Line Packet Analysis Tools. Topics: • SO Tool Categories • Running Tcpdump • Using Dumpcap and Tshark • Running Argus and the Ra Client. SO Tool Categories: Three Types of Tools • Data presentation • Data collection • Data delivery. Data Presentation Tools: • Packet Analysis Tools • Read traffic from a live interface or from a saved PCAP file • Command-line: tcpdump, Tshark (with Dumpcap), and Argus Ra Client • Graphical interface: Wireshark, Xplico, and NetworkMiner (see Ch 7). NSM Consoles: • Gateways to NSM data • Sguil, Squert, and ELSA (see Ch 8) • Text discusses Snorby but it's abandoned and no longer included in Security Onion • Links Ch 1e, 1f. Data Collection Tools: • These applications collect and generate the NSM data available to the presentation tools • Argus server, Netsniff-ng, PRADS, Snort, Suricata, and Bro. Argus and PRADS: • Argus server and PRADS create and store their own form of session data • Argus uses a proprietary binary format suited for rapid command-line mining • PRADS data is best read through an NSM console. Netsniff-ng: • Simply writes full-content data to disk in pcap format. Snort and Suricata: • Network intrusion detection systems (NIDS) • Inspect traffic and write alerts • According to signatures deployed with each tool. Bro: • Observes and interprets traffic that has been generated and logged in a variety of NSM datatypes. Data Delivery Tools: • Middleware between the data presentation and data collection tools • PulledPork manages IDS rules • Barnyard2 manages alert processing
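
The excerpt above mentions tools that read traffic "from a saved PCAP file" and that Netsniff-ng writes data "in pcap format". As a rough illustration only, and not anything taken from the slides, the following Python sketch walks a classic little-endian pcap stream and counts its packet records. The function names `count_pcap_packets` and `build_sample_pcap` are hypothetical, the sample data is synthetic, and the sketch assumes the classic pcap layout (24-byte global header, 16-byte per-record header) rather than pcapng.

```python
import io
import struct

PCAP_MAGIC = 0xA1B2C3D4  # classic pcap magic number (microsecond timestamps)


def count_pcap_packets(stream):
    """Return (link_type, packet_count) for a classic little-endian pcap stream."""
    header = stream.read(24)
    if len(header) < 24:
        raise ValueError("truncated pcap global header")
    # magic, version major/minor, thiszone, sigfigs, snaplen, link type
    magic, _maj, _min, _zone, _sigfigs, _snaplen, link_type = struct.unpack(
        "<IHHiIII", header
    )
    if magic != PCAP_MAGIC:
        raise ValueError("not a little-endian classic pcap stream")
    count = 0
    while True:
        record = stream.read(16)
        if len(record) < 16:
            break  # end of file (or a truncated record header)
        # ts_sec, ts_usec, captured length, original length
        _ts_sec, _ts_usec, incl_len, _orig_len = struct.unpack("<IIII", record)
        payload = stream.read(incl_len)
        if len(payload) < incl_len:
            break  # truncated packet data; do not count it
        count += 1
    return link_type, count


def build_sample_pcap(payloads):
    """Build a synthetic in-memory pcap (hypothetical test helper)."""
    out = struct.pack("<IHHiIII", PCAP_MAGIC, 2, 4, 0, 0, 65535, 1)
    for i, data in enumerate(payloads):
        out += struct.pack("<IIII", i, 0, len(data), len(data)) + data
    return out


sample = build_sample_pcap([b"abc", b"defgh"])
link_type, packets = count_pcap_packets(io.BytesIO(sample))
print(link_type, packets)
```

For a real capture, the same function could be given a file opened in binary mode; link type 1 corresponds to Ethernet. Tools such as tcpdump and Tshark do far more (filtering, protocol decoding), so this only shows the container format they read.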

A User-Oriented Network Forensic Analyser: the Design of a High-Level Protocol Analyser D Joy Plymouth University

CORE: Metadata, citation and similar papers at core.ac.uk. Provided by Research Online @ ECU. Edith Cowan University Research Online, Australian Digital Forensics Conference, Conferences, Symposia and Campus Events, 2014. A user-oriented network forensic analyser: The design of a high-level protocol analyser. D Joy, Plymouth University; F Li, Plymouth University; N L. Clarke, Edith Cowan University; S M. Furnell, Edith Cowan University. DOI: 10.4225/75/57b3e511fb87f. Originally published in the Proceedings of the 12th Australian Digital Forensics Conference, held on 1-3 December 2014 at Edith Cowan University, Joondalup Campus, Perth, Western Australia. This Conference Proceeding is posted at Research Online. http://ro.ecu.edu.au/adf/135 A USER-ORIENTED NETWORK FORENSIC ANALYSER: THE DESIGN OF A HIGH-LEVEL PROTOCOL ANALYSER. D. Joy (1), F. Li (1), N.L. Clarke (1, 2) and S.M. Furnell (1, 2). (1) Centre for Security, Communications and Network Research (CSCAN), Plymouth University, Plymouth, United Kingdom. (2) Security Research Institute, Edith Cowan University, Western Australia. [email protected] Abstract: Network forensics is becoming an increasingly important tool in the investigation of cyber and computer-assisted crimes. Unfortunately, whilst much effort has been undertaken in developing computer forensic file system analysers (e.g. Encase and FTK), such focus has not been given to Network Forensic Analysis Tools (NFATs). The single biggest barrier to effective NFATs is the handling of large volumes of low-level traffic and being able to extract and interpret forensic artefacts and their context: for example, being able to extract and render application-level objects (such as emails, web pages and documents) from the low-level TCP/IP traffic, but also to understand how these applications/artefacts are being used.