Measuring and Securing Cryptographic Deployments

Recommended publications

Mihir Bellare Curriculum Vitae Contents

Mihir Bellare, Curriculum vitae, August 2018. Department of Computer Science & Engineering, Mail Code 0404, University of California at San Diego, 9500 Gilman Drive, La Jolla, CA 92093-0404, USA. Phone: (858) 534-4544; E-mail: [email protected]; Web Page: http://cseweb.ucsd.edu/~mihir

Contents: 1 Research areas; 2 Education; 3 Distinctions and Awards; 4 Impact; 5 Grants; 6 Professional Activities; 7 Industrial relations; 8 Work Experience; 9 Teaching; 10 Publications; 11 Mentoring; 12 Personal Information

1 Research areas
∗ Cryptography and security: Provable security; authentication; key distribution; signatures; encryption; protocols.
∗ Complexity theory: Interactive and probabilistically checkable proofs; approximability; complexity of zero-knowledge; randomness in protocols and algorithms; computational learning theory.

2 Education
∗ Massachusetts Institute of Technology. Ph.D. in Computer Science, September 1991. Thesis title: Randomness in Interactive Proofs. Thesis supervisor: Prof. S. Micali.
∗ Massachusetts Institute of Technology. Masters in Computer Science, September 1988. Thesis title: A Signature Scheme Based on Trapdoor Permutations. Thesis supervisor: Prof. S. Micali.
∗ California Institute of Technology. B.S. with honors, June 1986. Subject: Mathematics. GPA 4.0. Class rank 4 out of 227. Summer Undergraduate Research Fellow 1984 and 1985.
∗ Ecole Active Bilingue, Paris, France. Baccalauréat Série C, June 1981.

3 Distinctions and Awards
∗ PET (Privacy Enhancing Technologies) Award 2015 for publication [154].
∗ Fellow of the ACM (Association for Computing Machinery), 2014.
∗ ACM Paris Kanellakis Theory and Practice Award 2009.
∗ RSA Conference Award in Mathematics, 2003.
∗ David and Lucille Packard Foundation Fellowship in Science and Engineering, 1996. (Twenty awarded annually in all of Science and Engineering.)
∗ Test of Time Award, ACM CCS 2011, given for [81] as best paper from ten years prior.

GnuPG Masterclass: You Wouldn’t Want Other People Opening Your Letters and Your Data Is No Different

MASTERCLASS: GNUPG (Ben Everard). You wouldn’t want other people opening your letters and your data is no different. Encrypt it today!

SECURE EMAIL WITH GNUPG AND ENIGMAIL (John Lane). Send encrypted emails from your favourite email client.

Your typical email is about as secure as a postcard, which is good news if you’re a government agency. But you wouldn’t use a postcard for most things sent in the post; you’d use a sealed envelope. Email is no different; you just need an envelope, and it’s called “Encryption”. Since the early 1990s, the main way to encrypt email has been PGP, which stands for “Pretty Good Privacy”. It’s a protocol for the secure encryption of email that has since evolved into an open standard called OpenPGP.

My lovely horse

The GNU Privacy Guard (GnuPG) is a free, GPL-licensed implementation of the OpenPGP standard (there are other implementations, both free and commercial; the PGP name now refers to a commercial product […]

The first thing that you need to do is create a key to represent your identity in the OpenPGP world. You’d typically create one key per identity that you have. Most people would have one identity, being themselves as a person. However, some may find having separate personal and professional identities useful. It’s a personal choice, but starting with a single key will help while you’re learning.

Launch Seahorse and click on the large plus-sign icon that’s just below the menu. Select ‘PGP Key’ and work your way through the screens that follow to supply your name and email address and then generate the key. You can, optionally, use the Advanced Key Options to add a comment that can help others identify your key and to select the cipher, its strength and set when the key should expire.

Using Frankencerts for Automated Adversarial Testing of Certificate

Using Frankencerts for Automated Adversarial Testing of Certificate Validation in SSL/TLS Implementations. Chad Brubaker (Google; The University of Texas at Austin), Suman Jana (The University of Texas at Austin), Baishakhi Ray (University of California, Davis), Sarfraz Khurshid (The University of Texas at Austin), Vitaly Shmatikov (The University of Texas at Austin).

Abstract: Modern network security rests on the Secure Sockets Layer (SSL) and Transport Layer Security (TLS) protocols. Distributed systems, mobile and desktop applications, embedded devices, and all of secure Web rely on SSL/TLS for protection against network attacks. This protection critically depends on whether SSL/TLS clients correctly validate X.509 certificates presented by servers during the SSL/TLS handshake protocol. We design, implement, and apply the first methodology for large-scale testing of certificate validation logic in SSL/TLS implementations. Our first ingredient is “frankencerts,” synthetic certificates that are randomly mutated from parts of real certificates and thus include unusual combinations of extensions and constraints. Our second ingredient is differential testing: if […]

[…] many open-source implementations of SSL/TLS are available for developers who need to incorporate SSL/TLS into their software: OpenSSL, NSS, GnuTLS, CyaSSL, PolarSSL, MatrixSSL, cryptlib, and several others. Several Web browsers include their own, proprietary implementations. In this paper, we focus on server authentication, which is the only protection against man-in-the-middle and other server impersonation attacks, and thus essential for HTTPS and virtually any other application of SSL/TLS. Server authentication in SSL/TLS depends entirely on a single step in the handshake protocol. As part of its “Server Hello” message, the server presents an X.509 certificate with its public key.

Horizontal PDF Slides

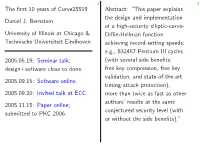

The first 10 years of Curve25519. Daniel J. Bernstein, University of Illinois at Chicago & Technische Universiteit Eindhoven.

2005.05.19: Seminar talk; design+software close to done.
2005.09.15: Software online.
2005.09.20: Invited talk at ECC.
2005.11.15: Paper online; submitted to PKC 2006.

Abstract: “This paper explains the design and implementation of a high-security elliptic-curve-Diffie-Hellman function achieving record-setting speeds: e.g., 832457 Pentium III cycles (with several side benefits: free key compression, free key validation, and state-of-the-art timing-attack protection), more than twice as fast as other authors’ results at the same conjectured security level (with or without the side benefits).”

Elliptic-curve computations […]

Cryptographic Agility in Practice Emerging Use-Cases

CRYPTOGRAPHIC AGILITY IN PRACTICE: EMERGING USE-CASES. Use cases whitepaper. Authors: Tyson Macaulay, Chief Product Officer, InfoSec Global; Richard Henderson, Chief Technology Officer North America, InfoSec Global.

Summary: Cryptography is the bedrock upon which modern data and communications security rests. Cryptography provides the ability to not just keep data and communications confidential, but also underlies virtually all means to establish the identity of people and things in a virtualized world. But cryptography has historically been static: “set and forget”. The world has changed while cryptography has not, and there is a pressing need to move towards dynamically managed and agile cryptographic systems: “cryptographic agility”. Data transparently moves in and out of trusted and untrusted hardware and software, within complex, integrated IoT/IIoT (Internet of Things/Industrial Internet of Things) devices and platforms, and across international borders. Once deployed or implemented, virtually all of these are unable to make changes to their digital identities or the cryptography they use without a hands-on, bespoke process of re-installation, re-configuration, and downtime... if fixes and updates are available at all.

What does this mean? For devices and platforms, a cryptographic vulnerability may require that the device be replaced or discarded, or that additional in-line infrastructure be deployed to mitigate the threat. For applications and systems, patches must be applied, systems shut down, and expensive human expertise contracted to make it all work. This “static” cryptographic security model has already caused substantial public safety, consumer privacy, financial, and health risks, all associated with device and system vulnerabilities exploited by malicious actors. For now, there are no standards and few tools for the management of cryptography.

Securing Email Through Online Social Networks

SECURING EMAIL THROUGH ONLINE SOCIAL NETWORKS. Atieh Saberi Pirouz. A thesis in the Department of Concordia Institute for Information Systems Engineering (CIISE), presented in partial fulfillment of the requirements for the degree of Master of Applied Science (Information Systems Security) at Concordia University, Montréal, Québec, Canada. August 2013. © Atieh Saberi Pirouz, 2013.

Concordia University, School of Graduate Studies. This is to certify that the thesis prepared by Atieh Saberi Pirouz, entitled Securing Email Through Online Social Networks and submitted in partial fulfillment of the requirements for the degree of Master of Applied Science (Information Systems Security), complies with the regulations of this University and meets the accepted standards with respect to originality and quality. Signed by the final examining committee: Dr. Benjamin C. M. Fung, Chair; Dr. Lingyu Wang, Examiner; Dr. Zhenhua Zhu, Examiner; Dr. Mohammad Mannan, Supervisor. Approved: Chair of Department or Graduate Program Director. Dr. Christopher Trueman, Dean, Faculty of Engineering and Computer Science.

Abstract: Despite being one of the most basic and popular Internet applications, email still largely lacks user-to-user cryptographic protections. From a research perspective, designing privacy preserving techniques for email services is complicated by the requirement of balancing security and ease-of-use needs of everyday users. For example, users cannot be expected to manage long-term keys (e.g., PGP key-pair), or understand crypto primitives. To enable intuitive email protections for a large number of users, we design FriendlyMail by leveraging existing pre-authenticated relationships between a sender and receiver on an Online Social Networking (OSN) site, so that users can send secure emails without requiring direct key exchange with the receiver in advance.

Mining of Massive Datasets

Mining of Massive Datasets. Anand Rajaraman (Kosmix, Inc.), Jeffrey D. Ullman (Stanford Univ.). Copyright © 2010, 2011 Anand Rajaraman and Jeffrey D. Ullman.

Preface: This book evolved from material developed over several years by Anand Rajaraman and Jeff Ullman for a one-quarter course at Stanford. The course CS345A, titled “Web Mining,” was designed as an advanced graduate course, although it has become accessible and interesting to advanced undergraduates.

What the Book Is About: At the highest level of description, this book is about data mining. However, it focuses on data mining of very large amounts of data, that is, data so large it does not fit in main memory. Because of the emphasis on size, many of our examples are about the Web or data derived from the Web. Further, the book takes an algorithmic point of view: data mining is about applying algorithms to data, rather than using data to “train” a machine-learning engine of some sort. The principal topics covered are:
1. Distributed file systems and map-reduce as a tool for creating parallel algorithms that succeed on very large amounts of data.
2. Similarity search, including the key techniques of minhashing and locality-sensitive hashing.
3. Data-stream processing and specialized algorithms for dealing with data that arrives so fast it must be processed immediately or lost.
4. The technology of search engines, including Google’s PageRank, link-spam detection, and the hubs-and-authorities approach.
5. Frequent-itemset mining, including association rules, market-baskets, the A-Priori Algorithm and its improvements.
6. […]

Linux Administrators Security Guide LASG - 0.1.1

Linux Administrators Security Guide LASG - 0.1.1. By Kurt Seifried ([email protected]), copyright 1999, All rights reserved. Available at: https://www.seifried.org/lasg/. This document is free for most non-commercial uses; the license follows the table of contents, so please read it if you have any concerns. If you have any questions, email [email protected]. A mailing list is available: send an email to [email protected] with "subscribe lasg-announce" in the body (no quotes) and you will be automatically added.

Table of contents: License Preface Foreword by the author Contributing What this guide is and isn't How to determine what to secure and how to secure it Safe installation of Linux Choosing your install media It ain't over 'til... General concepts, server versus workstations, etc Physical / Boot security Physical access The computer BIOS LILO The Linux kernel Upgrading and compiling the kernel Kernel versions Administrative tools Access Telnet SSH LSH REXEC NSH Slush SSL Telnet Fsh secsh Local YaST sudo Super Remote Webmin Linuxconf COAS System Files /etc/passwd /etc/shadow /etc/groups /etc/gshadow /etc/login.defs /etc/shells /etc/securetty Log files and other forms of monitoring General log security sysklogd / klogd secure-syslog next generation syslog Log monitoring logcheck colorlogs WOTS swatch Kernel logging auditd Shell logging bash Shadow passwords Cracking passwords John the ripper Crack Saltine cracker VCU PAM Software Management RPM dpkg tarballs / tgz Checking file integrity RPM dpkg PGP MD5 Automatic

Oracle Solaris 11.4 Security Target, V1.3

Oracle Solaris 11.4 Security Target, Version 1.3, February 2021. Document prepared by www.lightshipsec.com

Document History (Version, Date, Author, Description):
1.0, 09 Nov 2020, G Nickel, Update TOE version
1.1, 19 Nov 2020, G Nickel, Update IDR version
1.2, 25 Jan 2021, L Turner, Update TLS and SSH.
1.3, 8 Feb 2021, L Turner, Finalize for certification.

Table of Contents
1 Introduction
1.1 Overview
1.2 Identification
1.3 Conformance Claims
1.4 Terminology
2 TOE Description
2.1 Type
2.2 Usage
2.3 Logical Scope

Security + Encryption Standards

Security + Encryption Standards. Author: Joseph Lee. Email: joseph@ripplesoftware.ca. Mobile: 778-725-3206.

General Concepts

Forward secrecy / perfect forward secrecy
• Using a key exchange to provide a new key for each session improves forward secrecy: if an attacker later discovers the keys, data from past sessions cannot be compromised with them
Confusion
• Cipher-text is significantly different from the original plaintext data
• The property of confusion hides the relationship between the cipher-text and the key
Diffusion
• The principle that small changes in the message plaintext result in large changes in the cipher-text
• The idea of diffusion is to hide the relationship between the cipher-text and the plaintext
Secret-algorithm
• A proprietary algorithm that is not publicly disclosed
• This is discouraged because it cannot be reviewed
Weak / deprecated algorithms
• An algorithm that can be easily "cracked" or defeated by an attacker
High-resiliency
• Refers to the strength of the encryption key if an attacker discovers part of the key
Data-in-transit
• Data sent over a network
Data-at-rest
• Data stored on a medium
Data-in-use
• Data being used by an application / computer system
Out-of-band KEX
• Using a medium / channel for key exchange other than the one on which the data transfer takes place (phone, email, snail mail)
In-band KEX
• Using the same medium / channel for key exchange on which the data transfer takes place
Integrity
• Ability to determine the message has not been altered
• Hashing algorithms manage
Authenticity
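The diffusion property listed above can be observed with a small experiment. The sketch below is only an illustration under stated assumptions: it uses SHA-256 from Python's standard library as a stand-in, because the standard library ships no block cipher, and a hash's avalanche behaviour is analogous to, not the same thing as, diffusion in a cipher. The helper names `hamming_distance` and `flip_bit` and the sample message are hypothetical.

```python
import hashlib


def hamming_distance(a: bytes, b: bytes) -> int:
    """Count the bit positions in which two equal-length byte strings differ."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


def flip_bit(data: bytes, bit_index: int) -> bytes:
    """Return a copy of data with exactly one bit inverted."""
    buf = bytearray(data)
    buf[bit_index // 8] ^= 1 << (bit_index % 8)
    return bytes(buf)


message = b"attack at dawn"          # arbitrary sample input
tweaked = flip_bit(message, 0)       # differs from message in a single bit

digest_a = hashlib.sha256(message).digest()
digest_b = hashlib.sha256(tweaked).digest()

# With good avalanche behaviour, roughly half of the 256 output bits change.
changed = hamming_distance(digest_a, digest_b)
print(f"{changed} of {len(digest_a) * 8} output bits changed")
```

A one-bit change in the input should flip a number of output bits close to 128; a value near 0 or near 256 would indicate a visible relationship between input and output.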

Fast Elliptic Curve Cryptography in Openssl

Fast Elliptic Curve Cryptography in OpenSSL. Emilia Käsper (Google; Katholieke Universiteit Leuven, ESAT/COSIC), [email protected]

Abstract. We present a 64-bit optimized implementation of the NIST and SECG-standardized elliptic curve P-224. Our implementation is fully integrated into OpenSSL 1.0.1: full TLS handshakes using a 1024-bit RSA certificate and ephemeral Elliptic Curve Diffie-Hellman key exchange over P-224 now run at twice the speed of standard OpenSSL, while atomic elliptic curve operations are up to 4 times faster. In addition, our implementation is immune to timing attacks; most notably, we show how to do small table look-ups in a cache-timing resistant way, allowing us to use precomputation. To put our results in context, we also discuss the various security-performance trade-offs available to TLS applications.

Keywords: elliptic curve cryptography, OpenSSL, side-channel attacks, fast implementations

1 Introduction

1.1 Introduction to TLS

Transport Layer Security (TLS), the successor to Secure Socket Layer (SSL), is a protocol for securing network communications. In its most common use, it is the “S” (standing for “Secure”) in HTTPS. Two of the most popular open-source cryptographic libraries implementing SSL and TLS are OpenSSL [19] and Mozilla Network Security Services (NSS) [17]: OpenSSL is found in, e.g., the Apache-SSL secure web server, while NSS is used by Mozilla Firefox and Chrome web browsers, amongst others. TLS provides authentication between connecting parties, as well as encryption of all transmitted content. Thus, before any application data is transmitted, peers perform authentication and key exchange in a TLS handshake.
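The abstract mentions table look-ups done in a cache-timing resistant way without giving details in this excerpt. One widely used idea, assumed here and not taken from the paper, is to read every table entry and keep the wanted one with a bit mask, so that the sequence of memory accesses does not depend on the secret index. The sketch below shows only that access pattern; Python itself gives no timing guarantees, and the function name `select_all_entries` is hypothetical.

```python
MASK64 = (1 << 64) - 1  # entries are assumed to be unsigned 64-bit values


def select_all_entries(table, secret_index):
    """Return table[secret_index] while touching every entry of the table.

    Assumes 0 <= secret_index < len(table) and 0 <= entry < 2**64.
    """
    result = 0
    for i, entry in enumerate(table):
        diff = i ^ secret_index
        # diff - 1 is negative only when diff == 0, so the arithmetic shift
        # gives -1 (all ones) for the matching position and 0 for the rest.
        mask = ((diff - 1) >> 64) & MASK64
        # Non-matching entries are masked to zero before being merged in.
        result |= entry & mask
    return result


precomputed = [11, 22, 33, 44, 55, 66, 77, 88]
print(select_all_entries(precomputed, 5))
```

The cost is a full pass over the table for every look-up, which is why the technique is usually applied to small tables.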

Cryptanalysis of the Random Number Generator of the Windows Operating System

Cryptanalysis of the Random Number Generator of the Windows Operating System. Leo Dorrendorf, School of Engineering and Computer Science, The Hebrew University of Jerusalem, 91904 Jerusalem, Israel, [email protected]. Zvi Gutterman, School of Engineering and Computer Science, The Hebrew University of Jerusalem, 91904 Jerusalem, Israel, [email protected]. Benny Pinkas, Department of Computer Science, University of Haifa, 31905 Haifa, Israel, [email protected]. November 4, 2007.

Abstract: The pseudo-random number generator (PRNG) used by the Windows operating system is the most commonly used PRNG. The pseudo-randomness of the output of this generator is crucial for the security of almost any application running in Windows. Nevertheless, its exact algorithm was never published. We examined the binary code of a distribution of Windows 2000, which is still the second most popular operating system after Windows XP. (This investigation was done without any help from Microsoft.) We reconstructed, for the first time, the algorithm used by the pseudo-random number generator (namely, the function CryptGenRandom). We analyzed the security of the algorithm and found a non-trivial attack: given the internal state of the generator, the previous state can be computed in O(2^23) work (this is an attack on the forward-security of the generator; an O(1) attack on backward security is trivial). The attack on forward-security demonstrates that the design of the generator is flawed, since it is well known how to prevent such attacks. We also analyzed the way in which the generator is run by the operating system, and found that it amplifies the effect of the attacks: The generator is run in user mode rather than in kernel mode, and therefore it is easy to access its state even without administrator privileges.
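The abstract states that preventing recovery of earlier states is well understood but does not say how. One common construction, assumed here for illustration and neither the Windows algorithm nor the authors' proposal, advances the state through a one-way function after every output, so that a captured state does not reveal the states that preceded it. The class name `RatchetingGenerator` and the domain-separation labels are hypothetical, and the fixed seed is for demonstration only; a real generator would be seeded from operating-system entropy.

```python
import hashlib


class RatchetingGenerator:
    """Toy generator whose state is replaced by its own hash after each output."""

    def __init__(self, seed: bytes):
        self._state = hashlib.sha256(b"init" + seed).digest()

    def next_block(self) -> bytes:
        # Output and next state are derived with different labels, so seeing
        # an output does not directly reveal the state that follows it.
        output = hashlib.sha256(b"out" + self._state).digest()
        # One-way update: going back from the new state to the old one would
        # require inverting SHA-256.
        self._state = hashlib.sha256(b"next" + self._state).digest()
        return output


gen = RatchetingGenerator(b"demo seed, not secret")
first = gen.next_block()
second = gen.next_block()
print(first.hex())
print(second.hex())
```

Under this design, an attacker who reads the state after the second call can predict later outputs but would have to invert the hash to reconstruct `first`, which is the property the abstract calls forward-security.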