Sequential Learning of Product Recommendations with Customer Disengagement

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Signatory ID Name CIN Company Name 02700003 RAM TIKA

| Signatory ID | Name | CIN | Company Name |
|---|---|---|---|
| 02700003 | RAM TIKA | U55101DL1998PTC094457 | RVS HOTELS AND RESORTS |
| 02700032 | BANSAL SHYAM SUNDER | U70102AP2005PTC047718 | SHREEMUKH PROPERTIES PRIVATE |
| 02700065 | CHHIBA SAVITA | U01100MH2004PTC150274 | DEJA VU FARMS PRIVATE LIMITED |
| 02700070 | PARATE VIJAYKUMAR | U45200MH1993PTC072352 | PARATE DEVELOPERS P LTD |
| 02700076 | BHARATI GHOSH | U85110WB2007PTC118976 | ACCURATE MEDICARE & |
| 02700087 | JAIN MANISH RAJMAL | U45202MH1950PTC008342 | LEO ESTATES PRIVATE LIMITED |
| 02700109 | NATESAN RAMACHANDRAN | U51505TN2002PTC049271 | RESHMA ELECTRIC PRIVATE |
| 02700110 | JEGADEESAN MAHENDRAN | U51505TN2002PTC049271 | RESHMA ELECTRIC PRIVATE |
| 02700126 | GUPTA JAGDISH PRASAD | U74210MP2003PTC015880 | GOPAL SEVA PRIVATE LIMITED |
| 02700155 | KRISHNAKUMARAN NAIR | U45201GJ1994PTC021976 | SHARVIL HOUSING PVT LTD |
| 02700157 | DHIREN OZA VASANTLAL | U45201GJ1994PTC021976 | SHARVIL HOUSING PVT LTD |
| 02700183 | GUPTA KEDAR NATH | U72200AP2004PTC044434 | TRAVASH SOFTWARE SOLUTIONS |
| 02700187 | KUMARASWAMY KUNIGAL | U93090KA2006PLC039899 | EMERALD AIRLINES LIMITED |
| 02700216 | JAIN MANOJ | U15400MP2007PTC020151 | CHAMBAL VALLEY AGRO |
| 02700222 | BHAIYA SHARAD | U45402TN1996PTC036292 | NORTHERN TANCHEM PRIVATE |
| 02700226 | HENDIN URI ZIPORI | U55101HP2008PTC030910 | INNER WELLSPRING HOSPITALITY |
| 02700266 | KUMARI POLURU VIJAYA | U60221PY2001PLC001594 | REGENCY TRANSPORT CARRIERS |
| 02700285 | DEVADASON NALLATHAMPI | U72200TN2006PTC059044 | ZENTERE SOLUTIONS PRIVATE |
| 02700322 | GOPAL KAKA RAM | U01400UP2007PTC033194 | KESHRI AGRI GENETICS PRIVATE |
| 02700342 | ASHISH OBERAI | U74120DL2008PTC184837 | ASTHA LAND SCAPE PRIVATE |
| 02700354 | MADHUSUDHANA REDDY | U70200KA2005PTC036400 | |

Scientometric Portrait of Child Sexual Abuse Research in 21st Century India

University of Nebraska - Lincoln DigitalCommons@University of Nebraska - Lincoln Library Philosophy and Practice (e-journal) Libraries at University of Nebraska-Lincoln Winter 2-2021 Scientometric Portrait of Child Sexual Abuse Research in 21st Century India Soumita Mitra Arindam Sarkar [email protected] Ashok Pal Dr. Institute of Development Studies Kolkata, Salt Lake, Kolkata-700064, [email protected] Follow this and additional works at: https://digitalcommons.unl.edu/libphilprac Part of the Library and Information Science Commons Mitra, Soumita; Sarkar, Arindam; and Pal, Ashok Dr., "Scientometric Portrait of Child Sexual Abuse Research in 21st Century India" (2021). Library Philosophy and Practice (e-journal). 5047. https://digitalcommons.unl.edu/libphilprac/5047 Scientometric Portrait of Child Sexual Abuse Research in 21st Century India Soumita Mitra MLIS (Digital Library), Dept. of Library & Information Science, Jadavpur University Email:[email protected] Arindam Sarkar PhD Research Scholar of Department of Library and Information Science, Jadavpur University, Kolkata-700032 E-mail: [email protected] ORCID: 0000-0002-8728-3378 Dr. Ashok Pal Assistant Librarian, Institute of Development Studies Kolkata, Salt Lake, Kolkata-700064 ORCID: 0000-0002-8428-6864 Abstract: Sexual abuse during childhood can destroy the backbone of a society because children are the future citizens. Significant research in this area is desirable to increase awareness against this heinous crime. The present scientometric study has been conducted to present the growth of research in this subject domain in India. Data analysis reveals that among the total 300 articles published on this topic, the highest number of publications i.e. 42 was published in 2015 and year 2001 has published only 2 articles. -

The Conference Brochure

The Many Lives of Indian Cinema: 1913-2013 and beyond. Centre for the Study of Developing Societies, Delhi, 9-11 January 2014. Credits. Concept: Ravi Vasudevan. Production: Ishita Tiwary. Operations: Ashish Mahajan. Programme coordinator: Tanveer Kaur. Infrastructure: Sachin Kumar, Vikas Chaurasia. Consultant: Ravikant. Audio-visual Production: Ritika Kaushik. Print Design: Mrityunjay Chatterjee. Cover Image: Mrityunjay Chatterjee. Back Cover Image: Shahid Datawala, Sarai Archive. Staff of the Centre for the Study of Developing Societies. We gratefully acknowledge support from the following institutions: Indian Council for Social Science Research; Arts and Humanities Research Council; Research Councils UK; Goethe Institute, Delhi; Indian Council for Historical Research; Sage Publishing. Doordarshan have generously extended media partnership to the conference. Images in the brochure are selected from Sarai Archive collections. The Idea: Remembering legendary beginnings provides us the occasion to redefine and make contemporary the history we set out to honour. We need to complicate the idea of origins and 'firsts' because they highlight some dimensions of film culture and usage over others, and obscure the wider network of media technologies, cultural practices, and audiences which made cinema possible. In India, it is a matter of debate whether D.G. Phalke's Raja Harishchandra (1913), popularly referred to as the first Indian feature film, deserves that accolade. As Rosie Thomas has shown, earlier instances of the story film can be identified, including Alibaba (Hiralal Sen, 1903), an Arabian Nights fantasy which would point to the presence of a different cultural universe from that provided by Phalke's Hindu mythological film. Such a revisionary history is critical to our research agenda. -

Voluntary Nature Conservancy: 7th Charotar Crocodile Count-2020, Counting Crocodile for Conservation

7th Charotar Crocodile Count: Counting Crocodile for Conservation, 3rd-5th January 2020, Vallabh Vidyanagar, Gujarat. Voluntary Nature Conservancy, www.vncindia.org. A Citizen Science Initiative by Voluntary Nature Conservancy. Suggested Citation: Voluntary Nature Conservancy (2020). 7th Charotar Crocodile Count-2020, Voluntary Nature Conservancy, Vallabh Vidyanagar, Gujarat, India. Pp. 24. Report Design & Preparation: Anirudh Vasava. Cover Photo: Anirudh Vasava. Back Cover Photo: Shubham Parmar. Supporter: Duleep Matthai Nature Conservation Trust. Photo By: Jai Suthar / Voluntary Nature Conservancy. Voluntary Nature Conservancy, 101- Radha Darshan, Behind Union Bank of India, Vallabh Vidyanagar-388120, Gujarat, India. [email protected] / www.vncindia.org / Phone No. - (+91) 9898142170. IMPORTANCE OF CHAROTAR REGION: Charotar region is significant for sustenance of mugger population of Charotar and for meta-population of Central Gujarat. The region is also an important dispersal and breeding ground for muggers between the meta-populations in Central Gujarat. CROCODILE POPULATION ASSESSMENT METHOD: VNC uses an approach called “Citizen Science” to count crocodiles in Charotar. Citizen Science is an exciting, multifaceted way to bring people from all walks of life together for research and conservation. HOW DOES THIS CROC COUNT HELP? The count provides an ideal opportunity to understand the significance of biodiversity and how villagers harmoniously coexist with muggers. WHAT’S CHAROTAR CROCODILE COUNT? Charotar Crocodile Count, born in 2013, is an annual event, designed to bring together a diverse set of participants to understand the importance of muggers and wetland in the present day conserva-

Role of Media and Usage of Films and Documentaries As Political Tool

© 2018 JETIR June 2018, Volume 5, Issue 06 www.jetir.org (ISSN-2349-5162) Role of Media and Usage of Films and Documentaries as Political Tool. DR. VIKRAMJIT SINGH, ASSOCIATE PROFESSOR, DEPARTMENT OF POLITICAL SCIENCE, DAYANAND COLLEGE, HISAR, HARYANA, INDIA. Abstract: Nowadays, political parties widely use print as well as digital media for propaganda to gain political advantage. A number of movies and documentaries are intentionally released so that political benefits can be taken. This manuscript addresses case scenarios of such movies and of film-based propaganda so that the overall motives and goals of the political parties can be identified. The paper cites case studies and scenarios of assorted films and documentaries so that the theme can be addressed clearly, with references and proof for the work mentioned. In the current scenario, political parties, whether in opposition or ruling, gain huge benefits by exploiting the emotions of the general public, using films to defame or promote a particular person or party. In this way, the overall integrity of movies and Bollywood is affected in favor of particular political benefits. Keywords: Propaganda Movie, Role of Media in Politics, Cinema as Political Tool. Introduction: Filmmakers are nowadays more focused on polishing the image of a particular person or defaming the image of particular parties, and this is visible in assorted movies, specifically at the time of elections, but exploiting the image of particular parties is not good practice. -

State District Branch Address Centre Ifsc Contact1 Contact2 Contact3 Micr Code

STATE DISTRICT BRANCH ADDRESS CENTRE IFSC CONTACT1 CONTACT2 CONTACT3 MICR_CODE ANDAMAN NO 26. MG ROAD AND ABERDEEN BAZAR , NICOBAR PORT BLAIR -744101 704412829 704412829 ISLAND ANDAMAN PORT BLAIR ,A & N ISLANDS PORT BLAIR IBKL0001498 8 7044128298 8 744259002 UPPER GROUND FLOOR, #6-5-83/1, ANIL ANIL NEW BUS STAND KUMAR KUMAR ANDHRA ROAD, BHUKTAPUR, 897889900 ANIL KUMAR 897889900 PRADESH ADILABAD ADILABAD ADILABAD 504001 ADILABAD IBKL0001090 1 8978899001 1 1ST FLOOR, 14- 309,SREERAM ENCLAVE,RAILWAY FEDDER ROADANANTAPURA ANDHRA NANTAPURANDHRA ANANTAPU 08554- PRADESH ANANTAPUR ANANTAPUR PRADESH R IBKL0000208 270244 D.NO.16-376,MARKET STREET,OPPOSITE CHURCH,DHARMAVA RAM- 091 ANDHRA 515671,ANANTAPUR DHARMAVA 949497979 PRADESH ANANTAPUR DHARMAVARAM DISTRICT RAM IBKL0001795 7 515259202 SRINIVASA SRINIVASA IDBI BANK LTD, 10- RAO RAO 43, BESIDE SURESH MYLAPALL SRINIVASA MYLAPALL MEDICALS, RAILWAY I - RAO I - ANDHRA STATION ROAD, +91967670 MYLAPALLI - +91967670 PRADESH ANANTAPUR GUNTAKAL GUNTAKAL - 515801 GUNTAKAL IBKL0001091 6655 +919676706655 6655 18-1-138, M.F.ROAD, AJACENT TO ING VYSYA BANK, HINDUPUR , ANANTAPUR DIST - 994973715 ANDHRA PIN:515 201 9/98497191 PRADESH ANANTAPUR HINDUPUR ANDHRA PRADESH HINDUPUR IBKL0001162 17 515259102 AGRICULTURE MARKET COMMITTEE, ANANTAPUR ROAD, TADIPATRI, 085582264 ANANTAPUR DIST 40 ANDHRA PIN : 515411 /903226789 PRADESH ANANTAPUR TADIPATRI ANDHRA PRADESH TADPATRI IBKL0001163 2 515259402 BUKARAYASUNDARA M MANDAL,NEAR HP GAS FILLING 91 ANDHRA STATION,ANANTHAP ANANTAPU 929710487 PRADESH ANANTAPUR VADIYAMPETA UR -

In Re Eros International Securities Litigation 15-CV-08956-Amended

Case 1:15-cv-08956-AJN Document 68 Filed 10/10/16 Page 1 of 122. UNITED STATES DISTRICT COURT, SOUTHERN DISTRICT OF NEW YORK. In re EROS INTERNATIONAL SECURITIES LITIGATION. Master File No. 15 Civ. 8956 (AJN). This Document Relates To: ALL ACTIONS. AMENDED CONSOLIDATED CLASS ACTION COMPLAINT FOR VIOLATIONS OF THE FEDERAL SECURITIES LAWS. Jury Trial Demanded. Michael W. Stocker, David J. Goldsmith, Barry Michael Okun, Alfred L. Fatale III, LABATON SUCHAROW LLP, 140 Broadway, New York, New York 10005. Tel.: (212) 907-0700 Fax: (212) 818-0477. Attorneys for Lead Plaintiffs Fred Eisner and Strahinja Ivoševič and Lead Counsel for the Class. TABLE OF CONTENTS: I. NATURE OF THE ACTION; II. JURISDICTION AND VENUE; III. PARTIES; IV. FACTUAL BACKGROUND AND SUBSTANTIVE ALLEGATIONS; A. Company Background; B. The Lulla Family’s Extensive Related-Party Transactions; C. Eros Goes -

In Re Eros International Securities Litigation 15-CV-08956

Case 1:15-cv-08956-AJN Document 60 Filed 07/15/16 Page 1 of 111. UNITED STATES DISTRICT COURT, SOUTHERN DISTRICT OF NEW YORK. In re EROS INTERNATIONAL SECURITIES LITIGATION. Master File No. 15 Civ. 8956 (AJN). This Document Relates To: ALL ACTIONS. CONSOLIDATED CLASS ACTION COMPLAINT FOR VIOLATIONS OF THE FEDERAL SECURITIES LAWS. Jury Trial Demanded. Michael W. Stocker, David J. Goldsmith, Barry Michael Okun, LABATON SUCHAROW LLP, 140 Broadway, New York, New York 10005. Tel.: (212) 907-0700 Fax: (212) 818-0477. Attorneys for Lead Plaintiffs Fred Eisner and Strahinja Ivoševič and Lead Counsel for the Class. TABLE OF CONTENTS: I. NATURE OF THE ACTION; II. JURISDICTION AND VENUE; III. PARTIES; IV. FACTUAL BACKGROUND AND SUBSTANTIVE ALLEGATIONS; A. Company Background; B. The Lulla Family’s Extensive Related-Party Transactions; C. Eros Goes Public -

Playback Singers of Bollywood and Hollywood

ILLUSION AND REALITY: PLAYBACK SINGERS OF BOLLYWOOD AND HOLLYWOOD By MYRNA JUNE LAYTON Submitted in accordance with the requirements for the degree of DOCTOR OF LITERATURE AND PHILOSOPHY in the subject MUSICOLOGY at the UNIVERSITY OF SOUTH AFRICA. SUPERVISOR: PROF. M. DUBY JANUARY 2013 Student number: 4202027-1 I declare that ILLUSION AND REALITY: PLAYBACK SINGERS OF BOLLYWOOD AND HOLLYWOOD is my own work and that all the sources that I have used or quoted have been indicated and acknowledged by means of complete references. SIGNATURE DATE (MRS M LAYTON) Preface I would like to thank the two supervisors who worked with me at UNISA, Marie Jorritsma who got me started on my reading and writing, and Marc Duby, who saw me through to the end. Marni Nixon was most helpful to me, willing to talk with me and discuss her experiences recording songs for Hollywood studios. It was much more difficult to locate people who work in the Bollywood film industry, but two people were helpful and willing to talk to me; Craig Pruess and Deepa Nair have been very gracious about corresponding with me via email. Lastly, putting the whole document together, which seemed an overwhelming chore, was made much easier because of the expert formatting and editing of my daughter Nancy Heiss, sometimes assisted by her husband Andrew Heiss. Joe Bonyata, a good friend who also happens to be an editor and publisher, did a final read-through to look for small details I might have missed, and of course, he found plenty. For this I am grateful. -

Film Preservation & Restoration Workshop

FILM PRESERVATION & RESTORATION WORKSHOP, INDIA 2016. Cover Image: Ulagam Sutrum Valiban, 1973, Tamil (M. G. Ramachandran). Courtesy: Gnanam. FILM PRESERVATION & RESTORATION WORKSHOP INDIA 2017, OCT 7TH - 14TH, Prasad Film Laboratories, 58, Arunachalam Road, Saligramam, Chennai 600 093. Vedhala Ulagam, 1948, Tamil. The Film Preservation & Restoration Workshop India 2017 (FPRWI 2017) is an initiative of Film Heritage Foundation (FHF) and The International Federation of Film Archives (FIAF) in association with The Film Foundation’s World Cinema Project, L’Immagine Ritrovata, The Academy of Motion Picture Arts & Sciences, Prasad Corp., La Cinémathèque française, Imperial War Museums, Fondazione Cineteca di Bologna, The National Audiovisual Institute of Finland (KAVI), Národní Filmový Archiv (NFA), Czech Republic and The Criterion Collection to provide training in the specialized skills required to safeguard India’s cinematic heritage. The seven-day course designed by David Walsh, Head of the FIAF Training and Outreach Program, will cover both theory and practical classes in the best practices of the preservation and restoration of both filmic and non-filmic material and daily screenings of restored classics from around the world. Lectures and practical sessions will be conducted by leading archivists and restorers from preeminent institutions from around the world. Preparatory reading material will be shared with selected candidates in seven modules beginning two weeks prior to the commencement of the workshop. The goal of the programme is to continue our commitment for the third successive year to train an indigenous pool of film archivists and restorers as well as to build on the movement we have created all over India and in our neighbouring countries to preserve the moving image legacy. -

List for Website Updation

Investor First Investor Last Amount to be Proposed date of Name Investor Middle Name Name Address Country State District Pin Code Folio Number transferred Transfer to IEPF NILKANTH,870/2B,BHANDARKAR MR HARISHCHANDRAKOLTE NA ROAD, PUNE INDIA MAHARASHTRA PUNE 411004 PTSE0000093 294000.00 31-AUG-2020 NATIONAL HSG.SOC,GATE NO.2,HOUSE NO.44, BANER MR DILIPCHAVAN NA RD.PUNE INDIA MAHARASHTRA PUNE 411007 PTSE0000176 1050.00 31-AUG-2020 ASHOKA THILLERY CANNANORE MR D UNNIKRISHNAN KERALA INDIA KERALA CANNANORE 670001 PTSE0000291 8750.00 31-AUG-2020 FLAT 5,BLDG.NO.8-17,KUBERA PARK MR ISHRATALI NA KONDHWA,LULLANAGAR,PUNE INDIA MAHARASHTRA PUNE 411040 PTSE0000304 8750.00 31-AUG-2020 BLOCK NO. 2, INDIRA APTS., CHINTAMAN NGR., SAHAKAR MR CHANDRASHEKHARBHIDE NA NGR.,PUNE INDIA MAHARASHTRA PUNE 411009 PTSE0000313 4375.00 31-AUG-2020 NO 9 SANO LAI APARTMENTS MS VIDYASWAMINATHAN NA SALISBURY PARK PUNE INDIA MAHARASHTRA PUNE 411037 PTSE0000350 13125.00 31-AUG-2020 A3/12 ROYAL ORCHARD D P ROAD MR SUDHIRNERUKAR NA AUNDH PUNE INDIA MAHARASHTRA PUNE 411017 PTSE0000379 875.00 31-AUG-2020 C-4,VISHWAKARMA NAGAR SUS MR NITINAMBHAIKAR NA ROAD, PASHAN PUNE INDIA MAHARASHTRA PUNE 411021 PTSE0000578 3500.00 31-AUG-2020 MR ALINDPRASAD NA A 552 SARITA VIHAR NEW DELHI INDIA DELHI NEW DELHI 110028 PTSE0000614 3500.00 31-AUG-2020 FLAT NO 1 PLOT NO B-82 TULSHIBAGHWALE COLONY MR PANKAJAMBARDEKAR NA SAHAKARNAGAR NO 2 PUNE INDIA MAHARASHTRA PUNE 411009 PTSE0000639 3500.00 31-AUG-2020 181 SHURKWAR PETH SHINDI ALI MR GOPALDEORE NA PUNE INDIA MAHARASHTRA PUNE 411002 PTSE0000659 3500.00 31-AUG-2020 B-7/99 SHANTHI RAKSHAK SOC. -

MA Entrance Examination Result 2016.Xlsx

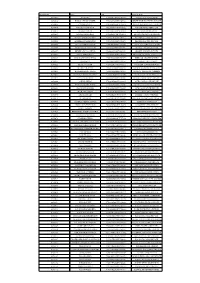

DEPARTMENT OF HISTORY, UNIVERSITY OF DELHI. RESULT OF M.A. HISTORY ENTRANCE EXAMINATION 2016

| S.NO. | ROLL NO. | NAME | CATEGORY | MARKS OUT OF 100 | RANK |
|---|---|---|---|---|---|
| 1 | 10001 | VENKATA SAI VISHAL KANDE | GEN | 35 | 1371 |
| 2 | 10002 | Jegan | OBC | 34 | 1504 |
| 3 | 10003 | Bakkolla Shekhar | OBC | 38 | 1047 |
| 4 | 10004 | UTHARA BABU | GEN | 46 | 424 |
| 5 | 10005 | EMIL MATHEW BINNY | GEN | 46 | 424 |
| 6 | 10006 | Athulya Anna Mammen | GEN | 56 | 96 |
| 7 | 10009 | Rumi Longri | ST | 32 | 1778 |
| 8 | 10012 | E N MOUNIKA SAI | OBC | 47 | 379 |
| 9 | 10013 | MARIA MICHEAL NITHYN | OBC | 36 | 1251 |
| 10 | 10016 | keshav jamjam | OBC | 47 | 379 |
| 11 | 10017 | Akshay Thomas Kurien | GEN | 51 | 229 |
| 12 | 10019 | kavvampelli prashanth | SC | 43 | 624 |
| 13 | 10020 | RICANO RAJAN | GEN | 22 | 3120 |
| 14 | 10022 | K.SUBAMANOJ | OBC | 40 | 862 |
| 15 | 10023 | KOPPU KRISHNAVENI | SC | 27 | 2556 |
| 16 | 10025 | Nevin Dalvin | OBC | 40 | 862 |
| 17 | 10026 | NEETHU MARIA JAMES | ST | 36 | 1251 |
| 18 | 10027 | mithaigiri sameer ur rehaman | GEN | 49 | 303 |
| 19 | 10028 | Mukkera Sai Sri Laxmi Prasanna | SC | 37 | 1133 |
| 20 | 10029 | VISHNU M | OBC | 36 | 1251 |
| 21 | 10030 | NANDAKUMAR N | GEN | 43 | 624 |
| 22 | 10031 | Anusha Sundar | GEN | 63 | 30 |
| 23 | 10033 | Jacob Thomas | GEN | 51 | 229 |
| 24 | 10034 | ASWANI P | OBC | 37 | 1133 |
| 25 | 10035 | Ria Dantewadia | GEN | 41 | 775 |
| 26 | 10037 | Akash Kumar | OBC | 48 | 337 |
| 27 | 10038 | SAFWAN THANIYAPIL | OBC | 46 | 424 |
| 28 | 10039 | MUTHAMIL MUTHALVAN S | OBC | 46 | 424 |
| 29 | 10040 | Pranav Sriprakash Menon | GEN | 56 | 96 |
| 30 | 10041 | MAHENDRANATH S | GEN | 71 | 3 |
| 31 | 10043 | Syed Arafath Ahmed | GEN | 42 | 694 |
| 32 | 10044 | M VEINAI MERCY | ST | 29 | 2247 |
| 33 | 10045 | RANITA THIYAM | GEN | 30 | 2090 |
| 34 | 10048 | Yannaganti Akhil sharath | GEN | 60 | 49 |
| 35 | 10049 | NIKHIL KUMAR | GEN | 48 | 337 |
| 36 | 10050 | SHAGUN | SC | 32 | 1778 |
| 37 | 10051 | Vaishali Yadav | OBC | 34 | 1504 |
| 38 | 10052 | Harsh Upadhyay | GEN | 39 | 947 |
| 39 | 10053 | S KAINI | ST | 35 | 1371 |
| 40 | 10055 | Ahongsangbam | | | |