Design Patterns in Object-Oriented Frameworks

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

1. Domain Modeling

Software design pattern seminar sse, ustc Topic 8 Observer, Mediator, Facade Group K 1. The observer pattern (Behavioral pattern, chapter 16 and 17) 1) the observer is an interface or abstract class defining the operations to be used to update itself 2) a solution to these problems A. Decouple change and related responses so that such dependencies can be added or removed freely and dynamically 3) solution A. Observable defines the operations for attaching, de-attaching and notifying observers B. Observer defines the operations to update itself 4) liabilities A. Unwanted concurrent update to a concrete observable may occur Figure 1 Sample Observer Pattern 2. The mediator pattern (Behavioral pattern, chapter 16 and 17) 1) the mediator encapsulates how a set of objects interact and keeps objects from referring to each other explicitly 2) a solution to these problems A. a set of objects communicate in well-defined but complex ways. The resulting interdependencies are unstructured and difficult to understand B. reusing an object is difficult because it refers to and communicates with many other objects C. a behavior that's distributed between several classes should be customizable without a lot of subclassing 3) solution A. Mediator defines an interface for communicating with Colleague objects, knows the colleague classes and keep a reference to the colleague objects, and implements the communication and transfer the messages between the colleague classes 1 Software design pattern seminar sse, ustc B. Colleague classes keep a reference to its Mediator object, and communicates with the Mediator whenever it would have otherwise communicated with another Colleague 4) liabilities A. -

A Mathematical Approach to Object Oriented Design Patterns

06.2006 J.Natn.Sci.FoundationObject oriented design Sripatterns Lanka 2008 36 (3):219-227 219 RESEARCH ARTICLE A mathematical approach to object oriented design patterns Saluka R. Kodituwakku1*and Peter Bertok2 1 Department of Statistics and Computer Science, Faculty of Science, University of Peradeniya, Peradeniya. 2 Department of Computer Science, RMIT University, Melbourne, Australia. Revised: 27 May 2008 ; Accepted: 18 July 2008 Abstract: Although design patterns are reusable design the distribution of responsibilities. For ease of learning elements, existing pattern descriptions focus on specific and reference, in1, design patterns are organized into a solutions that are not easily reusable in new designs. This paper catalogue. introduces a new pattern description method for object oriented design patterns. The description method aims at providing a The catalogue is formed by two criteria: purpose more general description of patterns so that patterns can be and scope. According to their purpose, design patterns readily reusable. This method also helps a programmer to are classified into creational, structural and behavioural, analyze, compare patterns, and detect patterns from existing software programmes. whereas the scope divides patterns into class patterns and object patterns. Class patterns capture relationships This method differs from the existing pattern description between classes and their subclasses; object patterns deal methods in that it captures both static and dynamic properties with relationships between objects. of patterns. It describes them in terms of mathematical entities rather than natural language narratives, incomplete graphical Software patterns provide reusable solutions to notations or programme fragments. It also helps users to recurring design problems. Even if we have reusable understand the patterns and relationships between them; and solutions they may not be effectively reused, because select appropriate patterns to the problem at hand. -

The Scalable Adapter Design Pattern: Enabling Interoperability Between Educational Software Tools

The Scalable Adapter Design Pattern: Enabling Interoperability Between Educational Software Tools Andreas Harrer, Catholic University Eichstatt-Ingolstadt,¨ Germany, Niels Pinkwart, Clausthal University of Technology, Germany, Bruce M. McLaren, Carnegie Mellon University, Pittsburgh PA, U.S.A., and DFKI, Saarbrucken,¨ Germany, and Oliver Scheuer, DFKI, Saarbrucken,¨ Germany Abstract For many practical learning scenarios, the integrated use of more than one learning tool is educationally beneficial. In these cases, interoperability between learning tools - getting the pieces to talk to one another in a coherent, well-founded manner - is a crucial requirement that is often hard to achieve. This paper describes a re-usable software design that aims at the integration of independent learning tools into one collaborative learning scenario. We motivate the usefulness and expressiveness of combining several learning tools into one integrated learning experience. Based on this we sketch software design principles that integrate several existing components into a joint technical framework. The feasibility of the approach, which we name the “Scalable Adapter” design pattern, is shown with several implementation examples from different educational technology domains, including Intelligent Tutoring Systems and collaborative learning environments. IEEE keywords: J.m Computer applications, H.5.3 Group and Organization interfaces, K.3.m Computers in Education A short version of this paper appeared in the Proceedings of the Conference on Intelligent Tutoring Systems 2008 1 The Scalable Adapter Design Pattern: Enabling Interoperability Between Educational Software Tools1 I. INTRODUCTION hypothesis and plans. In order to help the student or student In the field of educational technology, there have been groups during the different steps of this inquiry procedure, it numerous attempts in recent years to connect differently makes sense to enable them to have hypotheses, experimenta- targeted learning environments to one another. -

Designpatternsphp Documentation Release 1.0

DesignPatternsPHP Documentation Release 1.0 Dominik Liebler and contributors Jul 18, 2021 Contents 1 Patterns 3 1.1 Creational................................................3 1.1.1 Abstract Factory........................................3 1.1.2 Builder.............................................8 1.1.3 Factory Method......................................... 13 1.1.4 Pool............................................... 18 1.1.5 Prototype............................................ 21 1.1.6 Simple Factory......................................... 24 1.1.7 Singleton............................................ 26 1.1.8 Static Factory.......................................... 28 1.2 Structural................................................. 30 1.2.1 Adapter / Wrapper....................................... 31 1.2.2 Bridge.............................................. 35 1.2.3 Composite............................................ 39 1.2.4 Data Mapper.......................................... 42 1.2.5 Decorator............................................ 46 1.2.6 Dependency Injection...................................... 50 1.2.7 Facade.............................................. 53 1.2.8 Fluent Interface......................................... 56 1.2.9 Flyweight............................................ 59 1.2.10 Proxy.............................................. 62 1.2.11 Registry............................................. 66 1.3 Behavioral................................................ 69 1.3.1 Chain Of Responsibilities................................... -

Design Patterns in Ocaml

Design Patterns in OCaml Antonio Vicente [email protected] Earl Wagner [email protected] Abstract The GOF Design Patterns book is an important piece of any professional programmer's library. These patterns are generally considered to be an indication of good design and development practices. By giving an implementation of these patterns in OCaml we expected to better understand the importance of OCaml's advanced language features and provide other developers with an implementation of these familiar concepts in order to reduce the effort required to learn this language. As in the case of Smalltalk and Scheme+GLOS, OCaml's higher order features allows for simple elegant implementation of some of the patterns while others were much harder due to the OCaml's restrictive type system. 1 Contents 1 Background and Motivation 3 2 Results and Evaluation 3 3 Lessons Learned and Conclusions 4 4 Creational Patterns 5 4.1 Abstract Factory . 5 4.2 Builder . 6 4.3 Factory Method . 6 4.4 Prototype . 7 4.5 Singleton . 8 5 Structural Patterns 8 5.1 Adapter . 8 5.2 Bridge . 8 5.3 Composite . 8 5.4 Decorator . 9 5.5 Facade . 10 5.6 Flyweight . 10 5.7 Proxy . 10 6 Behavior Patterns 11 6.1 Chain of Responsibility . 11 6.2 Command . 12 6.3 Interpreter . 13 6.4 Iterator . 13 6.5 Mediator . 13 6.6 Memento . 13 6.7 Observer . 13 6.8 State . 14 6.9 Strategy . 15 6.10 Template Method . 15 6.11 Visitor . 15 7 References 18 2 1 Background and Motivation Throughout this course we have seen many examples of methodologies and tools that can be used to reduce the burden of working in a software project. -

A Design Pattern Generation Tool

Project Number: GFP 0801 A DESIGN PATTERN GEN ERATION TOOL A MAJOR QUALIFYING P ROJECT REPORT SUBMITTED TO THE FAC ULTY OF WORCESTER POLYTECHNIC INSTITUTE IN PARTIAL FULFILLME NT OF THE REQUIREMEN TS FOR THE DEGREE OF BACHELOR O F SCIENCE BY CAITLIN VANDYKE APRIL 23, 2009 APPROVED: PROFESSOR GARY POLLICE, ADVISOR 1 ABSTRACT This project determines the feasibility of a tool that, given code, can convert it into equivalent code (e.g. code that performs the same task) in the form of a specified design pattern. The goal is to produce an Eclipse plugin that performs this task with minimal input, such as special tags.. The final edition of this plugin will be released to the Eclipse community. ACKNOWLEGEMENTS This project was completed by Caitlin Vandyke with gratitude to Gary Pollice for his advice and assistance, as well as reference materials and troubleshooting. 2 TABLE OF CONTENTS Abstract ....................................................................................................................................................................................... 2 Acknowlegements ................................................................................................................................................................... 2 Table of Contents ..................................................................................................................................................................... 3 Table of Illustrations ............................................................................................................................................................. -

Additional Design Pattern Examples Design Patterns--Factory Method

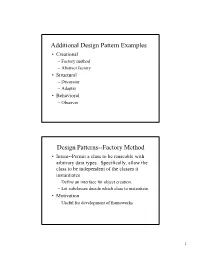

Additional Design Pattern Examples • Creational – Factory method – Abstract factory • Structural – Decorator – Adapter • Behavioral – Observer Design Patterns--Factory Method • Intent--Permit a class to be reuseable with arbitrary data types. Specifically, allow the class to be independent of the classes it instantiates – Define an interface for object creation. – Let subclasses decide which class to instantiate. • Motivation – Useful for development of frameworks 1 Factory Method--Continued • Consider a document-processing framework – High-level support for creating, opening, saving documents – Consistent method calls for these commands, regardless of document type (word-processor, spreadsheet, etc.) – Logic to implement these commands delegated to specific types of document objects. – May be some operations common to all document types. Factory Method--Continued Document Processing Example-General Framework: Document Application getTitle( ) * Edits 1 newDocument( ) newDocument( ) openDocument( ) openDocument( ) ... ... MyDocument Problem: How can an Application object newDocument( ) create instances of specific document classes openDocument( ) without being application-specific itself. ... 2 Factory Method--Continued Use of a document creation “factory”: Document Application getTitle( ) * Edits 1 newDocument( ) newDocument( ) openDocument( ) openDocument( ) ... ... 1 requestor * Requests-creation creator 1 <<interface>> MyDocument DocumentFactoryIF newDocument( ) createDocument(type:String):Document openDocument( ) ... DocumentFactory -

Design Patterns Adapter Pattern

DDEESSIIGGNN PPAATTTTEERRNNSS -- AADDAAPPTTEERR PPAATTTTEERRNN http://www.tutorialspoint.com/design_pattern/adapter_pattern.htm Copyright © tutorialspoint.com Adapter pattern works as a bridge between two incompatible interfaces. This type of design pattern comes under structural pattern as this pattern combines the capability of two independent interfaces. This pattern involves a single class which is responsible to join functionalities of independent or incompatible interfaces. A real life example could be a case of card reader which acts as an adapter between memory card and a laptop. You plugin the memory card into card reader and card reader into the laptop so that memory card can be read via laptop. We are demonstrating use of Adapter pattern via following example in which an audio player device can play mp3 files only and wants to use an advanced audio player capable of playing vlc and mp4 files. Implementation We have a MediaPlayer interface and a concrete class AudioPlayer implementing the MediaPlayer interface. AudioPlayer can play mp3 format audio files by default. We are having another interface AdvancedMediaPlayer and concrete classes implementing the AdvancedMediaPlayer interface. These classes can play vlc and mp4 format files. We want to make AudioPlayer to play other formats as well. To attain this, we have created an adapter class MediaAdapter which implements the MediaPlayer interface and uses AdvancedMediaPlayer objects to play the required format. AudioPlayer uses the adapter class MediaAdapter passing it the desired audio type without knowing the actual class which can play the desired format. AdapterPatternDemo, our demo class will use AudioPlayer class to play various formats. Step 1 Create interfaces for Media Player and Advanced Media Player. -

Secure Telemedicine System for Home Health Care

Graduate Theses, Dissertations, and Problem Reports 2000 Secure telemedicine system for home health care Sridhar Vasudevan West Virginia University Follow this and additional works at: https://researchrepository.wvu.edu/etd Recommended Citation Vasudevan, Sridhar, "Secure telemedicine system for home health care" (2000). Graduate Theses, Dissertations, and Problem Reports. 1099. https://researchrepository.wvu.edu/etd/1099 This Thesis is protected by copyright and/or related rights. It has been brought to you by the The Research Repository @ WVU with permission from the rights-holder(s). You are free to use this Thesis in any way that is permitted by the copyright and related rights legislation that applies to your use. For other uses you must obtain permission from the rights-holder(s) directly, unless additional rights are indicated by a Creative Commons license in the record and/ or on the work itself. This Thesis has been accepted for inclusion in WVU Graduate Theses, Dissertations, and Problem Reports collection by an authorized administrator of The Research Repository @ WVU. For more information, please contact [email protected]. SECURE TELEMEDICINE SYSTEM FOR HOME HEALTH CARE Sridhar Vasudevan Thesis submitted to the College of Engineering and Mineral Resources at West Virginia University in partial fulfillment of the requirements for the degree of Master of Science in Computer Science V.Jagannathan, Ph.D., Chair Sumitra Reddy, Ph.D. James D. Mooney, Ph.D. Department of Computer Science and Electrical Engineering Morgantown, West Virginia 2000 Keywords: EJB, Java, Home Health Care, Telemedicine SECURE TELEMEDICINE SYSTEM FOR HOME HEALTH CARE Sridhar Vasudevan ABSTRACT This thesis describes a low-cost telemedicine system that provides home based patient care by linking patients with skilled nurses at the home care agency. -

Java Design Patterns I

Java Design Patterns i Java Design Patterns Java Design Patterns ii Contents 1 Introduction to Design Patterns 1 1.1 Introduction......................................................1 1.2 What are Design Patterns...............................................1 1.3 Why use them.....................................................2 1.4 How to select and use one...............................................2 1.5 Categorization of patterns...............................................3 1.5.1 Creational patterns..............................................3 1.5.2 Structural patterns..............................................3 1.5.3 Behavior patterns...............................................3 2 Adapter Design Pattern 5 2.1 Adapter Pattern....................................................5 2.2 An Adapter to rescue.................................................6 2.3 Solution to the problem................................................7 2.4 Class Adapter..................................................... 11 2.5 When to use Adapter Pattern............................................. 12 2.6 Download the Source Code.............................................. 12 3 Facade Design Pattern 13 3.1 Introduction...................................................... 13 3.2 What is the Facade Pattern.............................................. 13 3.3 Solution to the problem................................................ 14 3.4 Use of the Facade Pattern............................................... 16 3.5 Download the Source Code............................................. -

Gof Design Patterns

GoF Design Patterns CSC 440: Software Engineering Slide #1 Topics 1. GoF Design Patterns 2. Template Method 3. Strategy 4. Composite 5. Adapter CSC 440: Software Engineering Slide #2 Design Patterns Book Classic text that started Design Patterns movement written by the Gang of Four (GoF). We’ll introduce several widely used patterns from the book. Useful solutions for certain problems, but if you don’t have the problem, don’t use the pattern. CSC 440: Software Engineering Slide #3 GoF Design Patterns Catalog Behavioral Creational Structural Interpreter Factory Adapter Template Abstract Factory Bridge method Builder Chain of Composite Responsibility Prototype Decorator Command Singleton Façade Iterator Flyweight Mediator Memento Proxy Observer State Strategy Visitor CSC 440: Software Engineering Slide #4 GoF and GRASP Patterns GoF patterns are specializations and/or combinations of the more fundamental GRASP design patterns. Example: Adapter Pattern provides Protected Variations through use of Indirection and Polymorphism CSC 440: Software Engineering Slide #5 Template Method Pattern Name: Template Method Problem: How to implement an algorithm that varies in certain circumstances? Solution: Define the skeleton of the algorithm in the superclass, deferring implementation of the individual steps to the subclasses. CSC 440: Software Engineering Slide #6 Example: The Report Class class Report def output_report(format) if format == :plain puts(“*** #{@title} ***”) elsif format == :html puts(“<html><head>”) puts(“<title>#{@title}</title>”) -

Object-Oriented Design Patterns

Object-Oriented Design Patterns David Janzen EECS 816 Object-Oriented Software Development University of Kansas Outline • Introduction – Design Patterns Overview – Strategy as an Early Example – Motivation for Creating and Using Design Patterns – History of Design Patterns • Gang of Four (GoF) Patterns – Creational Patterns – Structural Patterns – Behavioral Patterns Copyright © 2006 by David S. 2 Janzen. All rights reserved. What are Design Patterns? • In its simplest form, a pattern is a solution to a recurring problem in a given context • Patterns are not created, but discovered or identified • Some patterns will be familiar? – If you’ve been designing and programming for long, you’ve probably seen some of the patterns we will discuss – If you use Java Foundation Classes (Swing), Copyright © 2006 by David S. 3 you have certaJiannzleyn. Aulls rieghdts rsesoervmed.e design patterns Design Patterns Definition1 • Each pattern is a three-part rule, which expresses a relation between – a certain context, – a certain system of forces which occurs repeatedly in that context, and – a certain software configuration which allows these forces to resolve themselves 1. Dick Gabriel, http://hillside.net/patterns/definition.html Copyright © 2006 by David S. 4 Janzen. All rights reserved. A Good Pattern1 • Solves a problem: – Patterns capture solutions, not just abstract principles or strategies. • Is a proven concept: – Patterns capture solutions with a track record, not theories or speculation 1. James O. Coplien, http://hillside.net/patterns/definition.html Copyright © 2006 by David S. 5 Janzen. All rights reserved. A Good Pattern • The solution isn't obvious: – Many problem-solving techniques (such as software design paradigms or methods) try to derive solutions from first principles.