KylinX: A Dynamic Library Operating System for Simplified and Efficient Cloud Virtualization

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


UvA-DARE (Digital Academic Repository)

UvA-DARE (Digital Academic Repository) On the construction of operating systems for the Microgrid many-core architecture van Tol, M.W. Publication date 2013 Link to publication Citation for published version (APA): van Tol, M. W. (2013). On the construction of operating systems for the Microgrid many-core architecture. General rights It is not permitted to download or to forward/distribute the text or part of it without the consent of the author(s) and/or copyright holder(s), other than for strictly personal, individual use, unless the work is under an open content license (like Creative Commons). Disclaimer/Complaints regulations If you believe that digital publication of certain material infringes any of your rights or (privacy) interests, please let the Library know, stating your reasons. In case of a legitimate complaint, the Library will make the material inaccessible and/or remove it from the website. Please Ask the Library: https://uba.uva.nl/en/contact, or a letter to: Library of the University of Amsterdam, Secretariat, Singel 425, 1012 WP Amsterdam, The Netherlands. You will be contacted as soon as possible. UvA-DARE is a service provided by the library of the University of Amsterdam (https://dare.uva.nl) Download date:27 Sep 2021 General Bibliography [1] J. Aas. Understanding the linux 2.6.8.1 cpu scheduler. Technical report, Silicon Graphics Inc. (SGI), Eagan, MN, USA, February 2005. [2] D. Abts, S. Scott, and D. J. Lilja. So many states, so little time: Verifying memory coherence in the Cray X1. In Proceedings of the 17th International Symposium on Parallel and Distributed Processing, IPDPS '03, page 10 pp., Washington, DC, USA, April 2003. -

High Performance Computing Systems

High Performance Computing Systems Multikernels Doug Shook Multikernels Two predominant approaches to OS: – Full weight kernel – Lightweight kernel Why not both? – How does implementation affect usage and performance? Gerofi et al., “A Multi-Kernel Survey for High-Performance Computing,” 2016 FusedOS Assumes heterogeneous architecture – Linux on full cores – LWK requests resources from Linux to run programs Uses CNK as its LWK IHK/McKernel Uses an Interface for Heterogeneous Kernels – Resource allocation – Communication McKernel is the LWK – Only operable with IHK Uses proxy processes mOS Embeds LWK into the Linux kernel – LWK is visible to Linux just like any other process Resource allocation is performed by sysadmin/user FFMK Uses the L4 microkernel – What is a microkernel? Also uses a para-virtualized Linux instance – What is paravirtualization? Hobbes Pisces Node Manager Kitten LWK Palacios Virtual Machine Monitor Sysadmin Criteria Is the LWK standalone? Which kernel is booted by the BIOS? How and when are nodes partitioned? Application Criteria What is the level of POSIX support in the LWK? What is the pseudo file system support? How does an application access Linux functionality? What is the system call overhead? Can LWK and Linux share memory? Can a single process span Linux and the LWK? Does the LWK support NUMA? Linux Criteria Are LWK processes visible to standard tools like ps and top? Are modifications to the Linux kernel necessary? Do Linux kernel changes propagate to the LWK?

Intra-Unikernel Isolation with Intel Memory Protection Keys

Intra-Unikernel Isolation with Intel Memory Protection Keys Mincheol Sung Pierre Olivier∗ Virginia Tech, USA The University of Manchester, United Kingdom [email protected] [email protected] Stefan Lankes Binoy Ravindran RWTH Aachen University, Germany Virginia Tech, USA [email protected] [email protected] Abstract Unikernels are minimal, single-purpose virtual machines. This new operating system model promises numerous benefits within many application domains in terms of lightweightness, performance, and security. Although the isolation between unikernels is generally recognized as strong, there is no isolation within a unikernel itself. This is due to the use of a single, unprotected address space, a basic principle of unikernels that provide their lightweightness and performance benefits. In this paper, we propose a new design that brings memory isolation inside a unikernel instance while keeping a single address space. We leverage Intel’s Memory ACM Reference Format: Mincheol Sung, Pierre Olivier, Stefan Lankes, and Binoy Ravindran. 2020. Intra-Unikernel Isolation with Intel Memory Protection Keys. In 16th ACM SIGPLAN/SIGOPS International Conference on Virtual Execution Environments (VEE ’20), March 17, 2020, Lausanne, Switzerland. ACM, New York, NY, USA, 14 pages. https://doi.org/10.1145/3381052.3381326 1 Introduction Unikernels have gained attention in the academic research community, offering multiple benefits in terms of improved performance, increased security, reduced costs, etc. As a result,

A Linux in Unikernel Clothing Lupine

A Linux in Unikernel Clothing Hsuan-Chi Kuo+, Dan Williams*, Ricardo Koller* and Sibin Mohan+ +University of Illinois at Urbana-Champaign *IBM Research Lupine Unikernels are great BUT: Unikernels lack full Linux Support App ● Hermitux: supports only 97 system calls Kernel LibOS + App ● OSv: ○ Fork() , execve() are not supported Hypervisor Hypervisor ○ Special files are not supported such as /proc ○ Signal mechanism is not complete ● Small kernel size ● Rumprun: only 37 curated applications ● Heavy ● Fast boot time ● Community is too small to keep it rolling ● Inefficient ● Improved performance ● Better security 2 Can Linux behave like a unikernel? 3 Lupine Linux 4 Lupine Linux ● Kernel mode Linux (KML) ○ Enables normal user process to run in kernel mode ○ Processes can still use system services such as paging and scheduling ○ App calls kernel routines directly without privilege transition costs ● Minimal patch to libc ○ Replace syscall instruction to call ○ The address of the called function is exported by the patched KML kernel using the vsyscall ○ No application changes/recompilation required 5 Boot time Evaluation Metrics Image size Based on: Unikernel benefits Memory footprint Application performance Syscall overhead 6 Configuration diversity ● 20 top apps on Docker hub (83% of all downloads) ● Only 19 configuration options required to run all 20 applications: lupine-general 7 Evaluation - Comparison configurations Lupine Cloud Operating Systems [Lupine-base + app-specific options] OSv general Linux-based Unikernels Kernel for 20 apps -

SIGOPS Annual Report 2012

SIGOPS Annual Report 2012 Fiscal Year July 2012-June 2013 Submitted by Jeanna Matthews, SIGOPS Chair Overview SIGOPS is a vibrant community of people with interests in “operating systems” in the broadest sense, including topics such as distributed computing, storage systems, security, concurrency, middleware, mobility, virtualization, networking, cloud computing, datacenter software, and Internet services. We sponsor a number of top conferences, provide travel grants to students, present yearly awards, disseminate information to members electronically, and collaborate with other SIGs on important programs for computing professionals. Officers It was the second year for officers: Jeanna Matthews (Clarkson University) as Chair, George Candea (EPFL) as Vice Chair, Dilma da Silva (Qualcomm) as Treasurer and Muli Ben-Yehuda (Technion) as Information Director. As has been typical, elected officers agreed to continue for a second and final two-year term beginning July 2013. Shan Lu (University of Wisconsin) will replace Muli Ben-Yehuda as Information Director as of August 2013. Awards We have an exciting new award to announce: the SIGOPS Dennis M. Ritchie Doctoral Dissertation Award. SIGOPS has long been lacking a doctoral dissertation award, such as those offered by SIGCOMM, Eurosys, SIGPLAN, and SIGMOD. This new award fills this gap and also honors the contributions to computer science that Dennis Ritchie made during his life. With this award, ACM SIGOPS will encourage the creativity that Ritchie embodied and provide a reminder of Ritchie's legacy and what a difference a person can make in the field of software systems research. The award is funded by AT&T Research and Alcatel-Lucent Bell Labs, companies that both have a strong connection to AT&T Bell Laboratories where Dennis Ritchie did his seminal work.

Unikernel Monitors: Extending Minimalism Outside of the Box

Unikernel Monitors: Extending Minimalism Outside of the Box Dan Williams Ricardo Koller IBM T.J. Watson Research Center Abstract Recently, unikernels have emerged as an exploration of minimalist software stacks to improve the security of applications in the cloud. In this paper, we propose extending the notion of minimalism beyond an individual virtual machine to include the underlying monitor and the interface it exposes. We propose unikernel monitors. Each unikernel is bundled with a tiny, specialized monitor that only contains what the unikernel needs both in terms of interface and implementation. Unikernel monitors improve isolation through minimal interfaces, reduce complexity, and boot unikernels quickly. Our initial prototype, ukvm, is less than 5% the code size of a traditional monitor, and boots MirageOS unikernels in as little as 10ms (8× faster than a traditional monitor). Figure 1: The unit of execution in the cloud as (a) a unikernel, built from only what it needs, running on a VM abstraction; or (b) a unikernel running on a specialized unikernel monitor implementing only what the unikernel needs. 1 Introduction Minimal software stacks are changing the way we think about assembling applications for the cloud. A minimal amount of software implies a reduced attack surface and a better understanding of the system, leading to increased security. [...] the application (unikernel) and the rest of the system, as defined by the virtual hardware abstraction, minimal? Can application dependencies be tracked through the interface and even define a minimal virtual machine monitor (or in this case a unikernel monitor) for the application, thus producing a maximally isolated, minimal exe-

W4118: Multikernel

W4118: multikernel Instructor: Junfeng Yang References: Modern Operating Systems (3rd edition), Operating Systems Concepts (8th edition), previous W4118, and OS at MIT, Stanford, and UWisc Motivation: sharing is expensive Difficult to parallelize single-address space kernels with shared data structures . Locks/atomic instructions: limit scalability . Must make every shared data structure scalable • Partition data • Lock-free data structures • … . Tremendous amount of engineering Root cause . Expensive to move cache lines . Congestion of interconnect 1 Multikernel: explicit sharing via messages Multicore chip == distributed system! . No shared data structures! . Send messages to core to access its data Advantages . Scalable . Good match for heterogeneous cores . Good match if future chips don’t provide cache- coherent shared memory 2 Challenge: global state OS must manage global state . E.g., page table of a process Solution . Replicate global state . Read: read local copy low latency . Update: update local copy + distributed protocol to update remote copies • Do so asynchronously (“split phase”) 3 Barrelfish overview Figure 5 in paper CPU driver . kernel-mode part per core . Inter-Processor interrupt (IPI) Monitors . OS abstractions . User-level RPC (URPC) 4 IPC through shared memory #define CACHELINE (64) struct box{ char buf[CACHELINE-1]; // message contents char flag; // high bit == 0 means sender owns it }; __attribute__ ((aligned (CACHELINE))) struct box m __attribute__ ((aligned (CACHELINE))); send() recv() { { // set up message while(!(m.flag & 0x80)) memcpy(m.buf, …); ; m.flag |= 0x80; m.buf … //process message } } 5 Case study: TLB shootdown When is it necessary? Windows & Linux: send IPIs Barrelfish: sends messages to involved monitors . 1 broadcast message N-1 invalidates, N-1 fetches . -
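The "IPC through shared memory" slide in the snippet above describes a one-cache-line mailbox whose flag byte says who currently owns the slot. The following is a minimal sketch of that idea, not the slide's exact code: it assumes C11 atomics in place of the plain `char flag`, and the names `box_try_send` and `box_try_recv` are hypothetical. Unlike the slide's `recv()`, which spins until the flag is set, these variants return immediately so that a caller can decide how to wait.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stddef.h>
#include <string.h>

#define CACHELINE 64
#define FULL 0x80 /* high bit set: receiver owns the slot */

/* One cache-line-sized mailbox: 63 payload bytes plus one flag byte. */
struct box {
    _Alignas(CACHELINE) char buf[CACHELINE - 1]; /* message contents */
    _Atomic unsigned char flag;                  /* high bit == 0: sender owns it */
};

/* Copy a message into the slot. Returns 0 on success, -1 if the
 * previous message has not been consumed yet. */
int box_try_send(struct box *b, const void *msg, size_t len)
{
    assert(len <= sizeof b->buf);
    if (atomic_load_explicit(&b->flag, memory_order_acquire) & FULL)
        return -1;
    memcpy(b->buf, msg, len);
    /* Release store: the payload becomes visible before the flag does. */
    atomic_store_explicit(&b->flag, FULL, memory_order_release);
    return 0;
}

/* Copy a message out of the slot. Returns 0 on success, -1 if the
 * slot is empty. */
int box_try_recv(struct box *b, void *out, size_t len)
{
    assert(len <= sizeof b->buf);
    if (!(atomic_load_explicit(&b->flag, memory_order_acquire) & FULL))
        return -1;
    memcpy(out, b->buf, len);
    /* Hand the slot back to the sender. */
    atomic_store_explicit(&b->flag, 0, memory_order_release);
    return 0;
}
```

A receiver that wants the slide's blocking behaviour can loop on `box_try_recv` until it returns 0. Keeping payload and flag in the same cache line means a single line transfer carries both the message and the ownership change, which is the point the slide makes about message-based sharing.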

Shan Lu

Shan Lu University of Chicago, Dept. of Computer Science Phone: +1-773-702-3184 5730 S. Ellis Ave., Rm 343 E-mail: [email protected] Chicago, IL 60637 USA Homepage: http://people.cs.uchicago.edu/~shanlu RESEARCH INTERESTS Tool support for improving the correctness and efficiency of large scale software systems EMPLOYMENT 2019 – present Professor, Dept. of Computer Science, University of Chicago 2014 – 2019 Associate Professor, Dept. of Computer Sciences, University of Chicago 2009 – 2014 Assistant Professor, Dept. of Computer Sciences, University of Wisconsin – Madison EDUCATION 2008 University of Illinois at Urbana-Champaign, Urbana, IL Ph.D. in Computer Science Thesis: Understanding, Detecting, and Exposing Concurrency Bugs (Advisor: Prof. Yuanyuan Zhou) 2003 University of Science & Technology of China, Hefei, China B.S. in Computer Science HONORS AND AWARDS 2019 ACM Distinguished Member Among 62 members world-wide recognized for outstanding contributions to the computing field 2015 Google Faculty Research Award 2014 Alfred P. Sloan Research Fellow Among 126 “early-career scholars (who) represent the most promising scientific researchers working today” 2013 Distinguished Alumni Educator Award Among 3 awardees selected by Department of Computer Science, University of Illinois 2010 NSF Career Award 2021 Honorable Mention Award @ CHI for paper [C71] (CHI 2021) 2019 Best Paper Award @ SOSP for paper [C62] (SOSP 2019) 2019 ACM SIGSOFT Distinguished Paper Award @ ICSE for paper [C58] (ICSE 2019) 2017 Google Scholar Classic Paper Award for -

Multiprocessor Operating Systems CS 6410: Advanced Systems

Introduction Multikernel Tornado Conclusion Discussion Outlook References Multiprocessor Operating Systems CS 6410: Advanced Systems Kai Mast Department of Computer Science Cornell University September 4, 2014 Let us recall: Multiprocessor vs. Multicore Figure: Multiprocessor [10] Figure: Multicore [10] Let us recall: Message Passing vs. Shared Memory. Shared Memory: threads/processes access the same memory region; communication via changes in variables; often easier to implement. Message Passing: threads/processes don’t have shared memory; communication via messages/events; easier to distribute between different processors; more robust than shared memory. Let us recall: Miscellaneous. Cache coherence; inter-process communication; remote procedure call; preemptive vs. cooperative multitasking; non-uniform memory access (NUMA). Current Systems are Diverse: different architectures (x86, ARM, ...); different scales (desktop, server, embedded, mobile ...); different processors (GPU, CPU, ASIC ...); multiple cores and/or multiple processors; multiple operating systems on a system (firmware,

Pivot Tracing: Dynamic Causal Monitoring for Distributed Systems

Pivot Tracing: Dynamic Causal Monitoring for Distributed Systems Jonathan Mace Ryan Roelke Rodrigo Fonseca Brown University Abstract Monitoring and troubleshooting distributed systems is notoriously difficult; potential problems are complex, varied, and unpredictable. The monitoring and diagnosis tools commonly used today (logs, counters, and metrics) have two important limitations: what gets recorded is defined a priori, and the information is recorded in a component- or machine-centric way, making it extremely hard to correlate events that cross these boundaries. This paper presents Pivot Tracing, a monitoring framework for distributed systems that addresses both limitations by combining dynamic instrumentation with a novel relational operator: the happened-before join. Pivot Tracing gives users, at runtime, the ability to define arbitrary metrics at one point of the system, while being able to select, filter, and group by events meaningful at other parts of the system, even when crossing component or machine boundaries. We have implemented a prototype of Pivot Tracing for Java-based systems and evaluate it on a heterogeneous Hadoop cluster comprising HDFS, HBase, MapReduce, and YARN. We show that Pivot Tracing can effectively identify a diverse range of root causes such as software bugs, misconfiguration, and limping hardware. We show that Pivot Tracing is dynamic, extensible, and enables cross-tier analysis between inter-operating applications, with low execution overhead. 1. Introduction Monitoring and troubleshooting distributed systems

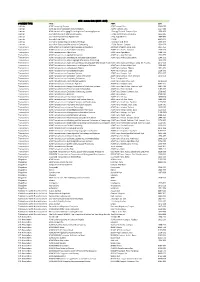

ACM - list of titles (2016 - 2019)

| CONTENT TYPE | TITLE | ABBR | ISSN |
| --- | --- | --- | --- |
| Journals | ACM Computing Surveys | ACM Comput. Surv. | 0360‐0300 |
| Journals | ACM Journal of Computer Documentation | ACM J. Comput. Doc. | 1527‐6805 |
| Journals | ACM Journal on Emerging Technologies in Computing Systems | J. Emerg. Technol. Comput. Syst. | 1550‐4832 |
| Journals | Journal of Data and Information Quality | J. Data and Information Quality | 1936‐1955 |
| Journals | Journal of Experimental Algorithmics | J. Exp. Algorithmics | 1084‐6654 |
| Journals | Journal of the ACM | J. ACM | 0004‐5411 |
| Journals | Journal on Computing and Cultural Heritage | J. Comput. Cult. Herit. | 1556‐4673 |
| Journals | Journal on Educational Resources in Computing | J. Educ. Resour. Comput. | 1531‐4278 |
| Transactions | ACM Letters on Programming Languages and Systems | ACM Lett. Program. Lang. Syst. | 1057‐4514 |
| Transactions | ACM Transactions on Accessible Computing | ACM Trans. Access. Comput. | 1936‐7228 |
| Transactions | ACM Transactions on Algorithms | ACM Trans. Algorithms | 1549‐6325 |
| Transactions | ACM Transactions on Applied Perception | ACM Trans. Appl. Percept. | 1544‐3558 |
| Transactions | ACM Transactions on Architecture and Code Optimization | ACM Trans. Archit. Code Optim. | 1544‐3566 |
| Transactions | ACM Transactions on Asian Language Information Processing | | 1530‐0226 |
| Transactions | ACM Transactions on Asian and Low‐Resource Language Information Proce | ACM Trans. Asian Low‐Resour. Lang. Inf. Process. | 2375‐4699 |
| Transactions | ACM Transactions on Autonomous and Adaptive Systems | ACM Trans. Auton. Adapt. Syst. | 1556‐4665 |
| Transactions | ACM Transactions on Computation Theory | ACM Trans. Comput. Theory | 1942‐3454 |
| Transactions | ACM Transactions on Computational Logic | ACM Trans. Comput. Logic | 1529‐3785 |
| Transactions | ACM Transactions on Computer Systems | ACM Trans. Comput. Syst. | 0734‐2071 |
| Transactions | ACM Transactions on Computer‐Human Interaction | ACM Trans. | |

An Oral History of Computer Science at Cornell University

An Oral History of Computer Science at Cornell University https://ecommons.cornell.edu/handle/1813/40569 Gates Hall, Cornell University Twelve senior faculty members share their personal journeys and their recollections of the early days of computer science at Cornell University and the leadership role in bringing a new field of study into existence. Birman, Ken Hopcroft, John E Cardie, Claire Kozen, Dexter Constable, Robert Nerode, Anil Conway, Richard W. Schneider, Fred B Gries, David Teitelbaum, Tim Hartmanis, Juris Van Loan, Charlie (Click on a name above to scroll to an abstract and a live link to the associated streaming video.) version: 16Jun16 1. KEN BIRMAN Ken Birman, who joined CS in 1981, exemplifies the successful synergy of research and entrepreneurial activities. His research in distributed systems led to his founding ISIS Distributed Systems, Inc., in 1988, which developed software used by the New York Stock Exchange (NYSE) and Swiss Exchange, the French Air Traffic Control system, the AEGIS warship, and others. He started two other companies, Reliable Network Solutions and Web Sciences LLC. This entrepreneurship has in turn generated new research ideas and has also led to Ken’s advising various organizations on distributed systems and cloud computing, including the French Civil Aviation Organization, the northeastern electric power grid, NATO, the US Treasury, and the US Air Force. Ken has received several awards for his research, among them the IEEE Tsutomu Kanai Award for his work on trustworthy computing, the Cisco “Technology Visionary” award, and the ACM SIGOPS Hall of Fame Award. He also has written two successful texts.