Area Challenge Index

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Leicester and Leicestershire City Deal

Leicester and Leicestershire City Deal Page | 1 Executive Summary Leicester and Leicestershire is a diverse and dynamic local economy and its success is integral to driving economic growth in the United Kingdom. The area is home to just under 1 million residents and over 32,000 businesses, many in the manufacturing and logistics sectors. Leicester and Leicestershire also benefits from its location at the heart of the UK road network and close proximity to both the second largest freight handling airport in the UK and London. The area provides employment for 435,000 people and generates an estimated gross value added of £19.4 billion. Despite these strengths Leicester and Leicestershire faces a series of challenges: more than 25,000 jobs were lost between 2008 and 2011 (nearly twice the national average); youth unemployment is relatively high within the city of Leicester and parts of the county; and whilst 70% of small and medium enterprises have plans for growth many find accessing the right type of business support is complex. Some local businesses also note difficulties in filling vacancies. As part of the area’s wider Growth Strategy the City Deal seeks to tackle these key barriers. Over its lifetime the Leicester and Leicestershire Enterprise Partnership expects that the City Deal will deliver: A new employment scheme targeted at 16-24 year olds that will reduce youth unemployment by 50% by 2018, deliver 3,000 new apprenticeships and 1,000 traineeships and work placements. An innovative new employment and training scheme for young offenders. Improved co-ordination of business support services and a range of innovative business support programmes. -

Dementia Pilot

Assistive Technology Dementia Pilot January 2020 Information for participants What are we doing? We are running a small pilot project for 50 people to test some new technology designed to help people with dementia living at home. This is something new for the council and we would like to see how it works and understand what the people taking part think about it. We have selected the technology that we are going to use and at the end of the project we will ask you how helpful and easy it was to use. Where are we doing it? The pilot project is a partnership between Leicestershire County Council and five district and borough councils and is for people living in the following areas: • Charnwood • North West Leicestershire • Hinckley and Bosworth • Oadby and Wigston • Blaby What does the technology do? We will be using a product called MySense. This monitors an individual’s activity and daily routine in the home and then communicates the information it collects using the internet. It gathers information using: • Sensors – which can identify movement and activity, e.g. when the bathroom door was opened, or the fridge was used • Smartplug – which connects to the kettle and can indicate when this has been used • Sleep Sensor – which can identify the time at which someone has gone to bed and the time they spent in bed This information is then communicated in the following ways: • App – for family members/friends, which shows a timeline of activity. • Notifications – that a family member/friend will receive by text about the important things you need to know about Do I have to pay for this? No, the council will provide the system to you free of charge during the pilot. -

Leicestershire County & Rutland Nhs Primary Care

LEICESTERSHIRE JOINT STRATEGIC NEEDS ASSESSMENT 2018-2021 Economy JULY 2018 Strategic Business Intelligence Team Leicestershire County Council Public Health Intelligence Strategic Business Intelligence Team Strategy and Business Intelligence Chief Executive’s Department Leicestershire County Council County Hall, Glenfield Leicester LE3 8RA Tel 0116 305 4266 Email [email protected] Produced by the Strategic Business Intelligence Team at Leicestershire County Council. Whilst every effort has been made to ensure the accuracy of the information contained within this report, Leicestershire County Council cannot be held responsible for any errors or omission relating to the data contained within the report. i FOREWORD The purpose of the Joint Strategic Needs Assessment (JSNA) is to: • To improve the health and wellbeing of the local community and reduce inequalities for all ages. • To determine what actions the local authority, the local NHS and other partners need to take to meet health and social care needs, and to address the wider determinants that impact on health and wellbeing. • To provide a source of relevant reference to the Local Authority, Clinical Commissioning Groups (CCGs) and NHS England for the commissioning of any future services. The Local Authority and CCGs have equal and joint statutory responsibility to prepare a Joint Strategic Needs Assessment (JSNA) for Leicestershire, through the Health and Wellbeing Board. The Health and Social Care Act 2012 amended the Local Government and Public Involvement in Health Act 2007 to introduce duties and powers for Health and Wellbeing Boards in relation to JSNAs. The JSNA offers an opportunity for the Local Authority, CCGs and NHS England’s plans for commissioning services to be informed by up to date information on the population that use their services. -

Leics HMA ELR Report - Appendices Final.Doc PACEC Contents

Leicester and Leicestershire HMA Employment Land Study A report prepared by PACEC With Warwick Business Management Ltd on behalf of Leicester Shire Economic Partnership PACEC Public and Corporate Economic Consultants 49-53 Regent Street Cambridge CB2 1AB Tel: 01223 311649 Fax: 01223 362913 504 Linen Hall 162-168 Regent Street London W1R 5TB Tel: 020 7734 6699 Fax: 020 7434 0357 e-mail: [email protected] February 2009 Ref: H:\0712\12LSEP\Rep\Final\Leics HMA ELR Report - Appendices Final.doc Contents: Appendix A Commuting Flows; Appendix B Employment Sector Definitions; Appendix C Floorspace Definitions; C1 ODPM Floorspace Definitions up to 2004; C2 ODPM Floorspace Definition 2005 onwards; Appendix D Outstanding Planning Permissions and Allocations, March 2007; Appendix E Site Assessment of Employment Areas which remain Wholly or Partly Undeveloped; Appendix F Floorspace Densities; F1 Strategic Warehousing -

East Midlands

Liberal Democrat submission for BCE 3rd consultation East Midlands Submission to the Boundary Commission for England third period of consultation: East Midlands Summary There is a factual error in the Commission’s report concerning the Liberal Democrat counter-proposals in the Leicestershire / Northamptonshire / Nottinghamshire / Rutland sub-region. We would, therefore, ask the Commission to reconsider the scheme we put forward. We welcome the change the Commission has made to its proposal for Mansfield. We welcome the fact that the Commission has kept to its original proposals in Lincolnshire, much of Derbyshire and Derby, and in Northampton. We consider that the changes that the Commission has made to four constituencies in Derbyshire, affecting the disposition of three wards, are finely balanced judgement calls with which we are content to accept the Commission’s view. The change that the Commission has made to the Kettering and Wellingborough constituencies would not have needed to be considered if it had agreed to our proposal for an unchanged Wellingborough seat. The Commission’s proposal to move the Burton Joyce and Stoke Bardolph ward into its proposed Sherwood constituency means that it is now proposing three Nottinghamshire constituencies (Bassetlaw, Broxtowe, Sherwood) which contain a ward which is inaccessible from the rest of the seat. We are not in agreement with the Commission’s failure to comply with the spirit of the legislation or the letter of its own guidelines in respect of these three proposed constituencies. We are not in agreement with the Commission’s failure to respect the boundaries of the City of Nottingham to the extent of proposing three constituencies that cross the Unitary Authority boundary. -

Leicester, Leicestershire & Rutland

Leicester, Leicestershire & Rutland Better care together in Hinckley and Bosworth www.bettercareleicester.nhs.uk 27 March 2017 Scope of the evening What we’re doing now, and what we want to do in the future How our proposals might affect you and your local area • Caring for you at home • Your local GP practices • Local community hospitals • Access to urgent care services The NHS does amazing things every day The national view Introducing our Sustainability and Transformation Plan (STP) • Health and care proposals for Leicester, Leicestershire & Rutland (LLR) • Progression of Better Care Together work, but with clearer focus on implementing a few key system priorities • Engaging across the area to hear the public views • 44 STP areas in England, and some changes may impact on residents here Tackling the gaps in the system • Health and wellbeing • Care and quality • Finance and efficiency Making us fit for future care Across Leicester, Leicestershire and Rutland our population is growing The older population is predicted to increase by 11% in next five years Long term illnesses are also increasing This leads to a greater demand for health and care services Identifying the health and care financial gap What patients tell us they want • I want to stay in my own home or in my local area • I want the knowledge to care for ourselves and stop myself from getting sick • I want all the services to work together so I don’t have to keep repeating my story or attending so many appointments • I want to feel more in control and independent Addressing the areas for -

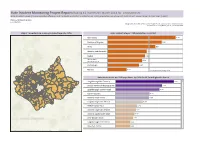

Himp Maps Page2

Hate Incident Monitoring Project Report: Rolling 12 months till March 2014 for Leicestershire. Hate Incident Levels (Police recorded offences and incidents and HIMP incidents) per 1000 population are shown at District and Lower Super Output Area (LSOA). Designed by Karen Earp, Research & Insight Team, Leicestershire County Council, Contact: [email protected], 0116 305 7260. Map of Leicestershire showing incident levels by LSOA. Hate incident rate per 1000 population by district: Charnwood 0.76; Oadby and Wigston 0.61; Blaby 0.53; Hinckley and Bosworth 0.44; Melton 0.42; North West Leicestershire 0.39; Harborough 0.35; Rutland 0.21; Leicestershire Average 0.46. Hate incident rate per 1000 population by LSOA for All from highest to lowest: Loughborough Bell Foundry 9.27; Oadby The Parade & Leicester Ra.. 7.40; Loughborough Toothill Road 7.00; Coalville Centre 5.44; Hinckley Town Centre 5.35; Loughborough Centre South 4.34; Melton Egerton East 3.46; Hinckley Town Centre North 3.24; Hinckley Castle South West 3.06; Little Bowden South 2.83; Loughborough Centre West 2.39; Measham Centre 2.39; Loughborough Meadow Lane 2.37. About Tableau maps: www.tableausoftware.com/mapdata Hate Incident Monitoring Project Report: Rolling 12 months till March 2014 for Blaby. Hate Incident Levels (Police recorded offences and incidents and HIMP incidents) per 1000 population are shown at District and Lower Super Output Area (LSOA). Designed by Karen Earp, Research & Insight Team, Leicestershire County Council, Contact: [email protected], 0116 305 7260. Map of Blaby showing incident levels by LSOA. Hate incident rate per 1000 population by district. -

Draft Public Realm Strategy SPD Part Thirteen

Borough Council’s Grounds Maintenance Officer (where relevant), and Oadby and Wigston Borough Council’s Planning and Economic Development Teams. 62 SECTION SEVEN Glossary of Terms 6C’s: The 6Cs sub-region as defined in the East Midlands Regional Plan has an existing population of 1.9m people. It includes the 3 cities of Leicester, Derby and Nottingham and the 3 Counties of Leicestershire, Derbyshire and Nottinghamshire. Area Action Plans: provide a planning framework for areas of change or conservation. Conservation Area: an area of special architectural or historic interest identified by the Local Planning Authority under the Planning (Listed Buildings & Conservation Areas) Act 1990. There is a statutory duty to preserve or enhance the character or appearance of such areas. Core Strategy: A development plan document that sets out the long term vision (10+ years) for a local planning authority area, the strategic objectives, and the strategic planning policies needed to deliver that vision. Development Plan Documents: spatial planning documents that are subject to independent examination, and together with Supplementary Planning Documents, will form the development plan for a local authority area for the purposes of the Act. They can include a core strategy, site specific allocations of land, and area action plans (where needed). Other development plan documents, including generic development control policies, can be produced. Individual development plan documents or parts of a document can be reviewed independently from other development plan documents. Each authority must set out the programme for preparing its development plan documents in the Local Development Scheme. Leicester Principal Urban Area: The Leicester Principal Urban Area encompasses all the administrative area of Leicester City and parts of Blaby, Charnwood, Harborough, Hinckley and Bosworth and Oadby and Wigston districts. -

Hinckley and Bosworth Nominated Candidates

Statement of Persons Nominated and Notice of Poll Leicestershire County Council Election of a County Councillor The following is a statement of the persons nominated for election as a County Councillor for Burbage Division. Columns: Name of Candidate; Home Address; Description (if any); Name of Proposer (*), Seconder (**) and Assentors; Reason why no longer nominated*. HILL Roger; (address in Hinckley & Bosworth); Social Democratic Party; Chettle David *, Wallis Sonia J **. MARTIN Robert; 1 Kestrel Close, Burbage, LE10 2PA; Labour Party; Clayden Hugh C *, Clayden Anna M P **. WALKER Barry Richard; 16 Denis Road, Burbage, LE10 2LR; Liberal Democrat Focus Team; Inman Celia P *, Lynch Maureen V **. WRIGHT Amanda Victoria; (address in Hinckley & Bosworth); The Conservative Party Candidate; Clayton Andrew T *, MacDonald David W **. The persons above, where no entry is made in the last column, have been and stand validly nominated. A POLL WILL BE TAKEN on Thursday 6 May 2021, between the hours of 7:00 am and 10:00 pm. Where contested this poll is taken together with the election of Parish Councillors, the election of the Police and Crime Commissioner and the referendum on the adoption of the Burbage Neighbourhood Plan. Dated 8 April 2021 Bill Cullen Deputy Returning Officer Hinckley Hub Rugby Road Hinckley Leicestershire LE10 0FR Statement of Persons Nominated and Notice of Poll Leicestershire County Council Election of a County Councillor The following is a statement of the persons nominated for election as a County Councillor for De Montfort Division Reason why Name of Description -

Hinckley and Bosworth

HINCKLEY & BOSWORTH DISTRICT PROFILE JANUARY 2010 JSNA District Level Report JSNA findings for Hinckley and Bosworth 1. Demography In 2007 there were an estimated 104,400 people resident in Hinckley and Bosworth. 95% of the population is white British and 2% is White Other. Hinckley and Bosworth is affected by socio-economic deprivation with 7.1% of the population living in neighbourhoods that have been classified as deprived nationally (4th quintile). However, there are no residents within the most deprived quintile within this district. Whilst this is similar to the value for Leicestershire it is well below the national average of 20% of people. Priority Neighbourhoods Hinckley and Bosworth has five priority neighbourhoods, Earl Shilton, Barwell, Hinckley, Burbage and Bagworth. Maps of the priority neighbourhoods are available on the Leicestershire Statistics and Research Online website: http://www.lsr-online.org/reports/leicestershire_laa_priority_neighbourhoods 2. Housing and accommodation needs Hinckley and Bosworth is a mostly rural area. The main settlements, Hinckley, Barwell, and Earl Shilton, are fairly different. Using Output Area Classifications to gain a general view suggests that Hinckley contains more higher income households and Earl Shilton is relatively more working class, but these are generalisations and cover a substantial variety. Most of the children of school age in the main settlements are White British. The largest ethnic minority groups are ‘Other White’ and ‘Asian/British Indian’. Properties in the main settlements are mainly owner occupied 3 bed houses, though there are significant numbers of both 2 bed flats and houses. There is very little private rented property (though this is likely to have increased since 2001) throughout the borough. -

Blaby District Council and Hinckley and Bosworth Borough Council Joint Community Safety Partnership Overview & Scrutiny Committee

Blaby District Council and Hinckley and Bosworth Borough Council Joint Community Safety Partnership Overview & Scrutiny Committee Date of Meeting 8 March 2018 Title of Report Blaby and Hinckley and Bosworth Joint Community Safety Partnership Annual Scrutiny Report Report Author Strategic Director (Blaby District Council) Director of Community Services (Hinckley & Bosworth Borough Council) 1. What is this report about? 1.1 To provide an update as to the priorities, performance, successes and challenges in relation to the Blaby and Hinckley and Bosworth Community Safety Partnership. 2. Recommendation 2.1 That Scrutiny notes the content of this report. 3. Reason for Decision(s) Recommended 3.1 This report is the second required annual report from the Blaby and Hinckley and Bosworth Community Safety Partnership (CSP). 4. Matters to consider 4.1 Strategic Overview The Crime & Disorder Act 1998 provides the framework for the establishment of Community Safety Partnerships (CSPs). Membership must include the “Responsible Authorities” as defined by the Crime and Disorder Act 1998. The responsible authorities on the local Partnership are Blaby District Council, Hinckley and Bosworth Borough Council, Leicestershire County Council, Police, Fire & Rescue Service, Clinical Commissioning Groups and Probation. 4.2 The Community Safety Partnership delivers its work based around the three priorities of the CSP strategy 2017-2020. Action plans and a robust performance management system are in place to ensure delivery of the Community Safety Strategy. The three priorities were decided on following consultation with our partners, the public and young people: • Protecting and supporting people • Reducing offending and reoffending • Improving community confidence and cohesion 4.3 Protecting and supporting people Domestic Abuse The Partnership works to support victims and secondary victims (children) of domestic abuse through in house services and services offered by partnership members. -

Demographic Profile of Leicester, Leicestershire and Rutland

Equality and Human Rights Team Demographic Profile of Leicester, Leicestershire and Rutland Local, Unitary, and District Authority Areas 2017 Mid-Year Estimates and 2011 Census Contents: Introduction; Age and Gender; England Benchmark; Leicester, Leicestershire, and Rutland; Leicester, Leicestershire, and Rutland overall; Leicester City; Leicestershire County; Rutland; Within Leicestershire County; Blaby