Computational Optimization - DNSC 8392

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


University of California, San Diego

UNIVERSITY OF CALIFORNIA, SAN DIEGO

Computational Methods for Parameter Estimation in Nonlinear Models

A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Physics with a Specialization in Computational Physics by Bryan Andrew Toth

Committee in charge:
• Professor Henry D. I. Abarbanel, Chair
• Professor Philip Gill
• Professor Julius Kuti
• Professor Gabriel Silva
• Professor Frank Wuerthwein

2011

Copyright Bryan Andrew Toth, 2011. All rights reserved.

The dissertation of Bryan Andrew Toth is approved, and it is acceptable in quality and form for publication on microfilm and electronically: Chair, University of California, San Diego, 2011

DEDICATION

To my grandparents, August and Virginia Toth and Willem and Jane Keur, who helped put me on a lifelong path of learning.

EPIGRAPH

An Expert: One who knows more and more about less and less, until eventually he knows everything about nothing. (Source Unknown)

TABLE OF CONTENTS

Signature Page
Dedication
Epigraph
Table of Contents
List of Figures
List of Tables
Acknowledgements
Vita and Publications
Abstract of the Dissertation
Chapter 1 Introduction
  1.1 Dynamical Systems
    1.1.1 Linear and Nonlinear Dynamics
    1.1.2 Chaos
    1.1.3 Synchronization
  1.2 Parameter Estimation
    1.2.1 Kalman Filters
    1.2.2 Variational Methods
    1.2.3 Parameter Estimation in Nonlinear Systems
  1.3 Dissertation Preview
Chapter 2 Dynamical State and Parameter Estimation
  2.1 Introduction
  2.2 DSPE Overview
  2.3 Formulation
    2.3.1 Least Squares Minimization

Multi-Objective Optimization of Unidirectional Non-Isolated DC/DC Converters

MULTI-OBJECTIVE OPTIMIZATION OF UNIDIRECTIONAL NON-ISOLATED DC/DC CONVERTERS by Andrija Stupar

A thesis submitted in conformity with the requirements for the degree of Doctor of Philosophy, Graduate Department of Edward S. Rogers Department of Electrical and Computer Engineering, University of Toronto. © Copyright 2017 by Andrija Stupar

Abstract

Engineers have to fulfill multiple requirements and strive towards often competing goals while designing power electronic systems. This can be an analytically complex and computationally intensive task since the relationship between the parameters of a system’s design space is not always obvious. Furthermore, a number of possible solutions to a particular problem may exist. To find an optimal system, many different possible designs must be evaluated.

Literature on power electronics optimization focuses on the modeling and design of particular converters, with little thought given to the mathematical formulation of the optimization problem. Therefore, converter optimization has generally been a slow process, with exhaustive search, the execution time of which is exponential in the number of design variables, the prevalent approach.

In this thesis, geometric programming (GP), a type of convex optimization, the execution time of which is polynomial in the number of design variables, is proposed and demonstrated as an efficient and comprehensive framework for the multi-objective optimization of non-isolated unidirectional DC/DC converters. A GP model of multilevel flying capacitor step-down converters is developed and experimentally verified on a 15-to-3.3 V, 9.9 W discrete prototype, with sets of loss-volume Pareto optimal designs generated in under one minute.
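The abstract above refers to sets of loss-volume Pareto optimal designs. As a rough illustration of what such a set is, the following Python sketch filters a list of candidate designs down to the non-dominated ones. The candidate numbers and the function name `pareto_front` are hypothetical, and this brute-force filter is not the geometric-programming method of the thesis; it only shows the dominance test that defines a Pareto set when both loss and volume are minimized.

```python
def pareto_front(designs):
    """Return the (loss, volume) pairs that no other design dominates.

    A design j dominates design i when j is no worse in both objectives
    and strictly better in at least one (both objectives are minimized).
    """
    front = []
    for i, (loss_i, vol_i) in enumerate(designs):
        dominated = any(
            loss_j <= loss_i and vol_j <= vol_i
            and (loss_j < loss_i or vol_j < vol_i)
            for j, (loss_j, vol_j) in enumerate(designs)
            if j != i
        )
        if not dominated:
            front.append((loss_i, vol_i))
    return sorted(front)


# Hypothetical candidates: (loss in W, volume in cm^3). Illustrative values only.
candidates = [(1.2, 5.0), (0.9, 7.5), (1.5, 4.0), (1.3, 6.0), (0.9, 9.0), (2.0, 4.0)]
front = pareto_front(candidates)
```

The filter compares every pair of designs, so it is quadratic in the number of candidates. That is acceptable for a handful of designs but gives no help with the exponential growth of the candidate set itself, which is the cost of exhaustive search that the abstract describes.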

Julia, My New Friend for Computing and Optimization? Pierre Haessig, Lilian Besson

Julia, my new friend for computing and optimization? Pierre Haessig, Lilian Besson

To cite this version: Pierre Haessig, Lilian Besson. Julia, my new friend for computing and optimization?. Master. France. 2018. cel-01830248

HAL Id: cel-01830248 https://hal.archives-ouvertes.fr/cel-01830248 Submitted on 4 Jul 2018

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

« Julia, my new computing friend? » | 14 June 2018, IETR@Vannes | By: L. Besson & P. Haessig

« Julia, my new friend for computing and optimization? »
Intro to the Julia programming language, for MATLAB users
Date: 14th of June 2018
Who: Lilian Besson & Pierre Haessig (SCEE & AUT team @ IETR / CentraleSupélec campus Rennes)

Agenda for today [30 min]
1. What is Julia? [5 min]
2. Comparison with MATLAB [5 min]
3. Two examples of problems solved with Julia [5 min]
4. Longer ex. on optimization with JuMP [13 min]
5. Links for more information? [2 min]

1. What is Julia?
• Open-source and free programming language (MIT license)
• Developed since 2012 (creators: MIT researchers)
• Growing popularity worldwide, in research, data science, finance, etc.
• Multi-platform: Windows, Mac OS X, GNU/Linux, ...

Numerical Optimization

Numerical Optimization
Alberto Bemporad
http://cse.lab.imtlucca.it/~bemporad/teaching/numopt
Academic year 2020-2021
©2021 A. Bemporad - Numerical Optimization

Course objectives

Solve complex decision problems by using numerical optimization.

Application domains:
• Finance, management science, economics (portfolio optimization, business analytics, investment plans, resource allocation, logistics, ...)
• Engineering (engineering design, process optimization, embedded control, ...)
• Artificial intelligence (machine learning, data science, autonomous driving, ...)
• Myriads of other applications (transportation, smart grids, water networks, sports scheduling, health-care, oil & gas, space, ...)

What this course is about:
• How to formulate a decision problem as a numerical optimization problem? (modeling)
• Which numerical algorithm is most appropriate to solve the problem? (algorithms)
• What’s the theory behind the algorithm? (theory)

Course contents
• Optimization modeling
  – Linear models
  – Convex models
• Optimization theory
  – Optimality conditions, sensitivity analysis
  – Duality
• Optimization algorithms
  – Basics of numerical linear algebra
  – Convex programming
  – Nonlinear programming

Other references
• Stephen Boyd’s “Convex Optimization” courses at Stanford: http://ee364a.stanford.edu http://ee364b.stanford.edu
• Lieven Vandenberghe’s courses at UCLA: http://www.seas.ucla.edu/~vandenbe/
• For more tutorials/books see http://plato.asu.edu/sub/tutorials.html

Optimization modeling

What is optimization?
• Optimization = assign values to a set of decision variables so as to optimize a certain objective function
• Example: Which is the best velocity to minimize fuel consumption?

[Figure: fuel consumption [ℓ/km] versus velocity [km/h], velocity axis from 0 to 160 km/h]
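The fuel-versus-velocity example can be made concrete with a one-variable minimization. The sketch below is only an illustration: the consumption curve `fuel_per_km` is a made-up convex function (the slides do not give one), and golden-section search is just one simple method for a unimodal objective on an interval, chosen here because it needs nothing beyond the Python standard library.

```python
import math


def fuel_per_km(v_kmh: float) -> float:
    """Hypothetical fuel consumption in litres per km (illustrative only).

    The 1/v term stands for a fixed hourly burn spread over the distance
    covered; the v**2 term stands for drag-like losses at high speed.
    """
    return 2.0 / v_kmh + 1.0e-5 * v_kmh ** 2


def golden_section_min(f, lo, hi, tol=1e-6):
    """Minimize a unimodal function f on [lo, hi] by golden-section search."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0  # about 0.618
    a, b = lo, hi
    c = b - inv_phi * (b - a)
    d = a + inv_phi * (b - a)
    while b - a > tol:
        if f(c) < f(d):
            # The minimum lies in [a, d]; reuse c as the new right probe.
            b, d = d, c
            c = b - inv_phi * (b - a)
        else:
            # The minimum lies in [c, b]; reuse d as the new left probe.
            a, c = c, d
            d = a + inv_phi * (b - a)
    return (a + b) / 2.0


# Decision variable: velocity in km/h, restricted to an assumed range.
best_v = golden_section_min(fuel_per_km, 30.0, 160.0)
```

For this particular made-up curve the answer can be checked by hand: setting the derivative to zero gives v³ = 10⁵, so the search should settle near the cube root of 100000 km/h.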

Using the COIN-OR Server

Using the COIN-OR Server
Your CoinEasy Team
November 16, 2009

1 Overview

This document is part of the CoinEasy project. See projects.coin-or.org/CoinEasy. In this document we describe the options available to users of COIN-OR who are interested in solving optimization problems but do not wish to compile source code in order to build the COIN-OR projects. In particular, we show how the user can send optimization problems to a COIN-OR server and get the solution result back. The COIN-OR server, webdss.ise.ufl.edu, is a 2x Intel(R) Xeon(TM) CPU 3.06GHz 512MiB L2 1024MiB L3, 2GiB DRAM, 4x73GiB scsi disk 2xGigE machine. This server allows the user to directly access the following COIN-OR optimization solvers:

• Bonmin: a solver for mixed-integer nonlinear optimization
• Cbc: a solver for mixed-integer linear programs
• Clp: a linear programming solver
• Couenne: a solver for mixed-integer nonlinear optimization problems that is capable of global optimization
• DyLP: a linear programming solver
• Ipopt: an interior point nonlinear optimization solver
• SYMPHONY: a mixed-integer linear solver that can be executed in either parallel (distributed or shared memory) or sequential modes
• Vol: a linear programming solver

All of these solvers on the COIN-OR server may be accessed through either the GAMS or AMPL modeling languages. In Section 2.1 we describe how to use the solvers using the GAMS modeling language. In Section 2.2 we describe how to call the solvers using the AMPL modeling language. In Section 3 we describe how to call the solvers using a command line executable program OSSolverService.exe (or OSSolverService for Linux/Mac OS X users; in the rest of the document we refer to this executable using a .exe extension).

MINLP with Combined Interior Point and Active Set Methods

MINLP with Combined Interior Point and Active Set Methods
Jose L. Mojica, Adam D. Lewis, John D. Hedengren
Brigham Young University
INFORMS 2013, Minneapolis, MN
Oct 9, 2013 | APMonitor.com | APOPT.com | Brigham Young University

Presentation Overview
• NLP Benchmarking
  – Hock-Schittkowski
  – Dynamic optimization
  – Biological models
• Combining Interior Point and Active Set
• MINLP Benchmarking
  – MacMINLP
• MINLP Model Predictive Control
  – Chiller Thermal Energy Storage
  – Unmanned Aerial Systems
• Future Developments

Overview of Benchmark Testing

NLP Benchmark Testing
• Solvers: APOPT [1], BPOPT [1], IPOPT [2], SNOPT [3], MINOS [3]
• Problem characteristics: Hock-Schittkowski, Dynamic Opt, SBML
• Nonlinear Programming (NLP)
• Differential Algebraic Equations (DAEs)
• APMonitor Modeling Language

$$\min\ J(x, y, u) \quad \text{s.t.} \quad 0 = f\!\left(\frac{\partial x}{\partial t}, x, y, u\right), \quad 0 = g(x, y, u), \quad 0 \le h(x, y, u), \quad x, y \in \mathbb{R}^n,\ u \in \mathbb{R}^m$$

MINLP Benchmark Testing
• Solvers: APOPT [1], BPOPT [1], BONMIN [2]
• Problem characteristics: MacMINLP, Industrial Test Set
• Mixed Integer Nonlinear Programming (MINLP)
• Mixed Integer Differential Algebraic Equations (MIDAEs)
• APMonitor & AMPL Modeling Language

$$\min\ J(x, y, u, z) \quad \text{s.t.} \quad 0 = f\!\left(\frac{\partial x}{\partial t}, x, y, u, z\right), \quad 0 = g(x, y, u, z), \quad 0 \le h(x, y, u, z), \quad x, y \in \mathbb{R}^n,\ u \in \mathbb{R}^m,\ z \in \mathbb{Z}^m$$

[1] APS, LLC; [2] EPL; [3] SBS, Inc.

[Figure: NLP Benchmark – Summary (494). Benchmark results on 494 problems for APOPT+BPOPT, APOPT 1.0, BPOPT 1.0, IPOPT 3.10, IPOPT 2.3, SNOPT 6.1 and MINOS 5.5; vertical axis: Percentage (%); horizontal axis label begins "Not worse than 2 times slower than"]

Specifying “Logical” Conditions in AMPL Optimization Models

Specifying “Logical” Conditions in AMPL Optimization Models
Robert Fourer
AMPL Optimization, www.ampl.com, 773-336-AMPL
INFORMS Annual Meeting, Phoenix, Arizona, 14-17 October 2012
Session SA15, Software Demonstrations

New and Forthcoming Developments in the AMPL Modeling Language and System

Optimization modelers are often stymied by the complications of converting problem logic into algebraic constraints suitable for solvers. The AMPL modeling language thus allows various logical conditions to be described directly. Additionally a new interface to the ILOG CP solver handles logic in a natural way not requiring conventional transformations.

AMPL News
• Free AMPL book chapters
• AMPL for Courses
• Extended function library
• Extended support for “logical” conditions
  – AMPL driver for CPLEX Opt Studio “Concert” C++ interface
  – Support for ILOG CP constraint programming solver
  – Support for “logical” constraints in CPLEX
• INFORMS Impact Prize to . . .
  – Originators of AIMMS, AMPL, GAMS, LINDO, MPL
  – Awards presented Sunday 8:30-9:45, Conv Ctr West 101
  – Doors close 8:45!

AMPL Book
• Chapters now free for download: www.ampl.com/BOOK/download.html
• Bound copies remain available purchase from usual

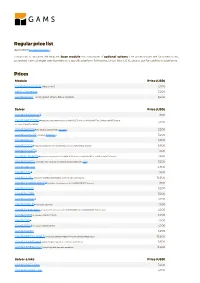

Standard Price List

Regular price list April 2021

This price list includes the required base module and a number of optional solvers. The prices shown are for unrestricted, perpetual named single user licenses on a specific platform (Windows, Linux, Mac OS X); please ask about additional platforms.

Prices

| Module | Price (USD) |
|---|---|
| GAMS/Base Module (required) | 3,200 |
| MIRO Connector | 3,200 |
| GAMS/Secure - encrypted Work Files Option | 3,200 |

| Solver | Price (USD) |
|---|---|
| GAMS/ALPHAECP [1] | 1,600 |
| GAMS/ANTIGONE [1] (requires the presence of a GAMS/CPLEX and a GAMS/SNOPT or GAMS/CONOPT license, includes GAMS/GLOMIQO) | 3,200 |
| GAMS/BARON [1] | 3,200 |
| GAMS/CONOPT (includes CONOPT 4) | 3,200 |
| GAMS/CPLEX | 9,600 |
| GAMS/DECIS [1] (requires presence of a GAMS/CPLEX or a GAMS/MINOS license) | 9,600 |
| GAMS/DICOPT [1] | 1,600 |
| GAMS/GLOMIQO [1] (requires presence of a GAMS/CPLEX and a GAMS/SNOPT or GAMS/CONOPT license) | 1,600 |
| GAMS/IPOPTH (includes HSL routines) | 3,200 |
| GAMS/KNITRO | 4,800 |
| GAMS/LGO [2] | 1,600 |
| GAMS/LINDO (includes GAMS/LINDOGLOBAL with no size restrictions) | 12,800 |
| GAMS/LINDOGLOBAL [2] (requires the presence of a GAMS/CONOPT license) | 1,600 |
| GAMS/MINOS | 3,200 |
| GAMS/MOSEK | 3,200 |
| GAMS/MPSGE [1] | 3,200 |
| GAMS/MSNLP [1] (includes LSGRG2) | 1,600 |
| GAMS/ODHeuristic (requires the presence of a GAMS/CPLEX or a GAMS/CPLEX-link license) | 3,200 |
| GAMS/PATH (includes GAMS/PATHNLP) | 3,200 |
| GAMS/SBB [1] | 1,600 |
| GAMS/SCIP [1] (includes GAMS/SOPLEX) | 3,200 |
| GAMS/SNOPT | 3,200 |
| GAMS/XPRESS-MINLP (includes GAMS/XPRESS-MIP and GAMS/XPRESS-NLP) | 12,800 |
| GAMS/XPRESS-MIP (everything but general nonlinear equations) | 9,600 |
| GAMS/XPRESS-NLP (everything but discrete variables) | 6,400 |

| Solver-Links | Price (USD) |
|---|---|
| GAMS/CPLEX Link | 3,200 |
| GAMS/GUROBI Link | 3,200 |
| GAMS/MOSEK Link | 1,600 |
| GAMS/XPRESS Link | 3,200 |

General information

The GAMS Base Module includes the GAMS Language Compiler, GAMS-APIs, and many utilities.

Polyhedral Outer Approximations in Convex Mixed-Integer Nonlinear

Polyhedral Outer Approximations in Convex Mixed-Integer Nonlinear Programming
Jan Kronqvist
PhD Thesis in Process Design and Systems Engineering
Faculty of Science and Engineering, Åbo Akademi University
Åbo, Finland 2018

Dissertations published by Process Design and Systems Engineering
ISSN 2489-7272
978-952-12-3734-8
978-952-12-3735-5 (pdf)
Painosalama Oy, Åbo 2018

Preface

My time as a PhD student began back in 2014 when I was given a position in the Optimization and Systems Engineering (OSE) group at Åbo Akademi University. It has been a great time, and many people have contributed to making these years a wonderful experience. During these years, I was given the opportunity to teach several courses in both environmental engineering and process systems engineering. The opportunity to teach these courses has been a great experience for me.

I want to express my greatest gratitude to my supervisors Prof. Tapio Westerlund and Docent Andreas Lundell. Tapio has been a true source of inspiration and a good friend during these years. Thanks to Tapio, I have been able to be a part of an international research environment.

Performance of Optimization Software - an Update

Performance of Optimization Software - an Update
INFORMS Annual 2011, Charlotte, NC, 13-18 November 2011
H. D. Mittelmann, School of Math and Stat Sciences, Arizona State University

Services we provide
• Guide to Software: "Decision Tree"
• http://plato.asu.edu/guide.html
• Software Archive
• Software Evaluation: "Benchmarks"
• Archive of Testproblems
• Web-based Solvers (1/3 of NEOS)

We maintain the following NEOS solvers (8 categories)

Combinatorial Optimization
* CONCORDE [TSP Input]

Global Optimization
* ICOS [AMPL Input]

Linear Programming
* bpmpd [AMPL Input][LP Input][MPS Input][QPS Input]

Mixed Integer Linear Programming
* FEASPUMP [AMPL Input][CPLEX Input][MPS Input]
* SCIP [AMPL Input][CPLEX Input][MPS Input][ZIMPL Input]
* qsopt_ex [LP Input][MPS Input][AMPL Input]

Nondifferentiable Optimization
* condor [AMPL Input]

Semi-infinite Optimization
* nsips [AMPL Input]

Stochastic Linear Programming
* bnbs [SMPS Input]
* DDSIP [LP Input][MPS Input]

Semidefinite (and SOCP) Programming
* csdp [MATLAB_BINARY Input][SPARSE_SDPA Input]
* penbmi [MATLAB Input][MATLAB_BINARY Input]
* pensdp [MATLAB_BINARY Input][SPARSE_SDPA Input]
* sdpa [MATLAB_BINARY Input][SPARSE_SDPA Input]
* sdplr [MATLAB_BINARY Input][SDPLR Input][SPARSE_SDPA Input]
* sdpt3 [MATLAB_BINARY Input][SPARSE_SDPA Input]
* sedumi [MATLAB_BINARY Input][SPARSE_SDPA Input]

Overview of Talk
• Current and Selected(*) Benchmarks
  – Parallel LP benchmarks
  – MILP benchmark (MIPLIB2010)
  – Feasibility/Infeasibility Detection benchmarks

Julia: a Modern Language for Modern ML

Julia: A modern language for modern ML
Dr. Viral Shah and Dr. Simon Byrne
www.juliacomputing.com

What we do: Modernize Technical Computing

Today’s technical computing landscape:
• Develop new learning algorithms
• Run them in parallel on large datasets
• Leverage accelerators like GPUs, Xeon Phis
• Embed into intelligent products

“Business as usual” will simply not do!

General Micro-benchmarks: Julia performs almost as fast as C
• 10X faster than Python
• 100X faster than R & MATLAB

Performance benchmark relative to C. A value of 1 means as fast as C. Lower values are better.

A real application: Gillespie simulations in systems biology (745x faster than R)
• Gillespie simulations are used in the field of drug discovery.
• Also used for simulations of epidemiological models to study disease propagation
• Julia package (Gillespie.jl) is the state of the art in Gillespie simulations
• https://github.com/openjournals/joss-papers/blob/master/joss.00042/10.21105.joss.00042.pdf

| Implementation | Time per simulation (ms) |
|---|---|
| R (GillespieSSA) | 894.25 |
| R (handcoded) | 1087.94 |
| Rcpp (handcoded) | 1.31 |
| Julia (Gillespie.jl) | 3.99 |
| Julia (Gillespie.jl, passing object) | 1.78 |
| Julia (handcoded) | 1.2 |

Those who convert ideas to products fastest will win
• The last 25 years: Quants develop algorithms (Python, R, SAS, Matlab); Computer Scientists prepare for production (C++, C#, Java); DEPLOY
• Compress the innovation cycle with Julia: Quants and Computer Scientists collaborate on one platform - JULIA; DEPLOY

Julia offers competitive advantages to its users
• Julia is poised to become one of the leading tools deployed by developers
• Thank you for Julia. You've kindled serious excitement.
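For readers unfamiliar with the workload being timed above, a Gillespie simulation draws the waiting time to the next reaction from an exponential distribution and then picks which reaction fires in proportion to its rate. The Python sketch below applies that direct method to a simple birth-death process. It is an illustration only: the model, the parameter values and the function name `gillespie_birth_death` are assumptions, and it does not reproduce the Gillespie.jl API or the benchmark behind the table.

```python
import random


def gillespie_birth_death(n0, birth_rate, death_rate, t_end, seed=0):
    """Simulate a birth-death process with the Gillespie direct method.

    Births occur at the constant rate `birth_rate`; each of the n
    individuals dies independently at rate `death_rate`.
    Returns the event times and the population after each event.
    """
    rng = random.Random(seed)
    t, n = 0.0, n0
    times, counts = [t], [n]
    while True:
        a_birth = birth_rate          # propensity of a birth
        a_death = death_rate * n      # propensity of a death
        a_total = a_birth + a_death
        if a_total <= 0.0:
            break                     # nothing can happen any more
        t += rng.expovariate(a_total) # exponential waiting time
        if t >= t_end:
            break
        # Choose the reaction with probability proportional to its propensity.
        if rng.random() * a_total < a_birth:
            n += 1
        else:
            n -= 1
        times.append(t)
        counts.append(n)
    return times, counts


# Hypothetical parameters chosen only for the demonstration.
times, counts = gillespie_birth_death(n0=10, birth_rate=5.0, death_rate=0.5, t_end=20.0)
```

Each event costs a couple of random draws and a few arithmetic operations inside a tight loop, which is the kind of code where an interpreted loop and a compiled loop differ most in speed.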

Open Source Tools for Optimization in Python

Open Source Tools for Optimization in Python
Ted Ralphs
Sage Days Workshop, IMA, Minneapolis, MN, 21 August 2017
T.K. Ralphs (Lehigh University) | Open Source Optimization | August 21, 2017

Outline
1 Introduction
2 COIN-OR
3 Modeling Software
4 Python-based Modeling Tools
  • PuLP/DipPy
  • CyLP
  • yaposib
  • Pyomo

Caveats and Motivation

Caveats
• I have no idea about the background of the audience.
• The talk may be either too basic or too advanced.

Why am I here?
• I’m not a Sage developer or user (yet!).
• I’m hoping this will be a chance to get more involved in Sage development.
• Please ask lots of questions so as to guide me in what to dive into!

Mathematical Optimization

Mathematical optimization provides a formal language for describing and analyzing optimization problems.

Elements of the model:
• Decision variables
• Constraints
• Objective Function
• Parameters and Data

The general form of a mathematical optimization problem is:

$$\min \text{ or } \max\ f(x) \tag{1}$$
$$\text{s.t.}\quad g_i(x) \begin{Bmatrix} \le \\ = \\ \ge \end{Bmatrix} b_i \tag{2}$$
$$x \in X \tag{3}$$

where $X \subseteq \mathbb{R}^n$ might be a discrete set.

Types of Mathematical Optimization Problems

The type of a mathematical optimization problem is determined primarily by
• The form of the objective and the constraints.
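The general form (1)-(3) maps directly onto a small data structure: an objective f, a list of constraints each made of a function g_i, a sense (≤, = or ≥) and a right-hand side b_i, and a set X. The Python sketch below is a minimal illustration under the assumption that X is finite and small enough to enumerate. The helper name `solve_by_enumeration` and the toy problem are hypothetical, and none of the modeling tools listed in the outline (PuLP, CyLP, yaposib, Pyomo) is used.

```python
import operator
from itertools import product

# Map each constraint sense in (2) to the matching comparison.
SENSES = {"<=": operator.le, "==": operator.eq, ">=": operator.ge}


def solve_by_enumeration(f, constraints, X, maximize=False):
    """Optimize f over the finite set X subject to the given constraints.

    `constraints` is an iterable of (g_i, sense, b_i) triples.
    Returns (best_x, best_value), or (None, None) if no point is feasible.
    """
    best_x, best_val = None, None
    for x in X:
        feasible = all(SENSES[sense](g(x), b) for g, sense, b in constraints)
        if not feasible:
            continue
        val = f(x)
        better = best_val is None or (val > best_val if maximize else val < best_val)
        if better:
            best_x, best_val = x, val
    return best_x, best_val


# Toy problem: maximize 3*x1 + 2*x2 with x1, x2 integers in {0, ..., 4},
# subject to x1 + x2 <= 4 and x1 <= 3.
X = list(product(range(5), repeat=2))
constraints = [
    (lambda x: x[0] + x[1], "<=", 4),
    (lambda x: x[0], "<=", 3),
]
best_x, best_val = solve_by_enumeration(
    lambda x: 3 * x[0] + 2 * x[1], constraints, X, maximize=True
)
```

Enumeration only works because X here has 25 points. The number of points grows exponentially with the number of variables, which is why practical tools hand the same three ingredients (objective, constraints, domain) to a dedicated solver instead.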