Documentation, Written in Java, Scheme, XML and Javadoc

Recommended publications

38th Meeting Held in Ghent, Belgium, Friday 19 November 2004

Formal Methods Europe Minutes of the 38th meeting Held in Ghent, Belgium Friday 19 November 2004 Present at the meeting were: • Raymond Boute • Neville Dean • Steve Dunne • John Fitzgerald (Chairman) • Valerie Harvey • Franz Lichtenberger • Dino Mandrioli • José Oliveira • Nico Plat (Secretary) • Kees Pronk (Treasurer) • Ken Robinson • Simão Melo de Sousa • Marcel Verhoef Apologies had been received from: Eerke Boiten, Jonathan Bowen, Ana Cavalcante, Tim Denvir, Alessandro Fantechi, Stefania Gnesi, Shmuel Katz, Steve King, Jan Tretmans, Teemu Tynjala, Jim Woodcock. 1 Welcome John Fitzgerald welcomed all those present at the meeting. He thanked Raymond Boute for his work organising the meeting. He briefly introduced FME and its aims for those normally not present at an FME meeting. 2 Agree upon agenda Item 5 (FME logo) was deleted. A report on ISOLA by Marcel Verhoef was added to the agenda. 3 Minutes of the meeting held in Newcastle upon Tyne on 6 September 2004 The minutes of the meeting were approved without modification. 4 Action list Action 34/7: Done, see item 10 of these minutes. Action closed. Action 37/1: Done. The paper is now available at www.fmeurope.org under the “Formal methods” -> “Education” section. Action closed. Action 37/2: Done but no response received as yet. Action closed. Action 37/3: Done, action closed. Jonathan Bowen had reported by e-mail that there is no real progress and that he is very busy with other commitments at the moment. If a good EC call presents itself that would be motivational. Jonathan would prefer, however, that someone else would take a more leading role in reviving it, with Jonathan as a backup.

Formal Methods: from Academia to Industrial Practice a Travel Guide

Formal Methods: From Academia to Industrial Practice A Travel Guide Marieke Huisman Department of Computer Science (FMT), UT, P.O. Box 217, 7500 AE Enschede, The Netherlands Dilian Gurov KTH Royal Institute of Technology, Lindstedtsvägen 3, SE-100 44 Stockholm, Sweden Alexander Malkis Department of Informatics (I4), TUM, Boltzmannstr. 3, 85748 Garching, Germany 17 February 2020 Abstract For many decades, formal methods are considered to be the way forward to help the software industry to make more reliable and trustworthy software. However, despite this strong belief and many individual success stories, no real change in industrial software development seems to be occurring. In fact, the software industry itself is moving forward rapidly, and the gap between what formal methods can achieve and the daily software-development practice does not appear to be getting smaller (and might even be growing). In the past, many recommendations have already been made on how to develop formal-methods research in order to close this gap. This paper investigates why the gap nevertheless still exists and provides its own recommendations on what can be done by the formal-methods–research community to bridge it. Our recommendations do not focus on open research questions. In fact, formal-methods tools and techniques are already of high quality and can address many non-trivial problems; we do give some technical recommendations on how tools and techniques can be made more accessible. To a greater extent, we focus on the human aspect: how to achieve impact, how to change the way of thinking of the various stakeholders about this issue, and in particular, as a research community, how to alter our behaviour, and instead of competing, collaborate to address this issue.

Formal Methods Specification and Verification Guidebook for Software and Computer Systems Volume I: Planning and Technology Insertion

OFFICE OF SAFETY AND MISSION ASSURANCE NASA-GB-002-95 RELEASE 1.0 FORMAL METHODS SPECIFICATION AND VERIFICATION GUIDEBOOK FOR SOFTWARE AND COMPUTER SYSTEMS VOLUME I: PLANNING AND TECHNOLOGY INSERTION JULY 1995 NATIONAL AERONAUTICS AND SPACE ADMINISTRATION WASHINGTON, DC 20546 NASA-GB-002-95 Release 1.0 FORMAL METHODS SPECIFICATION AND VERIFICATION GUIDEBOOK FOR SOFTWARE AND COMPUTER SYSTEMS VOLUME I: PLANNING AND TECHNOLOGY INSERTION FOREWORD The Formal Methods Specification and Verification Guidebook for Software and Computer Systems describes a set of techniques called Formal Methods (FM), and outlines their use in the specification and verification of computer systems and software. Development of increasingly complex systems has created a need for improved specification and verification techniques. NASA's Safety and Mission Quality Office has supported the investigation of techniques such as FM, which are now an accepted method for enhancing the quality of aerospace applications. The guidebook provides information for managers and practitioners who are interested in integrating FM into an existing systems development process. Information includes technical and administrative considerations that must be addressed when establishing the use of FM on a specific project. The guidebook is intended to aid decision makers in the successful application of FM to the development of high-quality systems at reasonable cost. This is the first volume of a planned two-volume set. The current volume focuses on administrative and planning considerations for the successful application of FM. Volume II will contain more technical information for the FM practitioner, and will be released at a later date.
Major contributors to the guidebook include, from the Jet Propulsion Laboratory: Rick Covington (editor), John Kelly (task lead), and Robyn Lutz; from Johnson Space Center: David Hamilton (Loral) and Dan Bowman (Loral); from Langley Research Center: Ben DiVito (VIGYAN) and Judith Crow (SRI International); and from NASA HQ Code Q: Alice Robinson.

Winter 1998 ISSN 1361-3103

FACS Europe. The Newsletter of the BCS Formal Aspects of Computing Science Special Interest Group and Formal Methods Europe. Series I Vol. 3, No. 4, Winter 1998 ISSN 1361-3103 1 Editorial Apologies to all our readers for the interruption in publication. Hopefully, we are now back on track, with a new editorial team taking over from the next issue. However, this, along with various problems in staging events last year, has really brought home to FACS committee how overstretched we are at times, and how much in need of new active committee members. The will is there, but often the time is not... So please, if YOU can help FACS make a good start into the next 20 years, get in touch with us and make an offer of help! Our main needs are for: event organizers; newsletter contributors; and above all, thinkers and movers with good ideas and time/energy to bring them through to effect. We tend to work mainly by e-mail, and meet a couple of times a year face to face. 1.1 FACS is 20! The theme for this issue is '20 Years of BCS-FACS'. We have two special pieces: a guest piece from a long-time contributor from earlier years, F X Reid, and also a parting (alas!) piece from Dan Simpson, who is resigning from the committee after many long years of much appreciated support.

FM'99 - Formal Methods

Lecture Notes in Computer Science 1708 FM'99 - Formal Methods World Congress on Formal Methods in the Development of Computing Systems, Toulouse, France, September 20-24, 1999, Proceedings, Volume I Edited by Jeannette M. Wing, Jim Woodcock, Jim Davies 1st edition 1999. Paperback. xxxvi, 940 pp. ISBN 978 3 540 66587 8 Format (W x L): 15.5 x 23.5 cm Weight: 1427 g Further subject areas > Engineering > General engineering > Computer applications in engineering Preface Formal methods are coming of age. Mathematical techniques and tools are now regarded as an important part of the development process in a wide range of industrial and governmental organisations. A transfer of technology into the mainstream of systems development is slowly, but surely, taking place. FM'99, the First World Congress on Formal Methods in the Development of Computing Systems, is a result, and a measure, of this new-found maturity. It brings an impressive array of industrial and applications-oriented papers that show how formal methods have been used to tackle real problems. These proceedings are a record of the technical symposium of FM'99: alongside the papers describing applications of formal methods, you will find technical reports, papers, and abstracts detailing new advances in formal techniques, from mathematical foundations to practical tools.

FACS FACTS Newsletter

FACS FACTS The Newsletter of the Formal Aspects of Computing Science (FACS) Specialist Group Issue 2009-1 July 2009 About FACS FACTS FACS FACTS [ISSN: 0950-1231] is the newsletter of the BCS Specialist Group on Formal Aspects of Computing Science (FACS). FACS FACTS is distributed in electronic form to all FACS members. Submissions to FACS FACTS are always welcome. Please visit the newsletter area of the FACS website [http://www.bcs-facs.org/newsletter] for further details. Back issues of FACS FACTS are available to download from: http://www.bcs-facs.org/newsletter/facsfactsarchive.html The FACS FACTS Team Newsletter Editor Margaret West [[email protected]] Editorial Team Paul Boca, Jonathan Bowen, Jawed Siddiqi Contributors to this Issue Paul Boca, Jonathan Bowen, Tim Denvir, John Fitzgerald, Anthony Hall, Jawed Siddiqi, Margaret West If you have any questions about FACS, please send these to Paul Boca [[email protected]] Peter John Landin (1930–2009) It is with great sadness that we note the death of Peter Landin on June 3rd 2009. Peter was a major contributor to Computer Science in general, and to semantics and functional programming in particular. An obituary will be published in the next issue. Readers with personal recollections of Peter are invited to contact the editor so that these can also be included. Editorial Some brief news follows of our activities this year and proposed activities for next year. Our FACS evening seminar series commenced as usual in autumn 2008.

Formal Methods Specification and Analysis Guidebook for the Verification of Software and Computer Systems

OFFICE OF SAFETY AND MISSION ASSURANCE NASA-GB-001-97 RELEASE 1.0 Formal Methods Specification and Analysis Guidebook for the Verification of Software and Computer Systems Volume II: A Practitioner’s Companion May 1997 National Aeronautics and Space Administration Washington, DC 20546 NASA-GB-001-97 Release 1.0 FORMAL METHODS SPECIFICATION AND ANALYSIS GUIDEBOOK FOR THE VERIFICATION OF SOFTWARE AND COMPUTER SYSTEMS VOLUME II: A PRACTITIONER’S COMPANION FOREWORD This volume presents technical issues involved in applying mathematical techniques known as Formal Methods to specify and analytically verify aerospace and avionics software systems. The first volume, NASA-GB-002-95 [NASA-95a], dealt with planning and technology insertion. This second volume discusses practical techniques and strategies for verifying requirements and high-level designs for software intensive systems. The discussion is illustrated with a realistic example based on NASA’s Simplified Aid for EVA (Extravehicular Activity) Rescue [SAFER94a, SAFER94b]. The volume is intended as a “companion” and guide for the novice formal methods and analytical verification practitioner. Together, the two volumes address the recognized need for new technologies and improved techniques to meet the demands inherent in developing increasingly complex and autonomous systems. The support of NASA’s Safety and Mission Quality Office for the investigation of formal methods and analytical verification techniques reflects the growing practicality of these approaches for enhancing the quality of aerospace and avionics applications. Major contributors to the guidebook include Judith Crow, lead author (SRI International); Ben Di Vito, SAFER example author (ViGYAN); Robyn Lutz (NASA - JPL); Larry Roberts (Lockheed Martin Space Mission Systems and Services); Martin Feather (NASA - JPL); and John Kelly (NASA - JPL), task lead.

Alaris Capture Pro Software

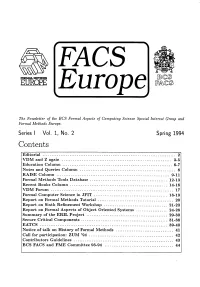

FACS FORMAL METHODS EUROPE The Newsletter of the BCS Formal Aspects of Computing Science Special Interest Group and Formal Methods Europe. Series I Vol. 1, No. 2 Spring 1994 Contents: Editorial (2); VDM and Z again (3-5); Education Column (6-7); Notes and Queries Column (8); RAISE Column (9-11); Formal Methods Tools Database (12-13); Recent Books Column (14-16); VDM Forum (17); Formal Computer Science in JFIT (18-19); Report on Formal Methods Tutorial (20); Report on Sixth Refinement Workshop (21-23); Report on Formal Aspects of Object Oriented Systems (24-28); Summary of the ERIL Project (29-30); Secure Critical Components (31-38); EATCS (39-40); Notice of talk on History of Formal Methods (41); Call for participation: ZUM '94

BCS-FACS: a Report from the Trenches

FACS FACTS The Newsletter of the BCS Specialist Group in Formal Aspects of Computing Science Issue 2003-1 July 2003 ISSN 0950-1231 Contents: Editorial (1); BCS-FACS: A report from the trenches (2); Some events sponsored/organised by the BCS-FACS group (8); Conference and Workshop Reports (10); ZB2003 conference report (10); FORTEST meeting report (12); Object Oriented Technology in Aviation Workshop (14); Book Announcements (15); Some Forthcoming Events (16); European Association for Theoretical Computer Science (17); Posts in Formal Methods (18); FACS Officers (18). Editorial FACS FACTS is the newsletter of the BCS Formal Aspects of Computing Science (FACS) specialist group. It publishes reports on events organised or attended by the group, and announcements of relevant conferences, books, etc. Previously it was known as FACS EUROPE as it was published in association with the FM

Of Teaching Formal Methods

Preface This volume contains the proceedings of TFM 2009, the Second International FME Conference on Teaching Formal Methods, organized by the Subgroup of Education of the Formal Methods Europe (FME) association. The conference took place as part of the first Formal Methods Week (FMWeek), held in Eindhoven, The Netherlands, in November 2009. TFM 2009 was a one-day forum in which to explore the successes and failures of Formal Methods (FM) education, and to promote cooperative projects to further education and training in FMs. The organizers gathered lecturers, teachers, and industrial partners to discuss their experience, present their pedagogical methodologies, and explore best practices. Interest in formal method teaching is growing. TFM 2009 followed in a series of events on teaching formal methods which includes two BCS-FACS TFM workshops (Oxford in 2003, and London in 2006), the TFM 2004 conference (Ghent, 2004, with proceedings published as Springer LNCS Volume 3294), the FM-Ed 2006 workshop (Hamilton, co-located with FM 2006), FORMED (Budapest, at ETAPS 2008), and FMET 2008 (Kitakyushu, co-located with ICFEM 2008). Formal methods have an important role to play in the development of complex computing systems, a role acknowledged in industrial standards such as IEC 61508 and ISO/IEC 15408, and in the increasing use of precise modelling notations, semantic markup languages, and model-driven techniques. There is a growing need for software engineers who can work effectively with simple, mathematical abstractions, and with

FM2009 Is the Sixteenth Symposium in a Series of the Formal Methods Europe Association

16TH INTERNATIONAL SYMPOSIUM ON FORMAL METHODS NOVEMBER 2-6, 2009 • TECHNISCHE UNIVERSITEIT EINDHOVEN • THE NETHERLANDS FM2009 is the sixteenth symposium in a series of the Formal Methods Europe association. The symposium will be organized as a world congress. Ten years after FM'99 in Toulouse, the first world congress, the formal methods communities from all over the world will again have the opportunity to meet. Therefore, FM2009 will be both an occasion to celebrate, and a platform for enthusiastic researchers and practitioners from a diversity of backgrounds and schools to exchange their ideas and share their experience. Reflecting the global span of FM2009, the Programme Committee has been recruited from all over the world (see overleaf). For the technical symposium, papers on every aspect of the development and application of formal methods for the improvement of the current practice on system developments are invited for submission. Of particular interest are papers on tools and industrial applications: there will be a special track devoted to this topic. The proceedings of FM2009 will be published in Springer's Lecture Notes in Computer Science series. Submitted papers should not have been submitted elsewhere for publication, should be in Springer's LNCS format (as described in: www.springer.com/lncs), and should not exceed 16 pages including appendices. Please note that an accepted paper will be published in the Proceedings only if at least one of its authors attends the symposium, in order to present their accepted work. INVITED SPEAKERS: Wan Fokkink, NL; Carroll Morgan, Australia; Colin O'Halloran, UK; Sriram Rajamani, India; Jeannette Wing, USA. PC CHAIRS

Lecture Notes in Computer Science 2021 Edited by G

Lecture Notes in Computer Science 2021 Edited by G. Goos, J. Hartmanis and J. van Leeuwen Berlin Heidelberg New York Barcelona Hong Kong London Milan Paris Singapore Tokyo José Nuno Oliveira Pamela Zave (Eds.) FME 2001: Formal Methods for Increasing Software Productivity International Symposium of Formal Methods Europe Berlin, Germany, March 12-16, 2001 Proceedings Series Editors Gerhard Goos, Karlsruhe University, Germany Juris Hartmanis, Cornell University, NY, USA Jan van Leeuwen, Utrecht University, The Netherlands Volume Editors José Nuno Oliveira University of Minho, Computer Science Department Campus de Gualtar, 4700-320 Braga, Portugal E-mail: [email protected] Pamela Zave AT&T Laboratories – Research 180 Park Avenue, Florham Park, New Jersey 07932, USA E-mail: [email protected] Cataloging-in-Publication Data applied for Die Deutsche Bibliothek - CIP-Einheitsaufnahme Formal methods for increasing software productivity : proceedings / FME 2001, International Symposium of Formal Methods Europe, Berlin, Germany, March 12 - 16, 2001. José Nuno Oliveira ; Pamela Zave (ed.). - Berlin ; Heidelberg ; New York ; Barcelona ; Hong Kong ; London ; Milan ; Paris ; Singapore ; Tokyo : Springer, 2001 (Lecture notes in computer science ; Vol. 2021) ISBN 3-540-41791-5 CR Subject Classification (1998): F.3, D.1-3, J.1, K.6, F.4.1 ISSN 0302-9743 ISBN 3-540-41791-5 Springer-Verlag Berlin Heidelberg New York This work is subject to copyright. All rights are reserved, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, re-use of illustrations, recitation, broadcasting, reproduction on microfilms or in any other way, and storage in data banks.