Downloading Remote Data Sets

Recommended publications

-

Memory,Ritual and Place in Africa TWIN CITIES AFRICANIST SYMPOSIUM

Sacred Ground: Memory,Ritual and Place in Africa TWIN CITIES AFRICANIST SYMPOSIUM Carleton College February 21-22, 2003 Events Schedule Friday, February 21 Great Hall, 4 to 9 p.m. Welcoming Remarks Allen Isaacman, University of Minnesota Keynote Lecture “The Politics and Poetics of Sacred Sites” Sandra Greene, Professor of History, Cornell University 4 to 6 p.m. Reception with African Food, Live Music Musical performance by Jalibah Kuyateh and the Mandingo Griot Ensemble 6 to 9 p.m. Saturday, February 22 Alumni Guest House Meeting Room Morning panel: 9 to 10:30 a.m. Theme: Sacred Ground: Memory, Ritual and Place in Africa Chair: Sandra Greene, Cornell University William Moseley, Department of Geography, Macalester College, “Leaving Hallowed Practices for Hollow Ground: Wealth, Poverty and Cotton Production in Southern Mali” Kathryn Linn Geurts, Department of Anthropology, Hamline University, “Migration Myths, Landscape, and Cultural Memory in Southeastern Ghana” Jamie Monson, Department of History, Carleton College, “From Protective Lions to Angry Spirits: Local Discourses of Land Degradation in Tanzania” Cynthia Becker, Department of Art History, University of St. Thomas, “Zaouia: Sacred Space, Sufism and Slavery in the Trans-Sahara Caravan Trade” Coffee Break Mid-Morning panel: 11 a.m. to 12:30 p.m. Theme: Memory, Ritual and Performance in Africa Chair: Dianna Shandy, Macalester College Michele Wagner, Department of History, University of Minnesota, “Reburial in Rwanda: Ritual of Healing or Ritual of Revenge?” Tommie Jackson, Department of English, St. Cloud State University, “‘Fences’ in the drama by August Wilson and ‘Sizwe Bansi is Dead,’ by Athol Fugard” Helena Pohlandt-McCormick, Department of History, University of Minnesota, “Memory and Violence in Soweto” Pamela Feldman-Savelsberg, Department of Anthropology, Carleton College, “Remembering the Troubles: Collective Memory and Reproduction in Cameroon” Break 12:30 to 2 p.m. -

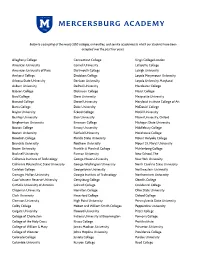

Below Is a Sampling of the Nearly 500 Colleges, Universities, and Service Academies to Which Our Students Have Been Accepted Over the Past Four Years

Below is a sampling of the nearly 500 colleges, universities, and service academies to which our students have been accepted over the past four years. Allegheny College Connecticut College King’s College London American University Cornell University Lafayette College American University of Paris Dartmouth College Lehigh University Amherst College Davidson College Loyola Marymount University Arizona State University Denison University Loyola University Maryland Auburn University DePaul University Macalester College Babson College Dickinson College Marist College Bard College Drew University Marquette University Barnard College Drexel University Maryland Institute College of Art Bates College Duke University McDaniel College Baylor University Eckerd College McGill University Bentley University Elon University Miami University, Oxford Binghamton University Emerson College Michigan State University Boston College Emory University Middlebury College Boston University Fairfield University Morehouse College Bowdoin College Florida State University Mount Holyoke College Brandeis University Fordham University Mount St. Mary’s University Brown University Franklin & Marshall College Muhlenberg College Bucknell University Furman University New School, The California Institute of Technology George Mason University New York University California Polytechnic State University George Washington University North Carolina State University Carleton College Georgetown University Northeastern University Carnegie Mellon University Georgia Institute of Technology -

Class of 2018 Successes

High School Success 2017-18 A U S T I N W A L D O R F S C H O O L C L A S S O F 2 0 1 8 C O L L E G E S O F A C C E P T A N C E A N D M A T R I C U L A T I O N Agnes Scott College Eckerd College Oklahoma State University University of Arizona American University Fordham University Okl ahoma University University of Denver Austin Community College Goucher College Rider University University of Georgia Barnard College Hendrix College Sarah Lawrence College University of North Texas Bates College High Point University Seattle University University of Portland Baylor University Hobart & William Smith College Smith College University of Redlands Centre College Illinois Wesleyan University Southwestern University University of San Fransisco Colorado State University Kansas State University St. Edward's University University of Texas at Austin Connecticut College Lewis and Clark College Stephen F. Austin University University of Texas at Dallas Denison University Loyola University Chicago Texas A&M University University of Texas at San Antonio Depaul University Marymount Manhattan College Texas State University University of Wyoming Drew University Middlebury College Texas Tech University Washington University in St. Louis Drexel University Mount Holyoke College Trinity University Wesleyan College Earlham College Nova Southeastern Univeristy University of Alabama Whitman College Whittier College The Class of 2018 In tota l , e l e v e n g raduates All 1 6 graduates of the class of 2018 applied of the Class of 2018 earned to 7 4 , were accepted to 5 7 , and will $ 2 . -

Continental-Scale Links Between the Mantle and Groundwater Systems of the Western United States: Evidence from Travertine Springs and Regional He Isotope Data

VOL. 15, No. 12 A PUBLICATION OF THE GEOLOGICAL SOCIETY OF AMERICa DECEMBER 2005 Continental-scale links between the mantle and groundwater systems of the western United States: Evidence from travertine springs and regional He isotope data Inside: Continental-scale links between the mantle and groundwater systems of the western United States: Evidence from travertine springs and regional He isotope data, by DENNIS L. NEWEll, LAURA J. CROSSEY, KARL E. KARLSTROM, TOBIAS P. FISCHER, AND DAVID R. HILTON, p. 4 Section Meetings: Northeastern, p. 14 Southeastern, p. 20 South-Central, p. 27 le re sab fer e i r d e s n o I u r c e s tectonics, petrology, mantle dynamics, impacts, and syntheses reconcil- Plates, Plumes, and Paradigms ing several branches of earth science. Included are chapters that advocate edited by Gillian R. Foulger, James H. Natland, Dean C. Presnall, the plume model and ones that advocate alternative models. The book and Don L. Anderson will enjoy a long lifetime of usefulness and functions as a reference work for students, scholars, and informed lay people. It is equally valuable This beautiful compendium of work on hotspot volcanism documents the for supporting advanced undergraduate or post-graduate courses and re- development, current state-of-play, and future prospects of all branches search scientists working at the forefront of hotspot science. It is an es- of the subject. It contains extensive and indispensable reference resources sential addition to the bookshelves of every science library, earth science in the form of hotspot, tectonic, volcano and tomographic maps and cross teacher, and research scientist who aspires to understand the frontiers of sections of Earth. -

WHITMAN GEOLOGY Vol

WHITMAN GEOLOGY Vol. 1, 2006 Dear Geology Alumni; Hi! I am initiating this newsletter with the hope that it will establish a stronger connection between alumni and current students. This newsletter will not only keep you in touch with the events here at Whitman, but your responses will be a valuable resource to students as they make their choices for life outside of Whitman. I’d like to encourage you to become part of Whitman’s career consultant network. This is a network of alumni and parents who have agreed to make themselves available to talk to students about their careers and jobs. For more information or to sign up please visit this website: http://www.whitman.edu/content/career_center/parents/networking-at-whitman If you have any other words of advice for students or would like to write something to published in a subsequent newsletter we would be happy to hear from you! This newsletter will be sent out annually in November in conjunction with Whittie News. You can email me at [email protected] Best Wishes, Tara Gregg ‘05 Where in the world is Whitman Geology? This past summer Professor Bob Carson took a group of students to Tibet. For pictures and details on his trip visit this website: http://people.whitman.edu/~carsonrj/trips/Tibet/Tibetrocks2005.htm Last fall’s regional trip visited central Idaho. The trip was lead by Visiting Professor Brennan Jordan. Also attending were Professor Bob Carson and 34 students. Cooper Brossy (’03) joined the trip for a few days and led the group through Craters of the Moon National Monument. -

APRIL 2020 Newsletter

Submissions from the t- shirt design contest are Read about future plans in! Check them out on for some of the class of page 4! 2020 in the Senior Spotlights on pages 7-8! ST. OLAF COLLEGE TRIO Upward Bound Messenger March/April 2020 Volume XXXI Issue #6 wp.stolaf.edu/upward/ UB Reminders and Updates By: Mari Avaloz Although spring is generally a time we will focus on math and science start thinking about graduation, BBQs homework help and are available to and living at Olaf for the summer, we you for the remainder of the school seem to remain in a time of year. uncertainty. UB staff also feel the same and miss seeing our students in UB Summer Program person, but we are thankful for their continued dedication to the program. UB is here to remind students to SP The most up-to-date information and remember, this too will pass. about summer is detailed in the letter th Don’t lose motivation to finish the sent on April 10 . Students, please school year strong, and look forward keep up with your email regarding In This Issue: to the time we can unite again. It will updates about summer. Parents/ happen. This article highlights a few guardians, we will send more info of our most recent updates (more once we lock down more specifics. UB SPIRIT WEEK . page 2 details can be found in the letter sent Please feel free to call UB with any to participants on April 10, 2020). additional questions or concerns. WELCOME NEW STUDENTS! . -

Fall 2019 College Visits Users' Guide

Fall 2019 College Visits Users’ Guide Providence Academy College Counseling Disclaimer: The descriptions in this guide have been formed from the combined experience of PA’s college counselors, input from admission representatives, feedback from PA students and graduates, and recognized college guides. This guide does not depict all that there is to know about these campuses, nor does it mention all the strong academic offerings which may be available. We hope it helps you choose visits well and to broaden your college search! REMINDER: To attend college meetings scheduled during the Light Blue or Pink elective periods, students must obtain a college visit pass from Mrs. Peterson at least one day in advance of the visit and then, also at least one day in advance, speak with and obtain the signature of their elective course or study hall instructor . With a signed college visit pass, students may proceed directly to the college meeting at the start of the period. Tuesday, September 24 8:00 AM: University of British Columbia (Vancouver, BC) (UBC is a very large, internationally recognized research university that recruits heavily from abroad, which includes recruiting U.S. students to its campus on the edge of the Strait of Georgia in Vancouver, Canada. The massive campus requires considerable independence and self-direction, but the academic programs are widely considered to be first-rate. Prominent programs include computer science, economics, and international relations.) 8:00 AM: Lynn University (Boca Raton, FL) (A private university in Boca Raton, Fla., Lynn enrolls 2,300 undergraduate students and is considered one of the country’s most innovative colleges. -

Geochronology of Precambrian Meta-Gabbro in the Henrys Lake Mountains, Southwest Montana and Idaho B

KECK GEOLOGY CONSORTIUM PROCEEDINGS OF THE TWENTY-FOURTH ANNUAL KECK RESEARCH SYMPOSIUM IN GEOLOGY April 2011 Union College, Schenectady, NY Dr. Robert J. Varga, Editor Director, Keck Geology Consortium Pomona College Dr. Holli Frey Symposium Convenor Union College Carol Morgan Keck Geology Consortium Administrative Assistant Diane Kadyk Symposium Proceedings Layout & Design Department of Earth & Environment Franklin & Marshall College Keck Geology Consortium Geology Department, Pomona College 185 E. 6th St., Claremont, CA 91711 (909) 607-0651, [email protected], keckgeology.org ISSN# 1528-7491 The Consortium Colleges The National Science Foundation ExxonMobil Corporation KECK GEOLOGY CONSORTIUM PROCEEDINGS OF THE TWENTY-FOURTH ANNUAL KECK RESEARCH SYMPOSIUM IN GEOLOGY ISSN# 1528-7491 April 2011 Robert J. Varga Keck Geology Consortium Diane Kadyk Editor and Keck Director Pomona College Proceedings Layout & Design Pomona College 185 E 6th St., Claremont, CA Franklin & Marshall College 91711 Keck Geology Consortium Member Institutions: Amherst College, Beloit College, Carleton College, Colgate University, The College of Wooster, The Colorado College, Franklin & Marshall College, Macalester College, Mt Holyoke College, Oberlin College, Pomona College, Smith College, Trinity University, Union College, Washington & Lee University, Wesleyan University, Whitman College, Williams College 2010-2011 PROJECTS FORMATION OF BASEMENT-INVOLVED FORELAND ARCHES: INTEGRATED STRUCTURAL AND SEISMOLOGICAL RESEARCH IN THE BIGHORN MOUNTAINS, WYOMING Faculty: CHRISTINE SIDDOWAY, MEGAN ANDERSON, Colorado College, ERIC ERSLEV, University of Wyoming Students: MOLLY CHAMBERLIN, Texas A&M University, ELIZABETH DALLEY, Oberlin College, JOHN SPENCE HORNBUCKLE III, Washington and Lee University, BRYAN MCATEE, Lafayette College, DAVID OAKLEY, Williams College, DREW C. THAYER, Colorado College, CHAD TREXLER, Whitman College, TRIANA N. 
UFRET, University of Puerto Rico, BRENNAN YOUNG, Utah State University. -

Using Structural Analyses To

KECK GEOLOGY CONSORTIUM PROCEEDINGS OF THE TWENTY-FIFTH ANNUAL KECK RESEARCH SYMPOSIUM IN GEOLOGY April 2012 Amherst College, Amherst, MA Dr. Robert J. Varga, Editor Director, Keck Geology Consortium Pomona College Dr. Tekla Harms Symposium Convenor Amherst College Carol Morgan Keck Geology Consortium Administrative Assistant Diane Kadyk Symposium Proceedings Layout & Design Department of Earth & Environment Franklin & Marshall College Keck Geology Consortium Geology Department, Pomona College 185 E. 6th St., Claremont, CA 91711 (909) 607-0651, [email protected], keckgeology.org ISSN# 1528-7491 The Consortium Colleges The National Science Foundation ExxonMobil Corporation KECK GEOLOGY CONSORTIUM PROCEEDINGS OF THE TWENTY-FIFTH ANNUAL KECK RESEARCH SYMPOSIUM IN GEOLOGY ISSN# 1528-7491 April 2012 Robert J. Varga Keck Geology Consortium Diane Kadyk Editor and Keck Director Pomona College Proceedings Layout & Design Pomona College 185 E 6th St., Claremont, CA Franklin & Marshall College 91711 Keck Geology Consortium Member Institutions: Amherst College, Beloit College, Carleton College, Colgate University, The College of Wooster, The Colorado College, Franklin & Marshall College, Macalester College, Mt Holyoke College, Oberlin College, Pomona College, Smith College, Trinity University, Union College, Washington & Lee University, Wesleyan University, Whitman College, Williams College 2011-2012 PROJECTS TECTONIC EVOLUTION OF THE CHUGACH-PRINCE WILLIAM TERRANE, SOUTH-CENTRAL ALASKA Faculty: JOHN GARVER, Union College, Cameron Davidson, -

Fall 2020 Report on Assessment September 2020

Fall 2020 Report on Assessment September 2020 Full Report The report that follows represents a departure from the standard format of the Fall assessment report to the Board of Regents. Typically, the Assessment Committee uses the Fall report to communicate results from institution-wide assessment surveys or instruments administered to students during the prior academic year. These instruments follow a three-year rotation. For 2019-20, the scheduled instrument was the HEDS (Higher Education Data Sharing) Consortium Research Practices Survey (RPS). However, in consulting with library staff, who were originally involved in the creation of the HEDS RPS and are the primary users of the data, we made the decision to discontinue this survey instrument. The consensus was that the survey is somewhat outdated and no longer directly relevant for understanding students’ informational literacy skills. There is interest among college librarians in identifying a replacement instrument that will be consistent with the newly developed ILOs specifically tailored to the library's engagement with first-year courses. However, the excess demands placed on library staff due to COVID-19 and the resulting shift away from regular in-person teaching has delayed these conversations. Although there were not any scheduled institutional instruments administered last year, the Assessment Committee presents this report to update the Board on the wealth of other assessment-related activities that took place in 2019-20 and the many changes to assessment occurring at St. Olaf in the coming year. 
HEDS COVID-19 Institutional Response Student Survey In response to the COVID-19 pandemic and the abrupt shift to remote teaching made by many institutions, the Higher Education Data Sharing (HEDS) Consortium developed a COVID-19 Institutional Response Student Survey that institutions could use to gain feedback from students on the institution’s response to the pandemic, students’ worries and concerns, and their experiences with remote learning. -

St. Olaf College

National Institute for Learning Outcomes Assessment September 2020 Assessment in Motion: Steps Toward a More Integrated Model Susan Canon, Kelsey Thompson, and Mary Walczak Olaf College St. Foreword By Pat Hutchings As part of an ongoing effort to track and explore developments in student learning outcomes assessment, the National Institute for Learning Outcomes Assessment (NILOA) has published a number of institutional case studies which are housed on the website. We are now revisiting and updating some of those earlier examples in order to understand how campus assessment practices evolve over time—through lessons learned from local experience but also as a result of changes in institutional priorities, the launch of new initiatives, leadership transitions, and trends in the larger assessment movement. This report on St. Olaf College is an update of theoriginal 2012 case study by Natasha Jankowski. Founded in 1874 by Norwegian Lutheran immigrants, St. Olaf College is a nationally ranked residential liberal arts college of the Evangelical Lutheran Church in America (ELCA) located in Northfield, Minnesota. St. Olaf challenges students to excel in the liberal arts, examine faith and values, and explore meaningful vocation in an inclusive, globally engaged community nourished by Lutheran tradition. St. Olaf has roughly 3,000 students, offers 49 majors and 20 concentrations (minors), and has a robust study-abroad program, with more than two-thirds of students studying abroad before graduating. St. Olaf has a long history with assessment, having participated in many different assessment initiatives over the years including a Teagle-funded project with Carleton College and Macalester College focused on using assessment findings to improve specific learning outcomes, and eth Associated Colleges of the Midwest-Teagle Collegium on Student Learning exploring how students learn and acquire the knowledge and skills of a liberal education. -

UNDERGRADUATE RESEARCH OPPORTUNITIES Keck Geology Consortium SUMMER 2011 Dept

Robert J. Varga, Director UNDERGRADUATE RESEARCH OPPORTUNITIES Keck Geology Consortium SUMMER 2011 Dept. of Geology Pomona College 185 E. Sixth St. Pending funding from the National Science Foundation Research Experiences for Claremont, CA 91711 Undergraduate Programs (REU), the Keck Geology Consortium announces the following projects for SUMMER 2011 and encourages students to review the projects on our Blenda Long Administrative Assistant website: keckgeology.org. All project dates are tentative (please check website for updates). Application instructions are on the website Office 909 – 607-0651 Fax 909 – 621-8552 TH [email protected] APPLICATION DEADLINE: FEBRUARY 4 , 2011 South-Central Alaska - Tectonic evolution of the Chugach-Prince William terrane, south-central Amherst College Alaska. Faculty: John Garver (Union College), Cam Davidson (Carleton College): June 15-July Beloit College 12 (tentative) Carleton College Ascraeus Mons, Mars - Origins of Sinuous and Braided Channels on Ascraeus Mons, Mars: Andrew de Wet (Franklin & Marshall College), Jake Bleacher (NASA-GSFC), Brent Garry, Colgate University Smithsonian: July 6-August 3 (tentative) The College of Wooster Peruvian Andes - Tropical Holocene Climatic Insights From Records of Variability in Andean The Colorado College Paleoglaciers: Donald Rodbell (Union College), Nathan Stansell, Byrd Polar Research Center: 15 June-15 July (tentative) Franklin & Marshall College Tetons-Absaroka Ranges, Wyoming - Eocene Tectonic Evolution of the Teton-Absaroka Mt Holyoke College Ranges, Wyoming: John Craddock (Macalester College), Dave Malone (Illinois State University): July 15-August 14 (tentative) Macalester College Front Range, Colorado - Interdisciplinary Studies in the Critical Zone, Boulder Creek Oberlin College catchment, Front Range, Colorado: David Dethier (Williams College): July 13-August 10 Pomona College (tentative) Smith College U.S.