Matrix Inverses Consider the Ordinary Algebraic Equation and Its Solution

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Linear Algebra and Matrix Theory

Chapter 1 - Linear Systems, Matrices and Determinants

This is a very brief outline of some basic definitions and theorems of linear algebra. We will assume that you know elementary facts such as how to add two matrices, how to multiply a matrix by a number, how to multiply two matrices, what an identity matrix is, and what a solution of a linear system of equations is. Hardly any of the theorems will be proved. More complete treatments may be found in the following references.

1. References

(1) S. Friedberg, A. Insel and L. Spence, Linear Algebra, Prentice-Hall.
(2) M. Golubitsky and M. Dellnitz, Linear Algebra and Differential Equations Using Matlab, Brooks-Cole.
(3) K. Hoffman and R. Kunze, Linear Algebra, Prentice-Hall.
(4) P. Lancaster and M. Tismenetsky, The Theory of Matrices, Academic Press.

2. Linear Systems of Equations and Gaussian Elimination

The solutions, if any, of a linear system of equations

$$
\begin{aligned}
a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= b_1 \\
a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= b_2 \\
&\;\;\vdots \\
a_{m1}x_1 + a_{m2}x_2 + \cdots + a_{mn}x_n &= b_m
\end{aligned}
\tag{2.1}
$$

may be found by Gaussian elimination. The permitted steps are as follows.

(1) Both sides of any equation may be multiplied by the same nonzero constant.
(2) Any two equations may be interchanged.
(3) Any multiple of one equation may be added to another equation.

Instead of working with the symbols for the variables (the $x_i$), it is easier to place the coefficients (the $a_{ij}$) and the forcing terms (the $b_i$) in a rectangular array called the augmented matrix of the system. …
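The three permitted steps can be sketched in a few lines of Python acting on the augmented matrix. This is only an illustration, not code from the notes: the function name `solve_by_elimination` and the 2 × 2 example system are hypothetical, and the sketch assumes a square system (as many equations as unknowns) and uses exact `Fraction` arithmetic to avoid rounding.

```python
from fractions import Fraction


def solve_by_elimination(aug):
    """Solve a square system given its augmented matrix [A | b].

    Returns the solution as a list of Fractions, or None when the
    system has no unique solution. (Hypothetical helper, for illustration.)
    """
    rows = [[Fraction(v) for v in row] for row in aug]
    n = len(rows)
    for col in range(n):
        # Step (2): interchange equations so the pivot entry is nonzero.
        pivot = next((r for r in range(col, n) if rows[r][col] != 0), None)
        if pivot is None:
            return None
        rows[col], rows[pivot] = rows[pivot], rows[col]
        # Step (1): multiply the pivot equation by a nonzero constant.
        scale = 1 / rows[col][col]
        rows[col] = [scale * v for v in rows[col]]
        # Step (3): add a multiple of the pivot equation to each other equation.
        for r in range(n):
            if r != col and rows[r][col] != 0:
                factor = -rows[r][col]
                rows[r] = [v + factor * p for v, p in zip(rows[r], rows[col])]
    # The coefficient block is now the identity; the last column is the solution.
    return [row[-1] for row in rows]


# Hypothetical example: x1 + 2*x2 = 5 and 3*x1 + 4*x2 = 6.
solution = solve_by_elimination([[1, 2, 5], [3, 4, 6]])
print(solution)
```

Each row of the list plays the role of one equation, so the variables never need to be written down, which is the point of the augmented matrix.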

Using Row Reduction to Calculate the Inverse and the Determinant of a Square Matrix

Notes for MATH 0290 Honors by Prof. Anna Vainchtein

1 Inverse of a square matrix

An $n \times n$ square matrix $A$ is called invertible if there exists a matrix $X$ such that $AX = XA = I$, where $I$ is the $n \times n$ identity matrix. If such a matrix $X$ exists, one can show that it is unique. We call it the inverse of $A$ and denote it by $A^{-1} = X$, so that $AA^{-1} = A^{-1}A = I$ holds if $A^{-1}$ exists, i.e. if $A$ is invertible. Not all matrices are invertible. If $A^{-1}$ does not exist, the matrix $A$ is called singular or noninvertible.

Note that if $A$ is invertible, then the linear algebraic system $Ax = b$ has a unique solution $x = A^{-1}b$. Indeed, multiplying both sides of $Ax = b$ on the left by $A^{-1}$, we obtain $A^{-1}Ax = A^{-1}b$. But $A^{-1}A = I$ and $Ix = x$, so $x = A^{-1}b$. The converse is also true, so for a square matrix $A$, $Ax = b$ has a unique solution if and only if $A$ is invertible.

2 Calculating the inverse

To compute $A^{-1}$ if it exists, we need to find a matrix $X$ such that

$$AX = I \tag{1}$$

Linear algebra tells us that if such $X$ exists, then $XA = I$ holds as well, and so $X = A^{-1}$.

Now observe that solving (1) is equivalent to solving the following linear systems: $Ax_1 = e_1$, $Ax_2 = e_2$, ..., $Ax_n = e_n$, where $x_j$, $j = 1,$ …
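One way to picture solving all $n$ systems $Ax_j = e_j$ at once is to row-reduce the block matrix $[A \mid I]$. The following Python sketch does this under stated assumptions: the function name `inverse` and the 2 × 2 example matrix are hypothetical, and exact `Fraction` arithmetic stands in for whatever arithmetic the notes intend.

```python
from fractions import Fraction


def inverse(matrix):
    """Return the inverse of a square matrix, or None if it is singular.

    Hypothetical helper: row-reduces [A | I] so that all n systems
    A x_j = e_j are solved simultaneously.
    """
    n = len(matrix)
    # Column n + j of the augmented matrix holds the right-hand side e_j.
    aug = [
        [Fraction(v) for v in row] + [Fraction(int(i == j)) for j in range(n)]
        for i, row in enumerate(matrix)
    ]
    for col in range(n):
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            return None  # no pivot in this column, so A is singular
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * p for v, p in zip(aug[r], aug[col])]
    # The left block is now I; the right block has x_1, ..., x_n as its columns.
    return [row[n:] for row in aug]


A = [[2, 1], [5, 3]]  # hypothetical example matrix
X = inverse(A)
print(X)
```

Returning `None` for a singular input mirrors the statement that not all matrices are invertible.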

Linear Algebra Review

Kaiyu Zheng, October 2017

Linear algebra is fundamental for many areas in computer science. This document aims at providing a reference (mostly for myself) when I need to remember some concepts or examples. Instead of a collection of facts like the Matrix Cookbook, this document is gentler, more like a tutorial. Most of the content comes from my notes while taking the undergraduate linear algebra course (Math 308) at the University of Washington. Content on more advanced topics is collected from reading different sources on the Internet.

Contents

1 Linear System of Equations
2 Vectors
2.1 Linear independence
2.2 Linear dependence
2.3 Linear transformation
3 Matrix Algebra
3.1 Addition
3.2 Scalar Multiplication
3.3 Matrix Multiplication
3.4 Transpose
3.4.1 Conjugate …
3.8 Exponential and Logarithm
3.9 Conversion Between Matrix Notation and Summation
4 Vector Spaces
4.1 Determinant
4.2 Kernel
4.3 Basis
4.4 Change of Basis
4.5 Dimension, Row & Column Space, and Rank
5 Eigen
5.1 Multiplicity of …
7 Special Matrices
7.1 Block Matrix
7.2 Orthogonal
7.3 Diagonal
7.4 Diagonalizable
7.5 Symmetric
7.6 Positive-Definite
7.7 Singular Value Decomposition
7.8 Similar
7.9 Jordan Normal Form
7.10 Hermitian
7.11 Discrete Fourier Transform
8 Matrix Calculus
8.1 Differentiation
8.2 Jacobian …

AMPS: An Augmented Matrix Formulation for Principal Submatrix Updates with Application to Power Grids

SIAM J. Sci. Comput., Vol. 39, No. 5, pp. S809-S827. © 2017 Society for Industrial and Applied Mathematics.

Yu-Hong Yeung, Alex Pothen, Mahantesh Halappanavar, and Zhenyu Huang

Abstract. We present AMPS, an augmented matrix approach to update the solution to a linear system of equations when the matrix is modified by a few elements within a principal submatrix. This problem arises in the dynamic security analysis of a power grid, where operators need to perform $N - k$ contingency analysis, i.e., determine the state of the system when exactly $k$ links from $N$ fail. Our algorithms augment the matrix to account for the changes in it, and then compute the solution to the augmented system without refactoring the modified matrix. We provide two algorithms, a direct method and a hybrid direct-iterative method, for solving the augmented system. We also exploit the sparsity of the matrices and vectors to accelerate the overall computation. We analyze the time complexity of both algorithms and show that it is bounded by the number of nonzeros in a subset of the columns of the Cholesky factor that are selected by the nonzeros in the sparse right-hand-side vector. Our algorithms are compared on three power grids with PARDISO, a parallel direct solver, and CHOLMOD, a direct solver with the ability to modify the Cholesky factors of the matrix. We show that our augmented algorithms outperform PARDISO (by two orders of magnitude) and CHOLMOD (by a factor of up to 5).

TP Matrices and TP Completability

W&M ScholarWorks, Undergraduate Honors Theses (Theses, Dissertations, & Master Projects), 5-2018

Recommended Citation: Wang, Duo, "TP Matrices and TP Completability" (2018). Undergraduate Honors Theses. Paper 1159. https://scholarworks.wm.edu/honorstheses/1159

A thesis submitted in partial fulfillment of the requirement for the degree of Bachelor of Science in Mathematics from The College of William and Mary by Duo Wang. Committee Members: Charles Johnson, Junping Shi, Mark Greer. Williamsburg, VA, April 10, 2018.

Abstract. A matrix is called totally nonnegative (TN) if the determinant of every square submatrix is nonnegative and totally positive (TP) if the determinant of every square submatrix is positive. The TP (TN) completion problem asks which partial matrices have a TP (TN) completion. In this paper, several new TP-completable patterns in 3-by-n matrices are identified. The relationship between expansion and completability is developed based on prior results about a single unspecified entry. These results extend our understanding of TP-completable patterns. A new Ratio Theorem related to TP-completability is introduced in this paper, and it may be a helpful tool in TP-completion problems.
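The TN and TP definitions in the abstract can be checked directly on small matrices by enumerating every square submatrix. The sketch below is a brute-force illustration only, not a method from the thesis: the function names and the example matrices are hypothetical, and the cost grows very quickly with matrix size.

```python
from itertools import combinations


def det(m):
    """Determinant by cofactor expansion along the first row (small matrices only)."""
    if len(m) == 1:
        return m[0][0]
    total = 0
    for j, entry in enumerate(m[0]):
        minor = [row[:j] + row[j + 1:] for row in m[1:]]
        total += (-1) ** j * entry * det(minor)
    return total


def minors(matrix):
    """Yield the determinant of every square submatrix of the given matrix."""
    n_rows, n_cols = len(matrix), len(matrix[0])
    for k in range(1, min(n_rows, n_cols) + 1):
        for rows in combinations(range(n_rows), k):
            for cols in combinations(range(n_cols), k):
                yield det([[matrix[i][j] for j in cols] for i in rows])


def is_totally_positive(matrix):
    return all(d > 0 for d in minors(matrix))


def is_totally_nonnegative(matrix):
    return all(d >= 0 for d in minors(matrix))


pascal = [[1, 1, 1], [1, 2, 3], [1, 3, 6]]  # hypothetical example
ones = [[1, 1], [1, 1]]                     # hypothetical example
print(is_totally_positive(pascal), is_totally_nonnegative(ones), is_totally_positive(ones))
```

The all-ones matrix shows the gap between the two notions: its 2 × 2 determinant is zero, so it is TN but not TP.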

Matrices and Linear Algebra

Chapter 2 Matrices and Linear Algebra

2.1 Basics

Definition 2.1.1. A matrix is an $m \times n$ array of scalars from a given field $F$. The individual values in the matrix are called entries.

Examples. [two example matrices $A$ and $B$; entries not recoverable]

The size of the array is written as $m \times n$, where $m$ is the number of rows and $n$ is the number of columns.

Notation

$$
A = \begin{bmatrix}
a_{11} & a_{12} & \cdots & a_{1n} \\
a_{21} & a_{22} & \cdots & a_{2n} \\
\vdots & \vdots & & \vdots \\
a_{m1} & a_{m2} & \cdots & a_{mn}
\end{bmatrix}
$$

$A$ := uppercase denotes a matrix
$a$ := lower case denotes an entry of a matrix, $a \in F$.

Special matrices

(1) If $m = n$, the matrix is called square. In this case we have
(1a) A matrix $A$ is said to be diagonal if $a_{ij} = 0$ for $i \neq j$.
(1b) A diagonal matrix $A$ may be denoted by $\operatorname{diag}(d_1, d_2, \ldots, d_n)$, where $a_{ii} = d_i$ and $a_{ij} = 0$ for $j \neq i$. The diagonal matrix $\operatorname{diag}(1, 1, \ldots, 1)$ is called the identity matrix and is usually denoted by
$$
I_n = \begin{bmatrix}
1 & 0 & \cdots & 0 \\
0 & 1 & & \vdots \\
\vdots & & \ddots & 0 \\
0 & \cdots & 0 & 1
\end{bmatrix}
$$
or simply $I$, when $n$ is assumed to be known. $0 = \operatorname{diag}(0, \ldots, 0)$ is called the zero matrix.
(1c) A square matrix $L$ is said to be lower triangular if $\ell_{ij} = 0$ for $i < j$.
(1d) A square matrix $U$ is said to be upper triangular if $u_{ij} = 0$ for $i > j$.
(1e) A square matrix $A$ is called symmetric if $a_{ij} = a_{ji}$.
(1f) A square matrix $A$ is called Hermitian if $a_{ij} = \bar{a}_{ji}$ ($\bar{z}$ := complex conjugate of $z$).
(1g) $E_{ij}$ has a 1 in the $(i, j)$ position and zeros in all other positions.
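The special-matrix definitions (1a) through (1f) translate almost word for word into predicates on a list-of-lists matrix. The following is a minimal sketch with hypothetical function names; indices are 0-based as usual in Python, which does not affect conditions such as $i < j$.

```python
def is_square(m):
    return all(len(row) == len(m) for row in m)


def _all_pairs(m, keep, condition):
    """True if condition(i, j) holds for every index pair selected by keep(i, j)."""
    n = len(m)
    return all(condition(i, j) for i in range(n) for j in range(n) if keep(i, j))


def is_diagonal(m):
    # (1a): a_ij = 0 whenever i != j
    return is_square(m) and _all_pairs(m, lambda i, j: i != j, lambda i, j: m[i][j] == 0)


def is_lower_triangular(m):
    # (1c): l_ij = 0 whenever i < j
    return is_square(m) and _all_pairs(m, lambda i, j: i < j, lambda i, j: m[i][j] == 0)


def is_upper_triangular(m):
    # (1d): u_ij = 0 whenever i > j
    return is_square(m) and _all_pairs(m, lambda i, j: i > j, lambda i, j: m[i][j] == 0)


def is_symmetric(m):
    # (1e): a_ij = a_ji
    return is_square(m) and _all_pairs(m, lambda i, j: True, lambda i, j: m[i][j] == m[j][i])


def is_hermitian(m):
    # (1f): a_ij equals the complex conjugate of a_ji
    return is_square(m) and _all_pairs(
        m, lambda i, j: True, lambda i, j: m[i][j] == complex(m[j][i]).conjugate()
    )


print(is_diagonal([[1, 0], [0, 2]]), is_hermitian([[2, 1 + 1j], [1 - 1j, 3]]))
```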

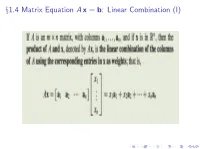

§1.4 Matrix Equation Ax = b: Linear Combination

§1.4 Matrix Equation $Ax = b$: Linear Combination (I)

Matrix-vector Product $\iff$ Linear Combination (II)

Example:

$$
A = \begin{bmatrix} 1 & 2 & -1 \\ 0 & -5 & 3 \end{bmatrix}, \qquad
x = \begin{bmatrix} 4 \\ 3 \\ 7 \end{bmatrix}
$$

Linear Equations in terms of Matrix-vector Product

Linear Equations in terms of Linear Combinations

Existence of Solutions

Example: Let

$$
A = \begin{bmatrix} 1 & 3 & 4 \\ -4 & 2 & -6 \\ -3 & -2 & -7 \end{bmatrix}
\quad \text{and} \quad
b = \begin{bmatrix} b_1 \\ b_2 \\ b_3 \end{bmatrix}.
$$

Question: For what values of $b_1, b_2, b_3$ is the equation $Ax = b$ consistent?

Row-reduce the augmented matrix. Applying $(\ell_2) + 4 \times (\ell_1) \to (\ell_2)$ and $(\ell_3) + 3 \times (\ell_1) \to (\ell_3)$:

$$
\left[\begin{array}{ccc|c}
1 & 3 & 4 & b_1 \\
-4 & 2 & -6 & b_2 \\
-3 & -2 & -7 & b_3
\end{array}\right]
\implies
\left[\begin{array}{ccc|c}
1 & 3 & 4 & b_1 \\
0 & 14 & 10 & b_2 + 4b_1 \\
0 & 7 & 5 & b_3 + 3b_1
\end{array}\right]
$$

Applying $(\ell_3) - (1/2) \times (\ell_2) \to (\ell_3)$:

$$
\implies
\left[\begin{array}{ccc|c}
1 & 3 & 4 & b_1 \\
0 & 14 & 10 & b_2 + 4b_1 \\
0 & 0 & 0 & b_3 + 3b_1 - \tfrac{1}{2}(b_2 + 4b_1)
\end{array}\right]
$$

Answer: the equation $Ax = b$ is consistent $\iff$ $b_3 - \tfrac{1}{2} b_2 + b_1 = 0$.
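The consistency condition $b_3 - \tfrac{1}{2} b_2 + b_1 = 0$ can be cross-checked numerically by row-reducing $[A \mid b]$ for concrete right-hand sides. This is a minimal sketch with hypothetical helper names (`is_consistent`, `condition`); only the matrix $A$ and the final condition come from the example.

```python
from fractions import Fraction

A = [[1, 3, 4], [-4, 2, -6], [-3, -2, -7]]  # matrix from the example


def is_consistent(A, b):
    """Row-reduce [A | b]; the system is inconsistent iff some row reads 0 = nonzero."""
    rows = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(A, b)]
    pivot_row = 0
    for col in range(len(A[0])):
        pivot = next((r for r in range(pivot_row, len(rows)) if rows[r][col] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        rows[pivot_row], rows[pivot] = rows[pivot], rows[pivot_row]
        for r in range(pivot_row + 1, len(rows)):
            factor = rows[r][col] / rows[pivot_row][col]
            rows[r] = [v - factor * p for v, p in zip(rows[r], rows[pivot_row])]
        pivot_row += 1
    return all(any(v != 0 for v in row[:-1]) or row[-1] == 0 for row in rows)


def condition(b):
    """The closed-form answer from the example: b3 - (1/2) b2 + b1 = 0."""
    b1, b2, b3 = b
    return b3 - Fraction(b2, 2) + b1 == 0


print(is_consistent(A, (1, 2, 0)), is_consistent(A, (1, 0, 0)))
```

For $b = (1, 2, 0)$ the condition gives $0 - 1 + 1 = 0$, so the system is consistent; for $b = (1, 0, 0)$ it gives $1 \neq 0$, so it is not.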

Systems of Linear Equations

0.1 Definitions

Recall that if $A \in \mathbb{R}^{m \times n}$ and $B \in \mathbb{R}^{m \times p}$, then the augmented matrix $[A \mid B] \in \mathbb{R}^{m \times (n+p)}$ is the matrix $[A\;B]$, that is, the matrix whose first $n$ columns are the columns of $A$, and whose last $p$ columns are the columns of $B$. Typically we consider $B = b \in \mathbb{R}^{m \times 1} \cong \mathbb{R}^m$, a column vector.

We also recall that a matrix $A \in \mathbb{R}^{m \times n}$ is said to be in reduced row echelon form if, counting from the topmost row to the bottom-most,

1. any row containing a nonzero entry precedes any row in which all the entries are zero (if any)
2. the first nonzero entry in each row is the only nonzero entry in its column
3. the first nonzero entry in each row is 1 and it occurs in a column to the right of the first nonzero entry in the preceding row

Example 0.1 The following matrices are not in reduced echelon form because they all fail some part of 3 (the first one also fails 2): [three example matrices; entries not recoverable]

A matrix that is in reduced row echelon form is:

$$
\begin{pmatrix}
1 & 0 & 1 & 0 \\
0 & 1 & 0 & 0 \\
0 & 0 & 0 & 1
\end{pmatrix}
$$

A system of $m$ linear equations in $n$ unknowns is a set of $m$ equations, numbered from 1 to $m$ going down, each in $n$ variables $x_i$ which are multiplied by coefficients $a_{ij} \in F$, whose sum equals some $b_j \in \mathbb{R}$:

$$
(S) \quad
\begin{cases}
a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n = b_1 \\
a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n = b_2 \\
\qquad \vdots
\end{cases}
$$

…
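The three conditions for reduced row echelon form can be tested mechanically. The sketch below uses a hypothetical function name `is_rref` and checks the conditions row by row; the first test matrix is the reduced row echelon example above, while the others are hypothetical counterexamples.

```python
def is_rref(matrix):
    """Check the three reduced-row-echelon conditions, top row to bottom row."""
    previous_lead = -1
    seen_zero_row = False
    for i, row in enumerate(matrix):
        # Column index of the first nonzero entry, or None for a zero row.
        lead = next((j for j, v in enumerate(row) if v != 0), None)
        if lead is None:
            seen_zero_row = True
            continue
        # Condition 1: every nonzero row precedes every zero row.
        if seen_zero_row:
            return False
        # Condition 3: the leading entry is 1 and lies to the right of the previous one.
        if row[lead] != 1 or lead <= previous_lead:
            return False
        # Condition 2: the leading entry is the only nonzero entry in its column.
        if any(other[lead] != 0 for k, other in enumerate(matrix) if k != i):
            return False
        previous_lead = lead
    return True


example = [[1, 0, 1, 0], [0, 1, 0, 0], [0, 0, 0, 1]]
print(is_rref(example))
```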

Math 240: Linear Systems and Rank of a Matrix

Ryan Blair, University of Pennsylvania, Thursday January 20, 2011

Outline

1 Review of Last Time
2 Linear Independence

Review of last time

1 Transpose of a matrix
2 Special types of matrices
3 Matrix properties
4 Row-echelon and reduced row echelon form
5 Solving linear systems using Gaussian and Gauss-Jordan elimination

Echelon Forms

Definition. A matrix is in row-echelon form if

1 Any row consisting of all zeros is at the bottom of the matrix.
2 For all non-zero rows the leading entry must be a one. This is called the pivot.
3 In consecutive rows the pivot in the lower row appears to the right of the pivot in the higher row.

Definition. A matrix is in reduced row-echelon form if it is in row-echelon form and every pivot is the only non-zero entry in its column.

Row Operations

We will be applying row operations to augmented matrices to find solutions to linear equations. This is called Gaussian or Gauss-Jordan elimination.

Augmented Matrices

8.2 Systems of Linear Equations: Augmented Matrices

In Section 8.1 we introduced Gaussian Elimination as a means of transforming a system of linear equations into triangular form with the ultimate goal of producing an equivalent system of linear equations which is easier to solve. If we take a step back and study the process, we see that all of our moves are determined entirely by the coefficients of the variables involved, and not the variables themselves. Much the same thing happened when we studied long division in Section 3.2. Just as we developed synthetic division to streamline that process, in this section, we introduce a similar bookkeeping device to help us solve systems of linear equations. To that end, we define a matrix as a rectangular array of real numbers. We typically enclose matrices with square brackets, '[' and ']', and we size matrices by the number of rows and columns they have. For example, the size (sometimes called the dimension) of

$$
\begin{bmatrix} 3 & 0 & -1 \\ 2 & -5 & 10 \end{bmatrix}
$$

is $2 \times 3$ because it has 2 rows and 3 columns. The individual numbers in a matrix are called its entries and are usually labeled with double subscripts: the first tells which row the element is in and the second tells which column it is in. The rows are numbered from top to bottom and the columns are numbered from left to right. Matrices themselves are usually denoted by uppercase letters ($A$, $B$, $C$, etc.) while their entries are usually denoted by the corresponding letter.

Matrices, Block Structures and Gaussian Elimination

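Numerical linear algebra lies at the heart of modern scientific computing and computational science. Today it is not uncommon to perform numerical computations with matrices having millions of components. The key to understanding how to implement such algorithms is to exploit underlying structure within the matrices. In these notes we touch on a few ideas and tools for dissecting matrix structure. Specifically, we are concerned with the block structure of matrices.

1. Rows and Columns

Let $A \in \mathbb{R}^{m \times n}$ so that $A$ has $m$ rows and $n$ columns. Denote the element of $A$ in the $i$th row and $j$th column as $A_{ij}$. Denote the $m$ rows of $A$ by $A_{1\cdot}, A_{2\cdot}, A_{3\cdot}, \ldots, A_{m\cdot}$ and the $n$ columns of $A$ by $A_{\cdot 1}, A_{\cdot 2}, A_{\cdot 3}, \ldots, A_{\cdot n}$. For example, if

$$
A = \begin{bmatrix}
3 & 2 & -1 & 5 & 7 & 3 \\
-2 & 27 & 32 & -100 & 0 & 0 \\
-89 & 0 & 47 & 22 & -21 & 33
\end{bmatrix},
$$

then $A_{2,4} = -100$,

$$
A_{1\cdot} = \begin{bmatrix} 3 & 2 & -1 & 5 & 7 & 3 \end{bmatrix}, \quad
A_{2\cdot} = \begin{bmatrix} -2 & 27 & 32 & -100 & 0 & 0 \end{bmatrix}, \quad
A_{3\cdot} = \begin{bmatrix} -89 & 0 & 47 & 22 & -21 & 33 \end{bmatrix}
$$

and

$$
A_{\cdot 1} = \begin{bmatrix} 3 \\ -2 \\ -89 \end{bmatrix}, \;
A_{\cdot 2} = \begin{bmatrix} 2 \\ 27 \\ 0 \end{bmatrix}, \;
A_{\cdot 3} = \begin{bmatrix} -1 \\ 32 \\ 47 \end{bmatrix}, \;
A_{\cdot 4} = \begin{bmatrix} 5 \\ -100 \\ 22 \end{bmatrix}, \;
A_{\cdot 5} = \begin{bmatrix} 7 \\ 0 \\ -21 \end{bmatrix}, \;
A_{\cdot 6} = \begin{bmatrix} 3 \\ 0 \\ 33 \end{bmatrix}.
$$

Exercise 1.1. If

$$
C = \begin{bmatrix}
3 & -4 & 1 & 1 & 0 & 0 \\
2 & 2 & 0 & 0 & 1 & 0 \\
-1 & 0 & 0 & 0 & 0 & 1 \\
0 & 0 & 0 & 2 & 1 & 4 \\
0 & 0 & 0 & 1 & 0 & 3
\end{bmatrix},
$$

what are $C_{4,4}$, $C_{\cdot 4}$ and $C_{4\cdot}$? For example,

$$
C_{2\cdot} = \begin{bmatrix} 2 & 2 & 0 & 0 & 1 & 0 \end{bmatrix}
\quad \text{and} \quad
C_{\cdot 2} = \begin{bmatrix} -4 \\ 2 \\ 0 \\ 0 \\ 0 \end{bmatrix}.
$$

The block structuring of a matrix into its rows and columns is of fundamental importance and is extremely useful in understanding the properties of a matrix.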
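The row and column notation can be mirrored in code with three small accessors. This is an illustrative sketch: the helper names `entry`, `row` and `column` are hypothetical, and they take 1-based indices to match the notation in the notes even though Python lists are 0-based.

```python
A = [
    [3, 2, -1, 5, 7, 3],
    [-2, 27, 32, -100, 0, 0],
    [-89, 0, 47, 22, -21, 33],
]


def entry(m, i, j):
    """Element in row i, column j (1-based, as in the notes)."""
    return m[i - 1][j - 1]


def row(m, i):
    """The i-th row as a list (1-based)."""
    return list(m[i - 1])


def column(m, j):
    """The j-th column as a list (1-based)."""
    return [r[j - 1] for r in m]


print(entry(A, 2, 4))
print(row(A, 2))
print(column(A, 4))
```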

Handbook of Linear Algebra

62 Fast Algorithms for Structured Matrix Computations

Michael Stewart, Georgia State University

62.1 Classes of Structured Matrices
62.2 Transformations of Structured Matrices
62.3 Generalized Schur Algorithms
62.4 Schur Algorithms for Positive Definite Matrices
62.5 Fast Algorithms for Cauchy-like Systems
62.6 Fast Algorithms for Toeplitz-like Systems
62.7 Fast Algorithms for Vandermonde Systems
62.8 Superfast Algorithms
References

A structured matrix is an $m \times n$ matrix with elements that can be determined from substantially fewer than $mn$ parameters. This is a broad definition that includes sparse matrices, matrices with displacement structure, matrices with rank structure, and numerous types of matrices with specialized structure. Many common matrix operations can be performed using fast algorithms that exploit matrix structure to achieve asymptotic speed-up relative to conventional algorithms. For matrices with either displacement structure or rank structure, there are fast algorithms for the solution of linear systems, inversion, QR factorization, and matrix-vector multiplication. Fast algorithms typically achieve a factor of $n$ speed-up over their conventional counterparts. Algorithms that do better than this are sometimes referred to as superfast. This chapter focuses on methods for dense matrices with structure. The emphasis is on direct methods, with some limited coverage of the use of fast matrix-vector multiplication in iterative methods and the choice of preconditioners for structured systems. Displacement structure is used as a unifying context for the presentation of many of the fast algorithms.