Integrated Solutions for Embedded Dolby E and AC-3
Romolo Magarelli and David Strachan, Evertz Microsystems Ltd

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Avid Supported Video File Formats

Avid Supported Video File Formats, 04.07.2021. Table of Contents: Common Industry Formats; Application & Device-Generated Formats; Stereoscopic 3D Video Formats; Quick Lookup of Common File Formats: ARRI -

Video Codec Requirements and Evaluation Methodology

Video Codec Requirements and Evaluation Methodology, draft-filippov-netvc-requirements-01, Alexey Filippov, Huawei Technologies (www.huawei.com)
Contents: • An overview of applications • Requirements • Evaluation methodology • Conclusions
Applications: • Internet Protocol Television (IPTV) • Video conferencing • Video sharing • Screencasting • Game streaming • Video monitoring / surveillance
Internet Protocol Television (IPTV): • Basic requirements: random access to pictures (the Random Access Period (RAP) should be kept small enough, approximately 1-15 seconds); temporal (frame-rate) scalability; error robustness • Optional requirements: resolution and quality (SNR) scalability
Internet Protocol Television (IPTV), listed as resolution: frame rate in fps, picture access mode
2160p (4K), 3840x2160: 60, RA
1080p, 1920x1080: 24, 50, 60, RA
1080i, 1920x1080: 30 (60 fields per second), RA
720p, 1280x720: 50, 60, RA
576p (EDTV), 720x576: 25, 50, RA
576i (SDTV), 720x576: 25, 30, RA
480p (EDTV), 720x480: 50, 60, RA
480i (SDTV), 720x480: 25, 30, RA
Video conferencing: • Basic requirements: delay should be kept as low as possible; the preferable and maximum delay values should be less than 100 ms and 350 ms, respectively -

Lecture 11 : Discrete Cosine Transform Moving Into the Frequency Domain

Lecture 11: Discrete Cosine Transform
Moving into the Frequency Domain: Frequency domains can be obtained through the transformation from one (time or spatial) domain to the other (frequency) via the Fourier Transform (FT) (see Lecture 3), used in MPEG Audio, or the Discrete Cosine Transform (DCT) (new), the heart of JPEG and MPEG Video and also used in MPEG Audio. Note: We mention some image (and video) examples in this section with the DCT in particular, but the FT is also commonly applied to filter multimedia data. External Link: MIT OCW 8.03 Lecture 11 Fourier Analysis Video.
Recap, Fourier Transform: The tool which converts a spatial (real space) description of audio/image data into one in terms of its frequency components is called the Fourier transform. The new version is usually referred to as the Fourier space description of the data. We then essentially process the data: e.g. for filtering, this basically means attenuating certain frequencies or setting them to zero. We then need to convert the data back to real audio/imagery to use in our applications. The corresponding inverse transformation, which turns a Fourier space description back into a real space one, is called the inverse Fourier transform.
What do Frequencies Mean in an Image? Large values at high frequency components mean the data is changing rapidly on a short distance scale, e.g. a page of small font text, a brick wall, vegetation. Large low frequency components mean the large scale features of the picture are more important, e.g. a single fairly simple object which occupies most of the image.
The Road to Compression: How do we achieve compression? Low pass filter: ignore high frequency noise components and only store lower frequency components. High pass filter: spot gradual changes; if changes are too low/slow, the eye does not respond, so ignore?
Low Pass Image Compression Example: MATLAB demo, dctdemo.m (uses DCT), to load an image; low pass filter in frequency (DCT) space; tune compression via a single slider value n to select coefficients; apply the inverse DCT, then subtract the input and filtered images to see compression artefacts. -
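The low pass steps listed in this excerpt can be sketched in a few lines. This is only an illustration under stated assumptions, not the dctdemo.m code: it uses Python with NumPy instead of MATLAB, builds an orthonormal DCT-II matrix by hand, and assumes that "selecting coefficients" means keeping the top-left keep x keep block of the coefficient array. The names dct_matrix, lowpass_dct and keep are hypothetical.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II basis as an n x n matrix (rows are basis vectors)."""
    k = np.arange(n).reshape(-1, 1)   # frequency index
    i = np.arange(n).reshape(1, -1)   # sample index
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)        # DC row uses a different scale factor
    return c

def lowpass_dct(image, keep):
    """Keep only the keep x keep lowest-frequency DCT coefficients.

    Returns (filtered_image, difference), where difference = image - filtered
    plays the role of the compression-artefact picture in the excerpt.
    Assumption: 'keep' stands in for the slider value n.
    """
    rows, cols = image.shape
    cr, cc = dct_matrix(rows), dct_matrix(cols)
    coeffs = cr @ image @ cc.T              # forward 2-D DCT
    mask = np.zeros_like(coeffs)
    mask[:keep, :keep] = 1.0                # low pass: zero the high frequencies
    filtered = cr.T @ (coeffs * mask) @ cc  # inverse 2-D DCT
    return filtered, image - filtered

if __name__ == "__main__":
    img = np.arange(64, dtype=float).reshape(8, 8)  # stand-in for a loaded image
    filtered, diff = lowpass_dct(img, keep=3)
    print("max abs error with 3x3 coefficients:", np.abs(diff).max())
```

Keeping every coefficient reproduces the input exactly, while keeping only the single DC coefficient collapses the image to its mean value, which is a quick sanity check on the masking step.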

(A/V Codecs) REDCODE RAW (.R3D) ARRIRAW

What is a Codec? Codec is a portmanteau of either "Compressor-Decompressor" or "Coder-Decoder," which describes a device or program capable of performing transformations on a data stream or signal. Codecs encode a stream or signal for transmission, storage or encryption and decode it for viewing or editing. Codecs are often used in videoconferencing and streaming media solutions. A video codec converts analog video signals from a video camera into digital signals for transmission. It then converts the digital signals back to analog for display. An audio codec converts analog audio signals from a microphone into digital signals for transmission. It then converts the digital signals back to analog for playing. The raw encoded form of audio and video data is often called essence, to distinguish it from the metadata information that together make up the information content of the stream and any "wrapper" data that is then added to aid access to or improve the robustness of the stream. Most codecs are lossy, in order to get a reasonably small file size. There are lossless codecs as well, but for most purposes the almost imperceptible increase in quality is not worth the considerable increase in data size. The main exception is if the data will undergo more processing in the future, in which case the repeated lossy encoding would damage the eventual quality too much. Many multimedia data streams need to contain both audio and video data, and often some form of metadata that permits synchronization of the audio and video. Each of these three streams may be handled by different programs, processes, or hardware; but for the multimedia data stream to be useful in stored or transmitted form, they must be encapsulated together in a container format. -

VIDEO Blu-Ray™ Disc Player BP330

VIDEO Blu-ray™ Disc Player BP330
Internet access lets you stream instant content from Netflix, CinemaNow, Vudu and YouTube direct to your TV, no computer required. Make the most of your HDTV. Blu-ray disc playback delivers exceptional Full HD 1080p video performance, along with Bonus-view for a picture-in-picture. Less clutter. More possibilities. Cut loose from messy wires. Integrated Wi-Fi® connectivity allows you to take advantage of Internet access from any available Wi-Fi® connection in its range.
VIDEO Blu-ray™ Disc Player BP330 PROFILE & PLAYABLE DISC PLAYABLE AUDIO FORMATS BD Profile 2.0 LPCM Yes USB Playback Yes Dolby® Digital Yes External HDD Playback Yes (via USB) Dolby® Digital Plus Yes BD-ROM/BD-R/BD-RE Yes Dolby® TrueHD Yes DVD-ROM/DVD±R/DVD±RW Yes DTS Yes Audio CD/CD-R/CD-RW Yes DTS-HD Master Audio Yes DTS-CD Yes MPEG 1/2 L2 Yes MP3 Yes LG SMART TV WMA Yes Premium Content Yes AAC Yes Netflix® Yes FLAC Yes YouTube® Yes Amazon® Yes PLAYABLE PHOTO FORMATS Hulu Plus® Yes JPEG Yes Vudu® Yes GIF/Animated GIF Yes CinemaNow® Yes PNG Yes Pandora® Yes MPO Yes Picasa® Yes AccuWeather® Yes CONVENIENCE SIMPLINK™ Yes VIDEO FEATURES Loading Time >10 Sec 1080p Up-scaling Yes LG Remote App Yes (Free download on Google Play and Apple App Store) Noise Reduction Yes Last Scene Memory Yes Deep Color Yes Screen Saver Yes NvYCC Yes Auto Power Off Yes Video Enhancement Yes Parental Lock Yes Yes Yes CONNECTIVITY Wired LAN Yes AUDIO FEATURES Wi-Fi® Built-in Yes Dolby Digital® Down Mix Yes DLNA Certified® Yes Re-Encoder Yes (DTS only) LPCM Conversion -

Frequently Asked Questions Dolby Digital Plus

Frequently Asked Questions Dolby® Digital Plus redefines the home theater surround experience for new formats like high-definition video discs. What is Dolby Digital Plus? Dolby® Digital Plus is Dolby’s new-generation multichannel audio technology developed to enhance the premium experience of high-definition media. Built on industry-standard Dolby Digital technology, Dolby Digital Plus as implemented in Blu-ray Disc™ features more channels, less compression, and higher data rates for a warmer, richer, more compelling audio experience than you get from standard-definition DVDs. What other applications are there for Dolby Digital Plus? The advanced spectral coding efficiencies of Dolby Digital Plus enable content producers to deliver high-resolution multichannel soundtracks at lower bit rates than with Dolby Digital. This makes it ideal for emerging bandwidth-critical applications including cable, IPTV, IP streaming, and satellite (DBS) and terrestrial broadcast. Dolby Digital Plus is also a preferred medium for delivering BonusView (Profile 1.1) and BD-Live™ (Profile 2.0) interactive audio content on Blu-ray Disc. Delivering higher quality and more channels at higher bit rates, plus greater efficiency at lower bit rates, Dolby Digital Plus has the flexibility to fulfill the needs of new content delivery formats for years to come. Is Dolby Digital Plus content backward-compatible? Because Dolby Digital Plus is built on core Dolby Digital technologies, content that is encoded with Dolby Digital Plus is fully compatible with the millions of existing home theaters and playback systems worldwide equipped for Dolby Digital playback. Dolby Digital Plus soundtracks are easily converted to a 640 kbps Dolby Digital signal without decoding and reencoding, for output via S/PDIF. -

Analysis of Video Compression Using DCT

Imperial Journal of Interdisciplinary Research (IJIR) Vol-3, Issue-3, 2017 ISSN: 2454-1362, http://www.onlinejournal.in Analysis of Video Compression using DCT. Pranavi Patil1, Sanskruti Patil2 & Harshala Shelke3 & Prof. Anand Sankhe4. 1,2,3 Bachelor of Engineering in computer, Mumbai University; 4 Professor, Department of Computer Science & Engineering, Mumbai University, Maharashtra, India.
Abstract: Video is most useful media to represent the great information. Videos, images are the most essential approaches to represent data. Now this year, all the communications are done on such media. The central problem for the media is its large size. Also this large data contains a lot of redundant information. The huge usage of digital multimedia leads to inoperable growth of data flow through various mediums. Nowadays, to solve the problem of bandwidth requirement in communication, multimedia data is a big challenge. The main concept in the present paper is about the Discrete Cosine Transform (DCT) algorithm for compressing video's size.
Keywords: Compression, DCT, PSNR, MSE, Redundancy.
where in order to get the best possible compression efficiency, it considers certain level of distortion. ii. Lossless Compression: The lossless compression technique is generally focused on decreasing the compressed output video's bit rate without any alteration of the frame. The decompressed bit-stream matches the original bit-stream.
2. Video Compression: The main unit behind the video compression approach is the video encoder found at the transmitter side, which encodes the video that is to be transmitted in the form of bits, and also the video decoder positioned at the receiver side, which rebuilds the video in its original form based on the bit sequence -

Video Coding Standards

Module 8: Video Coding Standards. Lesson 23: MPEG-1 standards. Version 2 ECE IIT, Kharagpur.
Lesson objectives: At the end of this lesson, the students should be able to: 1. List the major video coding standards. 2. State the basic objectives of the MPEG-1 standard. 3. List the set of constrained parameters in MPEG-1. 4. Define the I-, P- and B-pictures. 5. Present the hierarchical data structure of MPEG-1. 6. Define the macroblock modes supported by MPEG-1.
23.0 Introduction: In lesson 21 and lesson 22, we studied how to perform motion estimation and thereby temporally predict the video frames to exploit significant temporal redundancies present in the video sequence. The error in temporal prediction is encoded by standard transform domain techniques like the DCT, followed by quantization and entropy coding to exploit the spatial and statistical redundancies and achieve significant video compression. The video codecs therefore follow a hybrid coding structure in which DPCM is adopted in the temporal domain and DCT or other transform domain techniques in the spatial domain. Efforts to standardize video data exchange via storage media or via communication networks have been actively in progress since the early 1980s. A number of international video and audio standardization activities started within the International Telegraph and Telephone Consultative Committee (CCITT), followed by the International Radio Consultative Committee (CCIR), and the International Organization for Standardization / International Electrotechnical Commission (ISO/IEC). An experts group, known as the Moving Picture Experts Group (MPEG), was established in 1988 in the framework of the Joint ISO/IEC Technical Committee with an objective to develop standards for coded representation of moving pictures, associated audio, and their combination for storage and retrieval of digital media. -

DCT——Page 1- Chow CS525—DCT——Page 2

Why Is Compression Needed? • Typical NTSC video: 460x340=148000 pels/frame, 30 frames/sec, 16 bits/pel (YIQ) = 71 Mbps • Bandwidth is a limited and expensive resource • Storage: enormous for uncompressed video • Compression processing: relatively cheap • Video Camera, VCR, TV Receiver • Comparison of NTSC and HDTV. Reference: “Discrete Cosine Transform Algorithms, Advantages, Application”, Rao and Yip, Academic Press, 1990.
Video Compression Applications: • Cable TV distribution • Interactive communications: videophone, videoconferencing, videotex • Digital storage media: CD-ROM, CD-V, digital VTR • Network database services: video library, video information provider • Video-on-demand • Broadcasting • Video surveillance
Applications vs. Bit Rates, listed as application: standard, bit rate range
Fax: CCITT G3/G4/JBIG, 4.8-14.4 kbps
Still Image: JPEG, 56/64 kbps
Videophone (analog): 9.6-19.2 kbps
Videophone (digital): CCITT H.261 px64, 64-128 kbps
Videoconferencing: CCITT H.261 px64, 320-1920 kbps
Entertainment video: MPEG, 1-3 Mbps
Broadcast video: MPEG II, 4-10 Mbps
Intraframe JPEG: JPEG, 10-20 Mbps
Digital VCR: MPEG II, 8-20 Mbps
NTSC (very high quality): CCIR/ANSI, 34/45 Mbps
HDTV (broadcasting): FCC (US only), 20-25 Mbps
HDTV (studio): 30-45 Mbps
Why can video data be compressed? Redundancy: • Intraframe • Interframe
Compression Techniques: • Spatial domain: DPCM, Transform, Subband, Vector quantization • Temporal domain: frame difference, motion compensation
Transform Coding Concept: • Why can data be compressed? Redundancy • Orthogonal transform: decorrelate data into a more compact form so that redundancy can be discarded more easily. -
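The 71 Mbps figure in this excerpt is the product of pels per frame, frames per second and bits per pel. A minimal sketch of that arithmetic follows, taking the slide's 148000 pels/frame at face value; the function name uncompressed_bps is hypothetical.

```python
def uncompressed_bps(pels_per_frame, frames_per_sec, bits_per_pel):
    """Raw (uncompressed) video bit rate in bits per second."""
    return pels_per_frame * frames_per_sec * bits_per_pel

if __name__ == "__main__":
    # Figures as stated on the slide: 148000 pels/frame, 30 frames/sec, 16 bits/pel.
    rate = uncompressed_bps(148000, 30, 16)
    print(f"{rate / 1e6:.2f} Mbps")
```

Set against the 1-3 Mbps range listed for entertainment video, this raw rate shows why the slide treats compression as necessary.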

NTSC Monochrome Video Display Module

ENGINEERING, QUALITY AND RELIABILITY SPECIFICATION NTSC Monochrome Video Display Module Model NDP-0090-MV5 Revision A DISPLAYTECH CONFIDENTIAL Displaytech, Inc. 2602 Clover Basin Drive Longmont, Colorado 80503-7604 USA 303-772-2191 303-772-2193 fax 800-397-8124 USA toll free www.displaytech.com Table of Contents: ENGINEERING, QUALITY AND RELIABILITY SPECIFICATION; Features; Applications; INTRODUCTION; SYSTEM OVERVIEW; ELECTRICAL SPECIFICATION; ABSOLUTE MAXIMUM RATINGS; RECOMMENDED OPERATING CONDITIONS -

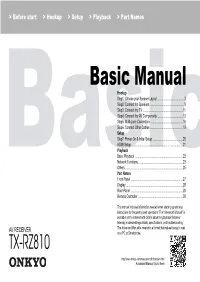

Basic Manual Hookup Step1: Choose Your Speaker Layout

Basic Manual
Hookup: Step1: Choose your Speaker Layout; Step2: Connect the Speakers; Step3: Connect the TV; Step4: Connect the AV Components; Step5: Multi-zone Connection; Step6: Connect Other Cables
Setup: Step7: Power On & Initial Setup; HDMI Setup
Playback: Basic Playback; Network Functions; Others
Part Names: Front Panel; Display; Rear Panel; Remote Controller
This manual includes information needed when starting up and also instructions for frequently used operations. The "Advanced -

It Is Not Television Anymore: Designing Digital Video for Learning And

It is not television anymore: Designing digital video for learning and assessment. Daniel L. Schwartz & Kevin Hartman, School of Education, Stanford University. To Appear in Video Research in the Learning Sciences; R. Goldman, S. Derry, R. Pea, & B. Barron (Eds.). Mahwah, NJ: Erlbaum.
When used effectively, video is a powerful technology for learning. Researchers can examine videotapes to learn about patterns of classroom interaction. In-service teachers can review videos of their own teaching to reveal their strengths and weaknesses as instructors. In these instances, the video captures naturally occurring events that often elude the naked eye when seen in person but become clearer upon review. In this chapter, we consider a different use of video for learning. We describe the use of designed video, where the author of a video decides on its components and features beforehand. For example, take the case of a scripted video of a child incorrectly solving a math problem. A researcher can ask other children to watch the video and comment on the errors they notice. When used this way, the video is designed as an assessment that helps researchers learn what the children know. Designed video can also help students learn. For example, a professor might use the same video clip to help explain common mathematical errors to an entire class. Designed video can support learning in many ways. In this chapter, we provide a simple framework for mapping uses of video into desired and observable learning outcomes. We then show how this framework can be applied when designing video embedded in multimedia environments.