Word and Document Embedding with vMF-Mixture Priors on Context Word Vectors

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications

-

Customer Order Form

#374 | NOV19 PREVIEWS world.com Name: ORDERS DUE NOV 18 THE COMIC SHOP’S CATALOG PREVIEWSPREVIEWS CUSTOMER ORDER FORM CUSTOMER 601 7 Nov19 Cover ROF and COF.indd 1 10/10/2019 3:23:57 PM Nov19 CBLDF Ad.indd 1 10/10/2019 3:36:53 PM PROTECTOR #1 MARVEL ACTION: IMAGE COMICS SPIDER-MAN #1 IDW PUBLISHING WONDER WOMAN #750 SEX CRIMINALS #26 DC COMICS IMAGE COMICS IRON MAN 2020 #1 MARVEL COMICS BATMAN #86 DC COMICS STRANGER THINGS: INTO THE FIRE #1 DARK HORSE COMICS RED SONJA: AGE OF CHAOS! #1 DYNAMITE ENTERTAINMENT SONIC THE HEDGEHOG #25 IDW PUBLISHING FRANKENSTEIN HEAVY VINYL: UNDONE #1 Y2K-O! OGN SC DARK HORSE COMICS BOOM! STUDIOS NOv19 Gem Page ROF COF.indd 1 10/10/2019 4:18:59 PM FEATURED ITEMS COMIC BOOKS • GRAPHIC NOVELS KIDZ #1 l ABLAZE Octavia Butler’s Parable of the Sower HC l ABRAMS COMICARTS Cat Shit One #1 l ANTARCTIC PRESS 1 Owly Volume 1: The Way Home GN (Color Edition) l GRAPHIX Catherine’s War GN l HARPER ALLEY BOWIE: Stardust, Rayguns & Moonage Daydreams HC l INSIGHT COMICS The Plain Janes GN SC/HC l LITTLE BROWN FOR YOUNG READERS Nils: The Tree of Life HC l MAGNETIC PRESS 1 The Sunken Tower HC l ONI PRESS The Runaway Princess GN SC/HC l RANDOM HOUSE GRAPHIC White Ash #1 l SCOUT COMICS Doctor Who: The 13th Doctor Season 2 #1 l TITAN COMICS Tank Girl Color Classics ’93-’94 #3.1 l TITAN COMICS Quantum & Woody #1 (2020) l VALIANT ENTERTAINMENT Vagrant Queen: A Planet Called Doom #1 l VAULT COMICS BOOKS • MAGAZINES Austin Briggs: The Consummate Illustrator HC l AUAD PUBLISHING The Black Widow Little Golden Book HC l GOLDEN BOOKS -

Free Catalog

Featured New Items DC COLLECTING THE MULTIVERSE On our Cover The Art of Sideshow By Andrew Farago. Recommended. MASTERPIECES OF FANTASY ART Delve into DC Comics figures and Our Highest Recom- sculptures with this deluxe book, mendation. By Dian which features insights from legendary Hanson. Art by Frazetta, artists and eye-popping photography. Boris, Whelan, Jones, Sideshow is world famous for bringing Hildebrandt, Giger, DC Comics characters to life through Whelan, Matthews et remarkably realistic figures and highly al. This monster-sized expressive sculptures. From Batman and Wonder Woman to The tome features original Joker and Harley Quinn...key artists tell the story behind each paintings, contextualized extraordinary piece, revealing the design decisions and expert by preparatory sketches, sculpting required to make the DC multiverse--from comics, film, sculptures, calen- television, video games, and beyond--into a reality. dars, magazines, and Insight Editions, 2020. paperback books for an DCCOLMSH. HC, 10x12, 296pg, FC $75.00 $65.00 immersive dive into this SIDESHOW FINE ART PRINTS Vol 1 dynamic, fanciful genre. Highly Recommened. By Matthew K. Insightful bios go beyond Manning. Afterword by Tom Gilliland. Wikipedia to give a more Working with top artists such as Alex Ross, accurate and eye-opening Olivia, Paolo Rivera, Adi Granov, Stanley look into the life of each “Artgerm” Lau, and four others, Sideshow artist. Complete with fold- has developed a series of beautifully crafted outs and tipped-in chapter prints based on films, comics, TV, and ani- openers, this collection will mation. These officially licensed illustrations reign as the most exquisite are inspired by countless fan-favorite prop- and informative guide to erties, including everything from Marvel and this popular subject for DC heroes and heroines and Star Wars, to iconic classics like years to come. -

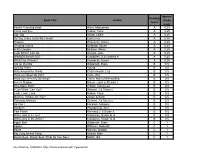

ACCREDITED TEXAS STALLIONS As of April 25, 2019 (Please Note That This List May Not Be Complete; Call the TTA Office with Any Questions.)

ACCREDITED TEXAS STALLIONS as of April 25, 2019 (Please note that this list may not be complete; call the TTA office with any questions.) NAME ACCREDITED SIRE DAM YR FOALED A. P. RIDGE 1/1/2019 A.P. INDY SEEBE 2006 A.P JETTER 1/1/2004 A. P JET OUEEE BEBE 1999 ADMINISTRATION 1/1/2005 HENNESSY SCHAUM'S CLASS 2000 ADVANCEEXPECTATION 1/1/2009 VALID EXPECTATIONS IRON'S ADVANCE 2006 AFLEET BOB 1/1/2011 NORTHERN AFLEET CAROLE VEE 2005 ANDEAN CHASQUI 1/1/1996 MISWAKI GOLD BRIEF BIKINI 1991 ANGLIANA 1/1/2010 GIANT'S CAUSEWAY PRATELLA 2002 BALLISTIC KITTEN 1/1/2017 KITTEN'S JOY BALLADE'S GIRL 2010 BEHINDATTHEBAR 1/1/2018 FOREST WILDCAT RHIANA 2005 BETA CAPO 1/1/2011 LANGFUHR MINERS MIRAGE 2004 BIG LUKEY 1/1/2002 HOLY BULL BOLD ESCAPE 1996 BLACK RAIDER 1/1/2013 ORIENTATE PEN'N PAD 2008 BOBBY'S RED JET 1/1/2005 HENNESSY DOMINI ARLYN 1999 BOONE'S MILL 1/1/2001 CARSON CITY SHY MISS 1992 BRADESTER 1/1/2017 LION HEART GRANDESTOFALL 2010 BUG A BOO BEN 1/1/2018 AWESOME AGAIN COLLECT THE CASH 2003 BYARS 1/1/2005 DEPUTY MINISTER THIRTY FLAGS 1993 CAMP BRET 1/1/2012 FOREST CAMP SHES GOT THE LOOK 2007 CAPTAIN COUNTDOWN 1/1/2003 RELAUNCH BEARLY COOKING 1996 CASUAL AL 1/1/2015 AL SABIN CASUAL VIC 2009 CELESTIC NIGHT 1/1/2014 VINDICATION CELESTIC 2008 CHIEF HALO 1/1/2011 SUNNY'S HALO HULA ZIP 2002 CHIEF THREE SOX 1/1/2002 CHIEF HONCHO RAMONA KAY 1995 CHITOZ 1/1/2010 FOREST WILDCAT WICHITOZ 2005 CLARKE LANE 1/1/2019 GIANT'S CAUSEWAY WONDER LADY ANNE L. -

2019 Sunshine Autumn Sieger September 28 & 29, 2019

The International Dog Shows and International All Breed Canine Association 2019 Sunshine Autumn Sieger September 28 & 29, 2019 SHOW ROSTER updated: 9/25/2019 9:38:30 PM BREED CAT# DOG & OWNER'S INFORMATION Shows: 1234 NAME: Azari Ruzka Always ‘N’ Forever REG #S: Hp57528901 SEX: Bitch DOG SHOW CLASS: Junior Puppy Class (6-9) ADD'L Afghan Hound 43007 CLASSES: OWNER(S): K Irazabal D. Hichborn Clermont FL BREEDER(S): D. Hichborn K Irazabal K. Smith SIRE: Gch Cynergy’s The Magic of it all at Ruzka DAM: Ch Azari Ruzka Twilight Eclipse NAME: Ruzka Azari The Enchanted one REG #S: HP57528902 SEX: Bitch DOG SHOW CLASS: Junior Puppy Class (6-9) ADD'L Afghan Hound 43006 CLASSES: Bred by Exhibitor OWNER(S): M Lopez-Silvero D. Hichborn Clermont FL BREEDER(S): D. Hichborn K. Irazabal K. Smith SIRE: Gch Cynergy’s The Magic of it all at Ruzka DAM: Ch Azari Ruzka Twilight eclipse NAME: Minda's Misty Isle's Aleta REG #S: WS64720502 SEX: Bitch DOG SHOW CLASS: Baby (3-6) ADD'L CLASSES: OWNER(S): Akita 42901 Katherine Nash Ronald Nash Melbourne FL BREEDER(S): Hollie Walker Francee Hamblet Lynn Morgan SIRE: CH Someli Born Harry Potter DAM: Minda & Midnite Acesup Harper's Bazaar NAME: After All Its Only Make Believe REG #S: RN32586603 SEX: Bitch DOG SHOW CLASS: Open Class ADD'L CLASSES: American Hairless 42566 OWNER(S): Tammy K. Stefanie Lakeland FL BREEDER(S): Melinda Vinson SIRE: GCHG CH Bur-way Trin 2 Raise Some Terrier Kane DAM: Feeriya Magic Adelaida Velvet NAME: Vinelake's Puddle Jumper REG #S: DN57719102 SEX: Dog DOG SHOW CLASS: Baby (3-6) ADD'L CLASSES: -

Book Title Author Reading Level Approx. Grade Level

Approx. Reading Book Title Author Grade Level Level Anno's Counting Book Anno, Mitsumasa A 0.25 Count and See Hoban, Tana A 0.25 Dig, Dig Wood, Leslie A 0.25 Do You Want To Be My Friend? Carle, Eric A 0.25 Flowers Hoenecke, Karen A 0.25 Growing Colors McMillan, Bruce A 0.25 In My Garden McLean, Moria A 0.25 Look What I Can Do Aruego, Jose A 0.25 What Do Insects Do? Canizares, S.& Chanko,P A 0.25 What Has Wheels? Hoenecke, Karen A 0.25 Cat on the Mat Wildsmith, Brain B 0.5 Getting There Young B 0.5 Hats Around the World Charlesworth, Liza B 0.5 Have you Seen My Cat? Carle, Eric B 0.5 Have you seen my Duckling? Tafuri, Nancy/Greenwillow B 0.5 Here's Skipper Salem, Llynn & Stewart,J B 0.5 How Many Fish? Cohen, Caron Lee B 0.5 I Can Write, Can You? Stewart, J & Salem,L B 0.5 Look, Look, Look Hoban, Tana B 0.5 Mommy, Where are You? Ziefert & Boon B 0.5 Runaway Monkey Stewart, J & Salem,L B 0.5 So Can I Facklam, Margery B 0.5 Sunburn Prokopchak, Ann B 0.5 Two Points Kennedy,J. & Eaton,A B 0.5 Who Lives in a Tree? Canizares, Susan et al B 0.5 Who Lives in the Arctic? Canizares, Susan et al B 0.5 Apple Bird Wildsmith, Brain C 1 Apples Williams, Deborah C 1 Bears Kalman, Bobbie C 1 Big Long Animal Song Artwell, Mike C 1 Brown Bear, Brown Bear What Do You See? Martin, Bill C 1 Found online, 7/20/2012, http://home.comcast.net/~ngiansante/ Approx. -

Azbill Sawmill Co. I-40 at Exit 101 Southside Cedar Grove, TN 731-968-7266 731-614-2518, Michael Azbill [email protected] $6,0

Azbill Sawmill Co. I-40 at Exit 101 Southside Cedar Grove, TN 731-968-7266 731-614-2518, Michael Azbill [email protected] $6,000 for 17,000 comics with complete list below in about 45 long comic boxes. If only specific issue wanted email me your inquiry at [email protected] . Comics are bagged only with no boards. Have packed each box to protect the comics standing in an upright position I've done ABSOLUTELY all the work for you. Not only are they alphabetzied from A to Z start to finish, but have a COMPLETE accurate electric list. Dates range from 1980's thur 2005. Cover price up to $7.95 on front of comic. Dates range from mid to late 80's thru 2005 with small percentage before 1990. Marvel, DC, Image, Cross Gen, Dark Horse, etc. Box AB Image-Aaron Strain#2(2) DC-A. Bizarro 1,3,4(of 4) Marvel-Abominations 2(2) Antartic Press-Absolute Zero 1,3,5 Dark Horse-Abyss 2(of2) Innovation- Ack the Barbarion 1(3) DC-Action Comis 0(2),599(2),601(2),618,620,629,631,632,639,640,654(2),658,668,669,671,672,673(2),681,684,683,686,687(2/3/1 )688(2),689(4),690(2),691,692,693,695,696(5),697(6),701(3),702,703(3),706,707,708(2),709(2),712,713,715,716( 3),717(2),718(4),720,688,721(2),722(2),723(4),724(3),726,727,728(3),732,733,735,736,737(2),740,746,749,754, 758(2),814(6), Annual 3(4),4(1) Slave Labor-Action Girl Comics 3(2) DC-Advanced Dungeons & Dragons 32(2) Oni Press-Adventrues Barry Ween:Boy Genius 2,3(of6) DC-Adventrure Comics 247(3) DC-Adventrues in the Operation:Bollock Brigade Rifle 1,2(2),3(2) Marvel-Adventures of Cyclops & Phoenix 1(2),2 Marvel-Adventures -

Artless: Ignorance in the Novel and the Making of Modern Character

Artless: Ignorance in the Novel and the Making of Modern Character By Brandon White A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in English in the Graduate Division of the University of California, Berkeley Committee in charge: Professor C. D. Blanton, Chair Professor D. A. Miller Professor Kent Puckett Professor Marianne Constable Spring 2017 Abstract Artless: Ignorance in the Novel and the Making of Modern Character by Brandon White Doctor of Philosophy in English University of California, Berkeley Professor C. D. Blanton, Chair Two things tend to be claimed about the modernist novel, as exemplified at its height by Virginia Woolf’s The Waves (1931) — first, that it abandons the stability owed to conventional characterization, and second, that the narrow narration of intelligence alone survives the sacrifice. For The Waves, the most common way of putting this is to say that the novel contains “not characters, but characteristics,” “not characters[,] but voices,” but that the voices that remain capture “highly conscious intelligence” at work. Character fractures, but intelligence is enshrined. “Artless: Ignorance in the Novel and the Making of Modern Character” argues that both of these presumptions are misplaced, and that the early moments of British modernism instead consolidated characterization around a form of ignorance, or what I call “artlessness” — a condition through which characters come to unlearn the educations that have constituted them, and so are able to escape the modes of knowledge imposed by the prevailing educational establishment. Whether for Aristotle or Hegel, Freud or Foucault, education has long been understood as the means by which subjects are formed; with social circumstances put in place before us, any idea of independent character is only a polite fiction. -

Released 18Th March 2020 BOOM!

Released 18th March 2020 BOOM! STUDIOS JAN208439 ALIENATED #1 (OF 6) 3RD PTG JAN201325 ALIENATED #2 (OF 6) JAN208429 ALIENATED #2 (OF 6) FOC QUINONES VAR JAN201336 FIREFLY #15 CVR A MAIN ASPINALL JAN201337 FIREFLY #15 CVR B PREORDER KANO VAR JAN208430 FIREFLY #15 FOC MCDAID VAR NOV191302 GARFIELD TP GARZILLA JAN201328 HEARTBEAT #5 (OF 5) JAN208431 HEARTBEAT #5 (OF 5) FOC LOTAY VAR JAN201366 LUMBERJANES #72 CVR A LEYH JAN201367 LUMBERJANES #72 CVR B PREORDER YEE VAR NOV191301 LUMBERJANES TO MAX ED HC VOL 06 JAN201339 POWER RANGERS TEENAGE MUTANT NINJA TURTLES #4 CVR A MORA JAN201342 POWER RANGERS TEENAGE MUTANT NINJA TURTLES #4 DON MONTES JAN208432 POWER RANGERS TEENAGE MUTANT NINJA TURTLES #4 FOC VAR JAN201340 POWER RANGERS TEENAGE MUTANT NINJA TURTLES #4 LEO MONTES JAN201341 POWER RANGERS TEENAGE MUTANT NINJA TURTLES #4 MIKE MONTES JAN201343 POWER RANGERS TEENAGE MUTANT NINJA TURTLES #4 RAPH MONTES JAN201326 RED MOTHER #4 JAN208434 RED MOTHER #4 FOC INGRANATA VAR JAN201327 SOMETHING IS KILLING CHILDREN #6 JAN208436 SOMETHING IS KILLING CHILDREN #6 25 COPY INCV JAN208435 SOMETHING IS KILLING CHILDREN #6 FOC VAR JAN201319 WICKED THINGS #1 CVR A SARIN JAN201320 WICKED THINGS #1 CVR B ALLISON JAN208438 WICKED THINGS #1 FOC VAR DARK HORSE COMICS NOV190280 ART OF CUPHEAD HC NOV190281 ART OF CUPHEAD HC LTD ED JAN200318 BANG #2 (OF 5) CVR A TORRES JAN200319 BANG #2 (OF 5) CVR B KINDT DEC190338 CYBERPUNK 2077 NIGHT CITY KEYCHAIN DEC190337 CYBERPUNK 2077 NIGHT CITY MAGNET DEC190334 CYBERPUNK 2077 SILVERHAND LOGO KEYCHAIN DEC190336 CYBERPUNK 2077 -

2014 Submitted by K.C

New Title Holders earned January, February & March 2014 Submitted by K.C. Artley AKC Conformation Champion Baruschka’s Krassiwaja SC, br: Amy Regius Alchemy JC x Enchantess V. (CH) Sikula & Anne Midgarden, ow; Kasey Troybhiko, br/ow: Carol Mchugh CH Aashtoria’s My Secret Agenda (D) & Dale Parks & Leah Thorp CH Timeless One Of These Nights (D) HP44775402, 29-Dec-2012, by GCH CH Majenkir Ice And Fire At Avalyn HP40366902, 21-Mar-2011, by Jubilee Aashtoria Wildhunt Hidden Agenda (B) HP43679505, 3-Jul-2012 by CH Glacier Tinman x CH Renaissance CGC x CH Aashtoria Irishbrook La Majenkir Front And Center x CH Stones Of Iron, br: Chris Swilley & Rochelle JC, br: Robin Riel, ow: Ken Majenkir My Vixen Aliy, br: Karen Robin Casey & Cynthia Gredys, ow: Porter & Robin Riel Staudt-Cartabona, ow: Lynne Bennett Dale Parks & Kasey Parks & Robin CH Astara’s Late Arrival (D) HP21323901, CH Maringa’s Nights In White Satin (D) Casey 18-Apr-2005, by Astara’s Bring HP43593102, 3-Jul-2012 by GCH CH Vento Danza Victoria Secret (B) It On x CH Lido Keiya Rose, br: Maringa’s Brand New Day x CH HP40869109, 23-Jun-2011, by GCH Carvey Graves, ow: Karen & William Maringa’s Cotton Candy JC, br/ow: Aashtoria Wildhunt 4 Your I’s Only Ackerman David Hardin & Stephanie Moss RA ROM-C x GCH Vento Danza CH Attaway-Kinobi Gala Margarita CH Nickolai Maviam Darkside Of The Independence Day, br: Dee Burkholder (B) HP43909801, 6-Aug-2012, by Moon (B) HP34500718, 8-May-2009, & Cassey Cedeno, ow: Sandra Miller Chabibi’s Korona-Kazan SC ROM-P by CH Téine Fire & Brimstone RN JC CH Wild -

Batman's Animated Brain(S) Lisa Kort-Butler

University of Nebraska - Lincoln DigitalCommons@University of Nebraska - Lincoln Sociology Faculty Presentations & Talks Sociology, Department of 2019 Batman's Animated Brain(s) Lisa Kort-Butler Follow this and additional works at: https://digitalcommons.unl.edu/socprez Part of the American Popular Culture Commons, Criminology Commons, Critical and Cultural Studies Commons, Graphic Communications Commons, Other Psychology Commons, Other Sociology Commons, and the Social Control, Law, Crime, and Deviance Commons This Presentation is brought to you for free and open access by the Sociology, Department of at DigitalCommons@University of Nebraska - Lincoln. It has been accepted for inclusion in Sociology Faculty Presentations & Talks by an authorized administrator of DigitalCommons@University of Nebraska - Lincoln. Citation: Kort‐Butler, Lisa A. “Batman’s Animated Brain(s).” Paper presented to the Batman in Popular Culture Conference, Bowling Green State University, Bowling Green, OH. April 12, 2019. [email protected] ____________ Image: Batman’s brain in Batman: The Brave and the Bold, “Journey to the Center of the Bat!” 1 I was in the beginning stages of a project on the social story of the brain (and a neuroscience more broadly), when a Google image search brought me a purchasable phrenology of Batman, then a Batman‐themed Heart and Brain cartoon of the Brain choosing the cape‐and‐cowl. (Thanks algorithms! I had, a few years back, published on representations of deviance and justice in superhero cartoons [Kort‐Butler 2012 and 2013], and recorded a podcast on superheroes’ physical and mental trauma.) 
____________________ Images, left to right: https://wharton.threadless.com/designs/batman‐phrenology http://theawkwardyeti.com/comic/everyone‐knows/ 2 A quick search of Batman’s brain yielded something interesting: various pieces on the psychology of Batman (e.g., Langley 2012), Zehr’s (2008) work on Bruce Wayne’s training plans and injuries, the science fictions of Batman comics in the post‐World War II era (Barr 2008; e.g., Detective Comics, Vol. -

{Download PDF} Forever Evil: Blight

FOREVER EVIL: BLIGHT PDF, EPUB, EBOOK Mikel Janin,Ray Fawkes | 400 pages | 07 Oct 2014 | DC Comics | 9781401250065 | English | United States Comic books in 'Forever Evil: Blight' About this product. Stock photo. Brand new: Lowest price The lowest-priced brand-new, unused, unopened, undamaged item in its original packaging where packaging is applicable. See all 5 brand new listings. Buy It Now. Add to cart. With unspeakable evil called Blight unleashed on the world, it's up to the Justice League Dark, Swamp Thing, Pandora and the Phantom Stranger to try and stop them from destroying everything around them. Additional Product Features Dewey Edition. Show More Show Less. Add to Cart. They travel to the bottom of the ocean, where they arrive at Nan Madol , a deserted undersea kingdom. There, Sea King begins to attack them and awakens the spirits of Nan Madol to attack the surface. Pandora informs Constantine that they have to stop him, as the Sea King is housing Deadman's spirit. On the surface, the group is able to stop Sea King's attack, and break the connection Sea King's body had on Deadman. Deadman wakes up and informs the group he jumped into Sea King's body during the Crime Syndicate's arrival, and that it twisted his mind so he did not know how he was. Constantine removes the last traces of Sea King's consciousness and locks Deadman in the body to have a weapon to use against the Crime Syndicate. The Presence appears to the Stranger and tells him he should join Blight in order to continue on his path to redemption. -

Reading Counts

Title Author Reading Level Sorted Alphabetically by Author's First Name Barn, The Avi 5.8 Oedipus The King (Knox) Sophocles 9 Enciclopedia Visual: El pla... A. Alessandrello 6 Party Line A. Bates 3.5 Green Eyes A. Birnbaum 2.2 Charlotte's Rose A. E. Cannon 3.7 Amazing Gracie A. E. Cannon 4.1 Shadow Brothers, The A. E. Cannon 5.5 Cal Cameron By Day, Spiderman A. E. Cannon 5.9 Four Feathers, The A. E. W. Mason 9 Guess Where You're Going... A. F. Bauman 2.5 Minu, yo soy de la India A. Farjas 3 Cat-Dogs, The A. Finnis 5.5 Who Is Tapping At My Window? A. G. Deming 1.5 Infancia animal A. Ganeri 2 camellos tienen joroba, Los A. Ganeri 4 Me pregunto-el mar es salado A. Ganeri 4.3 Comportamiento animal A. Ganeri 6 Lenguaje animal A. Ganeri 7 vida (origen y evolución), La A. Garassino 7.9 Takao, yo soy de Japón A. Gasol Trullols 6.9 monstruo y la bibliotecaria A. Gómez Cerdá 4.5 Podría haber sido peor A. H. Benjamin 1.2 Little Mouse...Big Red Apple A. H. Benjamin 2.3 What If? A. H. Benjamin 2.5 What's So Funny? (FX) A. J. Whittier 1.8 Worth A. LaFaye 5 Edith Shay A. LaFaye 7.1 abuelita aventurera, La A. M. Machado 2.9 saltamontes verde, El A. M. Matute 7.1 Wanted: Best Friend A. M. Monson 2.8 Secret Of Sanctuary Island A. M. Monson 4.9 Deer Stand A.