Optimizing Linux* for Dual-Core AMD Opteron™ Processors

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

AMD AthlonXP/Athlon/Duron Processor Based MAIN BOARD

All manuals and user guides at all-guides.com FN41 AMD AthlonXP/Athlon/Duron Processor Based MAIN BOARD WARNING Thermal management is essential for processors with a speed of 600MHz and above. Hence, we recommend that you use a CPU fan qualified by AMD or the motherboard manufacturer. Please also make sure the CPU and fan are securely fastened. Otherwise, improper fan installation not only makes the system unstable but could also damage both the CPU and the motherboard because of insufficient thermal dissipation. If you would like to know more about thermal topics, please see the AMD website for detailed thermal requirements at: http://www.amd.com/products/athlon/thermals http://www.amd.com/products/duron/thermals Shuttle® FN41 Socket 462 AMD AthlonXP/Athlon/Duron Processor based DDR Mainboard Manual Version 1.0 Copyright © 2002 by Shuttle® Inc. All Rights Reserved. No part of this publication may be reproduced, transcribed, stored in a retrieval system, translated into any language, or transmitted in any form or by any means, electronic, mechanical, magnetic, optical, chemical, photocopying, manual, or otherwise, without prior written permission from Shuttle® Inc. Disclaimer Shuttle® Inc. shall not be liable for any incidental or consequential damages resulting from the performance or use of this product. This company makes no representations or warranties regarding the contents of this manual. Information in this manual has been carefully checked for reliability; however, no guarantee is given as to the correctness of the contents.

Motherboard Gigabyte GA-A75N-USB3

Socket AM3+ - AMD 990FX - GA-990FXA-UD5 (rev. 1.x)

Socket AM3+ (Motherboard Model: GA-990FXA-UD5, PCB: 1.x)

| Vendor | CPU Model | Frequency | L2 Cache | L3 Cache | Core Name | Process | Stepping | Wattage | System Bus (MT/s) | Since BIOS Version |
|---|---|---|---|---|---|---|---|---|---|---|
| AMD | FX-8150 | 3600MHz | 1MBx8 | 8MB | Bulldozer | 32nm | B2 | 125W | 5200 | F5 |
| AMD | FX-8120 | 3100MHz | 1MBx8 | 8MB | Bulldozer | 32nm | B2 | 125W | 5200 | F5 |
| AMD | FX-8120 | 3100MHz | 1MBx8 | 8MB | Bulldozer | 32nm | B2 | 95W | 5200 | F5 |
| AMD | FX-8100 | 2800MHz | 1MBx8 | 8MB | Bulldozer | 32nm | B2 | 95W | 5200 | F5 |
| AMD | FX-6100 | 3300MHz | 1MBx6 | 8MB | Bulldozer | 32nm | B2 | 95W | 5200 | F5 |
| AMD | FX-4100 | 3600MHz | 1MBx4 | 8MB | Bulldozer | 32nm | B2 | 95W | 5200 | F5 |

Socket AM3 (Motherboard Model: GA-990FXA-UD5, PCB: 1.x)

| Vendor | CPU Model | Frequency | L2 Cache | L3 Cache | Core Name | Process | Stepping | Wattage | System Bus (MT/s) | Since BIOS Version |
|---|---|---|---|---|---|---|---|---|---|---|
| AMD | Phenom II X6 1100T | 3300MHz | 512KBx6 | 6MB | Thuban | 45nm | E0 | 125W | 4000 | F2 |
| AMD | Phenom II X6 1090T | 3200MHz | 512KBx6 | 6MB | Thuban | 45nm | E0 | 125W | 4000 | F2 |
| AMD | Phenom II X6 1075T | 3000MHz | 512KBx6 | 6MB | Thuban | 45nm | E0 | 125W | 4000 | F2 |
| AMD | Phenom II X6 1065T | 2900MHz | 512KBx6 | 6MB | Thuban | 45nm | E0 | 95W | 4000 | F2 |
| AMD | Phenom II X6 1055T | 2800MHz | 512KBx6 | 6MB | Thuban | 45nm | E0 | 125W | 4000 | F2 |
| AMD | Phenom II X6 1055T | 2800MHz | 512KBx6 | 6MB | Thuban | 45nm | E0 | 95W | 4000 | F2 |
| AMD | Phenom II X6 1045T | 2700MHz | 512KBx6 | 6MB | Thuban | 45nm | E0 | 95W | 4000 | F2 |
| AMD | Phenom II X6 1035T | 2600MHz | 512KBx6 | 6MB | Thuban | 45nm | E0 | 95W | 4000 | F2 |
| AMD | Phenom II X4 980 | 3700MHz | 512KBx4 | 6MB | Deneb | 45nm | C3 | 125W | 4000 | F6 |
| AMD | Phenom II X4 975 | 3600MHz | 512KBx4 | 6MB | Deneb | 45nm | C3 | 125W | 4000 | F2 |
| AMD | Phenom II X4 970 | 3500MHz | 512KBx4 | 6MB | Deneb | 45nm | C3 | 125W | 4000 | F2 |
| AMD | Phenom II X4 965 | 3400MHz | 512KBx4 | | | | | | | |

AMD Ryzen™ PRO & Athlon™ PRO Processors Quick Reference Guide

AMD Ryzen™ PRO & Athlon™ PRO Processors Quick Reference guide AMD Ryzen™ PRO Processors with Radeon™ Graphics for Business Laptops (Socket FP6/FP5) 1 Core/Thread Frequency Boost/Base L2+L3 Cache Graphics Node TDP Intel vPro Core/Thread Frequency Boost*/Base L2+L3 Cache Graphics Node TDP AMD PRO technologies COMPARED TO 4.9/1.1 Radeon™ 6/12 UHD AMD Ryzen™ 7 PRO 8/16 Up to 12MB Graphics 7nm 15W intel Intel Core i7 10810U GHz 13MB 4750U 4.1/1.7 GHz CORE i7 14nm 15W (7 Cores) 10th Gen Intel Core i7 10610U 4/8 4.9/1.8 9MB UHD GHz AMD Ryzen™ 7 PRO Up to Radeon™ intel 4/8 6MB 10 12nm 15W 4.8/1.9 3700U 4.0/2.3 GHz Vega CORE i7 Intel Core i7 8665U 4/8 9MB UHD 14nm 15W 8th Gen GHz Radeon™ AMD Ryzen™ 5 PRO 6/12 Up to 11MB Graphics 7nm 15W intel 4.4/1.7 4650U 4.0/2.1 GHz CORE i5 Intel Core i5 10310U 4/8 7MB UHD 14nm 15W GHz (6 Cores) 10th Gen AMD Ryzen™ 5 PRO 4/8 Up to 6MB Radeon™ 12nm 15W intel 4.1/1.6 3500U 3.7/2.1 GHz Vega8 CORE i5 Intel Core i5 8365U 4/8 7MB UHD 14nm 15W th GHz 8 Gen Radeon™ AMD Ryzen™ 3 PRO 4/8 Up to 6MB Graphics 7nm 15W intel 4.1/2.1 4450U 3.7/2.5 GHz CORE i3 Intel Core i3 10110U 2/4 5MB UHD 14nm 15W (5 Cores) 10th Gen GHz AMD Ryzen™ 3 PRO 4/4 Up to 6MB Radeon™ 12nm 15W intel 3.9/2.1 3300U 3.5/2.1 GHz Vega6 CORE i3 Intel Core i3 8145U 2/4 4.5MB UHD 14nm 15W 8th Gen GHz AMD Athlon™ PRO Processors with Radeon™ Vega Graphics for Business Laptops (Socket FP5) AMD Athlon™ PRO Up to Radeon™ intel Intel Pentium 4415U 2/4 2.3 GHz 2.5MB HD 610 14nm 15W 300U 2/4 3.3/2.4 GHz 5MB Vega3 12nm 15W 1. -

SQL Server 2005 on the IBM Eserver xSeries 460 Enterprise Server

IBM Front cover SQL Server 2005 on the IBM Eserver xSeries 460 Enterprise Server

• Describes how “scale-up” solutions suit enterprise databases
• Explains the XpandOnDemand features of the xSeries 460
• Introduces the features of the new SQL Server 2005

Michael Lawson, Yuichiro Tanimoto, David Watts

ibm.com/redbooks Redpaper

International Technical Support Organization, December 2005

Note: Before using this information and the product it supports, read the information in “Notices” on page vii.

First Edition (December 2005). This edition applies to Microsoft SQL Server 2005 running on the IBM Eserver xSeries 460. © Copyright International Business Machines Corporation 2005. All rights reserved. Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

Contents

• Notices
• Trademarks
• Preface
• The team that wrote this Redpaper
• Become a published author
• Comments welcome
• Chapter 1. The x460 enterprise server
• 1.1 Scale up versus scale out
• 1.2 X3 Architecture
• 1.2.1 The X3 Architecture servers
• 1.2.2 Key features
• 1.2.3 IBM XA-64e third-generation chipset
• 1.2.4 XceL4v cache
• 1.3 XpandOnDemand
• 1.4 Memory subsystem
• 1.5 Multi-node configurations
• 1.5.1 Positioning the x460 and the MXE-460
• 1.5.2 Node connectivity
• 1.5.3 Partitioning
• 1.6 64-bit with EM64T
• 1.6.1 What makes a 64-bit processor
• 1.6.2 Intel Xeon with EM64T

AMD's Early Processor Lines, up to the Hammer Family (Families K8

AMD’s early processor lines, up to the Hammer Family (Families K8 - K10.5h)

Dezső Sima, October 2018 (Ver. 1.1)

• 1. Introduction to AMD’s processor families
• 2. AMD’s 32-bit x86 families
• 3. Migration of 32-bit ISAs and microarchitectures to 64-bit
• 4. Overview of AMD’s K8 – K10.5 (Hammer-based) families
• 5. The K8 (Hammer) family
• 6. The K10 Barcelona family
• 7. The K10.5 Shanghai family
• 8. The K10.5 Istanbul family
• 9. The K10.5-based Magny-Cours/Lisbon family
• 10. References

1. Introduction to AMD’s processor families

AMD’s early x86 processor history [1]: AMD’s own processors; second sourced processors.

Evolution of AMD’s early processors [2]

Historical remarks

1) Beyond x86 processors, AMD also designed and marketed two embedded processor families:
• the 2900 family of bipolar, 4-bit slice microprocessors (1975-?) used in a number of processors, such as particular DEC 11 family models, and
• the 29000 family (29K family) of CMOS, 32-bit embedded microcontrollers (1987-95). In late 1995 AMD cancelled their 29K family development and transferred the related design team to the firm’s K5 effort, in order to focus on x86 processors [3].

2) Initially, AMD designed the Am386/486 processors that were clones of Intel’s processors.

Processor Check Utility for 64-Bit Compatibility

VMware Workstation Processor Check Utility for 64-Bit Compatibility

VMware Workstation version 5.5 supports virtual machines with 64-bit guest operating systems, running on host machines with the following processors:

• AMD™ Athlon™ 64, revision D or later
• AMD Opteron™, revision E or later
• AMD Turion™ 64, revision E or later
• AMD Sempron™, 64-bit-capable revision D or later (experimental support)
• Intel™ EM64T VT-capable processors (experimental support)

When you power on a virtual machine with a 64-bit guest operating system, Workstation performs an internal check: if the host CPU is not a supported 64-bit processor, you cannot power on the virtual machine. VMware also provides this standalone processor check utility, which you can use without Workstation to perform the same check and determine whether your CPU is supported for virtual machines with 64-bit guest operating systems.

Note: On hosts with EM64T VT-capable processors, you may not be able to power on a 64-bit guest, even though the processor check utility indicates that the processor is supported for 64-bit guests. VT functionality can be disabled via the BIOS, but the processor check utility cannot read the appropriate model-specific register (MSR) to detect that the VT functionality has been disabled in the BIOS.

Note: In shopping for a processor that is compatible with Workstation 5.5 64-bit guests, you may be unable to determine the revision numbers of a given vendor's offering of AMD Athlon 64, Opteron, Turion 64, or Sempron processors. At this time, the only reliable way to determine whether any of these processors is a revision supported by Workstation 5.5 is by the manufacturing technology (CMOS): any of the AMD Athlon 64, Opteron, Turion 64, or Sempron processors whose manufacturing technology is 90nm SOI (.09 micron SOI) is compatible with Workstation 5.5 64-bit guests.

Support for NUMA Hardware in HelenOS

Charles University in Prague, Faculty of Mathematics and Physics. MASTER THESIS. Vojtěch Horký: Support for NUMA hardware in HelenOS. Department of Distributed and Dependable Systems. Supervisor: Mgr. Martin Děcký. Study Program: Software Systems. 2011.

I would like to thank my supervisor, Martin Děcký, for the countless pieces of advice and the time spent guiding me on the thesis. I am grateful that he introduced HelenOS to me and encouraged me to add basic NUMA support to it. I would also like to thank other developers of HelenOS, namely Jakub Jermář and Jiří Svoboda, for their assistance on the mailing list and for creating HelenOS. I would like to thank my family and my closest friends too, for their patience and continuous support.

I declare that I carried out this master thesis independently, and only with the cited sources, literature and other professional sources. I understand that my work relates to the rights and obligations under the Act No. 121/2000 Coll., the Copyright Act, as amended, in particular the fact that the Charles University in Prague has the right to conclude a license agreement on the use of this work as a school work pursuant to Section 60 paragraph 1 of the Copyright Act. Prague, August 2011, Vojtěch Horký

Title: Support for NUMA hardware in HelenOS. Author: Vojtěch Horký. Department: Faculty of Mathematics and Physics, Charles University. Supervisor: Mgr. Martin Děcký. Supervisor's e-mail: [email protected]ff.cuni.cz. Abstract: The goal of this master thesis is to extend the HelenOS operating system with support for ccNUMA hardware.

A Novel Hard-Soft Processor Affinity Scheduling for Multicore Architecture Using Multiagents

European Journal of Scientific Research ISSN 1450-216X Vol.55 No.3 (2011), pp.419-429 © EuroJournals Publishing, Inc. 2011 http://www.eurojournals.com/ejsr.htm A Novel Hard-Soft Processor Affinity Scheduling for Multicore Architecture using Multiagents G. Muneeswari, Research Scholar, Computer Science and Engineering Department, RMK Engineering College, Anna University-Chennai, Tamilnadu, India, E-mail: [email protected] K. L. Shunmuganathan, Professor & Head, Computer Science and Engineering Department, RMK Engineering College, Anna University-Chennai, Tamilnadu, India, E-mail: [email protected] Abstract: A multicore architecture, otherwise called an SoC, consists of a large number of processors packed together on a single chip and uses hyper-threading technology. This increase in processor cores brings new advancements in simultaneous and parallel computing. Apart from an enormous performance enhancement, the multicore platform adds many challenges to critical task execution on the processor cores, which is a crucial part from the operating system scheduling point of view. In this paper we combine the AMAS theory of multiagent systems with affinity-based processor scheduling, which is best suited for critical task execution on multicore platforms. This hard-soft processor affinity scheduling algorithm promises to minimize the average waiting time of the non-critical tasks in the centralized queue and avoids the context switching of critical tasks. That is, we assign hard affinity to critical tasks and soft affinity to non-critical tasks. The algorithm is applicable to systems that have both critical and non-critical tasks in the ready queue. We modified and simulated the Linux 2.6.11 kernel process scheduler to incorporate this scheduling concept.

Agent Based Load Balancing Scheme Using Affinity Processor Scheduling for Multicore Architectures

WSEAS TRANSACTIONS on COMPUTERS G. Muneeswari, K. L. Shunmuganathan Agent Based Load Balancing Scheme using Affinity Processor Scheduling for Multicore Architectures G. MUNEESWARI, Research Scholar, Department of Computer Science and Engineering, RMK Engineering College, Anna University (Chennai), INDIA [email protected] http://www.rmkec.ac.in Dr. K. L. SHUNMUGANATHAN, Professor & Head, Department of Computer Science and Engineering, RMK Engineering College, Anna University (Chennai), INDIA [email protected] http://www.rmkec.ac.in Abstract: A multicore architecture, otherwise called a CMP, has many processors packed together on a single chip and utilizes hyper-threading technology. Adding a large number of processor cores brings massive advancements in the parallel computing paradigm. The enormous performance enhancement of the multicore platform introduces many challenges for task allocation and load balancing on the processor cores. Altogether it is a crucial part from the operating system scheduling point of view. To make use of this large computing capacity, efficient resource allocation schemes are needed. A multicore scheduler is a resource management component of a multicore operating system that focuses on distributing the load of highly loaded processors to lightly loaded ones such that the overall performance of the system is maximized. We already proposed a hard-soft processor affinity scheduling algorithm that promises to minimize the average waiting time of the non-critical tasks in the centralized queue and avoids the context switching of critical tasks. In this paper we incorporate an agent-based load balancing scheme for the multicore processor using the hard-soft processor affinity scheduling algorithm. Since we use round robin scheduling for non-critical tasks, and because of soft affinity, load balancing is done automatically for non-critical tasks.

AMD Athlon Processor Model 4 Data Sheet

Preliminary Information AMD Athlon™ Processor Model 4 Data Sheet Publication # 23792 Rev: K Issue Date: November 2001 © 2000, 2001 Advanced Micro Devices, Inc. All rights reserved. The contents of this document are provided in connection with Advanced Micro Devices, Inc. (“AMD”) products. AMD makes no representations or warranties with respect to the accuracy or completeness of the contents of this publication and reserves the right to make changes to specifications and product descriptions at any time without notice. No license, whether express, implied, arising by estoppel or otherwise, to any intellectual property rights is granted by this publication. Except as set forth in AMD’s Standard Terms and Conditions of Sale, AMD assumes no liability whatsoever, and disclaims any express or implied warranty, relating to its products including, but not limited to, the implied warranty of merchantability, fitness for a particular purpose, or infringement of any intellectual property right. AMD’s products are not designed, intended, authorized or warranted for use as components in systems intended for surgical implant into the body, or in other applications intended to support or sustain life, or in any other application in which the failure of AMD’s product could create a situation where personal injury, death, or severe property or environmental damage may occur. AMD reserves the right to discontinue or make changes to its products at any Trademarks AMD, the AMD Arrow logo, AMD Athlon, AMD Duron, and combinations thereof, and 3DNow! are trademarks of Advanced Micro Devices, Inc. HyperTransport is a trademark of the HyperTransport Technology Consortium.

AMD Athlon 64 FX Processor Product Data Sheet

AMD Athlon™ 64 FX Processor Product Data Sheet

• Compatible with Existing 32-Bit Code Base
– Including support for SSE, SSE2, SSE3*, MMX™, 3DNow!™ technology and legacy x86 instructions (*SSE3 supported by Rev. E and later processors)
– Runs existing operating systems and drivers
– Local APIC on-chip
• AMD64 Technology
– AMD64 technology instruction set extensions
– 64-bit integer registers, 48-bit virtual addresses, 40-bit physical addresses
– Eight additional 64-bit integer registers (16 total)
– Eight additional 128-bit SSE/SSE2/SSE3 registers (16 total)
• Multi-Core Architecture
– Single-core or dual-core options
– Discrete L1 and L2 cache structures for each core
• 64-Kbyte 2-Way Associative ECC-Protected L1 Data Cache
– Two 64-bit operations per cycle, 3-cycle latency
• 64-Kbyte 2-Way Associative Parity-Protected L1 Instruction Cache
– With advanced branch prediction
• 16-Way Associative ECC-Protected L2 Cache
– Exclusive cache architecture: storage in addition to L1 caches

940-Pin Package Specific Features

• Refer to the AMD Functional Data Sheet, 940-Pin Package, order# 31412, for functional, electrical, and mechanical details of 940-pin package processors.
• Packaging
– 940-pin lidded ceramic micro PGA
– 1.27-mm pin pitch
– 31x31-row pin array
– 40mm x 40mm ceramic substrate
– Ceramic C4 die attach
• Integrated Memory Controller
– Low-latency, high-bandwidth
– 144-bit DDR SDRAM at 100, 133, 166, and 200 MHz
– Supports up to eight registered DIMMs
– ECC checking with double-bit detect and single-bit correct
• Electrical Interfaces
– HyperTransport™ technology: LVDS-like differential, unidirectional
– DDR SDRAM: SSTL_2 per JEDEC specification
– Clock, reset, and test signals also use DDR SDRAM-like electrical specifications.

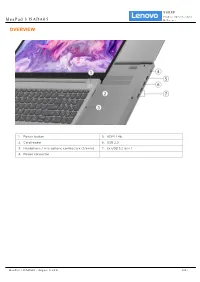

IdeaPad 3 15ADA05 Reference

PSREF Product Specifications IdeaPad 3 15ADA05 Reference

IdeaPad 3 15ADA05 - August 17 2021

OVERVIEW

1. Power button
2. Card reader
3. Headphone / microphone combo jack (3.5mm)
4. Power connector
5. HDMI 1.4b
6. USB 2.0
7. 2x USB 3.2 Gen 1

PERFORMANCE

Processor

Processor Family: AMD 3000, AMD Athlon™, or AMD Ryzen™ 3 / 5 / 7 Processor

| Processor Name | Cores | Threads | Base Frequency | Max Frequency | Cache | Memory Support | Processor Graphics |
|---|---|---|---|---|---|---|---|
| AMD 3020e | 2 | 2 | 1.2GHz | 2.6GHz | 1MB L2 / 4MB L3 | DDR4-2400 | AMD Radeon™ Graphics |
| AMD Athlon Silver 3050U | 2 | 2 | 2.3GHz | 3.2GHz | 1MB L2 / 4MB L3 | DDR4-2400 | AMD Radeon Graphics |
| AMD Athlon Gold 3150U | 2 | 4 | 2.4GHz | 3.3GHz | 1MB L2 / 4MB L3 | DDR4-2400 | AMD Radeon Graphics |
| AMD Ryzen 3 3250U | 2 | 4 | 2.6GHz | 3.5GHz | 1MB L2 / 4MB L3 | DDR4-2400 | AMD Radeon Graphics |
| AMD Ryzen 5 3500U | 4 | 8 | 2.1GHz | 3.7GHz | 2MB L2 / 4MB L3 | DDR4-2400 | AMD Radeon Vega 8 Graphics |
| AMD Ryzen 7 3700U | 4 | 8 | 2.3GHz | 4.0GHz | 2MB L2 / 4MB L3 | DDR4-2400 | AMD Radeon RX Vega 10 Graphics |

Operating System

• Windows® 10 Home 64
• Windows 10 Home in S mode
• Windows 10 Pro 64
• FreeDOS
• No operating system

Graphics

| Graphics | Type | Memory | Key Features |
|---|---|---|---|
| AMD Radeon Graphics | Integrated | Shared | DirectX® 12 |
| AMD Radeon Vega 8 Graphics | Integrated | Shared | DirectX 12 |
| AMD Radeon RX Vega 10 Graphics | Integrated | Shared | DirectX 12 |

Monitor Support: Supports up to 2 independent displays via native display and 1 external monitor; supports external monitor via HDMI® (up to 3840x2160@30Hz)

Chipset: AMD SoC (System on Chip) platform

Memory

Max Memory[1]
• Up to 8GB (8GB SO-DIMM) DDR4-2400 offering (AMD 3020e models)
• Up to 12GB (4GB soldered + 8GB SO-DIMM) DDR4-2400 offering (Athlon or Ryzen models)

Memory Slots
• One DDR4 SO-DIMM slot (AMD 3020e models)
• One memory soldered to systemboard, one DDR4 SO-DIMM slot, dual-channel capable (Athlon or Ryzen models)

Memory Type: DDR4-2400

Notes: 1.