Path Optimization in Free Energy Calculations

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Advances in Theoretical & Computational Physics

ISSN: 2639-0108 Review Article Advances in Theoretical & Computational Physics Cosmology: Preprogrammed Evolution (The problem of Providence) Besud Chu Erdeni Unified Theory Lab, Bayangol district, Ulan-Bator, Mongolia *Corresponding author Besud Chu Erdeni, Unified Theory Lab, Bayangol district, Ulan-Bator, Mongolia. Submitted: 25 March 2021; Accepted: 08 Apr 2021; Published: 18 Apr 2021 Citation: Besud Chu Erdeni (2021) Cosmology: Preprogrammed Evolution (The problem of Providence). Adv Theo Comp Phy 4(2): 113-120. Abstract This is continued from the article Superunification: Pure Mathematics and Theoretical Physics published in this journal and intended to discuss the general logical and philosophical consequences of the universal mathematical machine described by the superunified field theory. At first was mathematical continuum, that is, uncountably infinite set of real numbers. The continuum is self-excited and self-organized into the universal system of mathematical harmony observed by the intelligent beings in the Cosmos as the physical Universe. Consequently, cosmology as a science of evolution in the Universe can be thought as a preprogrammed natural phenomenon beginning with the Big Bang event, or else, the Creation act process. The reader is supposed to be acquainted with the Pythagoras’ (Arithmetization) and Plato’s (Geometrization) concepts. Then, the numeric, Pythagorean, form of 4-dim space-time shall be (1) where time is an algorithmic (both geometric and algebraic) bifurcation of Newton’s absolute space denoted by the golden section (6) With this we can derive even the human gene-chromosome topology. The operator that images the organic growth process from nothing is exp exp e.

Journal of Computational Physics

JOURNAL OF COMPUTATIONAL PHYSICS AUTHOR INFORMATION PACK TABLE OF CONTENTS • Description • Audience • Impact Factor • Abstracting and Indexing • Editorial Board • Guide for Authors ISSN: 0021-9991 DESCRIPTION Journal of Computational Physics has an open access mirror journal Journal of Computational Physics: X which has the same aims and scope, editorial board and peer-review process. To submit to Journal of Computational Physics: X visit https://www.editorialmanager.com/JCPX/default.aspx. The Journal of Computational Physics focuses on the computational aspects of physical problems. JCP encourages original scientific contributions in advanced mathematical and numerical modeling reflecting a combination of concepts, methods and principles which are often interdisciplinary in nature and span several areas of physics, mechanics, applied mathematics, statistics, applied geometry, computer science, chemistry and other scientific disciplines as well: the Journal's editors seek to emphasize methods that cross disciplinary boundaries. The Journal of Computational Physics also publishes short notes of 4 pages or less (including figures, tables, and references but excluding title pages). Letters to the Editor commenting on articles already published in this Journal will also be considered. Neither notes nor letters should have an abstract. Review articles providing a survey of particular fields are particularly encouraged. Full text articles have a recommended length of 35 pages. In order to estimate the page limit, please use our template. Published conference papers are welcome provided the submitted manuscript is a significant enhancement of the conference paper with substantial additions. Reproducibility, that is the ability to reproduce results obtained by others, is a core principle of the scientific method.

Free and Open Source Software for Computational Chemistry Education

Free and Open Source Software for Computational Chemistry Education Susi Lehtola∗,† and Antti J. Karttunen‡ †Molecular Sciences Software Institute, Blacksburg, Virginia 24061, United States ‡Department of Chemistry and Materials Science, Aalto University, Espoo, Finland E-mail: [email protected].fi Abstract Long in the making, computational chemistry for the masses [J. Chem. Educ. 1996, 73, 104] is finally here. We point out the existence of a variety of free and open source software (FOSS) packages for computational chemistry that offer a wide range of functionality all the way from approximate semiempirical calculations with tight-binding density functional theory to sophisticated ab initio wave function methods such as coupled-cluster theory, both for molecular and for solid-state systems. By their very definition, FOSS packages allow usage for whatever purpose by anyone, meaning they can also be used in industrial applications without limitation. Also, FOSS software has no limitations to redistribution in source or binary form, allowing their easy distribution and installation by third parties. Many FOSS scientific software packages are available as part of popular Linux distributions, and other package managers such as pip and conda. Combined with the remarkable increase in the power of personal devices—which rival that of the fastest supercomputers in the world of the 1990s—a decentralized model for teaching computational chemistry is now possible, enabling students to perform reasonable modeling on their own computing devices, in the bring your own device (BYOD) scheme. In addition to the programs’ use for various applications, open access to the programs’ source code also enables comprehensive teaching strategies, as actual algorithms’ implementations can be used in teaching.

Computational Science and Engineering

Computational Science and Engineering, PhD College of Engineering Graduate Coordinator: TBA Email: Phone: Department Chair: Marwan Bikdash Email: [email protected] Phone: 336-334-7437 The PhD in Computational Science and Engineering (CSE) is an interdisciplinary graduate program designed for students who seek to use advanced computational methods to solve large problems in diverse fields ranging from the basic sciences (physics, chemistry, mathematics, etc.) to sociology, biology, engineering, and economics. The mission of Computational Science and Engineering is to graduate professionals who (a) have expertise in developing novel computational methodologies and products, and/or (b) have extended their expertise in specific disciplines (in science, technology, engineering, and socioeconomics) with computational tools. The Ph.D. program is designed for students with graduate and undergraduate degrees in a variety of fields including engineering, chemistry, physics, mathematics, computer science, and economics who will be trained to develop problem-solving methodologies and computational tools for solving challenging problems. Research in Computational Science and Engineering includes: computational system theory, big data and computational statistics, high-performance computing and scientific visualization, multi-scale and multi-physics modeling, computational solid, fluid and nonlinear dynamics, computational geometry, fast and scalable algorithms, computational civil engineering, bioinformatics and computational biology, and computational physics. Additional Admission Requirements Master of Science or Engineering degree in Computational Science and Engineering (CSE) or in science, engineering, business, economics, technology or in a field allied to computational science or computational engineering field. 
GRE scores. Program Outcomes: Students will demonstrate critical thinking and ability in conducting research in engineering, science and mathematics through computational modeling and simulations.

Computational Chemistry

GUEST EDITORS’ INTRODUCTION COMPUTATIONAL CHEMISTRY Computational chemistry has come of age. With significant strides in computer hardware and software over the last few decades, computational chemistry has achieved full partnership with theory and experiment as a tool for understanding and predicting the behavior of a broad range of chemical, physical, and biological phenomena. The Nobel Prize award to John Pople and Walter Kohn in 1998 highlighted the importance of these advances in computational chemistry. With massively parallel computers capable of peak performance of several teraflops already on the scene and with the development of parallel software for efficient exploitation of these high-end computers, we can anticipate that computational chemistry will continue to change the scientific landscape throughout the coming century. The impact of these advances will be broad and encompassing, because chemistry is so central to the myriad of advances we anticipate in areas such as materials design, biological sciences, and chemical manufacturing. Application areas Materials design is very broad in scope. It addresses a diverse range of compositions of matter that can better serve structural and functional needs in all walks of life. Catalytic design is one such example that clearly illustrates the promise and challenges of computational chemistry. Almost all chemical reactions of industrial or biochemical significance are catalytic. Yet, catalysis is still more of an art (at best) or an empirical fact than a design science. But this is sure to change. A recent American Chemical Society Symposium volume on transition state modeling in catalysis illustrates its progress.1 This symposium highlighted a significant development in the level of modeling that researchers are employing as they focus more and more on the 1521-9615/00/$10.00 © 2000 IEEE DONALD G.

COMPUTER PHYSICS COMMUNICATIONS an International Journal and Program Library for Computational Physics

COMPUTER PHYSICS COMMUNICATIONS An International Journal and Program Library for Computational Physics AUTHOR INFORMATION PACK TABLE OF CONTENTS • Description • Audience • Impact Factor • Abstracting and Indexing • Editorial Board • Guide for Authors ISSN: 0010-4655 DESCRIPTION Visit the CPC International Computer Program Library on Mendeley Data. Computer Physics Communications publishes research papers and application software in the broad field of computational physics; current areas of particular interest are reflected by the research interests and expertise of the CPC Editorial Board. The focus of CPC is on contemporary computational methods and techniques and their implementation, the effectiveness of which will normally be evidenced by the author(s) within the context of a substantive problem in physics. Within this setting CPC publishes two types of paper. Computer Programs in Physics (CPiP) These papers describe significant computer programs to be archived in the CPC Program Library which is held in the Mendeley Data repository. The submitted software must be covered by an approved open source licence. Papers and associated computer programs that address a problem of contemporary interest in physics that cannot be solved by current software are particularly encouraged. Computational Physics Papers (CP) These are research papers in, but are not limited to, the following themes across computational physics and related disciplines: mathematical and numerical methods and algorithms; computational models including those associated with the design, control and analysis of experiments; and algebraic computation. Each will normally include software implementation and performance details. The software implementation should, ideally, be available via GitHub, Zenodo or an institutional repository. In addition, research papers on the impact of advanced computer architecture and special purpose computers on computing in the physical sciences and software topics related to, and of importance in, the physical sciences may be considered.

Computational Complexity for Physicists

LIMITS OF COMPUTATION Copyright (c) 2002 Institute of Electrical and Electronics Engineers. Reprinted, with permission, from Computing in Science & Engineering. This material is posted here with permission of the IEEE. Such permission of the IEEE does not in any way imply IEEE endorsement of any of the discussed products or services. Internal or personal use of this material is permitted. However, permission to reprint/republish this material for advertising or promotional purposes or for creating new collective works for resale or redistribution must be obtained from the IEEE by sending a blank email message to [email protected]. By choosing to view this document, you agree to all provisions of the copyright laws protecting it. COMPUTATIONAL COMPLEXITY FOR PHYSICISTS The theory of computational complexity has some interesting links to physics, in particular to quantum computing and statistical mechanics. This article contains an informal introduction to this theory and its links to physics. Compared to the traditionally close relationship between physics and mathematics, an exchange of ideas and methods between physics and computer science barely exists. However, the few interactions that have gone beyond Fortran programming and the quest for faster computers have been successful and have provided surprising insights in both fields. mentation as well as the computer on which the program is running. The theory of computational complexity provides us with a notion of complexity that is largely independent of implementation details and the computer at hand. Its precise definition requires a considerable formalism, however. This is not surprising because it is related to a

Computational Complexity: a Modern Approach

Computational Complexity: A Modern Approach Sanjeev Arora and Boaz Barak Princeton University http://www.cs.princeton.edu/theory/complexity/ [email protected] Not to be reproduced or distributed without the authors’ permission Chapter 10 Quantum Computation “Turning to quantum mechanics.... secret, secret, close the doors! we always have had a great deal of difficulty in understanding the world view that quantum mechanics represents ... It has not yet become obvious to me that there’s no real problem. I cannot define the real problem, therefore I suspect there’s no real problem, but I’m not sure there’s no real problem. So that’s why I like to investigate things.” Richard Feynman, 1964 “The only difference between a probabilistic classical world and the equations of the quantum world is that somehow or other it appears as if the probabilities would have to go negative..” Richard Feynman, in “Simulating physics with computers,” 1982 Quantum computing is a new computational model that may be physically realizable and may provide an exponential advantage over “classical” computational models such as probabilistic and deterministic Turing machines. In this chapter we survey the basic principles of quantum computation and some of the important algorithms in this model. One important reason to study quantum computers is that they pose a serious challenge to the strong Church-Turing thesis (see Section 1.6.3), which stipulates that every physically reasonable computation device can be simulated by a Turing machine with at most polynomial slowdown. As we will see in Section 10.6, there is a polynomial-time algorithm for quantum computers to factor integers, whereas despite much effort, no such algorithm is known for deterministic or probabilistic Turing machines.

COMPUTATIONAL SCIENCE 2017-2018 College of Engineering and Computer Science BACHELOR of SCIENCE Computer Science

COMPUTATIONAL SCIENCE 2017-2018 College of Engineering and Computer Science BACHELOR OF SCIENCE Computer Science *The Computational Science program offers students the opportunity to acquire knowledge in computing integrated with knowledge in one of the following areas of study: (a) bioinformatics, (b) computational physics, (c) computational chemistry, (d) computational mathematics, (e) environmental science informatics, (f) health informatics, (g) digital forensics and cyber security, (h) business informatics, (i) biomedical informatics, (j) computational engineering physics, and (k) computational engineering technology. Graduates of this program major in computational science with a concentration in one of the above areas of study. (Amended for clarification 12/5/2018). *Previous UTRGV language: Computational science graduates develop emphasis in two major fields, one in computer science and one in another field, in order to integrate an interdisciplinary computing degree applied to a number of emerging areas of study such as biomedical-informatics, digital forensics, computational chemistry, and computational physics, to mention a few examples. Graduates of this program are prepared to enter the workforce or to continue graduate studies either in computer science or in the second major. A – GENERAL EDUCATION CORE – 42 HOURS Students must fulfill the General Education Core requirements. The courses listed below satisfy both degree requirements and General Education core requirements. Required 020 - Mathematics – 3 hours For all

Computational Problem Solving in University Physics Education Students’ Beliefs, Knowledge, and Motivation

Computational problem solving in university physics education Students’ beliefs, knowledge, and motivation Madelen Bodin Department of Physics Umeå 2012 This work is protected by the Swedish Copyright Legislation (Act 1960:729) ISBN: 978-91-7459-398-3 ISSN: 1652-5051 Cover: Madelen Bodin Electronic version available at http://umu.diva-portal.org/ Printed by: Print & Media, Umeå University Umeå, Sweden, 2012 To my family Thanks It has been an exciting journey during these years as a PhD student in physics education research. I started this journey as a physicist and I am grateful that I've had the privilege to gain insight into the fascinating field of how, why, and when people learn. There are many people that have been involved during this journey and contributed with support, encouragements, criticism, laughs, inspiration, and love. First I would like to thank Mikael Winberg who encouraged me to apply as a PhD student and who later became my supervisor. You have been invaluable as a research partner and constantly given me constructive comments on my work. Thanks also to Sune Pettersson and Sylvia Benkert who introduced me to this field of research. Many thanks to Jonas Larsson, Martin Servin, and Patrik Norqvist who let me borrow their students and also have contributed with valuable ideas and comments. Special thanks to the students who shared their learning experiences during my studies. It has been a privilege to be a member of the National Graduate School in Science and Technology Education (FontD). Thanks for providing courses and possibilities for networking with research colleagues from all over the world.

Teaching Computational Physics

Teaching Computational Physics by Steven E. Koonin MODERN PHYSICS RESEARCH is concerned increasingly with complex systems composed of many interacting components - the many atoms making up a solid, the many stars in a galaxy, or the many values of a field in space-time describing an elementary particle. In most of these cases, although we might know the simple general laws governing the interactions between the components, it is difficult to predict or understand qualitatively new phenomena that can arise solely from the complexity of the problem. General insights in this regard are difficult, and the analytical pencil-and-paper approach that has served physics so well in the past quickly reaches its limits. Numerical simulations are therefore essential to further understanding, and computers and computing play a central role in much of modern research. Using a computer to model physical systems is, at its best, more art than science. The successful computational physicist is not a blind number-cruncher, but rather exploits the numerical power of the computer through a mix of numerical analysis, analytical models, and programming to solve otherwise intractable problems. It is a skill that can be acquired and refined - knowing how to set up the simulation, what numerical methods to employ, how to implement them efficiently, when to trust the accuracy of the results. Despite its importance, computational physics has largely been neglected in the standard university physics curriculum. In part, this is because it requires balanced integration of three commonly disjoint disciplines: phys Caltech in the winter of 1983.

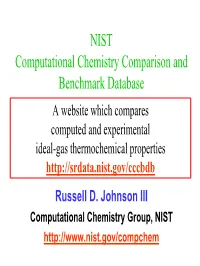

NIST Computational Chemistry Comparison and Benchmark Database

NIST Computational Chemistry Comparison and Benchmark Database A website which compares computed and experimental ideal-gas thermochemical properties http://srdata.nist.gov/cccbdb Russell D. Johnson III Computational Chemistry Group, NIST http://www.nist.gov/compchem
How good is that ab initio calculation? For predicting enthalpies. For predicting geometries. For predicting vibrational frequencies. For this molecule. For these molecules.
CCCBDB Contents • 680 molecules • Enthalpies of formation, Entropies, Geometries, Vibrational frequencies • Experimental values • Computed values • Comparisons • Auxiliary information
Properties • Energetics – Enthalpies of formation – Atomization enthalpies – Reaction enthalpies – Barriers to Internal Rotation • Entropies – Heat Capacities – Integrated Heat Capacities
More Properties • Geometries – Bond lengths and angles – Rotational Constants – Moments of Inertia • Vibrational Frequencies – Intensities – Zero point energies
Even More Properties • Electrostatics – Dipole Moments – Quadrupole Moments – Polarizabilities – Charges • Mulliken • ESP • ChelpG
Molecules in CCCBDB • Small, gas-phase • Mostly < 7 heavy atoms - hexane • Mostly no atoms with atomic number >17 (chlorine). A dozen Br-containing molecules. • 90 diatomics, 574 polyatomics • 469 organic molecules, – 125 hydrocarbons – 145 CHO species – 16 amides
Experimental