Exploring Slider vs. Categorical Response Formats in Web-Based Surveys

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

-

Notable Alphas Fraternity Mission Statement

ALPHA PHI ALPHA NOTABLE ALPHAS FRATERNITY MISSION STATEMENT ALPHA PHI ALPHA FRATERNITY DEVELOPS LEADERS, PROMOTES BROTHERHOOD AND ACADEMIC EXCELLENCE, WHILE PROVIDING SERVICE AND ADVOCACY FOR OUR COMMUNITIES. FRATERNITY VISION STATEMENT The objectives of this Fraternity shall be: to stimulate the ambition of its members; to prepare them for the greatest usefulness in the causes of humanity, freedom, and dignity of the individual; to encourage the highest and noblest form of manhood; and to aid down-trodden humanity in its efforts to achieve higher social, economic and intellectual status. The first two objectives- (1) to stimulate the ambition of its members and (2) to prepare them for the greatest usefulness in the cause of humanity, freedom, and dignity of the individual-serve as the basis for the establishment of Alpha University. Table Of Contents Table of Contents THE JEWELS . .5 ACADEMIA/EDUCATORS . .6 PROFESSORS & RESEARCHERS. .8 RHODES SCHOLARS . .9 ENTERTAINMENT . 11 MUSIC . 11 FILM, TELEVISION, & THEATER . 12 GOVERNMENT/LAW/PUBLIC POLICY . 13 VICE PRESIDENTS/SUPREME COURT . 13 CABINET & CABINET LEVEL RANKS . 13 MEMBERS OF CONGRESS . 14 GOVERNORS & LT. GOVERNORS . 16 AMBASSADORS . 16 MAYORS . 17 JUDGES/LAWYERS . 19 U.S. POLITICAL & LEGAL FIGURES . 20 OFFICIALS OUTSIDE THE U.S. 21 JOURNALISM/MEDIA . 21 LITERATURE . .22 MILITARY SERVICE . 23 RELIGION . .23 SCIENCE . .24 SERVICE/SOCIAL REFORM . 25 SPORTS . .27 OLYMPICS . .27 BASKETBALL . .28 AMERICAN FOOTBALL . 29 OTHER ATHLETICS . 32 OTHER ALPHAS . .32 NOTABLE ALPHAS 3 4 ALPHA PHI ALPHA ADVISOR HANDBOOK THE FOUNDERS THE SEVEN JEWELS NAME CHAPTER NOTABILITY THE JEWELS Co-founder of Alpha Phi Alpha Fraternity; 6th Henry A. Callis Alpha General President of Alpha Phi Alpha Co-founder of Alpha Phi Alpha Fraternity; Charles H. -

Carbonite Operation Manual

Carbonite & Carbonite eXtreme OPERATION MANUAL v10.0 www.rossvideo.com times of company or customer crisis - do what you Thank You For Choosing know in your heart is right. (You may rent helicopters if necessary.) Ross You've made a great choice. We expect you will be very happy with your purchase of Ross Technology. Our mission is to: 1. Provide a Superior Customer Experience • offer the best product quality and support 2. Make Cool Practical Technology • develop great products that customers love Ross has become well known for the Ross Video Code of Ethics. It guides our interactions and empowers our employees. I hope you enjoy reading it below. If anything at all with your Ross experience does not live up to your expectations be sure to reach out to us at [email protected]. David Ross CEO, Ross Video [email protected] Ross Video Code of Ethics Any company is the sum total of the people that make things happen. At Ross, our employees are a special group. Our employees truly care about doing a great job and delivering a high quality customer experience every day. This code of ethics hangs on the wall of all Ross Video locations to guide our behavior: 1. We will always act in our customers' best interest. 2. We will do our best to understand our customers' requirements. 3. We will not ship crap. 4. We will be great to work with. 5. We will do something extra for our customers, as an apology, when something big goes wrong and it's our fault. -

06 7-26-11 TV Guide.Indd

Page 6 THE NORTON TELEGRAM Tuesday, July 26, 2011 Monday Evening August 1, 2011 7:00 7:30 8:00 8:30 9:00 9:30 10:00 10:30 11:00 11:30 KHGI/ABC The Bachelorette The Bachelorette Local Nightline Jimmy Kimmel Live WEEK OF FRIDAY , JULY 29 THROUGH THURSDAY , AUG . 4 KBSH/CBS How I Met Mike Two Men Mike Hawaii Five-0 Local Late Show Letterman Late KSNK/NBC America's Got Talent Law Order: CI Harry's Law Local Tonight Show w/Leno Late FOX Hell's Kitchen MasterChef Local Cable Channels A&E Hoarders Hoarders Intervention Intervention Hoarders AMC The Godfather The Godfather ANIM I Shouldn't Be Alive I Shouldn't Be Alive Hostage in Paradise I Shouldn't Be Alive I Shouldn't Be Alive CNN In the Arena Piers Morgan Tonight Anderson Cooper 360 To Be Announced Piers Morgan Tonight DISC Jaws of the Pacific Rogue Sharks Summer of the Shark Rogue Sharks Summer of the Shark DISN Good Luck Shake It Bolt Phineas Phineas Wizards Wizards E! Sex-City Sex-City Ice-Coco Ice-Coco True Hollywood Story Chelsea E! News Chelsea Norton TV ESPN MLB Baseball Baseball Tonight SportsCenter Baseball NFL Live ESPN2 SportsNation Soccer World, Poker World, Poker FAM Secret-Teen Switched at Birth Secret-Teen The 700 Club My Wife My Wife FX Earth Stood Earth Stood HGTV House Hunters Design Star High Low Hunters House House Design Star HIST Pawn Pawn American Pickers Pawn Pawn Top Gear Pawn Pawn LIFE Craigslist Killer The Protector The Protector Chris How I Met Listings: MTV True Life MTV Special Teen Wolf Teen Wolf Awkward. -

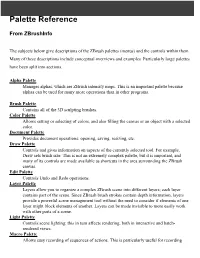

Brush Palettes (Menus) and the Controls Within Them

Palette Reference From ZBrushInfo The subjects below give descriptions of the ZBrush palettes (menus) and the controls within them. Many of these descriptions include conceptual overviews and examples. Particularly large palettes have been split into sections. Alpha Palette Manages alphas, which are ZBrush intensity maps. This is an important palette because alphas can be used for many more operations than in other programs. Brush Palette Contains all of the 3D sculpting brushes. Color Palette Allows setting or selecting of colors, and also filling the canvas or an object with a selected color. Document Palette Provides document operations; opening, saving, resizing, etc. Draw Palette Controls and gives information on aspects of the currently selected tool. For example, Draw sets brush size. This is not an extremely complex palette, but it is important, and many of its controls are made available as shortcuts in the area surrounding the ZBrush canvas. Edit Palette Controls Undo and Redo operations. Layer Palette Layers allow you to organize a complex ZBrush scene into different layers; each layer contains part of the scene. Since ZBrush brush strokes contain depth information, layers provide a powerful scene management tool without the need to consider if elements of one layer might block elements of another. Layers can be made invisible to more easily work with other parts of a scene. Light Palette Controls scene lighting; this in turn affects rendering, both in interactive and batch- rendered views. Macro Palette Allows easy recording of sequences of actions. This is particularly useful for recording actions that make a number of settings that define, for example, a favorite brush combination. -

Read the Balticon 46 Pocket Program

Note to Parents Anime Parents, please be advised that there are a lim- ited number of movies in the Anime Progam which are appropriate for children under 12. Please take note of the ratings listed next to the movies. A document explaining the ratings will be posted on the Anime Room Door. Parents are strongly encouraged to take these ratings as a guide for what might or might not be inappropriate for their children to watch. In general, children under 14 should not be in the Anime Room room between 10 PM and 5 AM, but everything in the middle of the day should be ok for ages 14 and up. Children under 13 should be accompanied by a parent or guard- ian. We recommend that parents of teens sit and view a portion of an “MA” rated movie to decide if it is something they want their teen to view. Film Festival All of the entries being screened in the film festival up to 9:30 pm are what the selection committee estimated would be considered “G” or “PG” if they were rated. Entries screening after 9:40 pm range from estimated “G” to “R” rated, thus some movies screening after 9:40 pm may not be appropriate for children under 17 who are not accompanied by a par- ent or guardian. LARP Join the returning players in the 3rd year of the game H e r o e s & V i l l a i n s Register in the Valley Foyer Friday 5 to 9 pm and Saturday, 9 to 11 am. -

WHERE DO ALPHAS COME FROM?: a MEASURE of the VALUE of ACTIVE INVESTMENT MANAGEMENT∗ Andrew W

JOURNAL OF INVESTMENT MANAGEMENT, Vol. 6, No. 2, (2008), pp. 1–29 © JOIM 2008 JOIM www.joim.com WHERE DO ALPHAS COME FROM?: A MEASURE OF THE VALUE OF ACTIVE INVESTMENT MANAGEMENT∗ Andrew W. Lo† The value of active investment management is traditionally measured by alpha, beta, volatility, tracking error, and the Sharpe and information ratios. These are essentially static characteristics of the marginal distributions of returns at a single point in time, and do not incorporate dynamic aspects of a manager’s investment process. In this paper, I propose a measure of the value of active investment management that captures both static and dynamic contributions of a portfolio manager’s decisions. The measure is based on a decomposition of a portfolio’s expected return into two distinct components: a static weighted-average of the individual securities’ expected returns, and the sum of covariances between returns and portfolio weights. The former component measures the portion of the manager’s expected return due to static investments in the underlying securities, while the latter component captures the forecast power implicit in the manager’s dynamic investment choices. This measure can be computed for long-only investments, long/short portfolios, and asset allocation rules, and is particularly relevant for hedge-fund strategies where both components are significant contributors to their expected returns, but only one should garner the high fees that hedge funds typically charge. Several analytical and empirical examples are provided to illustrate the practical relevance of this decomposition. 
1 Introduction ∗I thank Nicholas Chan, John Cox, Arnout Eikeboom, Lisa Goldberg, Mark Grinblatt, Stephanie Hogue, Rajnish Kamat, Philippe Luedi, Sara Salem, Nils Tuchschmid, an anonymous With the growing popularity of hedge funds and referee, and participants at the Le Club B 2006 Conference other absolute-return investment strategies, there and the JOIM 2007 Spring Conference for their helpful com- is a widening gap between the performance met- ments and discussion. -

Sandspur, Vol. 45 No. 03, October 18, 1939

University of Central Florida STARS The Rollins Sandspur Newspapers and Weeklies of Central Florida 10-18-1939 Sandspur, Vol. 45 No. 03, October 18, 1939 Rollins College Find similar works at: https://stars.library.ucf.edu/cfm-sandspur University of Central Florida Libraries http://library.ucf.edu This Newspaper is brought to you for free and open access by the Newspapers and Weeklies of Central Florida at STARS. It has been accepted for inclusion in The Rollins Sandspur by an authorized administrator of STARS. For more information, please contact [email protected]. STARS Citation Rollins College, "Sandspur, Vol. 45 No. 03, October 18, 1939" (1939). The Rollins Sandspur. 561. https://stars.library.ucf.edu/cfm-sandspur/561 sfifr Florida's Support Oldest College Rollins Sandspur Newspaper Eollins andBpur Advertisers VOLUME 45 (Z-107) (Weekly Student Newspaper) WINTER PARK, FLORIDA, WEDNESDAY, OCTOBER 18, 1939 (Complete Campus Coverage) CRIPPLED TARS LASH DECKS FOR HURRICANES Walter Trampler, Violinist, 78NewStudents Will Present Recital, October 24 Academic Honor Ground Instruction Football Team Accept Bids to List Announced Courses Offered Is Heading For Rollins Students Social Groups At Convocation Hurricane Game Classes Given For Those Unable To Take Regular "Kappa Alpha and Pi Phi Chi Omegas Win Cup Third Aeronautics Training Time; Ten Elected to Fresh Tars' Backfield Is Riddled By Receive Most Pledges; Al- Injuries; Four Backs Are < pha Phi is Third man Phi Society The Orlando Vocational School, Definitely Off List which has in operation Day trade 44 Women and 34 Men Dr. Holt Speaks On classes for aviation Mechanic European Affairs Training* with over $50,000 worth Rollins Will Give *J 0 i n Organizations of airplanes, engines, and ground Miami Strong Battle school equipment, has opened a The opening convocation of the special Ground School course. -

Terra Nova, an Experiment in Creating Cult Televison for a Mass Audience

Syracuse University SURFACE S.I. Newhouse School of Public Media Studies - Theses Communications 8-2012 Terra Nova, An Experiment in Creating Cult Televison for a Mass Audience Laura Osur Syracuse University Follow this and additional works at: https://surface.syr.edu/ms_thesis Part of the Film and Media Studies Commons, and the Mass Communication Commons Recommended Citation Osur, Laura, "Terra Nova, An Experiment in Creating Cult Televison for a Mass Audience" (2012). Media Studies - Theses. 5. https://surface.syr.edu/ms_thesis/5 This Thesis is brought to you for free and open access by the S.I. Newhouse School of Public Communications at SURFACE. It has been accepted for inclusion in Media Studies - Theses by an authorized administrator of SURFACE. For more information, please contact [email protected]. Abstract When it aired in Fall 2011 on Fox, Terra Nova was an experiment in creating a cult television program that appealed to a mass audience. This thesis is a case study of that experiment. I conclude that the show failed because of its attempts to maintain the sophistication, complexity and innovative nature of the cult genre while simultaneously employing an overly simplistic narrative structure that resembles that of mass audience programming. Terra Nova was unique in its transmedia approach to marketing and storytelling, its advanced special effects, and its dystopian speculative fiction premise. Terra Nova’s narrative, on the other hand, presented a nostalgically simple moralistic landscape that upheld old-fashioned ideologies and felt oddly retro to the modern SF TV audience. Terra Nova’s failure suggests that a cult show made for this type of broad audience is impossible. -

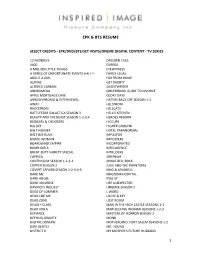

Epk & Bts Resume 1 Select Credits

EPK & BTS RESUME SELECT CREDITS - EPK/DVD/BTS/SET VISITS/ONLINE DIGITAL CONTENT - TV SERIES 12 MONKEYS DRESDEN FILES 4400 EUREKA A MILLION LITTLE THINGS EYEWITNESS A SERIES OF UNFORTUNATE EVENTS SSN 1-2 FAIRLY LEGAL ABOUT A GIRL FAR FROM HOME ALPHAS GET SHORTY ALTERED CARBON GHOSTWRITER ANDROMEDA GIRLFRIENDS’ GUIDE TO DIVORCE APPLE MORTGAGE CAKE GLORY DAYS ARROW (PROMO & INTERVIEWS) HATERS BACK OFF SEASON 1-2 AWAY HELSTROM BACKSTROM HELLCATS BATTLESTAR GALACTICA SEASON 3 HELL’S KITCHEN BEAUTY AND THE BEAST SEASON 1-2-3-4 HEROES REBORN BEGGARS & CHOOSERS HICCUPS BIG SKY HIGHER GROUND BIG THUNDER HOTEL PARANORMAL BIG TIME RUSH IMPASTOR BIONIC WOMAN IMPOSTERS BOARDWALK EMPIRE INCORPORATED BOMB GIRLS INTELLIGENCE BRENT BUTT VARIETY SPECIAL INTRUDERS CAPRICA JEREMIAH CONTINUUM SEASON 1-2-3-4 JINGLE BELL ROCK COPPER SEASON 2 JULIE AND THE PHANTOMS COVERT AFFAIRS SEASON 1-2-3-4-5 KING & MAXWELL DARE ME KINGDOM HOSPITAL DARK ANGEL KYLE XY DARK UNIVERSE LIFE UNEXPECTED DAVINCI’S INQUEST LINGERIE SEASON 2 DEAD OF SUMMER L WORD DEAD LIKE ME LOCKE & KEY DEAD ZONE LOST ROOM DEADLY CLASS MAN IN THE HIGH CASTLE SEASONS 1-2 DEAR VIOLA MAN SEEKING WOMAN SEASONS 1-2-3 DEFIANCE MASTERS OF HORROR SEASON 2 DEFYING GRAVITY MONK DIGITAL DOMAIN MOTHERLAND: FORT SALEM SEASONS 1-2 DIRK GENTLY MR. YOUNG DISTRICT 9 MY MOTHER’S FUTURE HUSBAND 1 EPK & BTS RESUME MYSTERIOUS WAYS SUPERNATURAL ONCE UPON A TIME SWEET SOUL BURLESQUE ORPHAN BLACK (Promo) SYFY UK FLASH GORDON OUTER LIMITS TALKING TO HOLLYWOOD PLAYGROUND THE 100 SEASON 1-2-3-4-5-6 PRIMEVAL THE ART OF MORE -

Noafhugopacket2020.Pdf

Microreview [video game]: Control by Remedy Entertainment (developer) Table of 49 Essays 51 The Community of Apocalypse: On N. K. Jemisin’s The Fifth Season 52 Contents This is Not a Review of The Joker 56 Battlestar Galactica as a Human Rights Narrative 58 Introduction 3 How to End a Game of Thrones 62 George R.R. Martin is Still Not Your Bitch, and other stories 64 Fiction Reviews 5 Microreview [book]: Gideon the Ninth by Tamsyn Muir 6 Conversations 66 Microreview [book]: In an Absent Dream, by Seanan McGuire 8 Adri and Joe Talk About Books: 2018 Locus Recommended Reading List Microreview [book]: Empress of Forever, by Max Gladstone 10 67 Microreview [book]: Infinite Detail by Tim Maughan 12 Review Roundtable: Vigilance by Robert Jackson Bennett 71 Microreview [Book]: The Outcast Hours edited by Mahvesh Murad & Jared Time Capsule: SF - The Year’s Greatest Science Fiction and Fantasy (1956) Shurin 14 77 Microreview [book]: The Deep, by Rivers Solomon 15 Review Roundtable: Avengers: Endgame 82 Microreview [book]: Terms of Enlistment by Marko Kloos 17 LET’S FRIGHTEN CHILDREN! Bonus Conversation Edition 87 The Hugo Initiative 19 The Initiative (an excerpt) 20 The Hugo Initiative: Doomsday Book (1993, Best Novel) 21 The Hugo Initiative: Double Star (1956, Best Novel) 23 The Hugo Initiative: Girl Genius (2009, Best Graphic Story) 25 The Hugo Initiative: Dune (1966, Best Novel) 26 The Hugo Initiative: Blogtable (1968, Best Short Story) 28 Features 33 Reading Deryni: The Bastard Prince 34 LET’S FRIGHTEN CHILDREN! The Mainstream 37 Mondays on Mandalore: A New New Hope 39 Questing in Shorts: June 2019 41 Introducing Watchmen Wednesdays 44 Thursday Morning Superhero 46 WE RANK ‘EM: Villagers from Untitled Goose Game (House House) 47 could say anything on Twitter without referenc- this weird, beautiful little website happen. -

Epk & Bts Resume 1 Select Credits

EPK & BTS RESUME SELECT CREDITS - EPK/DVD/BTS/SET VISITS/ONLINE DIGITAL CONTENT - TV SERIES 12 MONKEYS FAR FROM HOME 4400 GIRLFRIENDS’ GUIDE TO DIVORCE A MILLION LITTLE THINGS GLORY DAYS A SERIES OF UNFORTUNATE EVENTS SSN 1-2 HATERS BACK OFF SEASON 1-2 ABOUT A GIRL HELLCATS ALPHAS HELL’S KITCHEN ANDROMEDA HEROES REBORN APPLE MORTGAGE CAKE HICCUPS ARROW (PROMO & INTERVIEWS) HIGHER GROUND BACKSTROM IMPASTOR BATTLESTAR GALACTICA SEASON 3 IMPOSTERS BEAUTY AND THE BEAST SEASON 1-2-3-4 INCORPORATED BEGGARS & CHOOSERS INTELLIGENCE BIG THUNDER INTRUDERS BIG TIME RUSH JEREMIAH BIONIC WOMAN JINGLE BELL ROCK BOARDWALK EMPIRE KING & MAXWELL BOMB GIRLS KINGDOM HOSPITAL BRENT BUTT VARIETY SPECIAL KYLE XY CAPRICA LIFE UNEXPECTED CONTINUUM SEASON 1-2-3-4 LINGERIE SEASON 2 COPPER SEASON 2 L WORD COVERT AFFAIRS SEASON 1-2-3-4-5 LOST ROOM DARE ME MAN IN THE HIGH CASTLE SEASON 1-2 DARK ANGEL MAN SEEKING WOMAN SEASON 1-2-3 DARK UNIVERSE MASTERS OF HORROR SEASON 2 DAVINCI’S INQUEST MONK DEAD OF SUMMER MR. YOUNG DEAD LIKE ME MY MOTHER’S FUTURE HUSBAND DEAD ZONE MYSTERIOUS WAYS DEAR VIOLA ONCE UPON A TIME DEFIANCE ORPHAN BLACK (Promo) DEFYING GRAVITY OUTER LIMITS DIGITAL DOMAIN PLAYGROUND DIRK GENTLY PRIMEVAL DISTRICT 9 PRISON BREAK: SEQUEL DRESDEN FILES PRIVATE EYES EUREKA PSYCH EYEWITNESS PROJECT RUNWAY CANADA FAIRLY LEGAL PSYCH SEASON 5-6 1 EPK & BTS RESUME REAL HOUSEWIVES OF VANCOUVER TALKING TO HOLLYWOOD REIGN SEASON 1 THE 100 SEASON 1-2-3-4-5-6 RIESE THE ART OF MORE SEASON 2 RIVERDALE THE BOYS ROBSON ARMS THE EXPANSE SEASON 1-2-3 ROMEO THE FLASH (PROMO -

Professional Resume

JACKIE ALEXANDER MOULSON PRODUCTION COORDINATOR Cell: 416.994.1805 Res: 905.425.1750 Email: [email protected] HOT ZONE Mini Series National Geographic/Fox 21 Television Producer: Lena Cordina Aug. – Dec. 2018 PM: Chris Shaw NO SLEEP ‘TIL CHRISTMAS TV Movie ABC Freeform Producer: Mary Pantelidis June – Aug. 2018 PM: Jeffery Pong TAKEN 2 TV Series Europacorp/NBC Universal Producer: Lena Cordina Season 2 PM: Chris Shaw June 2017 – March 2018 KILLJOYS 3 TV Series Temple Street/Boat Rocker Producer: Lena Cordina Season 3 PM: Chris Shaw Dec. 2016 – May 2017 AMERICAN GODS TV Series Fremantle Media/Starz Co-Exec Producer: David Coatsworth Season 1 PM: Lisa Kussner Nov. 2015 – Dec. 2016 INCORPORATED TV Pilot CBS/SyFy/Pearl StreetFilms Co-Exec Producer: Frank Siracusa July – Sept. 2015 Whizbang/Take 5 Co-Exec Producer: John Weber Line Producer/PM: Shauna Jamison DEFIANCE 3 TV Series NBC Universal/SyFy Producer: Mark Winemaker Season 3 PM: Shauna Jamison Jan. – July 2015 MAN SEEKING WOMAN TV Series FX Network/Broadway Video Producer: Hartley Gorenstein Season 1 PM: Stefan Steen Sept. – Dec. 2014 REGRESSION Feature First Generation Films/Mod Executive Producer: Alex Lalonde Feb. – July 2014 Producciones Executive Producer: Christina Piovesan Line Producer: Derek Rappaport DEFIANCE 2 TV Series NBC Universal/SyFy Producer: Mark Winemaker Season 2 PM: Shauna Jamison July 2013 – Jan. 2014 COPPER 2 TV Series Cineflix/BBC America Producer: Michael Frislev Season 2 PM: Shauna Jamison Dec. 2012 – July 2013 ALPHAS I & 2 TV Series NBC Universal/SyFy Producer: Kevin Lafferty Seasons 1 & 2 PM: Victoria Harding S2 Feb. – Sept. 2012 S1 March – Sept.