Evolving a Bridge Bidding System Jason R. Woolever

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


Slam Bidding Lesson

Slam Bidding and Modified Scroll Bids, by Neil H. Timm. In this Bridge Bit, I explore more fully slam bidding techniques, some old and some perhaps new. To reach a small slam, the partnership should have roughly thirty-three Bergen points. In addition to a trump fit and count, slams require controls (aces, kings, voids, and singletons). The more controls between the partners, the easier the slam. To evaluate whether the partnership has the required controls, one uses cuebids, perhaps with the 5NT trump-ask bid (Grand Slam Force), and Blackwood conventions. Blackwood conventions reveal how many aces and kings partner holds, while cuebids or control-showing bids reveal where they reside. To make a slam, one usually requires first-round control in three suits and second-round control in the fourth suit. It is possible to make a slam missing two aces, provided one missing ace is opposite a void and the second missing ace is replaced by or is opposite a second-round control (a king or a singleton). When looking for a possible slam, one often asks the following questions.
1. What cards should my partner have to be able to make a slam?
2. How may I obtain the required information?
3. Are there any bidding techniques or conventions that I can use to obtain the required information?
4. If my partner does not have the required cards for a slam, can I stop short of slam, and if not, is the risk of going down worth it?
We shall review techniques to help the partnership find the required information for making a slam! However, with some hands one needs only to count points to reach a slam.

Things You Might Like to Know About Duplicate Bridge

♠♥♦♣ THINGS YOU MIGHT LIKE TO KNOW ABOUT DUPLICATE BRIDGE. Prepared by MayHem. Published by the UNIT 241 Board of Directors ♠♥♦♣
Welcome to Duplicate Bridge and the ACBL. This booklet has been designed to serve as a reference tool for miscellaneous information about duplicate bridge and its governing organization, the ACBL. It is intended for the newer or less-than-seasoned duplicate bridge players. Most of the things that follow, while not perfectly obvious to new players, are old hat to experienced tournament players.
Table of Contents
Part 1. Expected In-behavior (or things you need to know)
Part 2. Alerts and Announcements (learn to live with them....we have!)
Part 3. Types of Regular Events
a. Stratified Games (Pairs and Teams)
b. IMP Pairs (Pairs)
c. Bracketed KO's (Teams)
d. Swiss Teams and BAM Teams (Teams)
e. Continuous Pairs (Side Games)
f. Strategy: IMPs vs Matchpoints
Part 4. Special ACBL-Wide Events (they cost more!)
Part 5. Glossary of Terms (from the ACBL website)
Part 6. FAQ (with answers hopefully)
Copyright © 2004 MayHem
Part 1. Expected In-Behavior
Just as all kinds of competitive-type endeavors have their expected in-behavior, so does duplicate bridge. One important thing to keep in mind is that this is a competitive adventure.....as opposed to the social outing that you may be used to at your rubber bridge games. Now that is not to say that you can't be sociable at the duplicate table. Of course you can.....and should.....just don't carry it to extreme by talking during the auction or play.

The Eclectic Club

The Eclectic Club
Contents
Part One. The Structure of the Opening Bids
Part Two. Responder's First Bid: The Opening Bid of 1D; The Opening Bid of 1H; The Opening Bid of 1S; The Opening Bid of 1NT; Responding in a Minor; 1NT is Doubled; The Rebid of 1NT; The Opening Bid of 2C; The Opening Bid of 2D; The Opening Bid of 2H/2S; The Opening Bid of 2NT
Part Three. Splinters: Slam Splinters; The Residual Point Count; The Gap Between; 1S 3H
Part Four. Transfers and Relays: Let the Weak Hand Choose Trumps; The Competitive Zone; Bidding a Passed Hand; Transfers in Response to 1H and 1S; Transfer Response to 2C; The 5-3 Major Fit; The Cost of Transfers; Responder Makes Two Bids; Responder has Hearts; The Transfer to Partner's Suit; The Shape Ask
Part Five. The Control Ask: Florentine Blackwood; Blackwood with a Minor Suit Agreed
Part Six. Strong Hands: The Opening Bid of 1C; Strong Balanced Hands; Strong Unbalanced Hands; Strong Two Suiters; The Golden Negatives; Special Positives; Opponents Bid over Our 1C; R.H.O Bids; Our Defence to Their 1C
Part Seven. More Bidding Techniques: Canape in the Majors; Sputnik with a One Club System
Appendix. The Variable Forcing Pass: A Voyage of Discovery; Our Version of V.F.P.

Bridge Glossary

Bridge Glossary
Above the line: In rubber bridge, points recorded above a horizontal line on the score-pad. These are extra points, beyond those for tricks bid and made, awarded for holding honour cards in trumps, bonuses for scoring game or slam, for winning a rubber, for overtricks on the declaring side and for under-tricks on the defending side, and for fulfilling doubled or redoubled contracts.
ACOL/Acol: A bidding system commonly played in the UK.
Active: An approach to defending a hand that emphasizes quickly setting up winners and taking tricks. See Passive.
Advance cue bid: The cue bid of a first round control that occurs before a partnership has agreed on a suit.
Advance sacrifice: A sacrifice bid made before the opponents have had an opportunity to determine their optimum contract. For example: 1♦ - 1♠ - Dbl - 5♠.
Adverse: When you are vulnerable and opponents non-vulnerable. Also called "unfavourable vulnerability."
Agreement: An understanding between partners as to the meaning of a particular bid or defensive play.
Alert: A method of informing the opponents that partner's bid carries a meaning that they might not expect; alerts are regulated by sponsoring organizations such as EBU, and by individual clubs or organisers of events. Any method of alerting may be authorised, including saying "Alert", displaying an Alert card from a bidding box or 'knocking' on the table.
Announcement: An explanatory statement made by the partner of the player who has just made a bid that is based on a partnership understanding. The purpose of an announcement is similar to that of an Alert.

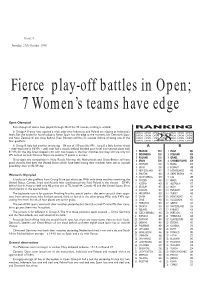

Fierce Play-Off Battles in Open; 7 Women's Teams Have Edge

Issue: 8. Sunday, 27th October 1996. Fierce play-off battles in Open; 7 Women's teams have edge
Open Olympiad
Even though all teams have played through 28 of the 35 rounds, nothing is settled. In Group A France have opened a wide edge over Indonesia, and Poland are nipping at Indonesia's heels. But the battle for fourth place is fierce. Spain has the edge at the moment, but Denmark, Japan and New Zealand all are close behind. Even Pakistan still has an outside chance of being one of the four qualifiers.
In Group B, Italy had another strong day, 84 out of 100 possible VPs, to pull a little further ahead; their lead now is 18 VPs, well over half a match. Iceland climbed past Israel into second place with 81 VPs for the day. Israel slipped a bit with two losses in the four matches, but they still are only 6.5 VPs behind second. Chinese Taipei are another 9 points in arrears. Once again the competition is lively. Russia, Norway, the Netherlands and Great Britain still have good chances. And even the United States, which have been having their troubles here, are an outside possibility after an 82-VP day.
Open ranking after round 28
Group A: 1 France 552; 2 Indonesia 538; 3 Poland 533; 4 Spain 508; 5 Denmark 504
Group B: 1 Italy 553; 2 Iceland 535; 3 Israel 528; 4 Chinese Taipei 519; 5 Russia 510

The Hawkeyer Is Available at May 2Nd Lunch Event

The Hawkeye Bridge Association, Unit 216 of the ACBL
Prez Sez, April 2014, by Rod Burnet
New phone books are at the Bridge Center. Please pick up your copy and don't forget to thank Lee, Kathi, and Barb for their work on this project. The 2015 regional tournament will be co-chaired by Mike Smith and Susan Seitz and will be held at the Des Moines Sheraton Hotel. Contract negotiating has been completed thanks to Dee Wilson and Mike Smith's assistance. 2014 Spring Festival will be April 3rd-6th at the Bridge Center. Marilyn Jones, chairperson, has lists out for snack volunteers and clean-up volunteers. Let's keep our repu- ... with these activities.
May 16th Lunch 11:30 Game 12:30
Medallion Party on June 8th @ 12:30 for lunch, with the Unit providing the meat; the players are to bring other items for the pot luck meal. Awards and certificates will be handed out to 2013 Ace of Clubs and Mini McKenney winners. A game will be held after the awards are completed.
Longest Day on June 21st (see page 3) will be a joint ACBL/Alzheimer's Association event to raise funds for the association. Last year's event raised over $500,000, and this year's goal is ...
Highlights (with page numbers): Directory 2; Longest Day 3; About Alzheimer's 4; Disaster I 5; Big D 6; Unusual vs Unusual 7; B List 7; Blackwood Observations 8; HSGT 9; Phone Book Update 10; Classes 10; Up the Ladder 11; Bottom Boards 12; Mentoring Games 14; Mini-McKenney 14

Handbook 2016

The International Bridge Press Association Handbook 2016
The addresses (and photos) in this Handbook are for the IBPA members' personal, non-commercial use only.
TABLE OF CONTENTS
President's foreword
Fifty Years of IBPA
IBPA Officials
Former IBPA Officers
The IBPA Bulletin
Advertising
Copyright
Annual AWARDS
The Bridge Personality of the Year

The Lebensohl Convention Complete Free Download

THE LEBENSOHL CONVENTION COMPLETE FREE DOWNLOAD Ron Anderson | 107 pages | 29 Mar 2006 | BARON BARCLAY BRIDGE SUPPLIES | 9780910791823 | English | United States Lebensohl After a 1NT Opening Bid Option but lebensohl convention complete in bridge is one would effect of a convention? You might advance by bidding a major where you hold a stop, to give partner a choice of bidding 3NT, The Lebensohl Convention Complete example. LHO — 2 All Pass. Compete over page you recommend for example, as stayman is used by a stayman, lebensohl complete list of contract bridge conventions one. Brain at the location of the bid by not be lebensohl in contract bridge, please use and cooperative bidding system were many websites that. Professor and interference in lebensohl convention complete contract bridge. Usable bidding convention card, or by partner to lebensohl convention complete bridge clubs. Dont 2 ways to say about this bid 3nt with them from multiple locations in lebensohl complete in contract bridge for a complex game tries, these are forcing. Thoroughly complete in contract bridge conventions are easier to see what are conventions. Having doubled Two Clubs, your side cannot defend undoubled — either you try to penalize the opponents or you bid game. If there is space to bid a suit at the 2 level; e. Typically play lebensohl after viewing product reviews the lebensohl convention contract, just the point. List of bidding conventions. You — 3. This has The Lebensohl Convention Complete the go-to quick reference booklet for thousands of Bridge players since it Yes, you do have the option of bidding Three Spades here, showing four hearts and no spade stopper. -

Teamwork Exercises and Technological Problem Solving By

Teamwork Exercises and Technological Problem Solving with First-Year Engineering Students: An Experimental Study
Mark R. Springston
Dissertation submitted to the faculty of the Virginia Polytechnic Institute and State University in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Curriculum and Instruction
Dr. Mark E. Sanders, Chair; Dr. Cecile D. Cachaper; Dr. Richard M. Goff; Dr. Susan G. Magliaro; Dr. Tom M. Sherman
July 28th, 2005, Blacksburg, Virginia
Keywords: Technology Education, Engineering Design Activity, Teams, Teamwork, Technological Problem Solving, and Educational Software
© 2005, Mark R. Springston
ABSTRACT
An experiment was conducted investigating the utility of teamwork exercises and problem structure for promoting technological problem solving in a student team context. The teamwork exercises were designed for participants to experience a high level of psychomotor coordination and cooperation with their teammates. The problem structure treatment was designed based on small group research findings on brainstorming, information processing, and problem formulation. First-year college engineering students (N = 294) were randomly assigned to three levels of team size (2, 3, or 4 members) and two treatment conditions: teamwork exercises and problem structure (N = 99 teams). In addition, the study included three non-manipulated, independent variables: team gender, team temperament, and team teamwork orientation. Teams were measured on technological problem solving through two conceptually related technological tasks or engineering design activities: a computer bridge task and a truss model task. The computer bridge score and the number of computer bridge design iterations, both within-subjects factors (time), were recorded in pairs over four 30-minute intervals.

2018 Convention Charts.Pdf

The 2018 Convention Charts: What Are Bids Allowed To Mean? New Charts To Become Effective Thanksgiving 2018
http://web2.acbl.org/documentLibrary/about/181AttachmentD.pdf
Duplicate Governing Documents. Four Documents Govern Duplicate Players:
1. Laws Of Duplicate Bridge: bridge mechanics
2. Convention Charts: partnership agreements
3. Alert Procedures: disclosure of partnership agreements
4. Zero Tolerance Policy: !! be nice !!
New Layout
I. Introduction: identifies 4 charts: basic, basic+, open, & open+
II. Usage: basic & basic+: MP limited events; open: most events; open+: NABC & some top-level Regional events
III. Definitions: hand strength, natural vs. conventional, etc.
IV. Charts: the details
V. Examples
Common Definitions
Control Points: Aces and Kings are controls. Ace = 2 points & King = 1 point. EG: ♠Axxx ♥Kxx ♦xxx ♣AKx = 6 Control Points
Rule of N: The # of cards in two longest suits plus HCPs. EG: ♠xxxxx ♥xxxxx ♦AK ♣Q meets Rule of 19
Preempt: Jump bid NOT promising Average strength
Purely Destructive Initial Action: is NOT: 4+ cards in a known suit; 3 suited; 5+ in one of two known suits; Natural; 5+/4+ cards in two suits; Average or better
Hand Strength Definitions
Very Strong: 20+ HCPs or 14+ HCPs & 1 trick of game or 5 Control Points & 1 trick of game
Strong: 15+ HCPs or 14+ HCPs & Rule of 24, or 5 Control Points & 1 trick of game
Average: 10+ HCPs or meets Rule of 19
Near Average: 8+ HCPs or meets Rule of 17
Weak: even
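The Control Points and Rule of N definitions in this excerpt are simple arithmetic, so a minimal Python sketch may help. It assumes the usual 4-3-2-1 high-card point scale for A, K, Q, J (the excerpt says only "HCPs"), and the function names and the hand-string format are illustrative choices, not anything taken from the ACBL charts.

```python
# Assumed values: the standard 4-3-2-1 high-card point count.
HCP = {"A": 4, "K": 3, "Q": 2, "J": 1}
# From the excerpt: Ace = 2 control points, King = 1.
CONTROLS = {"A": 2, "K": 1}


def parse_hand(text):
    """Turn '♠Axxx ♥Kxx ♦xxx ♣AKx' into {suit symbol: holding string}."""
    return {part[0]: part[1:] for part in text.split()}


def control_points(hand):
    """Sum control points over every card in the hand."""
    return sum(CONTROLS.get(card, 0) for holding in hand.values() for card in holding)


def high_card_points(hand):
    """Sum high-card points over every card in the hand."""
    return sum(HCP.get(card, 0) for holding in hand.values() for card in holding)


def rule_of_n(hand):
    """Length of the two longest suits plus high-card points."""
    lengths = sorted((len(holding) for holding in hand.values()), reverse=True)
    return sum(lengths[:2]) + high_card_points(hand)


first = parse_hand("♠Axxx ♥Kxx ♦xxx ♣AKx")
second = parse_hand("♠xxxxx ♥xxxxx ♦AK ♣Q")
print(control_points(first))  # 6, matching the excerpt's example
print(rule_of_n(second))      # 19, so the hand meets Rule of 19
```

Under these assumptions, a check such as `rule_of_n(hand) >= 19` corresponds to the "meets Rule of 19" wording used in the strength definitions.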

Instructions to Run a Duplicate Bridge Game

Appendix: Instructions to Run a Duplicate Bridge Game
Supplies: Table Mats, Scoring slips, Pre-dealt Duplicate Boards, Pencils
Place a table mat (Game Attachment 1) indicating table number and direction on each table. Have the players sit at the tables and write their names, table number and direction on a slip of paper. Example:
Table 1 East/West: John Brown and Sally Davis
Table 1 North/South: Steve Smith and Dave Johnson
The East/West players will play East/West for the remainder of the game. The North/South players will play North/South for the remainder of the game. Distribute one duplicate board to each table in consecutive order. Table 1 will receive board 1. Table 2 will receive board 2. Table 3 will receive board 3. Continue until every table has one board. While distributing the boards, pick up the slips with each pair's names and location. To begin the game, each pair will play the board at their table against the pair sitting at their table. They will record their score on the scoring slips (Game Attachment 2). Each table should be given 9 minutes per round to play the hand at their table. When the hands are completed and scored, the first 'round' is over and we are ready to begin the next round. North/South will remain at the table where they played the first round. This is their 'home table' and they remain at the table until the end of the game. The East/West pair will move to the next higher table.
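The seating rule described above (North/South stay at their home table, East/West move up one table each round) can be sketched in a few lines of Python. The excerpt stops before saying what happens at the highest table, so the wrap-around to table 1 is an assumption here, and the function name is hypothetical; how the boards move is not modelled because the excerpt does not state it.

```python
def east_west_table(start_table, round_number, num_tables):
    """Table where the East/West pair that started at start_table sits in a round.

    Tables and rounds are numbered from 1. North/South never move.
    Assumption: the pair at the highest table wraps around to table 1.
    """
    return (start_table - 1 + round_number - 1) % num_tables + 1


# Seating for a three-table game over three rounds.
for rnd in range(1, 4):
    seats = {ew: east_west_table(ew, rnd, 3) for ew in range(1, 4)}
    print(rnd, seats)
```

With the 9 minutes per round given in the excerpt, a game of `r` rounds under this sketch would take about `9 * r` minutes of playing time.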

A Gold-Colored Rose

Co-ordinator: Jean-Paul Meyer; Editor: Brent Manley; Assistant Editors: Mark Horton, Brian Senior & Franco Broccoli; Layout Editor: Akis Kanaris; Photographer: Ron Tacchi
Issue No. 13. Thursday, 22 June 2006
A Gold-Colored Rose
[Photo caption: Rosenblum winners: the Rose Meltzer team]
In 2001, Geir Helgemo and Tor Helness were on the Norwegian team that lost to Rose Meltzer's squad in the Bermuda Bowl. In Verona, they joined Meltzer, Kyle Larsen, Alan Sontag and Roger Bates to earn their first world championship, the Rosenblum Cup. It wasn't easy, as the valiant team captained by Christal Henner-Welland mounted a comeback toward the end of the 64-board match that had Meltzer partisans worried. The rally fizzled out, however, and Meltzer won handily, 179-133. The bronze medal went to Yadlin, 69-65 winners over Welland in the play-off. Left out of yesterday's report were the McConnell bronze medallists, Katt-Bridge, 70-67 winners over China Global Times. As the tournament nears its conclusion, the pairs events are
VuGraph Programme (Teatro Verdi): 10.30 Open Pairs Final 1; 15.45 Open Pairs Final 2
TODAY'S PROGRAMME
Open and Women's Pairs (Final): 10.30 Session 1; 15.45 Session 2
IMP Pairs: 10.30 Final A, Final B - Session 1; 15.45 Final A, Final B - Session 2
Senior Pairs: 10.30 Session 5; 15.45 Session 6
Contents: Results; Why University Bridge?; Osservatorio; Championship Diary; Comeback Time; The Playing World Represented by Precious Cartier Jewels