Making Snapshot Isolation Serializable

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

A Fast Implementation of Parallel Snapshot Isolation

A Fast Implementation of Parallel Snapshot Isolation (Una implementación rápida de Parallel Snapshot Isolation). Borja Arnau de Régil Basáñez. Final degree project (Trabajo de Fin de Grado), Grado en Ingeniería Informática, Facultad de Informática, Universidad Complutense de Madrid, June 2020. Director: Maria Victoria López López. Co-director: Alexey Gotsman. Contents: Acknowledgements; Abstract; Resumen; 1. Introduction (1.1. Motivation; 1.2. Goals; 1.3. Work Plan; 1.4. Document Structure; 1.5. Sources and Repositories; 1.6. Related Program Courses); 2. Preliminaries (2.1. Notation: 2.1.1. Objects and Replication, 2.1.2. Transactions, 2.1.3. Histories; 2.2. Consistency Models: 2.2.1. Read Committed (RC), 2.2.2. Serialisability (SER), 2.2.3. Snapshot Isolation (SI), 2.2.4. Parallel Snapshot Isolation (PSI), 2.2.5. Non-Monotonic Snapshot Isolation (NMSI), 2.2.6. Anomaly Comparison); 3. The fastPSI protocol (3.1. Consistency Guarantees; 3.2. Overview and System Model; 3.3. Server data structures; 3.4. Protocol Description: 3.4.1. Transaction Execution, 3.4.2. Transaction Termination; 3.5. Consistency Tradeoffs and Read Aborts); 4. Implementation and Evaluation (4.1. Implementation; 4.2. Evaluation: 4.2.1. Performance & Scalability Limits, 4.2.2. Abort Ratio); 5. Related Work; 6. Conclusions and Future Work (6.1. Conclusions; 6.2. Future Work); A. Serialisable and Read Committed Protocols: A.1. -

One-Copy Serializability with Snapshot Isolation Under the Hood

One-Copy Serializability with Snapshot Isolation under the Hood. Mihaela A. Bornea (Athens U. of Econ and Business), Orion Hodson (Microsoft Research), Sameh Elnikety (Microsoft Research), Alan Fekete (University of Sydney). Abstract: This paper presents a method that allows a replicated database system to provide a global isolation level stronger than the isolation level provided on each individual database replica. We propose a new multi-version concurrency control algorithm called serializable generalized snapshot isolation (SGSI), that targets middleware replicated database systems. Each replica runs snapshot isolation locally and the replication middleware guarantees global one-copy serializability. We introduce novel techniques to provide a stronger global isolation level, namely readset extraction and enhanced certification that prevents read-write and write-write conflicts in a replicated setting. We prove the correctness of the proposed algorithm, and build a prototype replicated database system to evaluate SGSI performance experimentally. Extensive experiments with an 8 replica database system under the TPC-W workload mixes demonstrate the … Body text excerpt: With careful design and engineering, SI engines can be replicated to get even higher performance with a replicated form of SI such as Generalized Snapshot Isolation (GSI) [13], which is also not serializable. The objective of this paper is to provide a concurrency control algorithm for replicated SI databases that achieves almost the same performance as GSI while providing global one-copy-serializability (1SR). To guarantee serializability, concurrency control algorithms typically require access to data read and written in update transactions. Accessing this data is especially difficult in a replicated database system since built-in facilities inside a centralized DBMS, like the lock manager, are not available at a global level. -

Firebird 3.0 Developer's Guide

Firebird 3.0 Developer’s Guide. Denis Simonov. Version 1.1, 27 June 2020. Preface. Author of the written material and creator of the sample project on five development platforms, originally as a series of magazine articles: Denis Simonov. Translation of original Russian text to English: Dmitry Borodin (MegaTranslations Ltd). Editor of the translated text: Helen Borrie. Copyright © 2017-2020 Firebird Project and all contributing authors, under the Public Documentation License Version 1.0. Please refer to the License Notice in the Appendix. This volume consists of chapters that walk through the development of a simple application for several language platforms, notably Delphi, Microsoft Entity Framework and MVC.NET (“Model-View-Controller”) for web applications, PHP and Java with the Spring framework. It is hoped that the work will grow in time, with contributions from authors using other stacks with Firebird. Table of Contents: 1. About the Firebird Developer’s Guide: for Firebird 3.0 (1.1. About the Author: 1.1.1. Translation…, 1.1.2. … and More Translation; 1.2. Acknowledgments); 2. The examples.fdb Database (2.1. Database Creation Script: 2.1.1. Database Aliases; 2.2. Creating the Database Objects: 2.2.1. Domains, 2.2.2. Primary Tables, 2.2.3. Secondary Tables, 2.2.4. Stored Procedures, 2.2.5. Roles and Privileges for Users; 2.3. Saving and Running the Script; 2.4. Loading Test Data); 3. Developing Firebird Applications in Delphi: 3.1. -

Ensuring Consistency Under the Snapshot Isolation

World Academy of Science, Engineering and Technology, International Journal of Computer and Information Engineering, Vol:8, No:7, 2014. Ensuring Consistency under the Snapshot Isolation. Carlos Roberto Valêncio, Fábio Renato de Almeida, Thatiane Kawabata, Leandro Alves Neves, Julio Cesar Momente, Mario Luiz Tronco, Angelo Cesar Colombini. Abstract: By running transactions under the SNAPSHOT isolation we can achieve a good level of concurrency, specially in databases with high-intensive read workloads. However, SNAPSHOT is not immune to all the problems that arise from competing transactions and therefore no serialization warranty exists. We propose in this paper a technique to obtain data consistency with SNAPSHOT by using some special triggers that we named DAEMON TRIGGERS. Besides keeping the benefits of the SNAPSHOT isolation, the technique is specially useful for those database systems that do not have an isolation level that ensures serializability, like Firebird and Oracle. We describe all the anomalies that might arise when using the SNAPSHOT isolation and show how to preclude them with DAEMON TRIGGERS. Body text excerpt: … both transactions write the same data item, giving rise to a write-write conflict. In turn, a temporal overlap occurs when $T_i$ and $T_j$ run concurrently, that is $ts_i < tc_j$ and $ts_j < tc_i$ [2]. Thus, a transaction $T_i$ under the SNAPSHOT isolation is allowed to commit only if no other transaction with commit-timestamp $\delta$ in the running time of $T_i$ ($ts_i < \delta < tc_i$) wrote a data item also written by $T_i$ [4]. Implementations of the SNAPSHOT isolation have the advantage that writes of a transaction do not block the reads of others. -

Where We Are Snapshot Isolation Snapshot Isolation

CSE 444: Database Internals. Lecture 16, Transactions: Snapshot Isolation. Magda Balazinska - CSE 444, Spring 2012. Where We Are • ACID properties of transactions • Concept of serializability • How to provide serializability with locking • Lower levels of isolation with locking • How to provide serializability with optimistic cc: Timestamps/Multiversion or Validation • Today: lower level of isolation with multiversion cc: Snapshot isolation. Snapshot Isolation • Not described in the book, but good overview in Wikipedia. Snapshot Isolation • A type of multiversion concurrency control algorithm • Provides yet another level of isolation • Very efficient, and very popular: Oracle, PostgreSQL, SQL Server 2005 • Prevents many classical anomalies BUT… • Not serializable (!), yet ORACLE and PostgreSQL use it even for SERIALIZABLE transactions! But “serializable snapshot isolation” now in PostgreSQL. Magda Balazinska - CSE 444, Fall 2010. Snapshot Isolation Rules • Each transaction receives a timestamp TS(T) • Transaction T sees snapshot at time TS(T) of the database • When T commits, updated pages are written to disk • Write/write conflicts resolved by “first … Snapshot Isolation (Details) • Multiversion concurrency control: versions of X: $X_{t_1}, X_{t_2}, X_{t_3}, \ldots$ • When T reads X, return $X_{TS(T)}$. • When T writes X: if other transaction updated X, abort. Not faithful to “first committer” rule, because the other transaction U might have committed after T. But once we abort -

SQL: Transactions Introduction to Databases Compsci 316 Fall 2017 2 Announcements (Tue., Oct

SQL: Transactions. Introduction to Databases, CompSci 316, Fall 2017. Announcements (Tue., Oct. 17) • Midterm graded • Sample solution already posted on Sakai • Project Milestone #1 feedback by email this weekend. Transactions • A transaction is a sequence of database operations with the following properties (ACID): • Atomic: Operations of a transaction are executed all-or-nothing, and are never left “half-done” • Consistency: If all database constraints are satisfied at the start of a transaction, they should remain satisfied at the end of the transaction • Isolation: Transactions must behave as if they were executed in complete isolation from each other • Durability: If the DBMS crashes after a transaction commits, all effects of the transaction must remain in the database when the DBMS comes back up. SQL transactions • A transaction is automatically started when a user executes an SQL statement • Subsequent statements in the same session are executed as part of this transaction • Statements see changes made by earlier ones in the same transaction • Statements in other concurrently running transactions do not • COMMIT command commits the transaction • Its effects are made final and visible to subsequent transactions • ROLLBACK command aborts the transaction • Its effects are undone. Fine print • Schema operations (e.g., CREATE TABLE) implicitly commit the current transaction • Because it is often difficult to undo a schema operation • Many DBMS support an AUTOCOMMIT feature, which automatically commits every single statement • You -

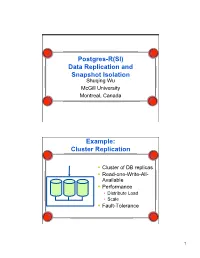

Postgres-R(SI) Data Replication and Snapshot Isolation Example

Postgres-R(SI): Data Replication and Snapshot Isolation. Shuqing Wu, McGill University, Montreal, Canada. Example: Cluster Replication • Cluster of DB replicas • Read-one-Write-All-Available • Performance (distribute load; scale) • Fault-Tolerance. Typical Replica Control • Keep copies consistent: execute each update first at one replica; return to user once one update has succeeded; propagate updates to other replicas or execute updates at other replicas either after return to user (lazy) or before return to user (eager). Replica Control • Challenge: guarantee that updates succeed at all replicas; combine with concurrency control • Primary Copy: updates executed at primary and then sent to secondaries; secondaries only accept queries; easy concurrency control • Update Everywhere: each replica accepts update requests and propagates changes to others; complex concurrency control but flexible. Middleware based • Client submits operations (e.g., SQL statements) to middleware • Middleware coordinates database replicas and determines which operations are executed where. Middleware based • Many research prototypes, some commercial systems • Pro: no changes to DBS; heterogeneous setup possible • Cons: each solution has particular restrictions, for example: transactions must be declared read-only/update in advance (primary copy approaches); transaction must declare all tables to be accessed in advance; operations cannot be submitted one by one but in a bunch; update must be executed on all replicas before confirmation is returned to user. Each operation is filtered through the middleware: inappropriate in any WAN communication (e.g., client/middleware). Kernel Based • Each client connected to one replica • Transaction executed locally • -

Precisely Serializable Snapshot Isolation

Precisely Serializable Snapshot Isolation. Stephen Revilak, Ph.D. Dissertation, supervised by Patrick O'Neil, University of Massachusetts Boston, Nov. 8, 2011. What is Snapshot Isolation? Snapshot Isolation (SI) is a form of multiversion concurrency control. • The technique was first published in a 1995 paper, A Critique of ANSI Isolation Levels • SI is used in a variety of DBMS Systems: Oracle, Postgres, Microsoft SQL Server, BerkeleyDB, and others. SI's advantages and disadvantages: • Advantages: Good throughput. Reads never wait for writes. Writes never wait for reads. • Disadvantage: SI is not serializable; it permits anomalies that locking avoids. What is PSSI? PSSI = Precisely Serializable Snapshot Isolation. PSSI is a set of extensions to SI that provide serializability. • PSSI detects dependency cycles; PSSI aborts transactions to break these cycles. • PSSI retains much of SI's throughput advantage. (Reads and writes do not block each other.) • PSSI is precise; PSSI minimizes the number of unnecessary aborts by waiting for complete cycles to form. Talk Outline • Snapshot Isolation, SI anomalies, dependency theory • PSSI design • Testing and Experimental results. Mechanics of Snapshot Isolation • Each transaction has two timestamps: a start timestamp, start(Ti), and a commit timestamp, commit(Ti). • All data is versioned (labeled with the writer's transaction id). Different transactions may read different versions of a single data item x. • When Ti reads x, Ti reads the last version of x committed prior to start(Ti) • When Ti writes x, xi becomes visible to Tj that start after commit(Ti). -
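
The mechanics listed in the excerpt above can be sketched in a few lines of Python. This is an illustrative toy under stated assumptions, not code from the dissertation: the names `Store`, `Transaction`, `WriteConflict`, and `withdraw` are hypothetical, a single in-process counter stands in for the timestamp source, and the commit-time check is the first-committer-wins rule as SI is commonly described rather than something the excerpt itself states. The last lines replay a write-skew schedule, the kind of anomaly that makes plain SI non-serializable.

```python
import itertools


class WriteConflict(Exception):
    """A concurrent transaction already committed a write to the same item."""


class Store:
    def __init__(self, initial):
        self._clock = itertools.count(1)  # assumed single logical clock
        # item -> list of (commit_ts, value) in commit order; ts 0 = initial state
        self.versions = {key: [(0, value)] for key, value in initial.items()}

    def tick(self):
        return next(self._clock)

    def begin(self):
        return Transaction(self, self.tick())


class Transaction:
    def __init__(self, store, start_ts):
        self.store = store
        self.start_ts = start_ts
        self.writes = {}  # private write buffer, invisible to others until commit

    def read(self, key):
        if key in self.writes:  # a transaction sees its own writes
            return self.writes[key]
        # Last version committed prior to this transaction's start timestamp.
        visible = [v for ts, v in self.store.versions[key] if ts < self.start_ts]
        return visible[-1]

    def write(self, key, value):
        self.writes[key] = value

    def commit(self):
        # First-committer-wins: fail if any item in the write set was
        # committed by another transaction after this one started.
        for key in self.writes:
            history = self.store.versions.get(key, [(0, None)])
            if history[-1][0] > self.start_ts:
                raise WriteConflict(key)
        commit_ts = self.store.tick()
        for key, value in self.writes.items():
            self.store.versions.setdefault(key, []).append((commit_ts, value))
        return commit_ts


def withdraw(txn, key, amount):
    # Application-level rule checked against the transaction's own snapshot:
    # the combined balance of x and y must cover the withdrawal.
    if txn.read("x") + txn.read("y") >= amount:
        txn.write(key, txn.read(key) - amount)


# Write skew: both transactions read the same snapshot, write different items.
store = Store({"x": 50, "y": 50})
t1, t2 = store.begin(), store.begin()
withdraw(t1, "x", 100)
withdraw(t2, "y", 100)
t1.commit()
t2.commit()  # write sets are disjoint, so no conflict is detected
final = store.begin()
total = final.read("x") + final.read("y")
```

Each withdrawal is valid against its own snapshot, yet after both commits `total` is negative, a state that no serial order of the two transactions could produce. Techniques such as PSSI aim to detect and abort exactly this kind of schedule.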

Snapshot Isolation

Advanced Database Systems: Course introduction. Feliz Gouveia, UFP, January 2013. Introduction • 6 ECTS course of the 1st year, 2nd cycle of Engenharia Informática (Computer Science) • The Advanced Database Systems course extends the topics of the introductory Database Management Systems course, and introduces new ones: OODB, Spatial data, columnar databases. Degree structure (2nd cycle) 1st year 2nd year IS Planning and Development / Mobile Final Project I (12) Networks and Services I (6) Mobile Computing (6) Knowledge Management / Mobile Networks and Services II (3) Professional Ethics (2) Multimedia and Interactive Systems / Mobile Applications Programming (4) Academic Internship (8) Programming Paradigms / Mobile Applications Project (4) Advanced Database Systems (6) Elective course (4) Artificial Intelligence (8) Research Methods (3) Distributed Systems (8) Final Project II (3) Man-Machine Interaction (6) Dissertation (27) Computer Security and Auditing (6) Elective course (4). Course goals • To provide the students with a better understanding of the essential techniques used in a Database Management System, either by revisiting them or by studying new approaches. • To provide students with knowledge to choose, design, and implement a DBMS in a complex domain, making the best use of the available tools and techniques. • To provide students with knowledge to analyze and tune a given DBMS, given a workload and usage patterns. • To allow the students to learn and experiment with advanced database techniques, models and products, and to provide them with the knowledge to take decisions concerning implementation issues. • To provide students with knowledge to analyze, modify if necessary, and experiment with algorithms that make up the database internals. • To expose students to advanced topics and techniques that appear to be promising research directions. -

Serializable Isolation for Snapshot Databases

Serializable Isolation for Snapshot Databases. This thesis is submitted in fulfillment of the requirements for the degree of Doctor of Philosophy in the School of Information Technologies at The University of Sydney. Michael James Cahill, August 2009. Copyright © 2009 Michael James Cahill. All Rights Reserved. Abstract: Many popular database management systems implement a multiversion concurrency control algorithm called snapshot isolation rather than providing full serializability based on locking. There are well-known anomalies permitted by snapshot isolation that can lead to violations of data consistency by interleaving transactions that would maintain consistency if run serially. Until now, the only way to prevent these anomalies was to modify the applications by introducing explicit locking or artificial update conflicts, following careful analysis of conflicts between all pairs of transactions. This thesis describes a modification to the concurrency control algorithm of a database management system that automatically detects and prevents snapshot isolation anomalies at runtime for arbitrary applications, thus providing serializable isolation. The new algorithm preserves the properties that make snapshot isolation attractive, including that readers do not block writers and vice versa. An implementation of the algorithm in a relational database management system is described, along with a benchmark and performance study, showing that the throughput approaches that of snapshot isolation in most cases. Acknowledgements: Firstly, I would like to thank my supervisors, Dr Alan Fekete and Dr Uwe Röhm, without whose guidance I would not have reached this point. Many friends and colleagues, both at the University of Sydney and at Oracle, have helped me to develop my ideas and improve their expression. -

A Critique of ANSI SQL Isolation Levels

A Critique of ANSI SQL Isolation Levels. Hal Berenson (Microsoft Corp.), Phil Bernstein (Microsoft Corp.), Jim Gray (Microsoft Corp.), Jim Melton (Sybase Corp.), Elizabeth O’Neil (UMass/Boston), Patrick O'Neil (UMass/Boston). June 1995. Technical Report MSR-TR-95-51, Microsoft Research, Advanced Technology Division, Microsoft Corporation, One Microsoft Way, Redmond, WA 98052. “A Critique of ANSI SQL Isolation Levels,” Proc. ACM SIGMOD 95, pp. 1-10, San Jose CA, June 1995, © ACM. A Critique of ANSI SQL Isolation Levels. Hal Berenson (Microsoft Corp.), Phil Bernstein (Microsoft Corp.), Jim Gray (U.C. Berkeley), Jim Melton (Sybase Corp.), Elizabeth O’Neil (UMass/Boston), Patrick O'Neil (UMass/Boston). Abstract: ANSI SQL-92 [MS, ANSI] defines Isolation Levels in terms of phenomena: Dirty Reads, Non-Repeatable Reads, and Phantoms. This paper shows that these phenomena and the ANSI SQL definitions fail to characterize several popular isolation levels, including the standard locking implementations of the levels. Investigating the ambiguities of the phenomena leads to … Body text excerpt: … distinction is not crucial for a general understanding. The ANSI isolation levels are related to the behavior of lock schedulers. Some lock schedulers allow transactions to vary the scope and duration of their lock requests, thus departing from pure two-phase locking. This idea was introduced by [GLPT], which defined Degrees of Consistency in three ways: locking, data-flow graphs, and anomalies. -

Serializable Snapshot Isolation for Replicated Databases in High-Update Scenarios

Serializable Snapshot Isolation for Replicated Databases in High-Update Scenarios. Hyungsoo Jung (University of Sydney), Hyuck Han (Seoul National University), Alan Fekete (University of Sydney), Uwe Röhm (University of Sydney). ABSTRACT: Many proposals for managing replicated data use sites running the Snapshot Isolation (SI) concurrency control mechanism, and provide 1-copy SI or something similar, as the global isolation level. This allows good scalability, since only ww-conflicts need to be managed globally. However, 1-copy SI can lead to data corruption and violation of integrity constraints [5]. 1-copy serializability is the global correctness condition that prevents data corruption. We propose a new algorithm Replicated Serializable Snapshot Isolation (RSSI) that uses SI at each site, and combines this with a certification algorithm to guarantee 1-copy serializable global execution. Body text excerpt: … aspects of this work. Among these is the value of Snapshot Isolation (SI) rather than serializability. SI is widely available in DBMS engines like Oracle DB, PostgreSQL, and Microsoft SQL Server. By using SI in each replica, and delivering 1-copy SI as the global behavior, most recent proposals have obtained improved scalability, since local SI can be combined into (some variant of) global 1-copy SI by handling ww-conflicts, but ignoring rw-conflicts. This has been the dominant replication approach in the literature [10, 11, 12, 13, 23, 27]. While there are good reasons for replication to make use of SI as a local isolation level (in particular, it is the best