Petascale Data Science Challenges in Astronomy

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Scientific Data Mining in Astronomy

SCIENTIFIC DATA MINING IN ASTRONOMY Kirk D. Borne Department of Computational and Data Sciences, George Mason University, Fairfax, VA 22030, USA [email protected] Abstract We describe the application of data mining algorithms to research problems in astronomy. We posit that data mining has always been fundamental to astronomical research, since data mining is the basis of evidence-based discovery, including classification, clustering, and novelty discovery. These algorithms represent a major set of computational tools for discovery in large databases, which will be increasingly essential in the era of data-intensive astronomy. Historical examples of data mining in astronomy are reviewed, followed by a discussion of one of the largest data-producing projects anticipated for the coming decade: the Large Synoptic Survey Telescope (LSST). To facilitate data-driven discoveries in astronomy, we envision a new data-oriented research paradigm for astronomy and astrophysics – astroinformatics. Astroinformatics is described as both a research approach and an educational imperative for modern data-intensive astronomy. An important application area for large time-domain sky surveys (such as LSST) is the rapid identification, characterization, and classification of real-time sky events (including moving objects, photometrically variable objects, and the appearance of transients). We describe one possible implementation of a classification broker for such events, which incorporates several astroinformatics techniques: user annotation, semantic tagging, metadata markup, heterogeneous data integration, and distributed data mining. Examples of these types of collaborative classification and discovery approaches within other science disciplines are presented. arXiv:0911.0505v1 [astro-ph.IM] 3 Nov 2009 1 Introduction It has been said that astronomers have been doing data mining for centuries: “the data are mine, and you cannot have them!”. -

Astroinformatics: Image Processing and Analysis of Digitized Astronomical Data with Web-Based Implementation

ASTROINFORMATICS: IMAGE PROCESSING AND ANALYSIS OF DIGITIZED ASTRONOMICAL DATA WITH WEB-BASED IMPLEMENTATION N. Kirov(1;2), O. Kounchev(2), M. Tsvetkov(3) (1)Computer Science Department, New Bulgarian University (2)Institute of Mathematics and Informatics, Bulgarian Academy of Sciences (3)Institute of Astronomy, Bulgarian Academy of Sciences [email protected], [email protected], [email protected] ABSTRACT The newly born area of Astroinformatics has arisen as an interdisciplinary domain from Astronomy and Information and Communication Technologies (ICT). Recently, four institutes of the Bulgarian Academy of Sciences launched a joint project called “Astroinformatics”. The main goal of the project is the development of methods and techniques for preservation and exploitation of the scientific, cultural and historic heritage of the astronomical observations. The Wide-Field Plate Data Base is an ICT project of the Institute of Astronomy, which has been launched in 1991, supported by International Astronomic Union [1]. Now over two million photographic plates, obtained with professional telescopes at 125 observatories worldwide, are identified and collected in this database. Digitization of photographic plates is an actual task for astronomical society. So far 150 000 plates have been digitized through several European research programs. As a result, a huge amount of data is collected (with about 2TB in size). The access, manipulation and data mining of this data is a serious challenge for the ICT community. In this article we discuss goals, tasks and achievements in the area of Astroinformatics. 1 THE WIDE-FIELD PLATE DATA BASE The basic information for all wide-field (> 1°) photographic astronomical plate archives and for their content, stored in many observatories is collected in the Wide-Field Plate Database (WFPDB, skyarchive.org), established in 1991 and developed in the Institute of Astronomy, Bulgarian Academy of Sciences. -

Astro2020 State of the Profession Consideration White Paper

Astro2020 State of the Profession Consideration White Paper Realizing the potential of astrostatistics and astroinformatics September 27, 2019 Principal Author: Name: Gwendolyn Eadie4;5;6;15;17 Email: [email protected] Co-authors: Thomas Loredo1;19, Ashish A. Mahabal2;15;16;18, Aneta Siemiginowska3;15, Eric Feigelson7;15, Eric B. Ford7;15, S.G. Djorgovski2;20, Matthew Graham2;15;16, Željko Ivezić6;16, Kirk Borne8;15, Jessi Cisewski-Kehe9;15;17, J. E. G. Peek10;11, Chad Schafer12;19, Padma A. Yanamandra-Fisher13;15, C. Alex Young14;15 1Cornell University, Cornell Center for Astrophysics and Planetary Science (CCAPS) & Department of Statistical Sciences, Ithaca, NY 14853, USA 2Division of Physics, Mathematics, & Astronomy, California Institute of Technology, Pasadena, CA 91125, USA 3Center for Astrophysics | Harvard & Smithsonian, Cambridge, MA 02138, USA 4eScience Institute, University of Washington, Seattle, WA 98195, USA 5DIRAC Institute, Department of Astronomy, University of Washington, Seattle, WA 98195, USA 6Department of Astronomy, University of Washington, Seattle, WA 98195, USA 7Penn State University, University Park, PA 16802, USA 8Booz Allen Hamilton, Annapolis Junction, MD, USA 9Department of Statistics & Data Science, Yale University, New Haven, CT 06511, USA 10Department of Physics & Astronomy, Johns Hopkins University, Baltimore, MD 21218, USA arXiv:1909.11714v1 [astro-ph.IM] 25 Sep 2019 11Space Telescope Science Institute, Baltimore, MD 21218, USA 12Department of Statistics & Data Science Carnegie Mellon University, Pittsburgh, -

Ontology Architectures for the Orbital Space Environment and Space Situational Awareness Domain

Ontology Architectures for the Orbital Space Environment and Space Situational Awareness Domain Robert John ROVETTOa,1 a University of Maryland, College Park Alumnus (2007), The State University of New York at Buffalo Alumnus (2011) American Public University System/AMU, space studies Abstract. This paper applies some ontology architectures to the space domain, specifically the orbital and near-earth space environment and the space situational awareness domain. I briefly summarize local, single and hybrid ontology architectures, and offer potential space ontology architectures for each by showing how actual space data sources and space organizations would be involved. Keywords. Ontology, formal ontology, ontology engineering, ontological architecture, space ontology, space environment ontology, space object ontology, space domain ontology, space situational awareness ontology, data-sharing, astroinformatics. 1. Introduction This paper applies some general ontology architectures to the space domain, specifically the orbital space environment and the space situational awareness (SSA) domain. As the number of artificial satellites in orbit increases, the potential for orbital debris and orbital collisions increases. This spotlights the need for more accurate and complete situational awareness of the space environment. This, in turn, requires gathering more data, sharing it, and analyzing it to generate knowledge that should be actionable in order to safeguard lives and infrastructure in space and on the surface of Earth. Toward this, ontologies may provide one avenue. This paper serves to introduce the space community to some ontology architecture options when considering computational ontology for their space data and space domain-modeling needs. I briefly summarize local, single and hybrid ontology architectures [1][2], and offer potential space domain architectures for each by showing how actual space data sources and space organizations would be involved. -

Astroinformatics Projects

Astroinformatics, Proceedings IAU Symposium No. 325, 2016. M. Brescia, S.G. Djorgovski, E. Feigelson, G. Longo & S. Cavuoti, eds. © International Astronomical Union 2017. doi:10.1017/S1743921317003453 The changing landscape of astrostatistics and astroinformatics Eric D. Feigelson Center for Astrostatistics and Department of Astronomy and Astrophysics, Pennsylvania State University, University Park PA 16802 USA email: [email protected] Abstract. The history and current status of the cross-disciplinary fields of astrostatistics and astroinformatics are reviewed. Astronomers need a wide range of statistical methods for both data reduction and science analysis. With the proliferation of high-throughput telescopes, efficient large scale computational methods are also becoming essential. However, astronomers receive only weak training in these fields during their formal education. Interest in the fields is rapidly growing with conferences organized by scholarly societies, textbooks and tutorial workshops, and research studies pushing the frontiers of methodology. R, the premier language of statistical computing, can provide an important software environment for the incorporation of advanced statistical and computational methodology into the astronomical community. Keywords. data analysis, cosmology, statistics, computer science, machine learning, high performance computing, education An aphorism: The scientist collects data in order to understand natural phenomena. The statistician helps the scientist acquire understanding from the data. The computer scientist helps the scientist perform the needed calculations (*). -

Astroinformatics: at the Intersection of Machine Learning, Automated

Astroinformatics: at the intersection of Machine Learning, Automated Information Extraction, and Astronomy Kirk Borne Dept of Computational & Data Sciences George Mason University [email protected] , http://classweb.gmu.edu/kborne/ Responding to the Data Flood • Big Data is a national challenge and a national priority … see August 9, 2010 announcement from OMB and OSTP @ http://www.aip.org/fyi (#87) • More data is not just more data… more is different ! • Several national study groups have issued reports on the urgency of establishing scientific and educational programs to face the data flood challenges. • Each of these reports has issued a call to action in response to the data avalanche in science, engineering, and the global scholarly environment. Data Sciences: A National Imperative 1. National Academies report: Bits of Power: Issues in Global Access to Scientific Data, (1997) downloaded from http://www.nap.edu/catalog.php?record_id=5504 2. NSF (National Science Foundation) report: Knowledge Lost in Information: Research Directions for Digital Libraries, (2003) downloaded from http://www.sis.pitt.edu/~dlwkshop/report.pdf 3. NSF report: Cyberinfrastructure for Environmental Research and Education, (2003) downloaded from http://www.ncar.ucar.edu/cyber/cyberreport.pdf 4. NSB (National Science Board) report: Long-lived Digital Data Collections: Enabling Research and Education in the 21st Century, (2005) downloaded from http://www.nsf.gov/nsb/documents/2005/LLDDC_report.pdf 5. NSF report with the Computing Research Association: Cyberinfrastructure for Education and Learning for the Future: A Vision and Research Agenda, (2005) downloaded from http://www.cra.org/reports/cyberinfrastructure.pdf 6. NSF Atkins Report: Revolutionizing Science & Engineering Through Cyberinfrastructure: Report of the NSF Blue-Ribbon Advisory Panel on Cyberinfrastructure, (2005) downloaded from http://www.nsf.gov/od/oci/reports/atkins.pdf 7. -

Immersive and Collaborative Data Visualization Using Virtual Reality Platforms

2014 IEEE International Conference on Big Data Immersive and Collaborative Data Visualization Using Virtual Reality Platforms Ciro Donalek, S. G. Djorgovski, Alex Cioc, Anwell Wang, Jerry Zhang, Elizabeth Lawler, Stacy Yeh, Ashish Mahabal, Matthew Graham, Andrew Drake California Institute of Technology Pasadena, CA 91125, USA [donalek,george]@astro.caltech.edu Scott Davidoff, Jeffrey S. Norris Jet Propulsion Laboratory Pasadena, CA 91109, USA [Scott.Davidoff, jeffrey.s.norris]@jpl.nasa.gov Giuseppe Longo University Federico II Napoli, Italy [email protected] Abstract — Effective data visualization is a key part of the discovery process in the era of “big data”. It is the bridge between the quantitative content of the data and human intuition, and thus an essential component of the scientific path from data into knowledge and understanding. Visualization is also essential in the data mining process, directing the choice of the applicable algorithms, and in helping to identify and remove bad data from the analysis. However, a high complexity or a high dimensionality of modern data sets represents a critical obstacle. How do we visualize interesting structures and patterns that may exist in hyper-dimensional data spaces? A better understanding of how we can perceive and interact with multi-dimensional information poses some deep questions in the field of cognition technology and human-computer interaction. The key point is that “big data science” is not about data: it is about discovery and understanding of meaningful patterns hidden in the data. Massive and complex data sets – no matter how content-rich or how expensively obtained – are of no use if we cannot discover interesting patterns in them. This applies to data both from measurements, and data generated as models or simulations that need to be compared and interpreted. Visualization is the main bridge between the quantitative content of the data and human intuition, and it can be argued that we cannot really understand or intuitively comprehend -

Teaching of Astroinformatics at the University of Belgrade

Proceedings of the VII Bulgarian-Serbian Astronomical Conference (VII BSAC) Chepelare, Bulgaria, June 1-4, 2010, Editors: M. K. Tsvetkov, M. S. Dimitrijević, K. Tsvetkova, O. Kounchev, Ž. Mijajlović Publ. Astron. Soc. “Rudjer Bošković” No 11, 2012, 89-96 TEACHING OF ASTROINFORMATICS AT THE UNIVERSITY OF BELGRADE NADEŽDA PEJOVIĆ Faculty of Mathematics, University of Belgrade E-mail: [email protected] Abstract. The aim of this paper is to present the studies of astroinformatics at the Faculty of Mathematics of the Belgrade University. The studies of astroinformatics were started in 2009 and they are organized according to the Bologna declaration. 1. INTRODUCTION The Bologna Declaration is the main document which has conditioned the education reorganization throughout Europe. This declaration was signed in 1999 by the education Ministers of the EU member-countries. Till the present moment this document has been signed by a majority of countries including ours as well. The Bologna process is aimed at the formation of a unique European system of university teaching and scientific research till 2010. In this way one tends to form a more efficient system of advanced education in Europe which is in accordance with the world knowledge market. From the school year 2006/07 at the Faculty of Mathematics the teaching in mathematics, informatics and astronomy has been reformed in accordance with the Bologna process. According to the Law of the Republic of Serbia concerning the post-secondary school education, which follows the Bologna Declaration, there are three levels of university education: Basic academic studies lasting 3 or 4 years. The condition to finish them is to collect 180 or 240 EPTS points. -

Data Mining CS 584 Data Mining (Fall 2016)

Introduction to Data Mining CS 584 Data Mining (Fall 2016) Huzefa Rangwala Associate Professor, Computer Science George Mason University Email: [email protected] Website: www.cs.gmu.edu/~hrangwal Slides are adapted from the available book slides developed by Tan, Steinbach and Kumar Roadmap for Today Welcome & Introduction ◦ Survey (Show of hands) Introduction to Data Mining ◦ Examples, Motivation, Definition, Methods Administrative/ Class Policies & Syllabus ◦ Grading, Assignments, Exams, Policies 10-15 minute break. Data ◦ Lets begin! What do you think of data mining? • Please could you write down examples that you know of or have heard of on the provided index card. • Also write down your own definition. Source: xkcd.com Data Deluge http://www.economist.com/node/15579717 Political Data Mining Inside the Secret World of the Data Crunchers Who Helped Obama Win Read more: http://swampland.time.com/2012/11/07/inside-the-secret-world-of-quants-and-data-crunchers-who-helped-obama-win/#ixzz2IuhEmNcB Mining Truth From Data Babel --- Nate Silver http://www.nytimes.com/2012/10/24/books/nate-silvers-signal-and-the-noise-examines-predictions.html?_r=0 Today (08/29) Source: http://projects.fivethirtyeight.com/2016-election-forecast/?ex_cid=rrpromo Large-scale Data is Everywhere! • There has been enormous data growth in both commercial and scientific databases due to advances in data generation and collection technologies • New mantra • Gather whatever data you can whenever and wherever possible. Image labels: Homeland Security; Business Data; Geo-spatial -

The Tonnabytes Big Data Challenge

The Tonnabytes Big Data Challenge: Transforming Science and Education Kirk Borne George Mason University Ever since we first began to explore our world… … humans have asked questions and … … have collected evidence (data) to help answer those questions. Astronomy: the world’s second oldest profession! Characteristics of Big Data • Big quantities of data are acquired everywhere now. But… • What do we mean by “big”? • Gigabytes? Terabytes? Petabytes? Exabytes? • The meaning of “big” is domain-specific and resource-dependent (data storage, I/O bandwidth, computation cycles, communication costs) • I say … we all are dealing with our own “tonnabytes” • There are 4 dimensions to the Big Data challenge: 1. Volume (tonnabytes data challenge) 2. Complexity (variety, curse of dimensionality) 3. Rate of data and information flowing to us (velocity) 4. Verification (verifying inference-based models from data) • Therefore, we need something better to cope with the data tsunami … Data Science – Informatics – Data Mining Examples of Recommendations**: Inference from Massive or Complex Data • Advances in fundamental mathematics and statistics are needed to provide the language, structure, and tools for many needed methodologies of data-enabled scientific inference. – Example: Machine learning in massive data sets • Algorithmic advances in handling massive and complex data are crucial. • Visualization (visual analytics) and citizen science (human computation or data processing) will play key roles. • ** From the NSF report: Data-Enabled Science in the Mathematical and Physical Sciences, (2010) http://www.cra.org/ccc/docs/reports/DES-report_final.pdf This graphic says it all … • Clustering – examine the data and find the data clusters (clouds), without considering what the items are = Characterization! • Classification – for each new data item, try to place it within a known class (i.e., a known category or cluster) = Classify!
• Outlier Detection – identify those data items that don’t fit into the known classes or clusters = Surprise! Graphic provided by Professor S. -

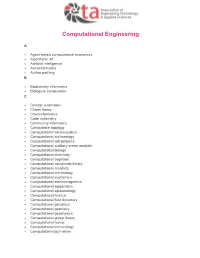

AETA-Computational-Engineering.Pdf

Computational Engineering. A: Agent-based computational economics, Algorithmic art, Artificial intelligence, Astroinformatics, Author profiling. B: Biodiversity informatics, Biological computation. C: Cellular automaton, Chaos theory, Cheminformatics, Code stylometry, Community informatics, Computable topology, Computational aeroacoustics, Computational archaeology, Computational astrophysics, Computational auditory scene analysis, Computational biology, Computational chemistry, Computational cognition, Computational complexity theory, Computational creativity, Computational criminology, Computational economics, Computational electromagnetics, Computational epigenetics, Computational epistemology, Computational finance, Computational fluid dynamics, Computational genomics, Computational geometry, Computational geophysics, Computational group theory, Computational humor, Computational immunology, Computational journalism, Computational law, Computational learning theory, Computational lexicology, Computational linguistics, Computational lithography, Computational logic, Computational magnetohydrodynamics, Computational Materials Science, Computational mechanics, Computational musicology, Computational neurogenetic modeling, Computational neuroscience, Computational number theory, Computational particle physics, Computational photography, Computational physics, Computational science, Computational engineering, Computational scientist, Computational semantics, Computational semiotics, Computational social science, Computational sociology, Computational -

Report of the 2014 Committee of Visitors for the Division of Astronomical Sciences of the National Science Foundation December 17-19, 2014

Report of the 2014 Committee of Visitors for the Division of Astronomical Sciences of the National Science Foundation December 17-19, 2014 Committee Membership: Katelyn Allers, Bucknell University; Nancy Brickhouse, Harvard-Smithsonian Ctr. for Astrophysics; Mike Briley, Appalachian State University; Robert Brown, Cornell University (retired) [1]; Lynn Cominsky, Vice Chair, Sonoma State University; Kelle Cruz, City University of New York, Hunter College; Kelly Holley-Bockelmann, Vanderbilt University; Mark Krumholz, University of California at Santa Cruz; Charles McGruder, Western Kentucky University; Windsor Morgan, Dickinson College; Tim Paglione, City University of New York, York College; Lawrence Ramsey, Chair, The Pennsylvania State University; Lou Strolger, Space Telescope Science Institute; Hugh Van Horn, University of Rochester (retired); William Zajc, Columbia University. [1] Deceased 20 December 2014. Report of the 2014 MPS/AST Committee of Visitors Table of Contents: 1 Executive Summary; 1.1 Organization and Management of AST; 1.2 Research Grants Programs and Proposal Review Processes; 1.3 Facilities Management