Performance Analysis of the Technology Chess Program James

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Little Chess Evaluation Compendium by Lyudmil Tsvetkov, Sofia, Bulgaria

Little Chess Evaluation Compendium, by Lyudmil Tsvetkov, Sofia, Bulgaria. Version from 2012, an update to an original version first released in 2010. The purpose will be to give a fairly precise evaluation for all the most important terms. Some authors might find some interesting ideas. For abbreviations, p will mean pawns, cp – centipawns (if the unit is not indicated, it is centipawns), mps – millipawns; b – bishop, n – knight, k – king, q – queen and r – rook. Also b will mean black and w – white. We will assume that the bishop value is 3ps, knight value – 3ps, rook value – 4.5ps and queen value – 9ps. In brackets I will be giving purely speculative numbers for possible Elo increase if a specific function is implemented (only for the functions that might not be generally implemented). The exposition will be split in 3 parts, reflecting that opening, middlegame and endgame are very different from one another. The essence of chess in two words: Chess is a game of capturing. This is the single most important thing worth considering. But in order to be able to capture well, you should consider a variety of other specific rules. The more rules you consider, the better you will be able to capture. If you consider 10 rules, you will be able to capture. If you consider 100 rules, you will be able to capture in a sufficiently good way. If you consider 1000 rules, you will be able to capture in an excellent way. The philosophy of chess: Chess is a game of correlation, and not a game of fixed values.
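
The piece values assumed in this excerpt can be turned into a simple material count. The sketch below is only an illustration: the function name and the string encoding of the pieces are assumptions made here and do not come from the compendium.

```python
# Piece values stated in the excerpt, converted to centipawns
# (pawn = 100 cp, bishop = knight = 3 pawns, rook = 4.5 pawns, queen = 9 pawns).
PIECE_VALUES_CP = {"p": 100, "n": 300, "b": 300, "r": 450, "q": 900}


def material_balance_cp(white_pieces: str, black_pieces: str) -> int:
    """Return White's material minus Black's, in centipawns.

    Each argument is a string of piece letters (hypothetical encoding);
    kings and unknown letters count as zero.
    """
    def total(pieces: str) -> int:
        return sum(PIECE_VALUES_CP.get(ch, 0) for ch in pieces.lower())

    return total(white_pieces) - total(black_pieces)


if __name__ == "__main__":
    # Rook and pawn against bishop and knight under these values.
    print(material_balance_cp("rp", "bn"))
```

Under these fixed values a rook and pawn come out slightly behind two minor pieces, which is the kind of static baseline the excerpt's "game of correlation" remark argues is not sufficient on its own.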

Learning Long-Term Chess Strategies from Databases

Mach Learn (2006) 63:329–340 DOI 10.1007/s10994-006-6747-7 TECHNICAL NOTE Learning long-term chess strategies from databases Aleksander Sadikov · Ivan Bratko Received: March 10, 2005 / Revised: December 14, 2005 / Accepted: December 21, 2005 / Published online: 28 March 2006 Springer Science + Business Media, LLC 2006 Abstract We propose an approach to the learning of long-term plans for playing chess endgames. We assume that a computer-generated database for an endgame is available, such as the king and rook vs. king, or king and queen vs. king and rook endgame. For each position in the endgame, the database gives the “value” of the position in terms of the minimum number of moves needed by the stronger side to win given that both sides play optimally. We propose a method for automatically dividing the endgame into stages characterised by different objectives of play. For each stage of such a game plan, a stage-specific evaluation function is induced, to be used by minimax search when playing the endgame. We aim at learning playing strategies that give good insight into the principles of playing specific endgames. Games played by these strategies should resemble human expert’s play in achieving goals and subgoals reliably, but not necessarily as quickly as possible. Keywords Machine learning . Computer chess . Long-term Strategy . Chess endgames . Chess databases 1. Introduction The standard approach used in chess playing programs relies on the minimax principle, efficiently implemented by alpha-beta algorithm, plus a heuristic evaluation function. This approach has proved to work particularly well in situations in which short-term tactics are prevalent, when evaluation function can easily recognise within the search horizon the consequences of good or bad moves.

CHESS FEDERATION Newburgh, N.Y

Announcing an important new series of books on CONTEMPORARY CHESS OPENINGS. Published by Chess Digest, Inc. General Editor: R. G. Wade. The first book in this current series is a fresh look at THE KING'S INDIAN DEFENCE by Leonard Barden, William Hartston, and Raymond Keene. Two of the most brilliant young players pool their talents with one of the world's well-established authorities on openings to produce a modern, definitive study of the King's Indian Defence. An essential work of reference which will help master and amateur alike to win more games. The King's Indian Defence has established itself as one of the most lively and popular openings and this book provides a systematic description of its strategy, tactics, and variations. Written to provide instruction and understanding, it contains well-chosen illustrative games from actual play, many of them shown to the very last move, and each with an analysis of its salient features. An excellent cloth-bound book in English Descriptive Notation, with clear type, good diagrams, and an easy-to-follow format. The highest quality at a very reasonable price. Postpaid, only $4.40. DON'T WAIT, ORDER NOW: THE BOOK YOU MUST HAVE! FLANK OPENINGS by Raymond Keene. Raymond Keene, brightest star in the rising galaxy of young British players, was undefeated in the 1968 British Championship and in the 1968 Olympiad at Lugano. In this book, he passes along to you the benefit of his studies of the King's Indian Attack and the Reti, Catalan, English, and Benko Larsen openings. The notation is Algebraic, the notes comprehensive but easily understood and right to the point.

THE PSYCHOLOGY of CHESS: an Interview with Author Fernand Gobet

FM MIKE KLEIN, the Chess Journalists Chess Life of America's (CJA) 2017 Chess Journalist of the Year, stepped OCTOBER to the other side of the camera for this month's cover shoot. COLUMNS For a list of all CJA CHESS TO ENJOY / ENTERTAINMENT 12 award winners, It Takes A Second please visit By GM Andy Soltis chessjournalism.org. 14 BACK TO BASICS / READER ANNOTATIONS The Spirits of Nimzo and Saemisch By GM Lev Alburt 16 IN THE ARENA / PLAYER OF THE MONTH Slugfest at the U.S. Juniors By GM Robert Hess 18 LOOKS AT BOOKS / SHOULD I BUY IT? Training Games By John Hartmann 46 SOLITAIRE CHESS / INSTRUCTION Go Bogo! By Bruce Pandolfini 48 THE PRACTICAL ENDGAME / INSTRUCTION How to Write an Endgame Thriller By GM Daniel Naroditsky DEPARTMENTS 5 OCTOBER PREVIEW / THIS MONTH IN CHESS LIFE AND US CHESS NEWS 20 GRAND PRIX EVENT / WORLD OPEN 6 COUNTERPLAY / READERS RESPOND Luck and Skill at the World Open BY JAMAAL ABDUL-ALIM 7 US CHESS AFFAIRS / GM Illia Nyzhnyk wins clear first at the 2018 World Open after a NEWS FOR OUR MEMBERS lucky break in the penultimate round. 8 FIRST MOVES / CHESS NEWS FROM AROUND THE U.S. 28 COVER STORY / ATTACKING CHESS 9 FACES ACROSS THE BOARD / How Practical Attacking Chess is Really Conducted BY AL LAWRENCE BY IM ERIK KISLIK 51 TOURNAMENT LIFE / OCTOBER The “secret sauce” to good attacking play isn’t what you think it is. 71 CLASSIFIEDS / OCTOBER CHESS PSYCHOLOGY / GOBET SOLUTIONS / OCTOBER 32 71 The Psychology of Chess: An Interview with Author 72 MY BEST MOVE / PERSONALITIES Fernand Gobet THIS MONTH: FM NATHAN RESIKA BY DR.

April 2021 COLORADO CHESS INFORMANT

Volume 48, Number 2 COLORADO STATE CHESS ASSOCIATION April 2021 COLORADO CHESS INFORMANT COLORADO SCHOLASTIC ONLINE CHAMPIONSHIP The Colorado State Chess Association, Incorporated, is a Section 501(C)(3) tax exempt, non-profit educational corporation formed to promote chess in Colorado. Contributions are tax deductible. Dues are $15 a year. Youth (under 20) and Senior (65 or older) memberships are $10. Family memberships are available to additional family members for $3 off the regular dues. Scholastic tournament membership is available for $3. ● Send address changes to - Attn: Alexander Freeman to the email address [email protected]. From the Editor With measured steps, the Colorado chess scene may just be coming back to life. It has been announced that the Colorado Open has been scheduled for Labor Day weekend this year - albeit with safety protocols in place. Be sure to check out the website as the date nears (www.ColoradoChess.com) because as we are aware, things could change. The Denver Chess Club has also announced a tournament in June of this year - go to their website (www.DenverChess.com) for more information on that one. It is with a heavy heart and profound sadness that a friend and ‘chess bud’ of mine has passed away. Not long after his 70th birthday in January, Michael Wokurka collapsed at his home on the 22nd - and never regained consciousness. No prior warning or health issues were known. His obituary online is listed here: https://tinyurl.com/2rz9zrca. He loved the game of chess, and

Kasparov's Nightmare!! Bobby Fischer Challenges IBM to Simul IBM

California Chess Journal Volume 20, Number 2 April 1st 2004 $4.50 Kasparov’s Nightmare!! Bobby Fischer Challenges IBM to Simul IBM Scrambles to Build 25 Deep Blues! Past Vs Future Special Issue • Young Fischer Fires Up S.F. • Fischer commentates 4 Boyscouts • Building your “Super Computer” • Building Fischer’s Dream House • Raise $500 playing chess! • Fischer Articles Galore! California Chess Journal Table of Contents: 2004 Cal Chess Scholastic Championships (The annual scholastic tourney finishes in Santa Clara); FISCHER AND THE DEEP BLUE (A miracle has happened in the Philippines!); Why Every Chess Player Needs a Computer (Some titles speak for themselves); Building Your Chess Dream Machine (Some helpful hints when shopping for a silicon chess opponent); Young Fischer in San Francisco 1957 (A complete accounting of an untold story that happened here in the bay area); 1957 Fischer Game Spotlight (One game from the tournament commentated move by move). Editor: Eric Hicks Contributors: Daren Dillinger, FM Eric Schiller, IM John Donaldson Photographers: Richard Shorman Historical Consul: Kerry Lawless Founding Editor: Hans Poschmann CalChess Board President: Elizabeth Shaughnessy Vice-President: Josh Bowman Treasurer: Richard Peterson Members at

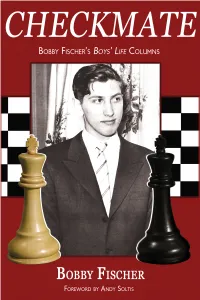

Checkmate Bobby Fischer's Boys' Life Columns

Bobby Fischer’s Boys’ Life Columns Checkmate Bobby Fischer’s Boys’ Life Columns by Bobby Fischer Foreword by Andy Soltis 2016 Russell Enterprises, Inc. Milford, CT USA 1 1 Checkmate Bobby Fischer’s Boys’ Life Columns by Bobby Fischer ISBN: 978-1-941270-51-6 (print) ISBN: 978-1-941270-52-3 (eBook) © Copyright 2016 Russell Enterprises, Inc. & Hanon W. Russell All Rights Reserved No part of this book may be used, reproduced, stored in a retrieval system or transmitted in any manner or form whatsoever or by any means, electronic, electrostatic, magnetic tape, photocopying, recording or otherwise, without the express written permission from the publisher except in the case of brief quotations embodied in critical articles or reviews. Chess columns written by Bobby Fischer appeared from December 1966 through January 1970 in the magazine Boys’ Life, published by the Boy Scouts of America. Russell Enterprises, Inc. thanks the Boy Scouts of America for its permission to reprint these columns in this compilation. Published by: Russell Enterprises, Inc. P.O. Box 3131 Milford, CT 06460 USA http://www.russell-enterprises.com [email protected] Editing and proofreading by Peter Kurzdorfer Cover by Janel Lowrance 2 Table of Contents Foreword 4 April 53 by Andy Soltis May 59 From the Publisher 6 Timeline 60 June 61 July 69 Timeline 7 Timeline 70 August 71 1966 September 77 December 9 October 78 November 84 1967 February 11 1969 March 17 February 85 April 19 March 90 Timeline 22 May 23 April 91 June 24 July 31 May 98 Timeline 32 June 99 August 33 July 107 September 37 August 108 Timeline 38 September 115 October 39 October 116 November 46 November 122 December 123 1968 February 47 1970 March 52 January 128 3 Checkmate Foreword Bobby Fischer’s victory over Emil Nikolic at Vinkovci 1968 is one of his most spectacular, perhaps the last great game he played in which he was the bold, go-for-mate sacrificer of his earlier years. -

Chapter 10, Different Objectives of Play

Chapter 10 Different objectives of play [The normal objective of a game of chess is to give checkmate. Some of the games which can be played with chessmen have quite different objectives, and two of them, Extinction Chess and Losing Chess, have proved to be among the most popular of all chess variants.] 10.1 Capturing or baring the king Capturing the king. The Chess Monthly hosted a lively debate (1893-4) on the suggestion of a Mr Wordsworth Donisthorpe, whose very name seems to carry authority, that check and checkmate, and hence stalemate, should be abolished, the game ending with the capture of the king. The purpose of this proposed reform was to reduce the number of draws then (as now) prevalent in master play. Donisthorpe claimed that both Blackburne and the American master James Mason were in favour of the change, adding ‘I have little doubt the reform would obtain the support of both Universities’ which says something about the standing of Oxford and ... than about the snobbery of Mr Donisthorpe!] Baring the king. The rules of the old chess allowed a (lesser) win by ‘bare king’ and stalemate, and Réti and Bronstein have favoured its reintroduction. [I haven’t traced the Bronstein reference, but Réti’s will be found on page 178 of the English edition of Modern Ideas in Chess. It is in fact explicit only in respect of stalemate, though the words ‘the original rules’ within it can be read as supporting bare king as well, and perhaps I ought to quote it in full. After expounding the ancient rules, he continues: ‘Those were romantic times for chess.

A GUIDE to SCHOLASTIC CHESS (11Th Edition Revised June 26, 2021)

A GUIDE TO SCHOLASTIC CHESS (11th Edition Revised June 26, 2021) PREFACE Dear Administrator, Teacher, or Coach This guide was created to help teachers and scholastic chess organizers who wish to begin, improve, or strengthen their school chess program. It covers how to organize a school chess club, run tournaments, keep interest high, and generate administrative, school district, parental and public support. I would like to thank the United States Chess Federation Club Development Committee, especially former Chairman Randy Siebert, for allowing us to use the framework of The Guide to a Successful Chess Club (1985) as a basis for this book. In addition, I want to thank FIDE Master Tom Brownscombe (NV), National Tournament Director, and the United States Chess Federation (US Chess) for their continuing help in the preparation of this publication. Scholastic chess, under the guidance of US Chess, has greatly expanded and made it possible for the wide distribution of this Guide. I look forward to working with them on many projects in the future. The following scholastic organizers reviewed various editions of this work and made many suggestions, which have been included. Thanks go to Jay Blem (CA), Leo Cotter (CA), Stephan Dann (MA), Bob Fischer (IN), Doug Meux (NM), Andy Nowak (NM), Andrew Smith (CA), Brian Bugbee (NY), WIM Beatriz Marinello (NY), WIM Alexey Root (TX), Ernest Schlich (VA), Tim Just (IL), Karis Bellisario and many others too numerous to mention. Finally, a special thanks to my wife, Susan, who has been patient and understanding. Dewain R. Barber Technical Editor: Tim Just (Author of My Opponent is Eating a Doughnut and Just Law; Editor 5th, 6th and 7th editions of the Official Rules of Chess). -

Extended Null-Move Reductions

Extended Null-Move Reductions Omid David-Tabibi1 and Nathan S. Netanyahu1,2 1 Department of Computer Science, Bar-Ilan University, Ramat-Gan 52900, Israel [email protected], [email protected] 2 Center for Automation Research, University of Maryland, College Park, MD 20742, USA [email protected] Abstract. In this paper we review the conventional versions of null-move pruning, and present our enhancements which allow for a deeper search with greater accuracy. While the conventional versions of null-move pruning use reduction values of R ≤ 3, we use an aggressive reduction value of R = 4 within a verified adaptive configuration which maximizes the benefit from the more aggressive pruning, while limiting its tactical liabilities. Our experimental results using our grandmaster-level chess program, Falcon, show that our null-move reductions (NMR) outperform the conventional methods, with the tactical benefits of the deeper search dominating the deficiencies. Moreover, unlike standard null-move pruning, which fails badly in zugzwang positions, NMR is impervious to zugzwangs. Finally, the implementation of NMR in any program already using null-move pruning requires a modification of only a few lines of code. 1 Introduction Chess programs trying to search the same way humans think by generating “plausible” moves dominated until the mid-1970s. By using extensive chess knowledge at each node, these programs selected a few moves which they considered plausible, and thus pruned large parts of the search tree. However, plausible-move generating programs had serious tactical shortcomings, and as soon as brute-force search programs such as Tech [17] and Chess 4.x [29] managed to reach depths of 5 plies and more, plausible-move generating programs frequently lost to brute-force searchers due to their tactical weaknesses.
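
For readers unfamiliar with the conventional technique this abstract builds on, here is a minimal sketch of standard null-move pruning inside a negamax alpha-beta search. It is not the verified adaptive NMR scheme the paper proposes. The `Race` toy game, the `null_r` parameter name, and the choice to skip the null move when the window has no finite upper bound are all assumptions made for illustration; the toy game has no zugzwang, so passing is never better than moving.

```python
from dataclasses import dataclass

INF = 10**9


@dataclass(frozen=True)
class Race:
    """Hypothetical toy game: each move adds 1 or 2 to the mover's score."""
    mine: int = 0    # score of the side to move
    theirs: int = 0  # score of the opponent

    def evaluate(self):
        return self.mine - self.theirs

    def moves(self):
        return (1, 2)

    def make(self, step):
        # After a move the opponent becomes the side to move.
        return Race(self.theirs, self.mine + step)

    def make_null(self):
        # A "null move": pass the turn without changing anything.
        return Race(self.theirs, self.mine)


def search(pos, depth, alpha=-INF, beta=INF, null_r=2, allow_null=True):
    """Fail-soft negamax with optional null-move pruning (null_r=None disables it)."""
    if depth == 0:
        return pos.evaluate()
    if null_r is not None and allow_null and depth > null_r and beta < INF:
        # Give the opponent a free move and search shallower by R plies
        # with a minimal window around beta.
        score = -search(pos.make_null(), depth - 1 - null_r,
                        -beta, -beta + 1, null_r, False)
        if score >= beta:
            return score  # still good enough even after passing: prune
    best = -INF
    for move in pos.moves():
        score = -search(pos.make(move), depth - 1, -beta, -alpha, null_r, True)
        best = max(best, score)
        alpha = max(alpha, score)
        if alpha >= beta:
            break
    return best


if __name__ == "__main__":
    print(search(Race(), 5, null_r=2), search(Race(), 5, null_r=None))
```

Consecutive null moves are disallowed through `allow_null`, a common safeguard. In real chess the pruning step is unsound in zugzwang positions, which is the weakness the abstract says its method addresses.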

{Download PDF} Kasparov Versus Deep Blue : Computer Chess

KASPAROV VERSUS DEEP BLUE : COMPUTER CHESS COMES OF AGE PDF, EPUB, EBOOK Monty Newborn | 322 pages | 31 Jul 2012 | Springer-Verlag New York Inc. | 9781461274773 | English | New York, NY, United States Kasparov versus Deep Blue : Computer Chess Comes of Age PDF Book In terms of human comparison, the already existing hierarchy of rated chess players throughout the world gave engineers a scale with which to easily and accurately measure the success of their machine. This was the position on the board after 35 moves. Nxh5 Nd2 Kg4 Bc7 Famously, the mechanical Turk developed in thrilled and confounded luminaries as notable as Napoleon Bonaparte and Benjamin Franklin. Kf1 Bc3 Deep Blue. The only reason Deep Blue played in that way, as was later revealed, was because that very same day of the game the creators of Deep Blue had inputted the variation into the opening database. KevinC The allegation was that a grandmaster, presumably a top rival, had been behind the move. When it's in the open, anyone could respond to them, including people who do understand them. Qe2 Qd8 Bf3 Nd3 IBM Research. Nearly two decades later, the match still fascinates This week Time Magazine ran a story on the famous series of matches between IBM's supercomputer and Garry Kasparov. Because once you have an agenda, where does indifference fit into the picture? Raa6 Ba7 Black would have acquired strong counterplay. In fact, the first move I looked at was the immediate 36 Be4, but in a game, for the same safety-first reasons the black counterplay with a5 I might well have opted for 36 axb5. -

PUBLIC SUBMISSION Status: Pending Post Tracking No

As of: January 02, 2020 Received: December 20, 2019 PUBLIC SUBMISSION Status: Pending_Post Tracking No. 1k3-9dz4-mt2u Comments Due: January 10, 2020 Submission Type: Web Docket: PTO-C-2019-0038 Request for Comments on Intellectual Property Protection for Artificial Intelligence Innovation Comment On: PTO-C-2019-0038-0002 Intellectual Property Protection for Artificial Intelligence Innovation Document: PTO-C-2019-0038-DRAFT-0009 Comment on FR Doc # 2019-26104 Submitter Information Name: Yale Robinson Address: 505 Pleasant Street Apartment 103 Malden, MA, 02148 Email: [email protected] General Comment In the attached PDF document, I describe the history of chess puzzle composition with a focus on three intellectual property issues that determine whether a chess composition is considered eligible for publication in a magazine or tournament award: (1) having one unique solution; (2) not being anticipated, and (3) not being submitted simultaneously for two different publications. The process of composing a chess endgame study using computer analysis tools, including endgame tablebases, is cited as an example of the partnership between an AI device and a human creator. I conclude with a suggestion to distinguish creative value from financial profit in evaluating IP issues in AI inventions, based on the fact that most chess puzzle composers follow IP conventions despite lacking any expectation of financial profit. Attachments Letter to USPTO on AI and Chess Puzzle Composition December 20, 2019 From: Yale Yechiel N. Robinson, Esq. 505 Pleasant Street #103 Malden, MA 02148 Email: [email protected] To: Andrei Iancu Director of the U.S.