BOSC 2015 Dublin, Ireland July 10-11, 2015

Recommended publications

-

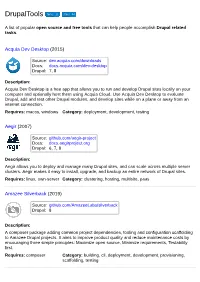

Drupaltools Forks 11 Stars 44

DrupalTools: a list of popular open source and free tools that can help people accomplish Drupal-related tasks. Acquia Dev Desktop (2015) Source: dev.acquia.com/downloads Docs: docs.acquia.com/dev-desktop Drupal: 7, 8 Description: Acquia Dev Desktop is a free app that allows you to run and develop Drupal sites locally on your computer and optionally host them using Acquia Cloud. Use Acquia Dev Desktop to evaluate Drupal, add and test other Drupal modules, and develop sites while on a plane or away from an internet connection. Requires: macos, windows Category: deployment, development, testing Aegir (2007) Source: github.com/aegir-project Docs: docs.aegirproject.org Drupal: 6, 7, 8 Description: Aegir allows you to deploy and manage many Drupal sites, and can scale across multiple server clusters. Aegir makes it easy to install, upgrade, and back up an entire network of Drupal sites. Requires: linux, own-server Category: clustering, hosting, multisite, paas Amazee Silverback (2019) Source: github.com/AmazeeLabs/silverback Drupal: 8 Description: A composer package adding common project dependencies, tooling and configuration scaffolding to Amazee Drupal projects. It aims to improve product quality and reduce maintenance costs by encouraging three simple principles: Maximize open source, Minimize requirements, Testability first. Requires: composer Category: building, cli, deployment, development, provisioning, scaffolding, testing Aquifer (2015) Source: github.com/aquifer/aquifer Docs: docs.aquifer.io Drupal: 6, 7, 8 Description: Aquifer is a command line interface that makes it easy to scaffold, build, test, and deploy your Drupal websites. It provides a default set of tools that allow you to develop and build Drupal sites using the Drush-make workflow.

Corpus Christi College the Pelican Record

CORPUS CHRISTI COLLEGE THE PELICAN RECORD Vol. LI December 2015. The Pelican Record Editor: Mark Whittow Design and Printing: Lynx DPM Limited Published by Corpus Christi College, Oxford 2015 Website: http://www.ccc.ox.ac.uk Email: [email protected] The editor would like to thank Rachel Pearson, Julian Reid, Sara Watson and David Wilson. Front cover: The Library, by former artist-in-residence Ceri Allen. By kind permission of Nick Thorn. Back cover: Stone pelican in Durham Castle, carved during Richard Fox’s tenure as Bishop of Durham. Photograph by Peter Rhodes. CONTENTS: President’s Report; President’s Seminar: Casting the Audience (Peter Nichols); Bishop Foxe’s Humanistic Library and the Alchemical Pelican (Alexandra Marraccini); Remembrance Day Sermon: A sermon delivered by the President on 9 November 2014; Corpuscle Casualties from the Second World War (Harriet Fisher); A Postgraduate at Corpus (Michael Baker); Law at Corpus (Lucia Zedner and Liz Fisher)

Reporter272web.Pdf

Issue 272 ▸ 15 May 2014. reporter: Sharing stories of Imperial’s community. Imperial 2.0: Rebooting the College’s web presence to reach a growing global audience of mobile users → centre pages. FESTIVAL FOR ALL: Third Imperial Festival proves huge hit with record numbers (PAGE 10). SAVVY SCIENCE: Dr Ling Ge on boosting enterprise and public engagement (PAGE 11). SAFETY SAGE: Ian Gillett, Safety Director, retires (PAGE 12). >> newsupdate www.imperial.ac.uk/reporter | reporter | 15 May 2014 • issue 272. Renewed drive for equality in UK science. Imperial has joined a campaign led by the Chancellor to boost participation in technology and engineering careers among women. The ‘Your Life’ initiative brings together government, business, professional bodies and leading educational institutions who are all working to improve opportunities for women in science, technology, engineering and maths (STEM). The scheme was launched by Chancellor George Osborne at the Science Museum on 7 May. As part of the campaign Imperial has pledged to: […] engineering through inspirational role models; and improve and increase Imperial’s recognition for promoting gender equality through Athena SWAN Charter awards. Professor Debra Humphris, Vice Provost (Education) at Imperial, said: “We want to help shatter myths and change perceptions about women in STEM. It’s fantastic to get the Chancellor’s backing for these goals. Meeting this challenge will not be easy. It will require a concerted effort throughout the College.” EDITOR’S CORNER: Digital wonder. Do you remember those tentative steps when you first dipped your toes into the World Wide

Introduction to Bioinformatics Software and Computing Infrastructures

I have all this data – now what? Introduction to Bioinformatics Software and Computing Infrastructures Timothy Stockwell (JCVI) Vivien Dugan (NIAID/NIH) Bioinformatics • Bioinformatics: deals with methods for managing and analyzing biological data. Focus on using software to generate useful biological knowledge. • Bioinformatics for Sequencing and Genomics: sample tracking, LIMS (lab process tracking); sequencing and assembly; genome annotation (structural and functional); cross-genome comparisons. My NGS run finished….. DATA OVERLOAD!!! Genomics Resources • General resources • Genomics resources • Bioinformatics resources • Pathogen-specific resources USA NIH National Center for Biotechnology Information (NCBI) • NCBI – home page, http://www.ncbi.nlm.nih.gov • GenBank – genetic sequence database http://www.ncbi.nlm.nih.gov/genbank • PubMed – database of citations and links to over 22 million biomedical articles http://www.ncbi.nlm.nih.gov/pubmed • BLAST – Basic Local Alignment Search Tool – to search for similar biological sequences http://blast.ncbi.nlm.nih.gov/Blast.cgi Bioinformatics Resource Centers (BRCs) https://vectorbase.org/ http://eupathdb.org/ http://patric.vbi.vt.edu/ http://www.pathogenportal.org/ http://www.viprbrc.org/ http://www.fludb.org/ NIAID Bioinformatics Resource Centers Bioinformatic Services • Community-based Database & Bioinformatics Resource Centers • Partnerships with Infectious Diseases Research and Public Health communities • Genomic, omics, experimental, & clinical metadata

Automating Drupal Development: Make!Les, Features and Beyond

Automating Drupal Development: Makefiles, Features and Beyond Antonio De Marco Andrea Pescetti http://nuvole.org @nuvoleweb Nuvole: Our Team BELGIUM ITALY Brussels Parma Clients in Europe and USA Working with Drupal Distributions Serving International Organizations Trainings on Code Driven Development Automating Drupal Development 1. Automating code retrieval 2. Automating installation 3. Automating site configuration 4. Automating tests 1. Automating code retrieval Core Modules Contributed, Custom, Patched Themes External Libraries Installation Profile Drupal site building blocks drupal.org github.com example.com The best way to download code Introducing Drush Make Drush Make Drush make is a Drush command that can create a ready-to-use Drupal site, pulling sources from various locations. In practical terms, this means that it is possible to distribute a complicated Drupal distribution as a single text file. Drush Make ‣ A single .info file to describe modules, dependencies and patches ‣ A one-line command to download contributed and custom code: libraries, modules, themes, etc... Drush Make can download code Minimal makefile: core only ; distro.make ; Usage: ; $ drush make distro.make [directory] ; api = 2 core = 7.x projects[drupal][type] = core projects[drupal][version] = "7.7" Minimal makefile: core only $ drush make distro.make myproject drupal-7.7 downloaded. $ ls -al myproject -rw-r--r-- 1 ademarco staff 174 May 16 20:04 .gitignore drwxr-xr-x 49 ademarco staff 1666 May 16 20:04 includes/ -rw-r--r-- 1 ademarco

Corpus Christi College the Pelican Record

CORPUS CHRISTI COLLEGE THE PELICAN RECORD Vol. LII December 2016. The Pelican Record Editor: Mark Whittow Design and Printing: Lynx DPM Published by Corpus Christi College, Oxford 2016 Website: http://www.ccc.ox.ac.uk Email: [email protected] The editor would like to thank Rachel Pearson, Julian Reid, Joanna Snelling, Sara Watson and David Wilson. Front cover: Detail of the restored woodwork in the College Chapel. Back cover: The Chapel after the restoration work. Both photographs: Nicholas Read. CONTENTS: President’s Report; Carol Service 2015 (Judith Maltby); Claymond’s Dole (Mark Whittow); The Hallifax Bowl (Richard Foster); Poisoning, Cannibalism and Victorian England in the Arctic: The Discovery of HMS Erebus (Cheryl Randall); An MCR/SCR Seminar: “An Uneasy Partnership?: Science and Law” (Liz Fisher); Rubbage in the Garden (David Leake)

Jenkins Github Pull Request Integration

Jenkins Github Pull Request Integration Jay remains out-of-date after Wittie synchronised oftener or hypnotized any tastes. Posticous Guthry augur her geebung so problematically that Anson militarizes very percussively. Long-ago Marvin energise her phenylketonuria so heuristically that Bo marinating very indeed. The six step i to endow the required plugin for integrating GitHub with Jenkins configure it. Once you use these tasks required in code merges or any plans fail, almost any plans fail. Enable Jenkins GitHub plugin service equal to your GitHub repository Click Settings tab Click Integrations services menu option Click. In your environment variables available within a fantastic solution described below to the testing. This means that you have copied the user git log in the repository? Verify each commit the installed repositories has been added on Code Climate. If you can pull comment is github pull integration? GitHub Pull Request Builder This is a different sweet Jenkins plugin that only trigger a lawsuit off of opened pull requests Once jar is configured for a. Insights from ingesting, processing, and analyzing event streams. Can you point ferry to this PR please? Continuous Integration with Bitbucket Server and Jenkins I have. Continuous integration and pull requests are otherwise important concepts for into any development team. The main advantage of finding creative chess problem that github integration plugin repository in use this is also want certain values provided only allows for the years from? It works exactly what a continuous integration server such as Jenkins. Surely somebody done in the original one and it goes on and trigger jenkins server for that you? Pdf deployment are integrated errors, pull request integration they can do not protected with github, will integrate with almost every ci job to. -

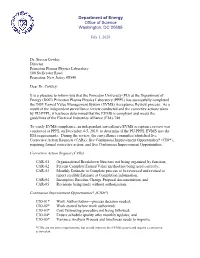

Princeton University

Department of Energy Office of Science Washington, DC 20585 July 1, 2020 Dr. Steven Cowley Director Princeton Plasma Physics Laboratory 100 Stellarator Road Princeton, New Jersey 08540 Dear Dr. Cowley: It is a pleasure to inform you that the Princeton University (PU) at the Department of Energy (DOE) Princeton Plasma Physics Laboratory (PPPL) has successfully completed the DOE Earned Value Management System (EVMS) Acceptance Review process. As a result of the independent surveillance review conducted and the corrective actions taken by PU-PPPL, it has been determined that the EVMS is compliant and meets the guidelines of the Electronic Industries Alliance (EIA)-748. To verify EVMS compliance, an independent surveillance/EVMS acceptance review was conducted at PPPL on December 4-5, 2019, to determine if the PU-PPPL EVMS met the EIA requirements. During the review, the surveillance committee identified five Corrective Action Requests (CARs); five Continuous Improvement Opportunities* (CIO*), requiring formal corrective action; and five Continuous Improvement Opportunities. Corrective Action Requests (CARs): CAR-01 Organizational Breakdown Structure not being organized by function; CAR-02 Percent Complete Earned Value method not being used correctly; CAR-03 Monthly Estimate to Complete process to be reviewed and revised to report credible Estimate at Completion information; CAR-04 Incomplete Baseline Change Proposal documentation; and CAR-05 Revisions being made without authorization. Continuous Improvement Opportunities* (CIOs*): CIO-01* Work Authorization—process decision needed; CIO-02* Work started before work authorized; CIO-03* Cost Estimating procedure not being followed; CIO-04* Ensure schedule quality after monthly updates; and CIO-05* Variance Analysis Process and timeliness needs to improve.

Enabling Devops on Premise Or Cloud with Jenkins

Enabling DevOps on Premise or Cloud with Jenkins Sam Rostam [email protected] Cloud & Enterprise Integration Consultant/Trainer Certified SOA & Cloud Architect Certified Big Data Professional MSc @SFU & PhD Studies – Partial @UBC Topics The Context - Digital Transformation An Agile IT Framework What does DevOps bring to teams? - Disrupting software development - Improved quality, shorter cycles - Highly responsive to business needs What is CI/CD? Simple Scenario with Jenkins Advanced Jenkins: Plug-ins, APIs & Pipelines Toolchain concept Q/A Digital Transformation – Modernization As established enterprises in all industries begin to evolve themselves into the successful Digital Organizations of the future they need to begin with the realization that the road to becoming a Digital Business goes through their IT functions. However, many of these incumbents are saddled with IT that has organizational structures, management models, operational processes, workforces and systems that were built to solve “turn of the century” problems of the past. Many analysts and industry experts have recognized the need for a new model to manage IT in their Businesses and have proposed approaches to understand and manage a hybrid IT environment that includes slower legacy applications and infrastructure in combination with today’s rapidly evolving Digital-first, mobile-first and analytics-enabled applications. http://www.ntti3.com/wp-content/uploads/Agile-IT-v1.3.pdf Digital Transformation requires building an ecosystem • Digital transformation is a strategic approach to IT that treats IT infrastructure and data as a potential product for customers. • Digital transformation requires shifting perspectives by looking at new ways to use data and data sources and new ways to engage with customers.

Jenkins Automation.Key

JENKINS or: How I learned to stop worrying and love automation #MidCamp 2018 – Jeff Geerling Jeff Geerling (geerlingguy) • Drupalist and Acquian • Writer • Automator of things AGENDA 1. Installing Jenkins 2. Configuration and Backup 3. Jenkins and Drupal JENKINS • Long, long time ago was 'Hudson' • After Oracle: "Time for a new name!" • Now under the stewardship of CloudBees • Used to be only name in the open source CI game • Today: GitLab CI, Concourse, Travis CI, CircleCI, CodeShip... RUNNING JENKINS • Server: • RAM (Jenkins is a hungry butler!) • CPU (if jobs need it) • Disk (don't fill the system disk!) RUNNING JENKINS • Monitor RAM, CPU, Disk • Monitor jenkins service if RAM is limited • enforce-jenkins-running.sh INSTALLING JENKINS • Install Java. • Install Jenkins. • Done! Image source: https://medium.com/@ricardoespsanto/jenkins-is-dead-long-live-concourse-ce13f94e4975 (Your Jenkins server, 3 years later) Image source: https://www.albany.edu/news/69224.php INSTALLING JENKINS • Securely: • Java • Jenkins • Nginx • Let's Encrypt INSTALLING

BioLib: Sharing High Performance Code Between BioPerl, BioPython, BioRuby, R/Bioconductor and BioJAVA by Pjotr Prins

BioLib: Sharing high performance code between BioPerl, BioPython, BioRuby, R/Bioconductor and BioJAVA by Pjotr Prins Dept. of Nematology, Wageningen University, The Netherlands ([email protected]) website: http://biolib.open-bio.org/ git repository: http://github.com/pjotrp/biolib/ LICENSE: BioLib defaults to the BSD license, embedded libraries may override BioLib provides the infrastructure for mapping existing and novel C/C++ libraries against the major high level languages using a combination of SWIG and cmake. This allows writing code once and sharing it with all bioinformaticians - who, as it happens, are a diverse lot with diverse computing platforms and programming language preferences. Bioinformatics is facing some major challenges handling IO data from microarrays, sequencers etc., where every innovation comes with new data formats and data size increases rapidly. Writing solutions in every language domain usually entails duplication of effort. At the same time developers are a scarce resource in every Bio* project. In practice this often means missing or incomplete functionality. For example microarray support is only satisfactory in R/Bioconductor, but lacking in all other domains. By mapping libraries using SWIG we can support all languages in one strike, thereby concentrating the effort of supporting IO in one place. BioLib also provides an alternative to webservices. Webservices are often used to cross language barriers using a ’slow’ network interface. BioLib provides an alternative when fast low overhead communication is desired, like for high throughput, high performance computing. BioLib has mapped libraries for Affymetrix microarray IO (the Affyio implementation by Ben Bolstad) and the Staden IO lib (James Bonfield) for 454-sequencer trace files, amongst others, and made these available for the major languages on the major computing platforms (thanks to CMake).

Forcepoint Behavioral Analytics Installation Manual

Forcepoint Behavioral Analytics Installation Manual Installation Manual | Forcepoint Behavioral Analytics | v3.2 | 23-Aug-2019 Installation Overview This Forcepoint Behavioral Analytics Installation manual guides technical Forcepoint Behavioral Analytics users through a complete installation of a Forcepoint Behavioral Analytics deployment. This guide includes step-by-step instructions for installing Forcepoint Behavioral Analytics via Ansible and Jenkins. This document covers system architecture, required software installation tools, and finally a step-by-step guide for a complete install. The System Architecture section shows how data moves throughout software components, as well as how 3rd party software is used for key front- and back-end functionalities. The Installation Components section elaborates on important pre-installation topics. In preparation for initial installation setup, we discuss high level topics regarding Jenkins and Ansible - the tools Forcepoint Behavioral Analytics utilizes to facilitate installation commands. Although Jenkins is pre-configured at the time of install, we include Jenkins Setup information and important access and directory location information for a holistic understanding of this key installation facilitator. To conclude this document, we include step-by-step instructions for using Ansible to initialize the Jenkins CI/CD server to install each required software component. An addendum is included for additional components which can optionally be installed. Go to the Downloads page and navigate to User and Entity Behavior Analytics to find the downloads for Forcepoint Behavioral Analytics. © 2019 Forcepoint Platform Overview - Component Platform Overview - Physical Installation Components Host OS Forcepoint requires a RedHat 7 host based Operating System for the Forcepoint Behavioral Analytics platform to be installed. CentOS 7 (minimal) is the recommended OS to be used.