ABSTRACT Guided Probabilistic Topic Models for Agenda-Setting

Recommended publications

The History of Redistricting in Georgia

GEORGIA LAW REVIEW 11/6/2018 THE HISTORY OF REDISTRICTING IN GEORGIA Charles S. Bullock III* In his memoirs, Chief Justice Earl Warren singled out the redistricting cases as the most significant decisions of his tenure on the Court.1 A review of the changes redistricting introduced in Georgia supports Warren’s assessment. Not only have the obligations to equalize populations across districts and to do so in a racially fair manner transformed the makeup of the state’s collegial bodies, Georgia has provided the setting for multiple cases that have defined the requirements to be met when designing districts. Other than the very first adjustments that occurred in the 1960s, changes in Georgia plans had to secure approval from the federal government pursuant to the Voting Rights Act. Also, the first four decades of the Redistricting Revolution occurred with a Democratic legislature and governor in place. Not surprisingly, the partisans in control of redistricting sought to protect their own and as that became difficult they employed more extreme measures. When in the minority, Republicans had no chance to enact plans on their own. Beginning in the 1980s and peaking a decade later, Republicans joined forces with black Democrats to devise alternatives to the proposals of white Democrats. The biracial, bipartisan coalition never had sufficient numbers to enact its ideas. After striking out in the legislature, African-Americans appealed to the U.S. Attorney General alleging that the plans enacted were less favorable to black interests than alternatives * Charles S. Bullock, III is a University Professor of Public and International Affairs at the University of Georgia where he holds the Richard B. -

Lawmakers Urge Congress to 'Man Up' on Transportation Funding

Lawmakers urge Congress to ‘man up’ on transportation funding Rosalind Rossi SunTimes April 9, 2015 http://chicago.suntimes.com/news/lawmakers‐urge‐congress‐to‐man‐up‐on‐transportation‐funding/ Illinois’ federal lawmakers stood united with area transit leaders Thursday in urging Congress to “man up” and pass a long‐term federal transportation funding bill before two key transit measures expire or teeter on insolvency. “My message to Congress is ‘Man up,’” U.S. Sen. Dick Durbin (D‐Ill.) announced at a “Stand Up 4 Transportation” news conference at Chicago’s Union Station. Durbin joined U.S. Reps. Dan Lipinski (D‐Ill.), Mike Quigley (D‐Ill.), Bill Foster (D‐Ill.) and U.S. Rep. Bob Dold (R‐Ill.) in urging Congress to pass long‐term funding instead of patchwork short‐term solutions to bankroll the nation’s public transit and transportation systems. Lipinski noted that Congress has passed 11 short‐term extensions to fund the nation’s highway and transit needs in roughly the last six years. “This is not good for our country,” Lipinski said. “Congress cannot keep kicking the can down the road. The system becomes more and more expensive to fix.” Leaders of the CTA, Metra, Pace and Amtrak joined elected officials in urging long‐term, sustainable funding to maintain and upgrade transportation needs, from buses and trains to roads and bridges. Such a move is critical to not only the nation’s ability to move goods and carry people to work and commerce but to its future, they said. Quigley noted that investing in transportation infrastructure pays dividends. -

114TH CONGRESS / First Session Available at FRCAction.org/scorecard

FRC ACTION VOTE SCORECARD 114TH CONGRESS / First Session Available at FRCAction.org/scorecard U.S. House of Representatives and U.S. Senate Dear Voter and Friend of the Family, FRC Action presents our Vote Scorecard for the First Session of the 114th Congress. This online Scorecard contains a compilation of significant votes on federal legislation affecting faith, family, and freedom that FRC Action either supported or opposed. These recorded votes span the 2015 calendar year and include the greatest number of pro-life votes in history, after the U.S. House increased its Republican membership and the U.S. Senate was returned to Republican control. The year began with a bipartisan effort in the House to prohibit federal funds from being used to pay for abortion coverage under Obamacare. Congress successfully fought to restrict FDA approval of some forms of embryo-destructive research. The House, once again, passed legislation that would prevent late abortions on 5 month old pain-capable unborn children, and although the Senate was unable to pass the bill due to the 60 vote threshold, for the first time, a majority of Senators voted in favor of the bill. The public release of videos revealing Planned Parenthood’s organ harvesting practices renewed efforts to defund this scandal-ridden organization and redirect funding towards community health centers. In an unprecedented victory, the House and Senate passed a budget reconciliation bill, the Restoring Americans’ Healthcare Freedom Reconciliation Act, which would have eliminated a significant portion of Planned Parenthood’s funding (roughly 80%) and repealed key provisions of Obamacare. -

Lobbying Contribution Report

8/1/2016 LD203 Contribution Report LOBBYING CONTRIBUTION REPORT Clerk of the House of Representatives • Legislative Resource Center • 135 Cannon Building • Washington, DC 20515 Secretary of the Senate • Office of Public Records • 232 Hart Building • Washington, DC 20510 1. FILER TYPE AND NAME: Type: Organization Lobbyist; Organization Name: Honeywell International. 2. IDENTIFICATION NUMBERS: House Registrant ID: 35195; Senate Registrant ID: 57453. 3. REPORTING PERIOD: Year: 2016; MidYear (January 1 June 30) / YearEnd (July 1 December 31) / Amendment. 4. CONTACT INFORMATION: Contact Name: Ms. Stacey Bernards; Email: [email protected]; Phone: 2026622629; Address: 101 CONSTITUTION AVENUE, NW WASHINGTON, DC 20001 USA. 5. POLITICAL ACTION COMMITTEE NAMES: Honeywell International Political Action Committee. 6. CONTRIBUTIONS: #1. Contribution Type: FECA; Contributor Name: Honeywell International Political Action Committee; Amount: $1,500.00; Date: 01/14/2016; Payee: Friends of Sam Johnson; Honoree: Sam Johnson. #2. Contribution Type: FECA; Contributor Name: Honeywell International Political Action Committee; Amount: $2,500.00; Date: 01/14/2016; Payee: Kay Granger Campaign Fund; Honoree: Kay Granger. #3. Contribution Type: FECA; Contributor Name: Honeywell International Political Action Committee; Amount: $2,000.00; Date: 01/14/2016; Payee: Paul Cook for Congress; Honoree: Paul Cook. https://lda.congress.gov/LC/protected/LCWork/2016/MM/57453DOM.xml?1470093694684 #4. Contribution Type: FECA; Contributor Name: Honeywell International Political Action Committee; Amount: $1,000.00; Date: 01/14/2016; Payee: DelBene for Congress; Honoree: Suzan DelBene. #5. Contribution Type: FECA; Contributor Name: Honeywell International Political Action Committee; Amount: $1,000.00; Date: 01/14/2016; Payee: John Carter for Congress; Honoree: John Carter. #6. -

Bloomberg Politics GOP Unity Tracker

Bloomberg Politics GOP Unity Tracker Below is a list of who current Republican office-holders, mega-donors, and influential conservatives plan to support in November, as of Bloomberg's latest count on June 7, 2016.

| Name | Unity Status | Group | State |
| --- | --- | --- | --- |
| Gov. Robert Bentley | Trump supporter | Elected Officials | Alabama |
| Rep. Bradley Byrne | Trump supporter | Elected Officials | Alabama |
| Rep. Martha Roby | Trump supporter | Elected Officials | Alabama |
| Rep. Mike Rogers | Trump supporter | Elected Officials | Alabama |
| Rep. Robert Aderholt | Trump supporter | Elected Officials | Alabama |
| Sen. Jeff Sessions | Trump supporter | Elected Officials | Alabama |
| Rep. Trent Franks | Trump supporter | Elected Officials | Arizona |
| Rep. Steve Womack | Trump supporter | Elected Officials | Arkansas |
| Rep. Darrell Issa | Trump supporter | Elected Officials | California |
| Rep. Duncan Hunter | Trump supporter | Elected Officials | California |
| Rep. Ken Calvert | Trump supporter | Elected Officials | California |
| Rep. Kevin McCarthy | Trump supporter | Elected Officials | California |
| Rep. Mimi Walters | Trump supporter | Elected Officials | California |
| Rep. Paul Cook | Trump supporter | Elected Officials | California |
| Rep. Tom McClintock | Trump supporter | Elected Officials | California |
| Rep. Doug Lamborn | Trump supporter | Elected Officials | Colorado |
| Rep. Scott Tipton | Trump supporter | Elected Officials | Colorado |
| Gov. Rick Scott | Trump supporter | Elected Officials | Florida |
| Rep. Curt Clawson | Trump supporter | Elected Officials | Florida |
| Rep. Dennis Ross | Trump supporter | Elected Officials | Florida |
| Rep. Jeff Miller | Trump supporter | Elected Officials | Florida |
| Rep. John Mica | Trump supporter | Elected Officials | Florida |
| Rep. Ted Yoho | Trump supporter | Elected Officials | Florida |
| Rep. Vern Buchanan | Trump supporter | Elected Officials | Florida |
| Gov. Nathan Deal | Trump supporter | Elected Officials | Georgia |
| Rep. Austin Scott | Trump supporter | Elected Officials | Georgia |
| Rep. Doug Collins | Trump supporter | Elected Officials | Georgia |
| Rep. Lynn Westmoreland | Trump supporter | Elected Officials | Georgia |

Rep. -

Congressional Directory UTAH

276 Congressional Directory UTAH *** THIRD DISTRICT JOHN CURTIS, Republican, of Provo, UT; born in Salt Lake City, UT, May 10, 1960; education: B.S., business management, Brigham Young University, Provo, UT, 1985; professional: Mayor, Provo City, 2010–17; COO and part-owner, Action Target, 1999–2010; manager, O.C. Tanner Co., 1989–99; overseas lead buyer, Brazil International, 1987–88; territory representative, Citizen Watch Co., 1984–86; missionary, Church of Jesus Christ of Latter-Day Saints, Taiwan, 1979–81; religion: Church of Jesus Christ of Latter-Day Saints; married: Sue; children: Kirsten, Zane, Jacob, Sarah Jane, Emily, and Nicole; grandchildren: Jet, Jane, Clare, Sage, Hazel, and Genevieve; caucuses: Dietary Supplement Caucus; Friends of Wales Caucus; Republican Main Street Partnership; Western Caucus; committees: Foreign Affairs; Natural Resources; elected, by special election, to the 115th Congress on November 17, 2017, to fill the vacancy caused by the resignation of U.S. Representative Jason Chaffetz; reelected to the 116th Congress on November 6, 2018. Office Listings https://curtis.house.gov https://facebook.com/RepJohnCurtis https://twitter.com/RepJohnCurtis 125 Cannon House Office Building, Washington, DC 20515, (202) 225–7751. Chief of Staff: Corey Norman. Legislative Director: Jake Bornstein. Scheduler: Stephanie Heinrich. Press Secretary: Ally Riding. Legislative Assistants: Troy Dougall, Rebekah Rodriquez. Counsel: Liz Whitlock. Staff Assistant: Ray Phillips. -

2015 Voting Record

2015 VOTING RECORD 2016 INTRODUCTION The American Federation of Government Employees, AFL-CIO, is the nation’s largest federal employee union, representing more than 670,000 federal and D.C. government workers nationwide and overseas. Workers in virtually every function of government depend upon AFGE for legislative advocacy, legal representation, technical expertise, and informational services. AFGE is proud to represent federal and D.C. government workers because they are the vital threads of the fabric of American life. Government workers inspect the food we eat and the places we work. They protect citizens from the illicit flow of drugs, maintain the safety of our nation’s borders, and keep the national defense systems prepared for any danger. They care for our nation’s veterans and serve as a vital link to Social Security recipients. AFGE takes seriously its responsibility to protect the rights of the working and middle class Americans who make up the federal and D.C. workforces. The union believes the best way to improve government’s effectiveness and efficiency is to treat federal and D.C. workers as valuable resources rather than easy targets. Federal labor unions, including AFGE, are not currently afforded the same full-scope collective bargaining rights as unions representing private sector workers. For this reason, AFGE relies on a comprehensive legislative and political action program to deal with issues that affect the federal and D.C. workforces. When Congress tackles government employee pay and benefit issues or debates funding of vital government programs, AFGE is on the scene representing its members. The 2015 Voting Record shows where House and Senate lawmakers stood on the issues that were most important to federal and D.C. -

Congressional Record on Choice

2019 Congressional Record on Choice Government Relations Department 1725 I Street, NW Suite 900 Washington, DC 20006 202.973.3000 CONGRESSIONAL RECORD ON CHOICE 116TH CONGRESS, 1ST SESSION NARAL Pro-Choice America’s 2019 Congressional Record on Choice documents the key House and Senate votes on reproductive freedom taken during the first session of the 116th Congress. The 116th Congress reflects a wave of historic firsts, most significantly the first pro-choice majority in the House of Representatives. There are a record number of women serving in the House, and more LGBTQ people serving in Congress than ever before. The freshman class is also younger than most recent incoming classes and the 116th Congress reflects record breaking racial, ethnic, and religious diversity. Nowhere was the new pro-choice House majority more evident than in the appropriations process. House spending bills for fiscal year 2020 reflected increased funding for vital family planning programs, defunded harmful abstinence-only-until-marriage programs, and blocked many of the Trump administration’s efforts to use the regulatory process to restrict access to abortion and family planning services. Though the House bills were not passed by the Senate, we now see what can happen when lawmakers committed to reproductive rights are in control. For over 50 years, NARAL Pro-Choice America has led the fight for reproductive freedom for everyone, including the right to access abortion. NARAL Pro-Choice America is powered by our 2.5 million members in every state and congressional district. We represent the more than 7 in 10 -

OCTOBER 6, 2020 WASHINGTON, DC @CongressFdn #DemocracyAwards Table of Contents

AWARDS CELEBRATION OCTOBER 6, 2020 WASHINGTON, DC www.CongressFoundation.org @CongressFdn #DemocracyAwards Table of Contents: About the Congressional Management Foundation; Special Thanks; About the Democracy Awards; Virtual Awards Ceremony; Democracy Awards for Constituent Service; Democracy Awards for Innovation and Modernization; Democracy Awards for “Life in Congress” Workplace Environment; Democracy Awards for Transparency and Accountability; Finalists for the Democracy Awards; Democracy Awards for Lifetime Achievement; Staff Finalists for Lifetime Achievement; Selection Committee Biographies; Thank You to Our Generous Supporters. About the Congressional Management Foundation. QUICK FACTS: • More than 1,100 staff from more than 300 congressional offices participate in the training programs CMF conducts annually. • Since 2014 CMF has conducted 500 educational sessions with more than 90,000 citizens on effectively communicating with Congress. • Since 2000, CMF has conducted more than 500 strategic planning or other consulting projects with Members of Congress and their staffs. The Congressional Management Foundation (CMF) is a 501(c)(3) nonpartisan nonprofit whose mission is to build trust and effectiveness in Congress. We do this by enhancing the performance of the institution, legislators and their staffs through research-based education and training, and by strengthening the bridge and understanding between Congress and the People it serves. Since 1977, CMF has worked internally with Member, committee, leadership, and institutional offices in the House and Senate to identify and disseminate best practices for management, workplace environment, communications, and constituent services. CMF also is the leading researcher and trainer on citizen engagement, educating thousands of individuals and facilitating better understanding, relationships, and communications with -

Congressional Directory These Times Are Difficult, but There Is No Opportunity to Back Down

Congressional Directory These times are difficult, but there is no opportunity to back down. With the current administration’s actions, citizens are now keeping themselves engaged and informed with a vigor we haven’t seen for a while. We are heartened to see this type of enthusiastic activism and want to encourage you to keep in contact with your representatives in the Senate and the House of Representatives. For your convenience, we have included a directory below. To find your district, visit njgin.state.nj.us/state/NJ_CongressionalDistricts/ Senate: Cory Booker: Camden Office: (856) 338-8922; Newark Office: (973) 639-8700; Washington D.C. Office: (202) 224-3224. Bob Menendez: Newark Office: (973) 645-3030; Barrington Office: (856) 757-5353; Washington D.C. Office: (202) 224-4744. House of Representatives: 1st District, Donald Norcross: Cherry Hill Office: (856) 427-7000; Washington D.C. Office: (202) 225-6501. 2nd District, Frank LoBiondo: Mays Landing Office: (609) 625-5008 or (800) 471-4450; Washington D.C. Office: (202) 225-6572. 3rd District, Tom MacArthur: Marlton Office: (856) 267-5182; Toms River Office: (732) 569-6495; Washington D.C. Office: (202) 225-4765. 4th District, Chris Smith: Freehold Office: (732) 780-3035; Plumsted Office: (609) 286-2571 or (732) 350-2300; Hamilton Office: (609) 585-7878; Washington D.C. Office: (202) 225-3765. 5th District, Josh Gottheimer: Glen Rock Office: (888) 216-5646; Newton Office: (888) 216-5646; Washington D.C. Office: (202) 225-4465. 6th District, Frank Pallone: New Brunswick Office: (732) 249-8892; Long Branch Office: (732) 571-1140; Washington D.C. -

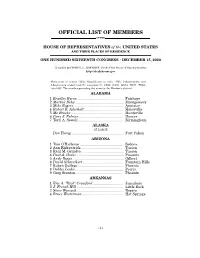

Official List of Members

OFFICIAL LIST OF MEMBERS OF THE HOUSE OF REPRESENTATIVES of the UNITED STATES AND THEIR PLACES OF RESIDENCE ONE HUNDRED SIXTEENTH CONGRESS • DECEMBER 15, 2020 Compiled by CHERYL L. JOHNSON, Clerk of the House of Representatives http://clerk.house.gov Democrats in roman (233); Republicans in italic (195); Independents and Libertarians underlined (2); vacancies (5) CA08, CA50, GA14, NC11, TX04; total 435. The number preceding the name is the Member's district. ALABAMA: 1 Bradley Byrne, Fairhope; 2 Martha Roby, Montgomery; 3 Mike Rogers, Anniston; 4 Robert B. Aderholt, Haleyville; 5 Mo Brooks, Huntsville; 6 Gary J. Palmer, Hoover; 7 Terri A. Sewell, Birmingham. ALASKA: AT LARGE Don Young, Fort Yukon. ARIZONA: 1 Tom O'Halleran, Sedona; 2 Ann Kirkpatrick, Tucson; 3 Raúl M. Grijalva, Tucson; 4 Paul A. Gosar, Prescott; 5 Andy Biggs, Gilbert; 6 David Schweikert, Fountain Hills; 7 Ruben Gallego -

Press Release

News Release CITY OF MOUNTLAKE TERRACE 6100 219TH STREET SW, SUITE 200 MOUNTLAKE TERRACE, WASHINGTON 98043 FOR MORE INFORMATION CONTACT: Virginia Olsen, City Clerk/Community Relations Director, (425) 744-6206 FOR IMMEDIATE RELEASE: March 6, 2015 MLT Seeks Partnerships for Downtown Revitalization MOUNTLAKE TERRACE — Mayor Jerry Smith, Mayor Pro Tem Laura Sonmore and City Manager Arlene Fisher recently met with Senators Patty Murray and Maria Cantwell, Congressman Rick Larsen, Congressman Dave Reichert and representatives from the offices of Governor Jay Inslee and others in Washington D.C. The main purpose of the meetings was to update the city’s legislators on funding needs for the revitalization of the downtown corridor. The city communicated its need to develop the infrastructure necessary to implement its vision for a revitalized downtown with the Main Street Revitalization Project. It is estimated that this investment of city, state and federal dollars would leverage millions in private investment to create jobs and opportunities in the downtown and move the community forward. The City of Mountlake Terrace has competed for funds within the TIGER program for its Main Street project and has scored exceptionally well on the economic cost basis models, but has failed to win an award due to the strong competition and relative size of the other projects from larger agencies. TIGER, which stands for Transportation Investments Generating Economic Recovery, was the result of efforts initiated by Senator Patty Murray several years ago. U.S. Representative Rick Larsen recently introduced a new version of the “TIGER CUBS” bill, federal legislation that would set aside 20% of special transportation infrastructure funding for smaller to medium-sized cities.