Multivariate Statistics

Recommended publications

Moments of the Product and Ratio of Two Correlated Chi-Square Variables

Stat Papers (2009) 50:581–592, DOI 10.1007/s00362-007-0105-0. Regular article.

Moments of the product and ratio of two correlated chi-square variables. Anwar H. Joarder. Received: 2 June 2006 / Revised: 8 October 2007 / Published online: 20 November 2007. © The Author(s) 2007.

Abstract: The exact probability density function of a bivariate chi-square distribution with two correlated components is derived. Some moments of the product and ratio of two correlated chi-square random variables have been derived. The ratio of the two correlated chi-square variables is used to compare variability. One such application is referred to. Another application is pinpointed in connection with the distribution of correlation coefficient based on a bivariate t distribution.

Keywords: Bivariate chi-square distribution · Moments · Product of correlated chi-square variables · Ratio of correlated chi-square variables

Mathematics Subject Classification (2000): 62E15 · 60E05 · 60E10

1 Introduction

Fisher (1915) derived the distribution of mean-centered sum of squares and sum of products in order to study the distribution of correlation coefficient from a bivariate normal sample. Let $X_1, X_2, \ldots, X_N$ ($N > 2$) be two-dimensional independent random vectors where $X_j = (X_{1j}, X_{2j})'$, $j = 1, 2, \ldots, N$, is distributed as a bivariate normal distribution denoted by $N_2(\theta, \Sigma)$ with $\theta = (\theta_1, \theta_2)'$ and a $2 \times 2$ covariance matrix $\Sigma = (\sigma_{ik})$, $i = 1, 2$; $k = 1, 2$. The sample mean-centered sums of squares and sum of products are given by

$$a_{ii} = \sum_{j=1}^{N} (X_{ij} - \bar{X}_i)^2 = m S_i^2, \quad m = N - 1, \quad (i = 1, 2)$$

and

$$a_{12} = \sum_{j=1}^{N} (X_{1j} - \bar{X}_1)(X_{2j} - \bar{X}_2) = m R S_1 S_2,$$

respectively.
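The mean-centered sums of squares and products defined in this excerpt can be checked numerically. The following is a minimal sketch, not taken from the paper: the function name `centered_sums` and the toy data are hypothetical, and the code only verifies the identity $a_{12} = m R S_1 S_2$ with $m = N - 1$ on one small sample.

```python
import math

def centered_sums(x1, x2):
    """Return (a11, a22, a12): mean-centered sums of squares and products."""
    n = len(x1)
    mean1 = sum(x1) / n
    mean2 = sum(x2) / n
    a11 = sum((u - mean1) ** 2 for u in x1)
    a22 = sum((v - mean2) ** 2 for v in x2)
    a12 = sum((u - mean1) * (v - mean2) for u, v in zip(x1, x2))
    return a11, a22, a12

# Hypothetical toy data with N = 5 pairs (illustration only, not from the paper).
x1 = [2.0, 4.0, 6.0, 8.0, 10.0]
x2 = [1.0, 3.0, 2.0, 5.0, 4.0]

a11, a22, a12 = centered_sums(x1, x2)
m = len(x1) - 1                    # m = N - 1
s1 = math.sqrt(a11 / m)            # sample standard deviation S_1
s2 = math.sqrt(a22 / m)            # sample standard deviation S_2
r = a12 / math.sqrt(a11 * a22)     # sample correlation coefficient R

# The sum of products equals m * R * S_1 * S_2 up to rounding.
print(a12, m * r * s1 * s2)
```

Because $S_i^2 = a_{ii}/m$, the product $m R S_1 S_2$ collapses algebraically to $a_{12}$, so the two printed values should agree to floating-point precision for any sample with non-zero variances.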

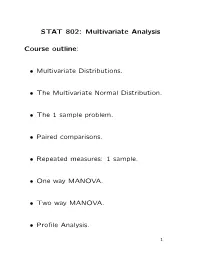

STAT 802: Multivariate Analysis Course Outline

STAT 802: Multivariate Analysis

Course outline:

• Multivariate Distributions.
• The Multivariate Normal Distribution.
• The 1 sample problem.
• Paired comparisons.
• Repeated measures: 1 sample.
• One way MANOVA.
• Two way MANOVA.
• Profile Analysis.
• Multivariate Multiple Regression.
• Discriminant Analysis.
• Clustering.
• Principal Components.
• Factor analysis.
• Canonical Correlations.

Basic structure of typical multivariate data set: case by variables, data in matrix. Each row is a case, each column is a variable.

Example: Fisher's iris data: 5 rows of 150 by 5 matrix:

| Case # | Variety | Sepal Length | Sepal Width | Petal Length | Petal Width |
|---|---|---|---|---|---|
| 1 | Setosa | 5.1 | 3.5 | 1.4 | 0.2 |
| 2 | Setosa | 4.9 | 3.0 | 1.4 | 0.2 |
| ... | | | | | |
| 51 | Versicolor | 7.0 | 3.2 | 4.7 | 1.4 |
| ... | | | | | |

Usual model: rows of data matrix are independent random variables.

Vector valued random variable: function $X : \Omega \mapsto R^p$ such that, writing $X = (X_1, \ldots, X_p)^T$,

$$P(X_1 \le x_1, \ldots, X_p \le x_p)$$

is defined for any constants $(x_1, \ldots, x_p)$.

Cumulative Distribution Function (CDF) of $X$: function $F_X$ on $R^p$ defined by

$$F_X(x_1, \ldots, x_p) = P(X_1 \le x_1, \ldots, X_p \le x_p).$$

Defn: Distribution of rv $X$ is absolutely continuous if there is a function $f$ such that

$$P(X \in A) = \int_A f(x)\,dx \qquad (1)$$

for any (Borel) set $A$. This is a $p$ dimensional integral in general. Equivalently

$$F(x_1, \ldots, x_p) = \int_{-\infty}^{x_1} \cdots \int_{-\infty}^{x_p} f(y_1, \ldots, y_p)\, dy_p \cdots dy_1.$$

Defn: Any $f$ satisfying (1) is a density of $X$.

For most $x$, $F$ is differentiable at $x$ and

$$\frac{\partial^p F(x)}{\partial x_1 \cdots \partial x_p} = f(x).$$

Building Multivariate Models

Basic tactic: specify density of $X = (X_1, \ldots, X_p)^T$.

Tools: marginal densities, conditional densities, independence, transformation.
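The relation between a joint CDF and its density (the mixed partial derivative of $F$ equals $f$) can be illustrated numerically for $p = 2$. This is a minimal sketch under an assumption that is not part of the course notes: the example distribution is a pair of independent Exp(1) variables, chosen only because its CDF and density have simple closed forms. The helper names are hypothetical.

```python
import math

def cdf(x1, x2):
    """Joint CDF of two independent Exp(1) variables, for x1, x2 >= 0."""
    return (1.0 - math.exp(-x1)) * (1.0 - math.exp(-x2))

def density(x1, x2):
    """Joint density of the same pair of variables."""
    return math.exp(-(x1 + x2))

def mixed_partial(F, x1, x2, h=1e-4):
    """Central finite-difference estimate of d^2 F / (dx1 dx2)."""
    return (F(x1 + h, x2 + h) - F(x1 + h, x2 - h)
            - F(x1 - h, x2 + h) + F(x1 - h, x2 - h)) / (4.0 * h * h)

approx = mixed_partial(cdf, 0.7, 1.3)   # numerical derivative of the CDF
exact = density(0.7, 1.3)               # closed-form density at the same point
print(approx, exact)
```

The finite-difference estimate and the closed-form density should agree to several decimal places; the step size `h` is a tuning choice that trades truncation error against rounding error.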

A Multivariate Student's T-Distribution

Open Journal of Statistics, 2016, 6, 443-450. Published Online June 2016 in SciRes. http://www.scirp.org/journal/ojs http://dx.doi.org/10.4236/ojs.2016.63040

A Multivariate Student’s t-Distribution. Daniel T. Cassidy, Department of Engineering Physics, McMaster University, Hamilton, ON, Canada. Received 29 March 2016; accepted 14 June 2016; published 17 June 2016. Copyright © 2016 by author and Scientific Research Publishing Inc. This work is licensed under the Creative Commons Attribution International License (CC BY). http://creativecommons.org/licenses/by/4.0/

Abstract: A multivariate Student’s t-distribution is derived by analogy to the derivation of a multivariate normal (Gaussian) probability density function. This multivariate Student’s t-distribution can have different shape parameters $\nu_i$ for the marginal probability density functions of the multivariate distribution. Expressions for the probability density function, for the variances, and for the covariances of the multivariate t-distribution with arbitrary shape parameters for the marginals are given.

Keywords: Multivariate Student’s t, Variance, Covariance, Arbitrary Shape Parameters

1. Introduction

An expression for a multivariate Student’s t-distribution is presented. This expression, which is different in form than the form that is commonly used, allows the shape parameter $\nu$ for each marginal probability density function (pdf) of the multivariate pdf to be different. The form that is typically used is [1]

$$\frac{\Gamma\left((\nu + n)/2\right)}{\Gamma(\nu/2)\,(\pi\nu)^{n/2}\,|\Sigma|^{1/2}} \left(1 + \frac{1}{\nu}[x]^T \Sigma^{-1} [x]\right)^{-(\nu + n)/2} \qquad (1)$$

This “typical” form attempts to generalize the univariate Student’s t-distribution and is valid when the $n$ marginal distributions have the same shape parameter $\nu$.
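The commonly used multivariate t density with a single shape parameter can be evaluated directly. The sketch below assumes the standard textbook form, in which the quadratic form $[x]^T \Sigma^{-1} [x]$ is divided by $\nu$ and the normalizing constant includes $|\Sigma|^{1/2}$; it covers only the bivariate case $n = 2$, and the function name `mvt_pdf_2d` is hypothetical. It does not implement the paper's new distribution with different shape parameters per marginal.

```python
import math

def mvt_pdf_2d(x, sigma, nu):
    """Bivariate Student t density with common shape nu and 2x2 scale matrix sigma."""
    (a, b), (c, d) = sigma
    det = a * d - b * c
    # Quadratic form x^T sigma^{-1} x, using the explicit inverse of a 2x2 matrix.
    q = (d * x[0] ** 2 - (b + c) * x[0] * x[1] + a * x[1] ** 2) / det
    n = 2
    # Work on the log scale so large nu does not overflow the gamma function.
    log_norm = (math.lgamma((nu + n) / 2.0) - math.lgamma(nu / 2.0)
                - (n / 2.0) * math.log(nu * math.pi) - 0.5 * math.log(det))
    return math.exp(log_norm - (nu + n) / 2.0 * math.log1p(q / nu))

identity = [[1.0, 0.0], [0.0, 1.0]]

at_origin = mvt_pdf_2d([0.0, 0.0], identity, 3.0)       # equals 1 / (2 * pi) for any nu
near_normal = mvt_pdf_2d([1.0, -0.5], identity, 1e6)    # very large nu: close to Gaussian
gaussian = math.exp(-0.5 * (1.0 + 0.25)) / (2.0 * math.pi)
print(at_origin, near_normal, gaussian)
```

Two sanity checks follow from the formula: with an identity scale matrix the density at the origin is $1/(2\pi)$ regardless of $\nu$, and as $\nu$ grows the density approaches the bivariate normal density.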

A Guide on Probability Distributions

A guide on probability distributions. R-forge distributions Core Team. University Year 2008-2009. LaTeX powered, Mac OS' TeXShop edited.

Contents

Introduction
I Discrete distributions
1 Classic discrete distribution
2 Not so-common discrete distribution
II Continuous distributions
3 Finite support distribution
4 The Gaussian family
5 Exponential distribution and its extensions
6 Chi-squared distribution and related extensions
7 Student and related distributions
8 Pareto family
9 Logistic distribution and related extensions
10 Extreme Value Theory distributions
III Multivariate and generalized distributions
11 Generalization of common distributions
12 Multivariate distributions
13 Misc
Conclusion
Bibliography
A Mathematical tools

Introduction

This guide is intended to provide a quite exhaustive (at least as I can) view on probability distributions. It is constructed in chapters of distribution family with a section for each distribution. Each section focuses on the triptych: definition - estimation - application. Ultimate bibles for probability distributions are Wimmer & Altmann (1999), which lists 750 univariate discrete distributions, and Johnson et al. (1994), which details continuous distributions. In the appendix, we recall the basics of probability distributions as well as "common" mathematical functions, cf. section A.2.

For all distributions, we use the following notations:

• X a random variable following a given distribution,
• x a realization of this random variable,
• f the density function (if it exists),
• F the (cumulative) distribution function,
• P(X = k) the mass probability function in k,
• M the moment generating function (if it exists),
• G the probability generating function (if it exists),
• φ the characteristic function (if it exists).

Finally, all graphics are done with the open source statistical software R and its numerous packages available on the Comprehensive R Archive Network (CRAN).

Multivariate Skew-Symmetric Distributions

Applied Mathematics Letters 16 (2003) 643-646, Pergamon. www.elsevier.com/locate/aml

Multivariate Skew-Symmetric Distributions. A. K. Gupta, Department of Mathematics and Statistics, Bowling Green State University, Bowling Green, OH 43403, U.S.A. [email protected]. F.-C. Chang, Department of Applied Mathematics, National Sun Yat-sen University, Kaohsiung, Taiwan 804, R.O.C. [email protected]. (Received November 2001; revised and accepted October 2002)

Abstract: In this paper, a class of multivariate skew distributions has been explored. Then its properties are derived. The relationship between the multivariate skew normal and the Wishart distribution is also studied. © 2003 Elsevier Science Ltd. All rights reserved.

Keywords: Skew normal distribution, Wishart distribution, Moment generating function, Moments, Skewness.

1. INTRODUCTION

The multivariate skew normal distribution has been studied by Gupta and Kollo [1] and Azzalini and Dalla Valle [2], and its applications are given in [3]. This class of distributions includes the normal distribution and has some properties like the normal and yet is skew. It is useful in studying robustness. Following Gupta and Kollo [1], the random vector $X$ ($p \times 1$) is said to have a multivariate skew normal distribution if it is continuous and its density function (p.d.f.) is given by

$$f_X(x) = 2\,\phi_p(x; \Sigma)\,\Phi(\alpha' x), \quad x \in R^p, \qquad (1.1)$$

where $\Sigma > 0$, $\alpha \in R^p$, and $\phi_p(x, \Sigma)$ is the $p$-dimensional normal density with zero mean vector and covariance matrix $\Sigma$, and $\Phi(\cdot)$ is the standard normal distribution function (c.d.f.).
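The skew normal density quoted above, twice a normal density times a normal c.d.f. evaluated at a linear form, is easiest to inspect in one dimension. The sketch below assumes $p = 1$ and $\Sigma = 1$, a simplification made here for illustration only; the function names and the trapezoidal integrator are hypothetical helpers, not part of the paper.

```python
import math

def phi(x):
    """Standard normal density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def Phi(x):
    """Standard normal distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def skew_normal_pdf(x, alpha):
    """Univariate skew normal density 2 * phi(x) * Phi(alpha * x)."""
    return 2.0 * phi(x) * Phi(alpha * x)

def integrate(f, lo, hi, steps=20000):
    """Composite trapezoidal rule on [lo, hi]."""
    h = (hi - lo) / steps
    total = 0.5 * (f(lo) + f(hi))
    for i in range(1, steps):
        total += f(lo + i * h)
    return total * h

# The factor 2 makes the density integrate to one for any alpha.
total_mass = integrate(lambda x: skew_normal_pdf(x, 3.0), -10.0, 10.0)
# alpha = 0 recovers the ordinary normal density, since Phi(0) = 1/2.
symmetric_case = skew_normal_pdf(0.4, 0.0)
print(total_mass, symmetric_case, phi(0.4))
```

Setting `alpha` to zero shows why the class "includes the normal distribution", while a non-zero `alpha` shifts mass to one side without changing the total.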

Bayesian Inference Via Approximation of Log-Likelihood for Priors in Exponential Family

Bayesian Inference via Approximation of Log-likelihood for Priors in Exponential Family. Tohid Ardeshiri, Umut Orguner, and Fredrik Gustafsson.

Abstract: In this paper, a Bayesian inference technique based on Taylor series approximation of the logarithm of the likelihood function is presented. The proposed approximation is devised for the case where the prior distribution belongs to the exponential family of distributions. The logarithm of the likelihood function is linearized with respect to the sufficient statistic of the prior distribution in exponential family such that the posterior obtains the same exponential family form as the prior. Similarities between the proposed method and the extended Kalman filter for nonlinear filtering are illustrated. Furthermore, an extended target measurement update for target models where the target extent is represented by a random matrix having an inverse Wishart distribution is derived. The approximate update covers the important case where the spread of measurement is due to the target extent as well as the measurement noise in the sensor.

Index Terms: Approximate Bayesian inference, exponential family, Bayesian graphical models, extended Kalman filter, extended target tracking, group target tracking, random matrices, …

Figure 1: A probabilistic graphical model for stochastic dynamical system with latent state $x_k$ and measurements $y_k$.

… (HMMs) whose probabilistic graphical model is presented in Fig. 1. In such filtering problems, the posterior to the last processed measurement is in the same form as the prior distribution before the measurement update. Thus, the same inference algorithm can be used in a recursive manner.

Interpolation of the Wishart and Non Central Wishart Distributions

Interpolation of the Wishart and non central Wishart distributions. Gérard Letac (Laboratoire de Statistique et Probabilités, Université Paul Sabatier, 31062 Toulouse, France). Angers, June 2016.

Contents

I Introduction
II Wishart distributions in the classical sense
III Wishart distributions on symmetric cones
IV Wishart distributions as natural exponential families
V Properties of the Wishart distributions
VI The one dimensional non central $\chi^2$ distributions and their interpolation
VII The classical non central Wishart distribution
VIII The non central Wishart exists only if p is in the Gindikin set
IX The rank problem for the non central Wishart and the Mayerhofer conjecture
X Reduction of the problem: the measures m(2p; k; d)
XI Computation of m(1; 2; 2)
XII Computation of the measure m(d − 1; d; d)
XII.1 Zonal functions
XII.2 Three properties of zonal functions
XII.3 The calculation of m(d − 1; d; d)
XII.4 Example: m(2; 3; 3)
XIII Convolution lemmas in the cone $P_d$
XIV m(d − 2; d − 1; d) and m(d − 2; d; d) do not exist for d ≥ 3
XV References

I Introduction

The aim of these notes is to present with the most natural generality the Wishart and non central Wishart distributions on symmetric real matrices, on Hermitian matrices and sometimes on a symmetric cone. While the classical $\chi^2$ distributions are familiar to the statisticians, they quickly learn that these $\chi^2$ laws, as parameterized by $n$, their degree of freedom, can be interpolated by the gamma distributions with a continuous shape parameter.
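The interpolation mentioned at the end of this excerpt rests on a standard fact: a chi-square law with $n$ degrees of freedom is the gamma law with shape $n/2$ and scale 2. The sketch below checks this numerically and then evaluates the gamma density at a non-integer value of $n$. The function names are hypothetical and the evaluation point 1.7 is arbitrary.

```python
import math

def gamma_pdf(x, shape, scale):
    """Density of the gamma distribution at x > 0 (any positive real shape)."""
    return math.exp((shape - 1.0) * math.log(x) - x / scale
                    - math.lgamma(shape) - shape * math.log(scale))

def chi2_pdf(x, n):
    """Density of the chi-square distribution with n degrees of freedom at x > 0."""
    return (x ** (n / 2.0 - 1.0) * math.exp(-x / 2.0)
            / (2.0 ** (n / 2.0) * math.gamma(n / 2.0)))

# For integer degrees of freedom the two densities coincide.
for n in (1, 2, 5):
    assert abs(chi2_pdf(1.7, n) - gamma_pdf(1.7, n / 2.0, 2.0)) < 1e-12

# A non-integer "degree of freedom" n = 2.5 corresponds to gamma shape 1.25.
between = gamma_pdf(1.7, 2.5 / 2.0, 2.0)
print(between)
```

The gamma family thus fills in the gaps between consecutive integer degrees of freedom, which is the one-dimensional picture behind the interpolation question for Wishart distributions.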

Gamma and Related Distributions

GAMMA AND RELATED DISTRIBUTIONS

By Ayienda K. Carolynne. Supervisor: Prof. J.A.M Ottieno, School of Mathematics, University of Nairobi.

A thesis submitted to the School of Mathematics, University of Nairobi, in partial fulfillment of the requirements for the degree of Master of Science in Statistics. November, 2013.

Declaration

I, Ayienda Kemunto Carolynne, do hereby declare that this thesis is my original work and has not been submitted for a degree in any other University.

Sign: ........ Date: ........ AYIENDA KEMUNTO CAROLYNNE

Sign: ........ Date: ........ Supervisor: J.A.M OTTIENO

Dedication

I wish to dedicate this thesis to my beloved husband Ezekiel Onyonka and son Steve Michael. To you all, I thank you for giving me the support needed to pursue my academic dream.

Acknowledgements

I am grateful to the almighty God who has watched over me all my life and more so during my challenging academic times. It is my prayer that he grants me more strength to achieve all my ambitions and aspirations in life. I am thankful to the University of Nairobi, which not only offered me a scholarship, but also moral support during this study. It is my pleasant privilege to acknowledge with gratitude one and all from whom I received guidance and encouragement during hard times of my study. Special thanks to my supervisor Prof. J.A.M Ottieno, School of Mathematics, University of Nairobi, for his moral and material support and encouragement, through which I have managed to do my work. Also I cannot forget to appreciate his invaluable guidance, tolerance and constructive criticism, without which I would not have accomplished my objectives.

Singular Inverse Wishart Distribution with Application to Portfolio Theory

Working Papers in Statistics No 2015:2, Department of Statistics, School of Economics and Management, Lund University.

Singular Inverse Wishart Distribution with Application to Portfolio Theory. Taras Bodnar (a), Stepan Mazur (b) and Krzysztof Podgórski (b, 1).

(a) Department of Mathematics, Stockholm University, Roslagsvägen 101, SE-10691 Stockholm, Sweden
(b) Department of Statistics, Lund University, Tycho Brahe 1, SE-22007 Lund, Sweden
(1) Corresponding author. E-mail address: [email protected]. The authors appreciate the financial support of the Swedish Research Council Grant Dnr: 2013-5180 and Riksbankens Jubileumsfond Grant Dnr: P13-1024:1.

Abstract: The inverse of the standard estimate of covariance matrix is frequently used in the portfolio theory to estimate the optimal portfolio weights. For this problem, the distribution of the linear transformation of the inverse is needed. We obtain this distribution in the case when the sample size is smaller than the dimension, the underlying covariance matrix is singular, and the vectors of returns are independent and normally distributed. For the result, the distribution of the inverse of covariance estimate is needed and it is derived and referred to as the singular inverse Wishart distribution. We use these results to provide an explicit stochastic representation of an estimate of the mean-variance portfolio weights as well as to derive its characteristic function and the moments of higher order.

AMS Classification: 62H10, 62H12, 91G10

Keywords: singular Wishart distribution, mean-variance portfolio, sample estimate of precision matrix, Moore-Penrose inverse

1 Introduction

Analyzing multivariate data having fewer observations than their dimension is an important problem in the multivariate data analysis.

Hand-Book on STATISTICAL DISTRIBUTIONS for Experimentalists

Internal Report SUF–PFY/96–01. Stockholm, 11 December 1996; 1st revision, 31 October 1998; last modification 10 September 2007.

Hand-book on STATISTICAL DISTRIBUTIONS for experimentalists, by Christian Walck, Particle Physics Group, Fysikum, University of Stockholm (e-mail: [email protected])

Contents

1 Introduction
1.1 Random Number Generation
2 Probability Density Functions
2.1 Introduction
2.2 Moments
2.2.1 Errors of Moments
2.3 Characteristic Function
2.4 Probability Generating Function
2.5 Cumulants
2.6 Random Number Generation
2.6.1 Cumulative Technique
2.6.2 Accept-Reject technique
2.6.3 Composition Techniques
2.7 Multivariate Distributions
2.7.1 Multivariate Moments
2.7.2 Errors of Bivariate Moments
2.7.3 Joint Characteristic Function
2.7.4 Random Number Generation
3 Bernoulli Distribution
3.1 Introduction
3.2 Relation to Other Distributions
4 Beta distribution
4.1 Introduction

An Introduction to Wishart Matrix Moments

An Introduction to Wishart Matrix Moments. Adrian N. Bishop (1), Pierre Del Moral (2) and Angèle Niclas (3).

(1) University of Technology Sydney (UTS) and CSIRO, Australia; [email protected]
(2) INRIA, Bordeaux Research Center, France; [email protected]
(3) École Normale Supérieure de Lyon, France

Adrian N. Bishop, Pierre Del Moral and Angèle Niclas (2018), “An Introduction to Wishart Matrix Moments”, Foundations and Trends® in Machine Learning: Vol. 11, No. 2, pp 97–218. DOI: 10.1561/2200000072.

ABSTRACT

These lecture notes provide a comprehensive, self-contained introduction to the analysis of Wishart matrix moments. This study may act as an introduction to some particular aspects of random matrix theory, or as a self-contained exposition of Wishart matrix moments. Random matrix theory plays a central role in statistical physics, computational mathematics and engineering sciences, including data assimilation, signal processing, combinatorial optimization, compressed sensing, econometrics and mathematical finance, among numerous others. The mathematical foundations of the theory of random matrices lie at the intersection of combinatorics, non-commutative algebra, geometry, multivariate functional and spectral analysis, and of course statistics and probability theory. As a result, most of the classical topics in random matrix theory are technical, and mathematically difficult to penetrate for non-experts and regular users and practitioners. The technical aim of these notes is to review and extend some important results in random matrix theory in the specific context of real random Wishart matrices. This special class of Gaussian-type sample covariance matrix plays an important role in multivariate analysis and in statistical theory.

Handbook on Probability Distributions

Handbook on probability distributions. R powered R-forge project. R-forge distributions Core Team. University Year 2009-2010. LaTeX powered, Mac OS' TeXShop edited.

Contents

Introduction
I Discrete distributions
1 Classic discrete distribution
2 Not so-common discrete distribution
II Continuous distributions
3 Finite support distribution
4 The Gaussian family
5 Exponential distribution and its extensions
6 Chi-squared distribution and related extensions
7 Student and related distributions
8 Pareto family
9 Logistic distribution and related extensions
10 Extreme Value Theory distributions
III Multivariate and generalized distributions
11 Generalization of common distributions
12 Multivariate distributions
13 Misc
Conclusion
Bibliography
A Mathematical tools

Introduction

This guide is intended to provide a quite exhaustive (at least as I can) view on probability distributions. It is constructed in chapters of distribution family with a section for each distribution. Each section focuses on the triptych: definition - estimation - application. Ultimate bibles for probability distributions are Wimmer & Altmann (1999), which lists 750 univariate discrete distributions, and Johnson et al. (1994), which details continuous distributions. In the appendix, we recall the basics of probability distributions as well as "common" mathematical functions, cf. section A.2.

For all distributions, we use the following notations:

• X a random variable following a given distribution,
• x a realization of this random variable,
• f the density function (if it exists),
• F the (cumulative) distribution function,
• P(X = k) the mass probability function in k,
• M the moment generating function (if it exists),
• G the probability generating function (if it exists),
• φ the characteristic function (if it exists).

Finally, all graphics are done with the open source statistical software R and its numerous packages available on the Comprehensive R Archive Network (CRAN).