SIGMOD 2009 Booklet

Recommended publications

Romanian Civilization Supplement 1 One Hundred Romanian Authors in Theoretical Computer Science

ROMANIAN CIVILIZATION SUPPLEMENT 1 ONE HUNDRED ROMANIAN AUTHORS IN THEORETICAL COMPUTER SCIENCE ROMANIAN CIVILIZATION General Editor: Victor SPINEI SUPPLEMENT 1 THE ROMANIAN ACADEMY THE INFORMATION SCIENCE AND TECHNOLOGY SECTION ONE HUNDRED ROMANIAN AUTHORS IN THEORETICAL COMPUTER SCIENCE Edited by: SVETLANA COJOCARU GHEORGHE PĂUN DRAGOŞ VAIDA EDITURA ACADEMIEI ROMÂNE Bucureşti, 2018 Copyright © Editura Academiei Române, 2018. All rights reserved. EDITURA ACADEMIEI ROMÂNE Calea 13 Septembrie nr. 13, sector 5 050711, Bucureşti, România Tel: 4021-318 81 46, 4021-318 81 06 Fax: 4021-318 24 44 E-mail: [email protected] Web: www.ear.ro Peer reviewer: Acad. Victor SPINEI Descrierea CIP a Bibliotecii Naţionale a României One hundred Romanian authors in theoretical computer science / ed. by: Svetlana Cojocaru, Gheorghe Păun, Dragoş Vaida. - Bucureşti : Editura Academiei Române, 2018 ISBN 978-973-27-2908-3 I. Cojocaru, Svetlana (ed.) II. Păun, Gheorghe informatică (ed.) III. Vaida, Dragoş (ed.) 004 Editorial assistant: Doina ARGEŞANU Computer operator: Doina STOIA Cover: Mariana ŞERBĂNESCU Final proof: 12.04.2018. Format: 16/70 × 100 Proof in sheets: 19,75 D.L.C. for large libraries: 007 (498) D.L.C. for small libraries: 007 PREFACE This book may look like a Who’s Who in Romanian Theoretical Computer Science (TCS); it is a considerable step towards such an ambitious goal, yet the title should warn us about several aspects. From the very beginning we started working on the book having in mind to collect exactly 100 short CVs. This was an artificial decision with respect to the number of Romanian computer scientists, but a natural one in view of the circumstances in which the volume was born: it belongs to a series initiated by the Romanian Academy on the occasion of celebrating one century since Great Romania was formed, at the end of the First World War. -

Curriculum Vitae of Anna Gál

Curriculum vitae of Anna Gál Address: Department of Computer Science email: [email protected] The University of Texas at Austin Phone: (512) 471-9539 Austin, TX 78712 WWW: http://www.cs.utexas.edu/users/panni Research Interests: Computational complexity theory, lower bound methods, circuit complexity, communication complexity, fault tolerance, randomness and computation, algorithms, combinatorics. Degrees: • Ph.D. in Computer Science at the University of Chicago, August 1995 Ph.D. thesis title: Combinatorial methods in Boolean function complexity Ph.D. thesis advisor: Professor János Simon • Master's degree in Computer Science at the University of Chicago, June 1990 Awards: • Best Paper Award at Track A of ICALP 2003 • Alfred P. Sloan Research Fellowship, 2001-2005 • NSF CAREER Award July 1999 - June 2004 • NSF/DIMACS Postdoctoral Fellowship: Institute for Advanced Study, Princeton 1995-96; DIMACS at Princeton University, 1996-97. • Machtey Award for "the most outstanding paper written by a student or students" at the 32nd IEEE Symposium on Foundations of Computer Science, FOCS 1991 Employment: • Professor at the Department of Computer Science of the University of Texas at Austin, starting September 1, 2012. • Associate professor at the Department of Computer Science of the University of Texas at Austin, September 1, 2004 - August 31, 2012. • Assistant professor at the Department of Computer Science of the University of Texas at Austin, September 1, 1997 - August 31, 2004. • Visiting scholar at the Department of Computer Science of the University of Toronto and the Fields Institute in Toronto, January 1998 - July 1998. • NSF/DIMACS postdoctoral fellow September 1995 - August 1997: at the Department of Computer Science of Princeton University, 1996/97 at the School of Mathematics of the Institute for Advanced Study in Princeton, 1995/96. -

An Examination of the Factors and Characteristics That Contribute to the Success of Putnam Fellows Robert A

University of Connecticut OpenCommons@UConn Doctoral Dissertations University of Connecticut Graduate School 12-17-2015 An Examination of the Factors and Characteristics that Contribute to the Success of Putnam Fellows Robert A. J. Stroud University of Connecticut - Storrs, [email protected] Follow this and additional works at: https://opencommons.uconn.edu/dissertations Recommended Citation Stroud, Robert A. J., "An Examination of the Factors and Characteristics that Contribute to the Success of Putnam Fellows" (2015). Doctoral Dissertations. 985. https://opencommons.uconn.edu/dissertations/985 An Examination of the Factors and Characteristics that Contribute to the Success of Putnam Fellows Robert Alexander James Stroud, PhD University of Connecticut, 2015 The William Lowell Putnam Mathematical Competition is an intercollegiate mathematics competition for undergraduate college and university students in the United States and Canada and is regarded as the most prestigious and challenging mathematics competition in North America (Alexanderson, 2004; Grossman, 2002; Reznick, 1994; Schoenfeld, 1985). Students who earn the five highest scores on the examination are named Putnam Fellows and to date, despite the thousands of students who have taken the Putnam Examination, only 280 individuals have won the Putnam Competition. Clearly, based on their performance being named a Putnam Fellow is a remarkable achievement. In addition, Putnam Fellows go on to graduate school and have extraordinary careers in mathematics or mathematics-related fields. Therefore, understanding the factors and characteristics that contribute to their success is important for students interested in STEM-related fields. The participants were 25 males who attended eight different colleges and universities in North America at the time they were named Putnam Fellows and won the Putnam Competition four, three, or two times. -

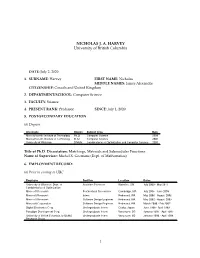

NICHOLAS J. A. HARVEY University of British Columbia

NICHOLAS J. A. HARVEY University of British Columbia DATE: July 2, 2020 1. SURNAME: Harvey FIRST NAME: Nicholas MIDDLE NAMES: James Alexander CITIZENSHIP: Canada and United Kingdom 2. DEPARTMENT/SCHOOL: Computer Science 3. FACULTY: Science 4. PRESENT RANK: Professor SINCE: July 1, 2020 5. POST-SECONDARY EDUCATION (a) Degrees University Degree Subject Area Date Massachusetts Institute of Technology Ph.D. Computer Science 2008 Massachusetts Institute of Technology M.Sc. Computer Science 2005 University of Waterloo B.Math. Combinatorics & Optimization and Computer Science 2000 Title of Ph.D. Dissertation: Matchings, Matroids and Submodular Functions Name of Supervisor: Michel X. Goemans (Dept. of Mathematics) 6. EMPLOYMENT RECORD: (a) Prior to coming to UBC Employer Position Location Dates University of Waterloo, Dept. of Assistant Professor Waterloo, ON July 2009 - May 2011 Combinatorics & Optimization Microsoft Research Postdoctoral Researcher Cambridge, MA July 2008 - June 2009 Microsoft Research Intern Redmond, WA May 2006 - August 2006 Microsoft Research Software Design Engineer Redmond, WA May 2002 - August 2003 Microsoft Corporation Software Design Engineer Redmond, WA March 2000 - Feb 2002 Digital Electronics Corp. Undergraduate Intern Osaka, Japan June 1998 - April 1999 Paradigm Development Corp. Undergraduate Intern Vancouver, BC January 1997 - April 1997 University of British Columbia, E-GEMS Undergraduate Intern Vancouver, BC January 1996 - April 1996 Research Group 1 Nicholas J. A. Harvey Page 2 (b) At UBC Rank or Title Date Professor July 1, 2020 - present Canada Research Chair in Algorithm Design (Tier 2, 5 year renewal) April 1, 2017 - March 31, 2022 Associate Professor July 1, 2015 - June 30, 2020 Canada Research Chair in Algorithm Design (Tier 2, 5 year appointment) April 1, 2012 - March 31, 2017 Assistant Professor June 1, 2011 - June 30, 2015 7. -

Curriculum Vitae of Anna Gál

Curriculum vitae of Anna Gál Address: Department of Computer Science email: [email protected] The University of Texas at Austin Phone: (512) 471-9539 Austin, TX 78712 WWW: http://www.cs.utexas.edu/users/panni Research Interests: Computational complexity, lower bounds for complexity of Boolean functions, communication complexity, fault tolerance, randomness and computation, algorithms, combinatorics. Degrees: • Ph.D. in Computer Science at the University of Chicago, August 1995 Ph.D. thesis title: Combinatorial methods in Boolean function complexity Ph.D. thesis advisor: Professor János Simon • Master's degree in Computer Science at the University of Chicago, June 1990 Awards: • Best Paper Award at Track A of ICALP 2003 • Alfred P. Sloan Research Fellowship, 2001-2005 • NSF CAREER Award July 1999 - June 2004 • Faculty Fellowship, Department of Computer Science, University of Texas at Austin, 2000 - present. • NSF/DIMACS Postdoctoral Fellowship: Institute for Advanced Study, Princeton 1995-96; DIMACS at Princeton University, 1996-97. • Machtey Award for "the most outstanding paper written by a student or students" at the 32nd IEEE Symposium on Foundations of Computer Science, FOCS 1991 • Award for excellent studies (only grades "A" during high school and undergraduate studies) from the Department of Education of Hungary, 1984 Employment: • Professor at the Department of Computer Science of the University of Texas at Austin, starting September 1, 2012. • Associate professor at the Department of Computer Science of the University of Texas at Austin, September 1, 2004 - August 31, 2012. • Assistant professor at the Department of Computer Science of the University of Texas at Austin, September 1, 1997 - August 31, 2004. -

Curriculum Vitae

Curriculum Vitae Name: Prabhakar Raghavan Title: Chief Strategy Officer and Head, Yahoo! Labs Office address: Yahoo! 701 First Avenue, Sunnyvale, CA 94089, USA. Office phone: (408) 349 2326 Internet: [email protected] http://theory.stanford.edu/~pragh Home address: 13636 Deer Trail Court Saratoga, CA 95070, USA. Education Years University Department Degree 1976-81 IIT, Madras Electrical Engineering B.Tech 1981-82 U.C. Santa Barbara Electrical & Computer Engineering M.S. 1982-86 U.C. Berkeley Computer Science Ph.D. Employment 1981-1986 Graduate Assistantships and Fellowships, UC Berkeley and Santa Barbara. 1986-1994 Research Staff Member, IBM T.J. Watson Research Center. 1994-1995 Manager, Theory of Computing, IBM T.J. Watson Research Center. 1995-2000 Sr. Manager, Computer Science Principles, IBM Almaden Research Center. 2000-2004 Vice-President and Chief Technology Officer, Verity, Inc. 2004-2005 Senior Vice-President and Chief Technology Officer, Verity, Inc. 2005- VP/SVP and Head, Yahoo! Labs. Additional Appointments 1989 Visiting Instructor, Department of Computer Science, Yale University. 1991 and 1994 Visiting Scientist, IBM Tokyo Research Laboratory. 1995-1997 Consulting Associate Professor, Dept. of Computer Science, Stanford University. 1997 - Consulting Professor, Dept. of Computer Science, Stanford University. Awards and honors 1984 Outstanding Teaching Assistant, Academic Senate, U.C. Berkeley. 1985-1986 IBM Doctoral Fellowship 1986 Machtey Award for the Best Student Paper, 27th IEEE Symposium on Foundations of Computer Science. 1989 IBM Research Division Award for PLA partitioning. 2000 Fellow of the IEEE. 2000 Best Paper, ACM Symposium on Principles of Database Systems. 2000 Best Paper, Ninth International World-Wide Web Conference (WWW9). -

August 6, 2020 David Zuckerman

August 6, 2020 David Zuckerman Computer Science Department, University of Texas at Austin, 2317 Speedway, Stop D9500, Austin, TX 78712 [email protected] http://www.cs.utexas.edu/~diz (512) 471-9729 Research The role of randomness in computation, pseudorandomness, complexity theory, Interests coding theory, distributed computing, cryptography, approximability, random walks. Professional University of Texas at Austin, Department of Computer Science Experience Endowed Professorship, September 2010 - present; Professor, September 2003 - present; Associate Professor (tenured), September 1998 - August 2003; Assistant Professor, January 1994 - August 1998. Simons Institute for the Theory of Computing, U.C. Berkeley Visiting Scholar and Co-organizer of the Pseudorandomness program, Spring 2017. Institute for Advanced Study, School of Mathematics Member, September 2011 - August 2012. Harvard University, Radcliffe Institute for Advanced Study and DEAS Radcliffe Fellow, Guggenheim Fellow, and Visiting Scholar, July 2004 - June 2005. University of California at Berkeley, Computer Science Division Visiting Scholar and Visiting McKay Lecturer, January 1999 - December 2000. Hebrew University of Jerusalem, Institute for Computer Science Lady Davis Postdoctoral Fellow, Oct. - Dec. 1993. Sponsor: Avi Wigderson. Massachusetts Institute of Technology, Laboratory for Computer Science NSF Mathematical Sciences Postdoctoral Fellow, 1991-93. Sponsor: Silvio Micali. Education Ph.D., University of California at Berkeley, Computer Science, 1991. Advisor: Umesh Vazirani. AT&T Bell Labs Fellowship, NSF Graduate Fellowship. A.B., Harvard University, Mathematics, 1987. Summa cum laude, Phi Beta Kappa. Major Honors Simons Investigator Award, 2016-21 and Awards Best Paper Award, STOC 2016 ACM Fellow, 2013 John S. Guggenheim Memorial Foundation Fellowship, 2004-05 David and Lucile Packard Fellowship for Science and Engineering, 1996-2006 Alfred P. -

Robotics and Automation, IEEE Transactions On

IEEE TRANSACTIONS ON ROBOTICS AND AUTOMATION, VOL. 15, NO. 5, OCTOBER 1999 849 Part Pose Statistics: Estimators and Experiments Ken Goldberg, Senior Member, IEEE, Brian V. Mirtich, Member, IEEE, Yan Zhuang, Member, IEEE, John Craig, Member, IEEE, Brian R. Carlisle, Senior Member, IEEE, and John Canny Abstract—Many of the most fundamental examples in probability involve the pose statistics of coins and dice as they are dropped on a flat surface. For these parts, the probability assigned to each stable face is justified based on part symmetry, although most gamblers are familiar with the possibility of loaded dice. In industrial part feeding, parts also arrive in random orientations. We consider the following problem: given part geometry and parameters such as center of mass, estimate the probability of encountering each stable pose of the part. We describe three estimators for solving this problem for polyhedral parts with known center of mass. The first estimator uses a quasistatic motion model that is computed in time O(n log n) for a part with n vertices. We treat part orientations as equivalent if one can be transformed to another with a rotation about the gravity axis in the world frame. We refer to the set of equivalent orientations as a pose of the part. To represent each pose, we attach a body frame to the part with origin at its center of mass. The unit gravity vector in this frame corresponds to a point on the unit sphere in the body frame. Such a point uniquely defines a pose of the part. Thus we represent the space of part poses with the unit sphere. Let be the initial probability density function on this space of poses and -
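
The pose representation described in this excerpt (a point on the unit sphere given by the gravity direction in the body frame) can be illustrated with a minimal sketch. It assumes, purely for illustration, that gravity points along the world -z axis and that an orientation is a 3x3 rotation matrix mapping body coordinates to world coordinates; the helper names `rot_z`, `rot_x`, `matmul`, and `pose_point` are hypothetical and do not come from the paper.

```python
import math

def rot_z(theta):
    """Rotation about the world z-axis (assumed here to be the gravity axis)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def rot_x(theta):
    """Rotation about the x-axis, used to tilt the part."""
    c, s = math.cos(theta), math.sin(theta)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]

def matmul(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def pose_point(r_body_to_world):
    """Unit gravity vector expressed in the body frame: R^T g."""
    g_world = (0.0, 0.0, -1.0)  # assumption: gravity along world -z
    return tuple(sum(r_body_to_world[k][i] * g_world[k] for k in range(3))
                 for i in range(3))

# Two orientations that differ only by a spin about the gravity axis
# map to the same point on the unit sphere, i.e. the same pose.
tilted = rot_x(0.7)
tilted_and_spun = matmul(rot_z(1.3), tilted)
same_pose = all(abs(a - b) < 1e-12
                for a, b in zip(pose_point(tilted), pose_point(tilted_and_spun)))
```

Under these assumptions, `same_pose` is true because a rotation about the gravity axis leaves the world gravity vector unchanged, so it drops out of `R^T g`; a different tilt, such as `rot_x(0.2)`, gives a different point on the sphere.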

An Examination of the Factors and Characteristics That Contribute To

An Examination of the Factors and Characteristics that Contribute to the Success of Putnam Fellows Robert Alexander James Stroud, PhD University of Connecticut, 2015 The William Lowell Putnam Mathematical Competition is an intercollegiate mathematics competition for undergraduate college and university students in the United States and Canada and is regarded as the most prestigious and challenging mathematics competition in North America (Alexanderson, 2004; Grossman, 2002; Reznick, 1994; Schoenfeld, 1985). Students who earn the five highest scores on the examination are named Putnam Fellows and to date, despite the thousands of students who have taken the Putnam Examination, only 280 individuals have won the Putnam Competition. Clearly, based on their performance being named a Putnam Fellow is a remarkable achievement. In addition, Putnam Fellows go on to graduate school and have extraordinary careers in mathematics or mathematics-related fields. Therefore, understanding the factors and characteristics that contribute to their success is important for students interested in STEM-related fields. The participants were 25 males who attended eight different colleges and universities in North America at the time they were named Putnam Fellows and won the Putnam Competition four, three, or two times. An 18-item questionnaire, adapted from the Walberg Educational Productivity Model, was used as a framework to investigate the personal and the formal educational experiences as well as the role the affective domain played in their development as Putnam Fellows. Further, research (DeFranco, 1996; Schoenfeld, 1992) on the characteristics of expert problem solvers was used to understand those elements of the cognitive domain that contributed to their success. Data was collected through audio-recorded interviews conducted over the telephone or through Skype, and through written e-mail responses.