Linear Regression Continued Outline for Today

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications

-

Bronx Bombers

BRONX BOMBERS BY ERIC SIMONSON CONCEIVED BY FRAN KIRMSER DRAMATISTS PLAY SERVICE INC. BRONX BOMBERS Copyright © 2014, Eric Simonson All Rights Reserved CAUTION: Professionals and amateurs are hereby warned that performance of BRONX BOMBERS is subject to payment of a royalty. It is fully protected under the copyright laws of the United States of America, and of all countries covered by the International Copyright Union (including the Dominion of Canada and the rest of the British Commonwealth), and of all countries covered by the Pan-American Copyright Convention, the Universal Copyright Convention, the Berne Convention, and of all countries with which the United States has reciprocal copyright relations. All rights, including without limitation professional/amateur stage rights, motion picture, recitation, lecturing, public reading, radio broadcasting, television, video or sound recording, all other forms of mechanical, electronic and digital reproduction, transmission and distribution, such as CD, DVD, the Internet, private and file-sharing networks, information storage and retrieval systems, photocopying, and the rights of translation into foreign languages are strictly reserved. Particular emphasis is placed upon the matter of readings, permission for which must be secured from the Author’s agent in writing. The English language stock and amateur stage performance rights in the United States, its territories, possessions and Canada for BRONX BOMBERS are controlled exclusively by DRAMATISTS PLAY SERVICE, INC., 440 Park Avenue South, New York, NY 10016. No professional or nonprofessional performance of the Play may be given without obtaining in advance the written permission of DRAMATISTS PLAY SERVICE, INC., and paying the requisite fee. Inquiries concerning all other rights should be addressed to Creative Artists Agency, 405 Lexington Avenue, 19th Floor, New York, NY 10174. -

DEREK JETER's BIOGRAPHY by the Time He Stepped Away from Baseball in 2014 After a Historic 20-Year Career, Derek Jeter Had Es

DEREK JETER’S BIOGRAPHY By the time he stepped away from baseball in 2014 after a historic 20-year career, Derek Jeter had established himself as one of the most respected men to ever have played the game. With a reputation as an unmatched leader both on and off the field, Jeter has quickly transitioned into a second career leading a variety of successful business ventures and entrepreneurial investments. Most recently, he became part-owner of MLB’s Miami Marlins, for whom he also now serves as CEO, overseeing the day-to-day for both the business and baseball operations for the team. He is the first African-American to serve in this role for any major league team in the history of the sport. Jeter is also the founder of The Players’ Tribune, an innovative multimedia company where world- class athletes share their honest and unique perspectives, bringing fans closer to the games they love. In November 2013, Jeter and Simon & Schuster announced the Jeter Publishing imprint, which includes both adult and children’s books verticals. The first book released, The Contract, debuted at #2 on The New York Times Children’s Middle Grade Best Seller list. In October 2014, the imprint released a full-color photo book called Jeter Unfiltered, also a Best Seller, providing fans an in-depth look at his life through never-before-published images taken over the course of his final season. He also serves as Partner and Brand Development Officer of Luvo, a transformational lifestyle food brand where he is involved in product development, brand awareness and strategic partnerships. -

Sports Figures Price Guide

SPORTS FIGURES PRICE GUIDE All values listed are for Mint (white jersey) .......... 16.00- David Ortiz (white jersey). 22.00- Ching-Ming Wang ........ 15 Tracy McGrady (white jrsy) 12.00- Lamar Odom (purple jersey) 16.00 Patrick Ewing .......... $12 (blue jersey) .......... 110.00 figures still in the packaging. The Jim Thome (Phillies jersey) 12.00 (gray jersey). 40.00+ Kevin Youkilis (white jersey) 22 (blue jersey) ........... 22.00- (yellow jersey) ......... 25.00 (Blue Uniform) ......... $25 (blue jersey, snow). 350.00 package must have four perfect (Indians jersey) ........ 25.00 Scott Rolen (white jersey) .. 12.00 (grey jersey) ............ 20 Dirk Nowitzki (blue jersey) 15.00- Shaquille O’Neal (red jersey) 12.00 Spud Webb ............ $12 Stephen Davis (white jersey) 20.00 corners and the blister bubble 2003 SERIES 7 (gray jersey). 18.00 Barry Zito (white jersey) ..... .10 (white jersey) .......... 25.00- (black jersey) .......... 22.00 Larry Bird ............. $15 (70th Anniversary jersey) 75.00 cannot be creased, dented, or Jim Edmonds (Angels jersey) 20.00 2005 SERIES 13 (grey jersey ............... .12 Shaquille O’Neal (yellow jrsy) 15.00 2005 SERIES 9 Julius Erving ........... $15 Jeff Garcia damaged in any way. Troy Glaus (white sleeves) . 10.00 Moises Alou (Giants jersey) 15.00 MCFARLANE MLB 21 (purple jersey) ......... 25.00 Kobe Bryant (yellow jersey) 14.00 Elgin Baylor ............ $15 (white jsy/no stripe shoes) 15.00 (red sleeves) .......... 80.00+ Randy Johnson (Yankees jsy) 17.00 Jorge Posada NY Yankees $15.00 John Stockton (white jersey) 12.00 (purple jersey) ......... 30.00 George Gervin .......... $15 (whte jsy/ed stripe shoes) 22.00 Randy Johnson (white jersey) 10.00 Pedro Martinez (Mets jersey) 12.00 Daisuke Matsuzaka .... -

Yankees Trivia

Yankees Trivia 1. Before they were known as the Yankees, they were first known as the: a) Doodle Dandies b) Hilltoppers c) Highlanders d) Pinstripers 2. Before the Yankees moved into Yankee Stadium in 1923, they played in: a) The Polo Grounds b) The Meadowlands c) Randall’s Island d) Columbia University 3. George Herman Ruth was nicknamed Babe because: a) He loved the ladies and they loved him. b) At 19, his minor-league teammates said he was the owner’s latest babe. c) He had a baby face and baby’s bottom. d) He was named after future Yankee Babe Dahlgren. 4. Who named Yankee Stadium “The House that Ruth Built?” a) Babe Ruth b) Newspaperman Damon Runyon c) Fred Lieb of the New York Evening Telegram d) Future New York governor Franklin Roosevelt 5. Which did NOT happen in 1939? a) The Yankees won their fourth consecutive championship. b) Lou Gehrig announced his retirement. c) Joe DiMaggio was named MVP. d) Mariano Rivera was born. 6. Joe DiMaggio is forever known for: a) Being a world-class fisherman growing up in San Francisco b) Making coffee for all his teammates c) Being immortalized in Paul Simon’s song “Mrs. Robinson.” d) Knocking in 56 consecutive game-winning hits in 1941. 7. Casey Stengel managed the Yankees to: a) A record five consecutive championships. b) All talk in the same rambling, double-speak like him. c) Adhere to strict 9 p.m. curfews. d) Address him as Mr. Ol’ Perfesser, sir. 8. Legendary Yankee announcer Mel Allen was famous for: a) His trademark home run call, “It is high …. -

2021 Topps X Derek Jeter

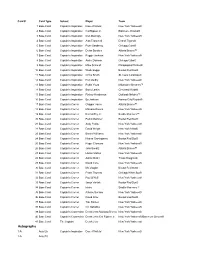

Card # Card Type Subset Player Team 1 Base Card Captain's Inspiration Dave Winfield New York Yankees® 2 Base Card Captain's Inspiration Cal Ripken Jr. Baltimore Orioles® 3 Base Card Captain's Inspiration Don Mattingly New York Yankees® 4 Base Card Captain's Inspiration Alan Trammell Detroit Tigers® 5 Base Card Captain's Inspiration Ryne Sandberg Chicago Cubs® 6 Base Card Captain's Inspiration Deion Sanders Atlanta Braves™ 7 Base Card Captain's Inspiration Reggie Jackson New York Yankees® 8 Base Card Captain's Inspiration Andre Dawson Chicago Cubs® 9 Base Card Captain's Inspiration Mike Schmidt Philadelphia Phillies® 10 Base Card Captain's Inspiration Wade Boggs Boston Red Sox® 11 Base Card Captain's Inspiration Ozzie Smith St. Louis Cardinals® 12 Base Card Captain's Inspiration Ron Guidry New York Yankees® 13 Base Card Captain's Inspiration Robin Yount Milwaukee Brewers™ 14 Base Card Captain's Inspiration Barry Larkin Cincinnati Reds® 15 Base Card Captain's Inspiration Rickey Henderson Oakland Athletics™ 16 Base Card Captain's Inspiration Bo Jackson Kansas City Royals® 17 Base Card Captain's Corner Chipper Jones Atlanta Braves™ 18 Base Card Captain's Corner Mariano Rivera New York Yankees® 19 Base Card Captain's Corner Ken Griffey Jr. Seattle Mariners™ 20 Base Card Captain's Corner Pedro Martinez Boston Red Sox® 21 Base Card Captain's Corner Andy Pettitte New York Yankees® 22 Base Card Captain's Corner David Wright New York Mets® 23 Base Card Captain's Corner Bernie Williams New York Yankees® 24 Base Card Captain's Corner Nomar Garciaparra -

Astros Dash Hopes of Bahamian Dream World Series Pitting Yanks

20 THE FREEPORT NEWS Monday, October 23, 2017 SPORTS NEW YORK YANKEES GREAT BABE RUTH YANKEES HAVE WON WORLD SERIES 27 TIMES Astros dash hopes of Bahamian dream World Series pitting Yanks VS Dodgers n Saturday night, comeback, taking three con- when the Houston secutive games to go up 3-2 Astros defeated the against the Astros. Alas, there NewO York Yankees 4-0, at the was to be no joy for Yankees Minute Maid Park home field, fans like this columnist. At the hopes of many Bahami- home with a beloved fan base, an baseball followers were the Astros prevailed in the fi- dashed. There would not be nal two games of the series and another meeting of the Yankees now get to take on the Dodg- and the Dodgers in the World ers. Series. The Los Angeles Dodg- Although the Dodgers always ers earned their way into the had the great majority of fans October classic by taking out in The Bahamas, the Yankees the Chicago Cubs in the Na- boasted devoted supporters as tional League Champions Se- well. Some of us just were in- ries four games to one. tent in being different, so while The Yankees/Dodgers en- we fully respected the barrier counters in The Bahamas are breaking milestone of Rob- legendary. Fans in this coun- inson, his playing ability, and try of both teams are bonded that of Roy Campanella, Don by the unique rivalry. In 1947, Newcombe, Junior Gilliam, Jackie Robinson became the Charley Neal, Sandy Koufax, first Black player to perform in Pee Wee Reese, Gil Hodges the Major Leagues. -

Baseball Honors Retiring Yankee Captain on Fox Sports 1

BASEBALL HONORS RETIRING YANKEE CAPTAIN ON FOX SPORTS 1 DEREK JETER: A TRIBUTE Premieres April 29 Current and Former Players Share Stories, Memories of Jeter SNEAK PEEK – DEREK JETER: A TRIBUTE http://m.mlb.com/video/v32285481/derek-jeter-a-tribute-on-fs1 New York – Before he announced he would hang up his cleats at the end of the season, New York Yankees shortstop Derek Jeter had already made a lasting impression as one of the most graceful, successful and iconic figures ever to play the game. Led by current and former players from around Major League Baseball, FOX Sports and MLB Productions honor one of baseball’s best ambassadors in DEREK JETER: A TRIBUTE, a one-hour special premiering Tuesday, April 29 (8:30 PM ET) on FOX Sports 1. A career New York Yankee, Jeter’s numerous accolades and achievements include the 1996 Rookie of the Year Award, 13 All-Star Game selections, five World Series rings, membership in MLB’s prestigious 3,000 hits club and the longest-tenured captaincy of any Yankees player. In his two decades in baseball, he has been admired by fans, media and his peers for his professionalism, poise and leadership. DEREK JETER: A TRIBUTE presents intimate and previously untold stories directly from those whom Jeter played with and against combined with 20 years of archival footage from Jeter’s career. Narrated by Jeter’s longtime manager Joe Torre, DEREK JETER: A TRIBUTE includes new interviews from more than 30 current players sharing personal anecdotes, memories and more recorded in the days following Jeter’s retirement announcement. -

1. 16 X 20 Mickey Mantle Autographed Photo 2. Babe Ruth Underwear Label 3

1. 16 X 20 Mickey Mantle Autographed photo 2. Babe Ruth underwear label 3. Perez Steele Willie, Mickey & The Duke 4. Babe Ruth numbered painting Geo. Wright 5. Mickey Mantle print 6. Phil Rizzuto autographed print by Upper deck 7. Joe DiMaggio game used bat plaque by Highland Mint 8. Arthur W. Perdue Stadium drawing by Keith Whitelock 9. 1947 Chesterfield Advertising poster 10. 16 X 20 Mickey Mantle autographed photo 11. 1960 World series Mickey at bat 5 frame photo 12. First Game Print signed by artist 13. 1964 Babe Ruth poster (rare) 14. Joe Jackson game used bat plaque by Highland Mint 15. Framed advertising Whitey, Roger & Mickey 16. 1998 Yankee Team (Cooperstown Collection) 17. Don Larsen Perfect Game statue Danbury Mint 18. Babe Ruth Called Shot statue Danbury Mint 19. Golf cart collection 20. Yankee hauler 21. Babe Ruth car 22. Yankee motorcycle 23. Lou Gehrig car & bobbleheads 24. Yogi Berra statue & bobbleheads 25. Babe Ruth bobble heads, bust & ball 26. Joe DiMaggio bobble heads 27. Joe DiMaggio autographed statue (Broken bat) 28. Whitey Ford bobble heads statue by Gartland 29. Whitey Ford autographed statue 30. Don Mattingly autographed photo, bobble head, ceramic plate, handmade statue 31. 1960 card collector shoe box w/1961 Topps stamps 32. 1970 & 1977 Yankee Team ball 33. 1993 Bat Girl autographed card – Mattingly, Boggs & others 34. Yogi Berra autographed statue & bust Hartland Statue 35. Yogi Berra autographed photo & bobble head 36. Shorebirds First Game (Aerial photo) 37. 1961 Yankees World Champion plaque 38. Baseball collage plaque 39. Mike Mussina autographed hat 40. -

Take Me out to the Ball Game Jack Norworth Prek Froggy Plays T-Ball

Grade Level Title Author (If Known) Take Me Out to the Ball Game Jack Norworth PreK Froggy Plays T-Ball Jonathan London PreK Elephant & Piggie: Watch Me Throw the Ball! Mo Willems PreK My First Book oF Baseball Sports Illustrated PreK Goodnight Baseball Michael Dahl PreK-2 Brothers at Bat Audrey Vernick PreK-3 Bats at the Ballgame Brian Lies PreK-3 Randy Riley's Really Big Hit Chris Van Dusen PreK-3 The Babe & I David Adler PreK-3 How Georgie Radbourn Saved Baseball David Shannon PreK-3 You Never Heard oF Willie Mays?! Jonah Winter PreK-3 Curious George at the Baseball Game Margret & H.A. Rey PreK-3 Teammates Peter Golenbock PreK-3 Players in Pigtails Shana Corey PreK-3 For the Love oF Baseball: An A-to-Z Primer For Baseball Fans oF All Ages Frederick C. Klein K+ Rocky the Mudhen: We're Talkin' Baseball Sam Shane K+ Baseball Saved Us Ken Mochizuki K-2 Girl Wonder: A Baseball Story in Nine Innings Deborah Hopkinson K-3 Flat Stanley at Bat JeFF Brown K-3 The Berenstain Bears Go Out For the Team Stan & Jan Berenstain K-3 The Berenstain Bears We Love Baseball Stan & Jan Berenstain K-3 Jinx Glove Matt Christopher 1-3 Johnny No Hit Matt Christopher 1-3 The Lucky Baseball Bat Matt Christopher 1-3 Ballpark Mysteries 16 Book Series David A. Kelly 1-4 The Missing Playbook Franklin W. Dixon & Matt David 1-4 Barbed Wire Baseball Marissa Moss 1-4 Magic Tree House: A Big Day For Baseball Mary Pope Osborne 1-4 The Magic Tree House: A Big Day For Baseball Mary Pope Osborne 1-4 Baseball Legends Alphabet Book Beck Feiner 1-5 Satchel Paige Lesa Cline-Ransome -

From the Dugout

WWW.BNLSTARSBASEBALL.COM March 25th, 2016 Volume 3, Issue 3 The Official Newsletter of BNL Baseball From The Dugout 2016 BNL YOUTH BASEBALL CLINIC – MARCH 19TH THE COLD AND RAIN DIDN’T DAMPEN THE SPIRITS OF THESE BASEBALL PLAYERS FROM BEING A PART OF THE 3RD ANNUAL STARS BASEBALL CLINIC. TWO HOURS OF BASEBALL SKILLS AND FUNDAMENTAL INSTRUCTION BY THE BNL COACHES AND PLAYERS WERE PROVIDED TO 40+ YOUTH CAMPERS. LUNCH CAPPED OFF THE CAMP SERVED BY THE BNL RBI CLUB. GO TO “BNLSTARSBASEBALL” FACEBOOK PAGE FOR MORE PHOTOS GAME 1 (Scheduled after the BNL Youth Camp on March 19th) was cancelled due to inclement weather RED BLUE GAME 2: GAME 3: SATURDAY, March 26th – TUESDAY, March 29th – vs. Wooden Bat Game Traditional 7-Inning Game INTRASQUAD PLAY COME WATCH THEM PLAY! Varsity Home O pener: Friday, April 8th vs. Rockford, MI 2nd Annual Alumni Game (BATTLE FOR THE BAT) ROAD TRIP TO NASHVILLE, TN for 3 games th Saturday, March 26 , 2016 Friday/Saturday, APRIL 15TH/16th Old Guys vs. Older Guys Looking COACHES vs. CANCER FUNDRAISER After RED vs. BLUE Intrasquad game AHEAD TUESDAY, April 5TH in APRIL (around 1:00ishPM) @ pizza hut in BEDFORD 10% of all proceeds go to Cancer Fund Second Annual! Mason Mack Kyler Sherrill Ronnie Watson Get your chance to MLB Team – LA Dodgers MLB Team – Chicago Cubs MLB Team – New York Yankees College Team – Indiana Hoosiers College Team – Indiana Hoosiers College Team – Indiana Hoosiers win $500 MLB Player – Pete Rose MLB Player – Derek Jeter MLB Player – Derek Jeter Over 50 Baseball Movie – Major League Baseball Movie – For the Love of the Game Baseball Movie - Sandlot (Ticket is parents and players $25/chance) helped clean-up Proceeds go to RBI booster club and to support BNL Baseball shape-up the BNL baseball Contact facility on Misty Thursday, McDonald th March 17 . -

Download Sample

HOT DOG MAN: We all are! But you should Hot Dog Man – our story teller always root for the home team! Peanut Vendor— also a storyteller Fan #1, #2, and #3 – baseball fans today ANNOUNCER (off stage): With two outs, Jeter Sports Announcer comes to the plate. Here’s the pitch. Sound Effects Branch Rickey – the President of the Dodgers SOUND EFFECTS (as bat hitting ball): Wham! “Pee Wee” Reese – the Dodger shortstop Jackie Robinson ANNOUNCER: Swinging on the first pitch, Dodger #1 and #2 – other players Jeter rips it down the left field line. Spectator #1 and #2 – fans at a game in 1947 SOUND EFFECTS (as crowd): Roar!!!!! ANNOUNCER: He’s rounding first and heading into second. Here’s the throw now. He slides. SOUND EFFECTS (as umpire): Safe! HOT DOG MAN: Hot dogs! Get your hot dogs here! ANNOUNCER: He’s safe at second! PEANUT VENDOR: FAN #1: I’ll take two, Hot Dog Man! What is a Whew! What a game! No vendor? What are HOT DOG MAN: Comin’ right up. wonder it’s the National the vendors doing in this scene? Pastime. Cracker Jacks! FAN #2: Hey Hot Dog Man, who’s gonna win Peanuts! Get your peanuts today? here! But it wasn’t always this way. Page 2 of 8 ReadAloudPlays.com © 2009 Mack Lewis. All Rights Reserved. HOT DOG MAN: That’s right. There was a time MR. RICKEY: Let me get right to the point, when many of the players you’re watching today Jackie. I believe it’s unfair to judge a man by the wouldn’t have been allowed to play America’s color of his skin, and I believe it’s bad business game. -

2021 Leaf Lumber Kings Baseball Checklist

2021 Leaf Lumber Baseball Final Checklist Set Subset Name Checklist Bronze Purple Platinum Pewter Navy Blue Silver Gold Emerald Red Notes Plates 2021 Leaf Lumber 3 Home Run Games Babe Ruth / Mel Ott / Johnny Mize 3HRG-01 7 6 5 4 3 2 1 1 1 2021 Leaf Lumber 3 Home Run Games Bobby Doerr / Mickey Mantle / Ted Williams 3HRG-02 12 7 4 3 2 1 1 1 1 2021 Leaf Lumber 3 Home Run Games Carlos Delgado / Jason Varitek / Ellis Burks 3HRG-23 20 9 6 4 3 2 1 4 4 2021 Leaf Lumber Carlos Delgado / Manny Ramirez / Juan 3 Home Run Games 3HRG-21 20 9 6 4 3 2 1 4 4 Baseball González 2021 Leaf Lumber Darryl Strawberry / Frank Thomas / Mo 3 Home Run Games 3HRG-20 20 9 6 4 3 2 1 4 4 Baseball Vaughn 2021 Leaf Lumber 3 Home Run Games Davey Lopes / Mike Schmidt / Gary Carter 3HRG-09 20 9 6 4 3 2 1 4 4 2021 Leaf Lumber 3 Home Run Games Duke Snider / Eddie Mathews / Stan Musial 3HRG-03 15 8 6 4 3 2 1 1 1 2021 Leaf Lumber 3 Home Run Games Eddie Murray / Joe Carter / Reggie Jackson 3HRG-13 20 9 6 4 3 2 1 4 4 2021 Leaf Lumber 3 Home Run Games Eddie Murray / Paul Molitor / Harold Baines 3HRG-11 20 9 6 4 3 2 1 4 4 2021 Leaf Lumber 3 Home Run Games Fred Lynn / Don Baylor / Carl Yastrzemski 3HRG-08 20 9 6 4 3 2 1 4 4 2021 Leaf Lumber Gary Carter / Andre Dawson / Darryl 3 Home Run Games 3HRG-12 20 9 6 4 3 2 1 4 4 Baseball Strawberry 2021 Leaf Lumber 3 Home Run Games George Brett / Jim Rice / Harold Baines 3HRG-14 20 9 6 4 3 2 1 4 4 2021 Leaf Lumber 3 Home Run Games Jeff Bagwell / Barry Bonds / Andres 3HRG-18 20 9 6 4 3 2 1 4 4 2021 Leaf Lumber 3 Home Run Games Jim Rice / Pete Rose / George Brett 3HRG-10 20 9 6 4 3 2 1 4 4 2021 Leaf Lumber 3 Home Run Games Joe Carter / Mark McGwire / Bill Madlock 3HRG-15 20 9 6 4 3 2 1 4 4 2021 Leaf Lumber 3 Home Run Games Juan González / Albert Belle / Joe Carter 3HRG-16 20 9 6 4 3 2 1 4 4 2021 Leaf Lumber Ken Griffey Jr.